Dell M1000e-based Small HPC Cluster Architecture

An HPC cluster is a set of identical blades or small 1U servers connected to one or two specialized network switches (often based on InfiniBand), with libraries and programs installed that allow processing to be shared among them. The result is a high-performance parallel computing cluster built from inexpensive commodity hardware.

An HPC cluster usually consists of one headnode and a dozen or more computational nodes connected via InfiniBand (IB), Ethernet, or some other network. Such clusters typically use an off-the-shelf commodity OS such as Linux. The software is more specialized, typically an implementation of the Message Passing Interface (MPI).

The headnode controls the whole cluster and serves files to the client nodes, in the simplest case via NFS. Three main components run on the headnode:

- NFS server

- Scheduler

- IB subnet manager (if IB is used)

The headnode can also serve as a login node for users. This presupposes that several network interfaces exist: for example, one for the private cluster network (typically eth1) and one for the outside world (typically eth0).

For small clusters (say, below 32 computational nodes) the headnode can also have attached storage that is exported to the compute nodes using NFS. For larger clusters, the headnode should be attached to a storage network based on a parallel filesystem, for example GPFS (probably the most popular parallel filesystem) or Lustre.
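As a rough illustration of the NFS export described above, the sketch below renders a single line in the /etc/exports format. The exported path, the private network range, and the option set are assumptions chosen for the example, not values taken from a real cluster.

```python
def exports_line(path, network, options=("rw", "sync", "no_subtree_check")):
    """Return one /etc/exports entry: '<path> <network>(<opt>,<opt>,...)'."""
    return f"{path} {network}({','.join(options)})"


# Hypothetical values: /home exported to a private 192.168.1.0/24 cluster network.
line = exports_line("/home", "192.168.1.0/24")
print(line)
```

On the headnode such a line would normally be added to /etc/exports and activated with exportfs; the compute nodes then mount the share.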

For small clusters the headnode can also run other cluster functions, such as:

- Job scheduling

- Cluster monitoring

- Cluster reporting

- User account management

Compute nodes are configured and controlled by the headnode, and do only what they are told to do. Tasks are executed via a scheduler such as Sun Grid Engine. In a network boot setup only a single image exists for all nodes. With 16 nodes, however, you can achieve the same result by using rsync to replicate one reference ("etalon") node. One can build a Beowulf-class machine using a standard Linux distribution without any additional software.
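A minimal sketch of the rsync approach, assuming it runs on the reference node and pushes selected paths to the other nodes over ssh. The node names (node02 to node16) and the synchronized path are hypothetical; the function only builds the command lines and does not execute them.

```python
def rsync_commands(nodes, paths, dry_run=True):
    """Build one rsync command per (node, path) pair, pushing from this host."""
    commands = []
    for node in nodes:
        for path in paths:
            cmd = ["rsync", "-a", "--delete"]
            if dry_run:
                cmd.append("--dry-run")  # report changes without applying them
            # A trailing slash on a directory means "copy its contents".
            cmd += [path, f"{node}:{path}"]
            commands.append(cmd)
    return commands


# Hypothetical node names; node01 is assumed to be the reference node.
nodes = [f"node{i:02d}" for i in range(2, 17)]
for cmd in rsync_commands(nodes, ["/etc/profile.d/"])[:2]:
    print(" ".join(cmd))
```

Each command list can be passed to subprocess.run(cmd, check=True) once the dry run looks right. Host-specific files (hostname, network configuration) should be kept out of the synchronized paths.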

Compute Nodes

The compute nodes really do one thing: compute. The form factor (chassis) of a compute node can vary with its requirements, as discussed in the next section.

The key problem with multiple compute nodes is how to keep all of them in sync with respect to configuration files and patches.

Blades are typically used for computational nodes, especially in situations when:

- High density is critical

- Power and cooling are absolutely critical (blades typically have better power and cooling than rack-mount nodes)

- The applications don’t require much local storage capacity

- PCI-e expansion cards are not required (typically blades have built-in IB or GbE)

- The cluster is large (blades can be cheaper than 1U nodes for larger systems)

There are several networking architecture issues with a small HPC cluster based on the M1000e:

- Usage of InfiniBand

- Dynamic reimaging of computational nodes over the network on boot (implemented in Bright Cluster Manager)

- Bonding of interfaces

- Tools necessary to maintain multiple identical computational nodes

- ssh configuration

- Usage of the head node as the center of the universe with computational nodes as satellites on a private segment, versus a single network segment for both the head node and the computational nodes

Major hardware components to consider are:

- Head node (with InfiniBand, it can also serve as the Fabric Management Server)

- Blades

- Ethernet cards and switch

- InfiniBand cards and IB switch

Blades can have two interfaces: 10 Gbit Ethernet and IB. Each needs to be connected to its own network:

- The 10 Gbit Ethernet network can carry NFS and other traffic, such as boot images for computational nodes

- The IB network can carry MPI traffic. A single InfiniBand subnet is used.

Please do not confuse InfiniBand subnets with IP subnets. In the context of this topology planning section, unless otherwise noted, the term subnet refers to an InfiniBand subnet.
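One way to keep the two networks apart at the IP level is to give each blade one name per network. The sketch below generates /etc/hosts style entries for 16 blades; the address ranges, the starting offset, and the "-ib" name suffix (for IP over InfiniBand addresses) are assumptions made for illustration only. Note that these are IP subnets, which are unrelated to the InfiniBand subnet discussed above.

```python
import ipaddress


def hosts_entries(network, count, prefix="node", suffix="", first_offset=11):
    """Return '<ip>\t<name>' lines for `count` nodes inside `network`."""
    net = ipaddress.ip_network(network)
    lines = []
    for i in range(count):
        addr = net.network_address + first_offset + i
        if addr not in net:
            raise ValueError(f"{addr} is outside {net}")
        lines.append(f"{addr}\t{prefix}{i + 1:02d}{suffix}")
    return lines


# Hypothetical ranges: private Ethernet and IPoIB networks for 16 blades.
ethernet = hosts_entries("192.168.1.0/24", 16)
ipoib = hosts_entries("192.168.2.0/24", 16, suffix="-ib")
print("\n".join(ethernet[:2] + ipoib[:2]))
```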

We also need several software applications, including:

- Scheduler (for example, SGE)

- xCAT, which offers complete management for HPC clusters (see the xCAT iDataPlex Cluster Quick Start). It enables you to:

  - Provision operating systems on physical or virtual machines

  - Provision using scripted install, stateless, statelite, iSCSI, or cloning

  - Remotely manage systems: lights-out management, remote console, and distributed shell support

  - Quickly configure and control management node services: DNS, HTTP, DHCP, TFTP, NFS

- Nagios

- Puppet

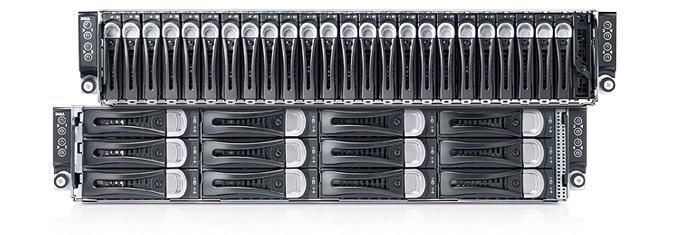

PowerEdge C6220 II Rack Server

The server was released on April 26, 2012, and was updated in 2013 for the Xeon E5-2600 v2 line of Intel CPUs.

The PowerEdge C6220 is marketed as a high-density server solution primarily targeting HPC (high-performance computing) and virtual server clusters, but in reality it does not provide significant advantages in comparison with the M1000e enclosure and M630 blades. On the contrary, it has several disadvantages.

The C6220 offers four completely independent, hot-swappable server nodes (pseudo-blades). Unlike blades, each server node can be configured independently of the other nodes in the same chassis to perform specific tasks. Nodes can have different CPUs, different amounts of memory, and different numbers of hard drives, which gives better flexibility with respect to hard drives than 1U servers. The chassis also accepts a pair of dual-height nodes, which have two PCI-e expansion slots.

The four nodes are accessed at the rear, where each one provides dual Gigabit Ethernet ports (not 10 Gbit), IPMI, two USB 2.0 ports, VGA, and a serial port.

The C6220 supports CPUs consuming up to 135W. This means that E5-2600 and E5-2600 v2 CPUs rated at up to 135W are supported.

Highlights:

- Intel® Xeon® processor E5-2600 v2 product family, each with up to twelve cores and four memory channels, offering up to 40% greater performance over previous generation processors

- Dual Turbo Boost Technology 2.0, Advanced Vector Extensions and Ivy Bridge micro-architecture

- Intel® Integrated I/O, which can reduce I/O latency by up to 30%

- Intel® Power Tuning Technology that uses onboard sensors to help give greater control over power and thermal levels across the system

Each node supports:

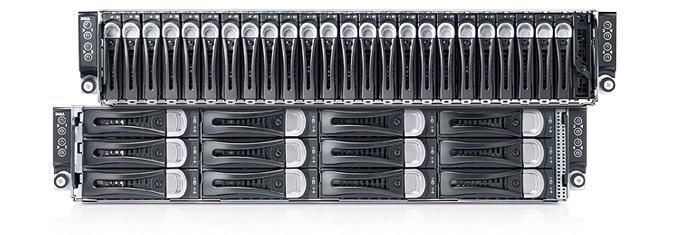

A small cluster based on a Dell blade enclosure

The Dell PowerEdge M1000e Enclosure is an attractive option for building small clusters. 16 blades can be installed into the 10U enclosure. With 16 cores per blade and 16 blades per enclosure you get a 256-core cluster.
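The enclosure arithmetic can be checked in a few lines. The figures (16 cores per blade, 16 blades, 10U) come from the paragraph above; the cores-per-rack-unit density is a derived number added here only for illustration.

```python
CORES_PER_BLADE = 16
BLADES_PER_ENCLOSURE = 16
ENCLOSURE_HEIGHT_U = 10

# The total counts cores, not nodes: the enclosure still holds 16 nodes.
total_cores = CORES_PER_BLADE * BLADES_PER_ENCLOSURE
cores_per_rack_unit = total_cores / ENCLOSURE_HEIGHT_U

print(total_cores, cores_per_rack_unit)
```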

The enclosure supports up to 6 network and storage I/O interconnect modules. A high-speed passive midplane connects the server modules in the front with the power, I/O, and management infrastructure in the rear of the enclosure. Thorough power management capabilities include delivering shared power to ensure that the full capacity of the power supplies is available to all server modules.

To understand the PowerEdge M1000e architecture, it is necessary to first define the term fabric.

- A fabric is defined as a method of encoding, transporting, and synchronizing data between devices. Examples of fabrics are Gigabit Ethernet (GE), Fibre Channel (FC), and InfiniBand (IB). Fabrics are carried inside the PowerEdge M1000e system, between server modules and I/O modules (IOMs), through the midplane. They are also carried to the outside world through the physical copper or optical interfaces on the I/O modules. Each blade connects to two potentially different fabrics.

- The first embedded high-speed fabric (Fabric A) comprises dual 10 Gigabit Ethernet LOMs and their associated IOMs in the chassis. The LOMs are based on the Broadcom NetXtreme II Ethernet controller, supporting TCP/IP Offload Engine (TOE) and iSCSI boot capability.

- Optional customer-configured fabrics are supported by adding up to two dual-port I/O mezzanine cards. Each half-height server module supports two identical I/O mezzanine card connectors, which enable connectivity to I/O fabrics B and C. These optional mezzanine cards offer a wide array of Ethernet (including iSCSI), Fibre Channel, and InfiniBand technologies, with others possible in the future.

History

A Beowulf cluster is a computer cluster of what are normally identical, commodity-grade computers networked into a small local area network with libraries and programs installed which allow processing to be shared among them. The result is a high-performance parallel computing cluster from inexpensive personal computer hardware.

The name Beowulf originally referred to a specific computer built in 1994 by Thomas Sterling and Donald Becker at NASA.[1] The name "Beowulf" comes from the main character of the Old English epic poem Beowulf. Sterling chose it because the eponymous hero is described as having "thirty men's heft of grasp in the gripe of his hand".

There is no particular piece of software that defines a cluster as a Beowulf. Beowulf clusters normally run a Unix-like operating system, such as BSD, Linux, or Solaris, normally built from free and open source software. Commonly used parallel processing libraries include Message Passing Interface (MPI) and Parallel Virtual Machine (PVM). Both of these permit the programmer to divide a task among a group of networked computers, and collect the results of processing. Examples of MPI software include Open MPI and MPICH.

The following description of the Beowulf cluster comes from the original "how-to", published by Jacek Radajewski and Douglas Eadline under the Linux Documentation Project in 1998:

Beowulf is a multi-computer architecture which can be used for parallel computations. It is a system which usually consists of one server node, and one or more client nodes connected via Ethernet or some other network. It is a system built using commodity hardware components, like any PC capable of running a Unix-like operating system, with standard Ethernet adapters, and switches. It does not contain any custom hardware components and is trivially reproducible. Beowulf also uses commodity software like the FreeBSD, Linux or Solaris operating system, Parallel Virtual Machine (PVM) and Message Passing Interface (MPI). The server node controls the whole cluster and serves files to the client nodes. It is also the cluster's console and gateway to the outside world. Large Beowulf machines might have more than one server node, and possibly other nodes dedicated to particular tasks, for example consoles or monitoring stations. In most cases client nodes in a Beowulf system are dumb, the dumber the better. Nodes are configured and controlled by the server node, and do only what they are told to do. In a disk-less client configuration, a client node doesn't even know its IP address or name until the server tells it.

One of the main differences between Beowulf and a Cluster of Workstations (COW) is that Beowulf behaves more like a single machine rather than many workstations. In most cases client nodes do not have keyboards or monitors, and are accessed only via remote login or possibly serial terminal. Beowulf nodes can be thought of as a CPU + memory package which can be plugged into the cluster, just like a CPU or memory module can be plugged into a motherboard.

Beowulf is not a special software package, new network topology, or the latest kernel hack. Beowulf is a technology of clustering computers to form a parallel, virtual supercomputer. Although there are many software packages such as kernel modifications, PVM and MPI libraries, and configuration tools which make the Beowulf architecture faster, easier to configure, and much more usable, one can build a Beowulf class machine using a standard Linux distribution without any additional software. If you have two networked computers which share at least the /home file system via NFS, and trust each other to execute remote shells (rsh), then it could be argued that you have a simple, two node Beowulf machine.

Resource intensive jobs (long running, high memory demand, etc) should be run on the compute nodes of the cluster. You cannot execute jobs directly on the compute nodes yourself; you must request the cluster's batch system do it on your behalf. To use the batch system, you will submit a special script which contains instructions to execute your job on the compute nodes. When submitting your job, you specify a partition (group of nodes: for testing vs. production) and a QOS (a classification that determines what kind of resources your job will need). Your job will wait in the queue until it is "next in line", and free processors on the compute nodes become available. Which job is "next in line" is determined by the scheduling policy of the cluster. Once a job is started, it continues until it either completes or reaches its time limit, in which case it is terminated by the system.

The batch system used on maya is called SLURM, which is short for Simple Linux Utility for Resource Management. Users transitioning from the cluster hpc should be aware that SLURM behaves a bit differently than PBS, and the scripting is a little different too. Unfortunately, this means you will need to rewrite your batch scripts. However, many of the confusing points of PBS, such as requesting the number of nodes and tasks per node, are simplified in SLURM.
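To make the batch-script idea concrete, here is a minimal sketch that renders a SLURM script from a few parameters. The #SBATCH options used (--job-name, --partition, --qos, --ntasks, --time) are standard sbatch options, but the partition name, QOS name, and program name below are hypothetical, since these are site-specific.

```python
def slurm_script(job_name, partition, qos, ntasks, time_limit, command):
    """Render a simple SLURM batch script as a string."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",  # group of nodes to run on
        f"#SBATCH --qos={qos}",              # resource classification
        f"#SBATCH --ntasks={ntasks}",        # number of tasks (e.g. MPI ranks)
        f"#SBATCH --time={time_limit}",      # job is terminated at this limit
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical partition, QOS, and program names.
script = slurm_script("hello", "develop", "short", 16, "00:10:00", "./hello_mpi")
print(script)
```

The resulting text would be saved to a file and submitted with sbatch; the job then waits in the queue as described above.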

The systems have a simple queue structure with two main levels of priority; the queue names reflect their priority. There is no longer a separate queue for the lowest priority "bonus jobs" as these are to be submitted to the other queues, and PBS lowers their priority within the queues.

Intel Xeon Sandy Bridge

express:

- Each node has 2x 8 core Intel Xeon E5-2670 (Sandy Bridge) 2.6GHz

- high priority queue for testing, debugging or quick turnaround

- charging rate of 3 SUs per processor-hour (walltime)

- small limits particularly on time and number of cpus

normal:

- Each node has 2x 8 core Intel Xeon E5-2670 (Sandy Bridge) 2.6GHz

- the default queue designed for all production use

- charging rate of 1 SU per processor-hour (walltime)

- allows the largest resource requests

copyq:

- specifically for IO work, in particular, mdss commands for copying data to the mass-data system.

- charging rate of 1 SU per processor-hour (walltime)

- runs on nodes with external network interface(s) and so can be used for remote data transfers (you may need to configure passwordless ssh).

- tar, compression, and other manipulation of /short files can be done in copyq.

- purely compute jobs will be deleted whenever detected.
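The charging rates listed above translate into a simple cost formula: service units (SUs) = rate × processors × walltime hours. The sketch below applies it; the function name and the sample job sizes are illustrative assumptions, and real accounting on a given system may include details not covered here.

```python
# Charging rates in SUs per processor-hour, as listed for each queue above.
CHARGE_RATE = {"express": 3, "normal": 1, "copyq": 1}


def job_charge(queue, ncpus, walltime_hours):
    """Return the SU charge for a job: rate * processors * walltime hours."""
    return CHARGE_RATE[queue] * ncpus * walltime_hours


# The same hypothetical 16-processor, 2-hour job in two queues.
print(job_charge("express", 16, 2))
print(job_charge("normal", 16, 2))
```

The threefold difference is the price of the quick turnaround that the express queue offers.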

Architecturally, Hyades is a cluster comprised of the following components:

| Component | QTY | Description |
|---|---|---|
| Master Node | 1 | Dell PowerEdge R820, 4x 8-core Intel Xeon E5-4620 (2.2 GHz), 128GB memory, 8x 1TB HDDs |
| Analysis Node | 1 | Dell PowerEdge R820, 4x 8-core Intel Xeon E5-4640 (2.4 GHz), 512GB memory, 2x 600GB SSDs |
| Type I Compute Nodes | 180 | Dell PowerEdge R620, 2x 8-core Intel Xeon E5-2650 (2.0 GHz), 64GB memory, 1TB HDD |
| Type IIa Compute Nodes | 8 | Dell PowerEdge C8220x, 2x 8-core Intel Xeon E5-2650 (2.0 GHz), 64GB memory, 2x 500GB HDDs, 1x Nvidia K20 |
| Type IIb Compute Nodes | 1 | Dell PowerEdge R720, 2x 6-core Intel Xeon E5-2630L (2.0 GHz), 64GB memory, 500GB HDD, 2x Xeon Phi 5110P |
| Lustre Storage | 1 | 146TB of usable storage served from a Terascala/Dell storage cluster |
| ZFS Server | 1 | SuperMicro Server, 2x 4-core Intel Xeon E5-2609V2 (2.5 GHz), 64GB memory, 2x 120GB SSDs, 36x 4TB HDDs |
| Cloud Storage | 1 | 1PB of raw storage served from a Huawei UDS system |
| InfiniBand | 17 | 17x Mellanox IS5024 QDR (40Gb/s) InfiniBand switches, configured in a 1:1 non-blocking Fat Tree topology |
| Gigabit Ethernet | 7 | 7x Dell 6248 GbE switches, stacked in a Ring topology |
| 10-gigabit Ethernet | 1 | 1x Dell 8132F 10GbE switch |

Master Node

The Master/Login Node is the entry point to the Hyades cluster. It is a Dell PowerEdge R820 server that contains four (4x) 8-core Intel Sandy Bridge Xeon E5-4620 processors at 2.2 GHz, 128 GB memory and eight (8x) 1TB hard drives in a RAID-6 array.

Primary tasks to be performed on the Master Node are:

- Editing codes and scripts

- Compiling codes

- Short test runs and debugging runs

- Submitting and monitoring jobs

UPDATE: Please try the updated SL6 image ami-d60185bf to fix SSH key issues.

If you haven't heard of StarCluster from MIT, it is a toolkit for launching clusters of virtual compute nodes within the Amazon Elastic Compute Cloud (EC2). StarCluster provides a simple way to utilize the cloud for research, scientific, high-performance and high-throughput computing.

StarCluster defaults to using Ubuntu Linux (deb) images for its base, but I have

prepared a Scientific Linux (rp

I've been experimenting with Amazon EC2 for prototyping HPC clusters. Spinning up a cluster of micro instances is very convenient for testing software and systems configurations. In order to facilitate training and discussion, my Python script is now available on GitHub. This piece of code utilizes the Fabric and Boto Python modules. An Amazon Web Services account is required to use this script.

Softpanorama Recommended

- HPC Cluster Nodes - High Performance Computing - Wiki - High Performance Computing - Dell Community

- PowerEdge M1000e Blade Enclosure Details Dell

- PowerEdge M620 Blade Server Details Dell

- High-performance computing (HPC) Dell

[1] Becker, Donald J.; Sterling, Thomas; Savarese, Daniel; Dorband, John E.; Ranawake, Udaya A.; Packer, Charles V. "BEOWULF: A parallel workstation for scientific computation", in Proceedings, International Conference on Parallel Processing, vol. 95 (1995). URL http://www.phy.duke.edu/~rgb/brahma/Resources/beowulf/papers/ICPP95/icpp95.html


Copyright © 1996-2021 by Softpanorama Society. www.softpanorama.org

was initially created as a service to the (now defunct) UN Sustainable Development Networking Programme (SDNP)

without any remuneration. This document is an industrial compilation designed and created exclusively

for educational use and is distributed under the Softpanorama Content License.

Original materials copyright belong

to respective owners. Quotes are made for educational purposes only

in compliance with the fair use doctrine.


Last modified:

September, 12, 2017