|

|

Home | Switchboard | Unix Administration | Red Hat | TCP/IP Networks | Neoliberalism | Toxic Managers |

| (slightly skeptical) Educational society promoting "Back to basics" movement against IT overcomplexity and bastardization of classic Unix | |||||||

By Dr. Nikolai Bezroukov

Version 0.7 (Oct 09, 2020)

|

|

There's no benefits to rewriting a ton of code from one similar language to another. This is especially stupid, if we are talking about sysadmin utilities. Perl 5 is here to stay and will not go anywhere. Perl is much closer to shell, knowledge of which is a must for any sysadmin then Python. Any sysadmin worth its title knows Bash and AWK, even if he does not know Perl; and that means that he/she can at least superficially understand Perl code.

But popularity of Python and its dominance in research creates pressure for Perl programmers to learn Python. also sometimes the test of maintaining Perl code gets into the hand of Python programmers who iether do not know or hate Perl. In such cases it make sense to try to migrate some of utilities that can benefit from Python features (to example tuples) to Python, so that they can be maintained in Python. For Perl programmer who want to lean some Python this is probably the fastest way to learn Python. People who wrote complex Perl scripts can eventually adapt to Python idiosyncrasies, although not without huge effort and pain in the process. For experienced Perl programmer many things in Python look wrong and many unnecessary convoluted and. worse, completely detached from Unix culture. That latter is true as Python created came from Europe and was influenced (albeit indirectly) to "Pascal family" tradition, the set of language developed by famous language designer Nicklaus Wirth and which is completely distinct from C-language tradition which dominated in the USA. Although formally ABC which was a direct Python prototype was influenced by SETL originally developed by (Jack) Jacob T. Schwartz at the New York University (NYU) Courant Institute of Mathematical Sciences in the late 1960s. Also developers previously used some idiosyncratic "teaching" OS.

BTW Nicklaus Wirth he popularized the adage now named Wirth's law, which states that software is getting slower more rapidly than hardware becomes faster. In his 1995 paper A Plea for Lean Software he attributes it to Martin Reiser , who in the preface to his book on the Oberon System wrote:

"The hope is that the progress in hardware will cure all software ills. However, a critical observer may observe that software manages to outgrow hardware in size and sluggishness."[3] Other observers had noted this for some time before; indeed, the trend was becoming obvious as early as 1987.[4].

I think Wirth would strongly disapprove Python 3.8 :-)

At the very beginning any Perl programmer feels about Python like experienced floor dancer put on skates and pushed on a skating ring.

|

At the very beginning any Perl programmer feels about Python like experienced floor dancer put on skates and pushed on a skating ring |

Here the sweet dream of semi-automatic translation comes into play. The author is trying to implement this idea with his pythonizer tool.

Some progress was achieved during a year of development. See Two-pass "fuzzy" transformer from Perl to "semi-Python" Currently pythonizer is version 0.5, but despite being essentially in alpha stage it proved to be pretty useful for such tasks. At least for me.

But of course transliteration is never enough. Rewriting even medium complexity Perl script in Python, usually requires some level of redesign, as languages have different ways to express things and there is no direct mapping between several functions and special variables from Perl to Python. Arrays in Perl behave differently (Python has fixed sized arrays, while Perl has "automatically expandable" arrays, so the assignment of index out of range expands the array in Perl and generates an exception in Python (which can be used to expand the array to necessary size). But that means that a typical loop that create array in Perl is not directly translatable in Python.

Also set of text processing function is Python is richer and better thought out, so in many cases instead of regular expressions you can use them.

Many simple Perl regex (for example used for stripping leading or trailing blanks) can be rewritten with functions in Python. But the problem is that the set of modifiers in Python is more limited. Due to this translation of some functions from Perl to Python requires jumping through hoops. One classic example is the translation of the statement $line=~tr/:,;/ /s

Also even if a similar function exists in Python there is no guarantee that it behaves the same way as corresponding Perl function. For example, while $^O gives you operating system in Perl as "linux" on linux and "cygwin" on CygWin, Python os.name gives you "posix" in both cases. The same is true for other flavors of Unix, for example, for Solaris. It also returns posix in Python.

Python 2.7 probably will stay with us much longer then Python designer anticipated. And there are several reasons for this and first of all change of string representation from ASCII to Unicode in Python 3.

Python idiosyncratic for loop that uses range in Python 2.x allocates all elements on the range at once and only then iterates over them. To compensate for this design blunder (outside usage for intro courses) Perl 2.7 has xrange function. But the function xrange() has been removed in python 3.5

One of the headaches of translating Perl assignments within conditional statements was absence of corresponding Python feature. And this is explainable as Python was first designed as the language for novices, kind of new Basic. And such construct is error prone and benefits mainly seasoned programmers.

Python 3.8 implements the walrus operator ( C-style assignment expression), which looks like this:

name := expression

Slightly artificial but simple example (most commonly walrus is used when you search sub-string in the string and want to preserve the starting position of this search)

my_list = [1,2,3,4,5]

if len(my_list) > 3:

print(f"The list is too long with {len(my_list)}

elements")

In this case the walrus operator eliminates the need to call the len() function twice.

my_list = [1,2,3,4,5]

if (n := len(my_list)) > 3:

print(f"The list is too long with {n} elements")

The walrus also can be used with for and while loops. It simplifies translating Perl while loop that otherwise generally need to be translated by duplicating the code in while clause before and at the end of the loop (the only viable method of translation Perl while loop with the assignment if the target is Python 2,7) .

Without walrus operator:

line = file.read() while line: process(line) line = file.read()

With walrus operator the loop resembles the typical Perl loop while( $line=<> ) much more:

while line := file.read(): process(line)

F-stings used in example above introduced in Python 3.6 more or less corresponds to Perl double quoted constants (new is well forgotten old). They simplify the translation of Perl double quotes literals, which otherwise need to be decompiled into series of concatenations. Problem remains abut they are much less:

print(f"Hello, {name}. You are {age}.")

In Python 2.7 print is a statement while in 3.x it is a function with additional capabilities due to availability of keyword arguments. Keyword argument end="" is used to avoid printing a newline at the end of function call.

Only simple scripts that use non OO subset of Perl5 can be semi-automatically translated. Luckily, they constitute significant share of useful Perl scripts, especially small (less then 1K lines). By semi-automatically I means that you can automatically find close correspondence for approximately 80-90% of statements, and do the rest of conversion manually. In other words as the first step you can generate "Pythonized" Perl and then try to correct/transform remaining differences and problems by hand. This approach works well for the majority of monolithic simple ( non OO) scripts.

For non OO subset of Perl 5 there are also greater similarities with Python: a very similar precedence model for operators, access to a very similar set of basic data types – the familiar scalar, array, and hash are all available to us, albeit under different names. Regex are different, but basics of Perl regex are similar to Python re.match and re.matchall. Many simple Perl regex operation, such as trimming leading or tail blanks are better implemented in Python using built-in functions.

As soon as you encounter the script with Perl5 pointers or OO, the situation became close to hopeless and complete rewrite might be a better option then semi-automated translation. Which probably involves algorithm redesign, so this task can be classified as "software renovation"

The test below shows the current capabilities version pythonizer the source code of which was recently uploaded to GitHub It was run using the source of pre_pythonizer.pl -- the script which was already posted on GitHub (see see pre_pythonizer.pl ):

Full protocol is available: Full protocol of translation of pre_pythonizer.pl by the current version of pythonizer. Here is a relevant fragment:

PYTHONIZER: Fuzzy translator of Python to Perl. Version 0.50 (last modified 200831_0011) Running at 20/08/31 09:11

Logs are at /tmp/Pythonizer/pythonizer.200831_0911.log. Type -h for help.

================================================================================

Results of transcription are written to the file pre_pythonizer.py

==========================================================================================

... ... ...

54 | 0 | |#$debug=3; # starting from Debug=3 only the first chunk processed

55 | 0 | |STOP_STRING='' # In debug mode gives you an ability to switch trace on any type of error message for example S (via hook in logme).

#PL: $STOP_STRING='';

56 | 0 | |use_git_repo='' #PL: $use_git_repo='';

57 | 0 | |

58 | 0 | |# You can switch on tracing from particular line of source ( -1 to disable)

59 | 0 | |breakpoint=-1 #PL: $breakpoint=-1;

60 | 0 | |SCRIPT_NAME=__file__[__file__.rfind('/')+1:] #PL: $SCRIPT_NAME=substr($0,rindex($0,'/')+1);

61 | 0 | |if (dotpos:=SCRIPT_NAME.find('.'))>-1: #PL: if( ($dotpos=index($SCRIPT_NAME,'.'))>-1 ) {

62 | 1 | | SCRIPT_NAME=SCRIPT_NAME[0:dotpos] #PL: $SCRIPT_NAME=substr($SCRIPT_NAME,0,$dotpos);

64 | 0 | |

65 | 0 | |OS=os.name # $^O is built-in Perl variable that contains OS name

#PL: $OS=$^O;

66 | 0 | |if OS=='cygwin': #PL: if($OS eq 'cygwin' ){

67 | 1 | | HOME='/cygdrive/f/_Scripts' # $HOME/Archive is used for backups

#PL: $HOME="/cygdrive/f/_Scripts";

68 | 0 | |elif OS=='linux': #PL: elsif($OS eq 'linux' ){

69 | 1 | | HOME=os.environ['HOME'] # $HOME/Archive is used for backups

#PL: $HOME=$ENV{'HOME'};

71 | 0 | |LOG_DIR=f"/tmp/{SCRIPT_NAME}" #PL: $LOG_DIR="/tmp/$SCRIPT_NAME";

72 | 0 | |FormattedMain=('sub main\n','{\n') #PL: @FormattedMain=("sub main\n","{\n");

73 | 0 | |FormattedSource=FormattedSub.copy #PL: @FormattedSource=@FormattedSub=@FormattedData=();

74 | 0 | |mainlineno=len(FormattedMain) # we need to reserve one line for sub main

#PL: $mainlineno=scalar( @FormattedMain);

75 | 0 | |sourcelineno=sublineno=datalineno=0 #PL: $sourcelineno=$sublineno=$datalineno=0;

76 | 0 | |

77 | 0 | |tab=4 #PL: $tab=4;

78 | 0 | |nest_corrections=0 #PL: $nest_corrections=0;

79 | 0 | |keyword={'if': 1,'while': 1,'unless': 1,'until': 1,'for': 1,'foreach': 1,'given': 1,'when': 1,'default': 1}

#PL: %keyword=('if'=>1,'while'=>1,'unless'=>1, 'until'=>1,'for'=>1,'foreach'=>1,'give

Cont: n'=>1,'when'=>1,'default'=>1);

80 | 0 | |

81 | 0 | |logme(['D',1,2]) # E and S to console, everything to the log.

#PL: logme('D',1,2);

82 | 0 | |banner([LOG_DIR,SCRIPT_NAME,'PREPYTHONIZER: Phase 1 of pythonizer',30]) # Opens SYSLOG and print STDERRs banner; parameter 4 is log retention period

#PL: banner($LOG_DIR,$SCRIPT_NAME,'PREPYTHONIZER: Phase 1 of pythonizer',30);

83 | 0 | |get_params() # At this point debug flag can be reset

#PL: get_params();

84 | 0 | |if debug>0: #PL: if( $debug>0 ){

85 | 1 | | logme(['D',2,2]) # Max verbosity

#PL: logme('D',2,2);

86 | 1 | | print(f"ATTENTION!!! {SCRIPT_NAME} is working in debugging mode {debug} with autocommit of source to {HOME}/Archive\n",file=sys.stderr,end="")

#PL: print STDERR "ATTENTION!!! $SCRIPT_NAME is working in debugging mode $debug with

Cont: autocommit of source to $HOME/Archive\n";

87 | 1 | | autocommit([f"{HOME}/Archive",use_git_repo]) # commit source archive directory (which can be controlled by GIT)

#PL: autocommit("$HOME/Archive",$use_git_repo);

89 | 0 | |print(f"Log is written to {LOG_DIR}, The original file will be saved as {fname}.original unless this file already exists ")

#PL: say "Log is written to $LOG_DIR, The original file will be saved as $fname.origi

Cont: nal unless this file already exists ";

90 | 0 | |print('=' * 80,'\n',file=sys.stderr) #PL: say STDERR "=" x 80,"\n";

91 | 0 | |

92 | 0 | |#

93 | 0 | |# Main loop initialization variables

94 | 0 | |#

95 | 0 | |new_nest=cur_nest=0 #PL: $new_nest=$cur_nest=0;

96 | 0 | |#$top=0; $stack[$top]='';

97 | 0 | |lineno=noformat=SubsNo=0 #PL: $lineno=$noformat=$SubsNo=0;

98 | 0 | |here_delim='\n' # impossible combination

#PL: $here_delim="\n";

99 | 0 | |InfoTags='' #PL: $InfoTags='';

100 | 0 | |SourceText=sys.stdin.readlines().copy #PL: @SourceText=<STDIN>;

101 | 0 | |

102 | 0 | |#

103 | 0 | |# Slurp the initial comment block and use statements

104 | 0 | |#

105 | 0 | |ChannelNo=lineno=0 #PL: $ChannelNo=$lineno=0;

106 | 0 | |while 1: #PL: while(1){

107 | 1 | | if lineno==breakpoint: #PL: if( $lineno == $breakpoint ){

109 | 2 | | pdb.set_trace() #PL: }

110 | 1 | | line=line.rstrip("\n") #PL: chomp($line=$SourceText[$lineno]);

111 | 1 | | if re.match(r'^\s*$',line): #PL: if( $line=~/^\s*$/ ){

112 | 2 | | process_line(['\n',-1000]) #PL: process_line("\n",-1000);

113 | 2 | | lineno+=1 #PL: $lineno++;

114 | 2 | | continue #PL: next;

116 | 1 | | intact_line=line #PL: $intact_line=$line;

117 | 1 | | if intact_line[0:1]=='#': #PL: if( substr($intact_line,0,1) eq '#' ){

118 | 2 | | process_line([line,-1000]) #PL: process_line($line,-1000);

119 | 2 | | lineno+=1 #PL: $lineno++;

120 | 2 | | continue #PL: next;

122 | 1 | | line=normalize_line(line) #PL: $line=normalize_line($line);

123 | 1 | | line=line.rstrip("\n") #PL: chomp($line);

124 | 1 | | (line)=line.split(' '),1 #PL: ($line)=split(' ',$line,1);

125 | 1 | | if re.match(r'^use\s+',line): #PL: if($line=~/^use\s+/){

126 | 2 | | process_line([line,-1000]) #PL: process_line($line,-1000);

127 | 1 | | else: #PL: else{

128 | 2 | | break #PL: last;

130 | 1 | | lineno+=1 #PL: $lineno++;

131 | 0 | |#while

While only a small fragment is shown, the program was able to translate (or more correctly transliterate) all the code.

also the current version does not attempt to match types and convert numeric values into string, when necessary. For example, instead of:

InfoTags="=" + cur_nestthere should be:

InfoTags="=" + str(cur_nest)

For full protocol see Full protocol of translation of pre_pythonizer.pl by version 0.07 of pythonizer

For some additional information see Two-pass "fuzzy" transformer from Perl to "semi-Python"

The idea that that you can define your own types with a set operation on them sounds great, but the devil is in detail. As soon as you start using somebody else modules you are thrown into the necessity of learning of underling type system used in the package. And this is not a trivial exercise as the package designer typically has much higher level of understanding of language than you. And uses it in more complex way, including the use and abuse of type system.

For a Perl programmer the benefits of more strict type system are not obvious, but the pain of using it is quite evident from the very beginning.

We will illustrate subtle difficulties arising in more strict type system on example of two very simple Perl functions: functions defined and exists .

The function defined is used in Perl for determining if element of the array with the particular index exists. But in Python the attempt to access the array element with subscript above max element allocated generates exception so you need to check first if the index is within the bounds, and only then check for the existence of the particular element.

For scalar variables in Perl defined function determines if the variable was assigned any value, or not. If not it returns special value undef, similar to Python None (see discussion at Stack Overflow ). But Python variables don't have an initial, empty state. Python variables are bound (only) when they're defined. You can't create a Python variable without giving it a value. So Python fragment

>>> if a == None:

... print ("OK")

...

Un-expectantly print nothing and does not produce any error message.

NOTE: in Perl attempt to assign an element above current upper bound simply extends the array to this new bound, no questions asked.

If element does not exists and you use the value in expression Perl convents undef in two usable default values depending on context (string operation, or numeric):

All this needs to be manually programmed in Python.

Function exists in Perl is applicable to hashes and checks if element of the hash with the particular key exists.

And in different contexts Python programmer use different expressions of the same:

if d is a dictionary, d.get(k) will return d[k] if it exists, but None if d has no key k.

NOTE: Perl exists returns 1, if the element exists and 0 if it is not (not undef) if the value of the hash element with the given key is undef. So in Perl the element of hash can exist, but has not been defined.

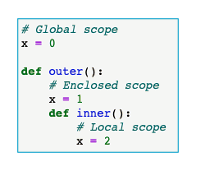

Language designers usually have pretty strange and different tastes as for the rules of visibility of variables and lifespan of variables. Here lies one of the main problem with conversion of Perl script into Python.

Generally the part of a program where a variable is accessible is called the scope of the variable, and the duration for which the variable exists its lifetime.

Classic rules were established by PL/1 in late 60th and they include three parameters

Visibility of variables is a tricky concept. It is somewhat similar to Unix hierarchical filesystem structure and the concept of permissions on files.

It can be regulated only by special types of blocks (subroutines in Python), or all blocks including loops and if statement blocks like in Perl. Not that it matter much but that complicates exact translation as there is no way to exactly translate such typical usage of my variable in Perl in such simple construct as:

for( my $i; $i<@text_array; $i++){

...

}

Here $i in undefined outside the loop body, which does not have a proper Python analog as in Python local variables visibility is subroutines block.

Python uses "birth on the first assignment" concept: variables comes into being when it is initialized. If variable first initialized within a subroutine, it gets a local scope, else a global scope. So the scope (local or global) is determined implicitly, based on the point in which the variable is initialized:

foo = 12 bar = 19

The variable also can be explicitly declared as global so that subroutines within a program can see and change it. But the trick is that this variable should already exist in main namespace. If it does not exists Python interpreter complains that this is a name error. In other words you need to initialize global variables first before you can use global declaration in subroutines.

Variable is local if outer subroutines can's see and change it. But Python introduced "sunglass" visibility for global variables: global variables in Python declared in outer scope of a given subroutine are restricted to "read-only mode" -- the "inner" subroutines can see them and fetch their value (read them), but can't change them without additional declaration "global". This visibility mode does not have direct analogs in Perl so Perl global variables need to be made visible in subroutines by explicitly declaring them as global.

Bu that's only beginning of the troubles. Even worse is that fact that Python does not allow to initialize the global variable at declaration. So Perl initialization of such variables need to be does separately and in some case (state variables) moved to other context or you need to jump though the hoops and introduce special variable that record number of invocations of the subroutine.

In Perl, which originated from shell and AWK, any global variable can be declared and changed in any subroutine without limitations, unless they are masked by declaration of local (my) variable with the same name. By default all declaration of variables in Perl are global unless pragma strict is used. In version 5.10 with the introduction of state variables it becomes more tricky.

In Perl local variables can be static (called state): they are created and initialized on loading the program or module and retain value from one subroutine invocation to another.

It does not matter whether they were declared in a subroutine or not: subroutine defines only scope for them, not the lifespan. Unfortunately there are no such variables in Python and you need to emulate them with global variables.

NOTE: To emulate Perl behaviour of state variables with Python global variables you need to avoid conflicts with "regular" global variables used in main or other subs; to achieve that you can prefix the name of the variable with the name of the sub. So that

sub mysub { state $limit=132; ... ... ... }translated to something like

def mysub: global mysub_limitmysub_limit=132def mysub2 global message_levelmessage_level=3

As you can see the translation involved two steps:

def mysub():

global mysub_state_init_done

global a,b,c

if mysub_state_init_done==0:

[a,b,c]=[0,1,3]

mysub_state_init_done==1

mysub_state_init_done=0

mysub()

print(a,b,c)

In Python you can't initialize global variables along with declaration.

# python3.8 globals.py

File "globals.py", line 4

global s = "initialization_in_global_statement_is_prohibited"

^

SyntaxError: invalid syntax

While Perl scripts with program script behave better, many old scripts do not use this pragma. Such "ancient" scripts tend to overuse global variables (partially because initially in Perl those were the only variable available)

In Perl global variable can be declared any subroutine and even if their use is limited to subroutines they are still global. Actually this is the default scope and visibility of variables in Perl, which stems from shell. if Unix filesystem terms all files in Perl have by default mode 777. That's why later Perl tried to restrict this with pragma strict.

In Python you can see variables that are declared in outer scope -- that is, get their values -- but you can't set their values without using a nonlocal or global statement.

A variable which is defined inside a function is local to that function. It is accessible from the point at which it is defined until the end of the function, and exists for as long as the function is executing.

The parameter names in the function definition behave like local variables, but they contain the values that we pass into the function when we call it.

In Perl, the my declares a lexical variable. A variable can be initialized with =. This variable can either be declared first and later initialized or declared and initialized at once.

my $foo; # declare $foo = 12; # initialize my $bar = 19; # both at once

Also, as you may have noticed, variables in Perl usually start with sigils -- symbols indicating the type of their container. Variables starting with a $ hold scalars. Variables starting with an @ hold arrays, and variables starting with a % hold a hash (dict). Sigilless variables, declared with a \ but used without them, are bound to the value they are assigned to and are thus immutable.

Please note that, from now on, we are going to use sigilless variables in most examples just to illustrate the similarity with Python. That is technically correct, but in general we are going to use sigilless variables in places where their immutability (or independence of type, when they are used in signatures) is needed or needs to be highlighted.

s = 10

L = [1, 2, 3]

d = { a : 12, b : 99 }

print s

print l[2]

print d['a']

# 10, 2, 12

Perl

my $s = 10;

my @l = 1, 2, 3;

my %d = (a => 12, b => 99);

my \x = 99;

say $s;

say @l[1];

say $d{'a'}

say x;

# 10, 2, 12, 99

Blocks

In Python, indentation is used to indicate a block so { and } in Perl translated into indent in Python. Blocks in Perl and Python

determine visibility. But only Per allow arbitrary blocks. In Python you are out of luck and need some conditional

prefix

[127] # cat blocks.py

:

print( "block nestin 1")

:

print(block nesting 2")

print("block nesting zero")

Instead you need something like

[127] # cat blocks.py

if True :

print( "block nestin 1")

if True :

print(block nesting 2")

print("block nesting zero")

So you need to invent prefixes:

if True :

print( "block nesting 1")

if True :

print("block nesting 2")

print("block nesting zero")

This Fortran-style

solution has its pluses and minuses. In a way you can view ":" as an equivalent of opening bracket in Perl, which makes the

situation half less bizarre.

Python

if 1 == 2:

print "Wait, what?"

else:

print "1 is not 2."

Perl

if( 1 == 2 ){

say "Wait, what?"

} else {

say "1 is not 2."

}

If you strongly allergic to absence of closing bracket you can imitate it with comment of ';'. Parentheses are optional in both languages for expressions in conditionals, as shown above. Perl has yet another function of blocks -- it allow loop control statements such as last and next in any block. This capability

need to be emulated in Python.

Scope of variables

In Python, functions and classes create a new scope, but no other block constructor (e.g. loops, conditionals) creates a scope. In

Python 2, list comprehensions do not create a new scope, but in Python 3, they do.

In Perl any block create a new scope and local variable are limited ot this particular scope: my variables belong to the scope defined by curvy brackets in which

they are enclosed. Otherwise in Perl the variable is

simply global

Python

if True:

x = 10

print x

# x is now 10

Perl

if True {

my $x = 10

}

say $x

# error, $x is not declared in this scope

my $x;

if True {

$x = 10

}

say $x

# ok, $x is 10

Python

x = 10

for x in 1, 2, 3:

pass

print x

# x is 3

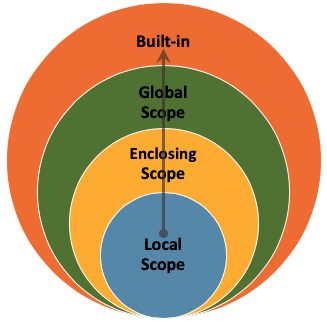

LEGB (Local -> Enclosing -> Global -> Built-in) rule

Whenever a variable is defined outside of any function, it becomes a global variable, and its scope is anywhere within the program.

Which means it can be read by any function but written only by functions which declare it as global.

The following quote was

borrowed from Python Scope & the LEGB Rule- Resolving Names in Your

Code – Real Python with some clarifications

LEGB (Local -> Enclosing -> Global -> Built-in) is the logic followed by a Python interpreter when it is executing your program.

Let's say you're calling print(x) within inner(),

which is a function nested in outer().

Then Python will first look if "x" was defined locally within inner().

If not, the variable defined in outer() will

be used. This is the enclosing function. If it also wasn't defined there, the Python interpreter will go up another level - to

the global scope. Above that, you will only find the built-in scope, which contains special variables reserved for Python itself.

So far, so good!

Here is another useful discussion, this time in Stack Overflow (

Python variable visibility)

range_dur = 0

xrange_dur = 0

def do_range():

nonlocal range_dur

start = time.time()

for i in range(2,10):

print i

range_dur += time.time() - start

def do_xrange():

nonlocal xrange_dur

start = time.time()

for i in xrange(2,10):

print i

xrange_dur += time.time() - start

do_range()

do_xrange()

print range_dur

print xrange_dur

if __name__ == '__main__':

main()

Prefix and postfix operators "++" and '--"

When people coming from C/C++, Java or Perl write x[++i] or if ++i > len(line): it will compile in Python.

But the meaning is different and incorrect. In Python ++ is not a special operator. It is two unary operators "+", which does not change

the value of the variable. Opps !

Only starting from Python 3.8 you can use walrus operator to imitate prefix "++" and "--" operators is subscripts like x[i:=i+1]

The situation with postfix ++ and -- operators is even more bizarre. They just do not exists and need to be emulated, which

is not easy. See

Behaviour of increment and decrement operators in Python - Stack Overflow One pretty tricky hack is

def PostIncr(name, local={}):

#Equivalent to name++

if name in local:

local[name]+=1

return local[name]-1

globals()[name]+=1

return globals()[name]-1

def PostDecrement(name, local={}):

#Equivalent to name--

if name in local:

local[name]-=1

return local[name]+1

globals()[name]-=1

return globals()[name]+1

In this case you are probably better off modifying algorithms to prefix notation or use iterable objects.

Lists in Perl vs Lists in Python

Dimension of lists in Perl are flexible. Which means that an assignment of the element above current size (above max

index) leads to automatic extension of

the list to the necessary size.

In Python the size of the list is fixed at creation and is, essentially, a part of its type. So it can be expanded only via append

operation, never via assignment. That leads to Pascal style allocation of two dimensional lists in Python, which suggests that iether

many Python programmers do not understand the language or the language has way too many gotchas:

myList=[[0] * n] * m # Pascal style allocation of two dimensional list(list of lists) -- Incorrect solution

And here troubles start (How

to initialize a two-dimensional array in Python- - Stack Overflow: )

You can do just this:

[[element] * numcols] * numrows

For example:

>>> [['a'] *3] * 2

[['a', 'a', 'a'], ['a', 'a', 'a']]

But this has a undesired side effect:

>>> b = [['a']*3]*3

>>> b

[['a', 'a', 'a'], ['a', 'a', 'a'], ['a', 'a', 'a']]

>>> b[1][1]

'a'

>>> b[1][1] = 'b'

>>> b

[['a', 'b', 'a'], ['a', 'b', 'a'], ['a', 'b', 'a']]

...In my experience, this "undesirable" effect is often a source of some very bad logical errors. ...Because of the undersired

side effect, you cannot really treat it as a matrix.

Here is another description of this gotcha

How to initialize a

two-dimensional array in Python -- Stack Overflow

Don't use [[v]*n]*n, it is a trap!

>>> a = [[0]*3]*3

>>> a

[[0, 0, 0], [0, 0, 0], [0, 0, 0]]

>>> a[0][0]=1

>>> a

[[1, 0, 0], [1, 0, 0], [1, 0, 0]]

but

t = [ [0]*3 for i in range(3)]

works great.

Assignment of complex structures, such as lists and hashes in Perl is "copy" assignment in terms of Python. It

creates a copy of the structure not just an alias to the pointer to this structure. This affects translation of assignment of

lists and hashes.

Generally the statement @a_list=@b_list in Perl requires copy method to be used in Python.

Assignments: copy of value vs copy of pointer

Assignment in Perl is almost always copy of value assignments, even for aggregate data types such as list, arrays and

hashes. They create a new variable as copy the content of the variable on the right side into it.

In Python assignments are copy assignment only for simple types (integer, strings, etc) and this is a trap for Perl programmers.

So the assignment for list should be written as application of the method copy to the list on the right side, not as a simple

assignment.

In other words, Perl

@x=@y

corresponds to Python

x=y.copy

NOTE: In Python the assignment x=y essentially copy the reference y to reference x and does not create new copy

of any aggregate varible. Some Python tutorials try to explain this using concept of labels, but this is bunk -- Python

operates with references to object just like Perl can.

Another problem is that widespread usage of strict pragma in Perl led to the annoying proliferation of usage of function

defined, to check if the variable is initialized. But the meaning of defined function is different then the

meaning of the variable having the value None on Python. In Python the usage of variable that was not assigned a value of

the right side of the assignment statement is a compile time error, if complier can detect this, and runtime error otherwise.

Due to this check var != None is not applicable to variable which are not yet defined (which in

Python means assigned a value).

As in Python the valuable that is first assigned value within function is local to this function assignment of

the value value None can be used for imitation of Perl my variables, which are not assigned the initial

(default) value at declaration.

Comparison of variable and type casting

Type casting means to convert variable data of one type to another type, In Perl type casting is done automatically during

comparisons, in Python you need explicitly use functions int(), float(), or str().

Perl enforces conversion of variables in comparison depending of the type of comparison (numeric or spring) for which like Unix

shell it uses two set of comparison operations: regular for numeric comparison and abbreviations like eq, gt,

lt for string comparison. The latter feature is a frequent source of errors in Perl, especially if a programmer uses

another language along with Perl, for example C or Javascript. So, for example, Perl if statement:

if ( $a > $b ) {

$max=$a;

}else{

$max=$b;

}

implicitly involves forced conversion to float of both operands, if they are strings. But in reality most Perl

programmers do not change type of their variables after the first assignment and thus in most cases the use of if float(a)>float(b):

would be is redundant. Few exception can be corrected by hand.

The real problem is the treatment of input values, when they are converted to numeric values. In Perl the string 123abc

will convert to 123 in numerical assignment. Python int and float function will not do that.

Another problem is that numeric type in Perl is by default represented by float even if this is a counter in the for loop.

Here Python offer usage of int. So if the initial value of the index is fractional you need explicit conversion to

int via floor function. But such cases are rare.

Function mapping

Python has around 100 built-in functions, while Python with its plethora of built-in functions and methods has much more. So some analogs to Perl

function almost always can be found but the devil is in details, as type

systems of both languages are radically different and conversion functions play much more prominent role in Python then in Perl. Two

examples when we have problems do exists for hashes vs dictionaries (for example the close analog in conditional statement is not a

function but in keyword; another is dict.has_key() function)

See Perl to Python functions map

Some Perl functions badly map to Python. One such function is tr. In Python translation of one character set to another

was made into two stage operation: first you need to create a map of characters using maketrans function, and only

then you can use translate function, using this map.

Another difficulty is that only modifier 'd' in Perl tr function is directly translatable. And its translation differs

between Python 2.7 and Python 3.x and even between Python 3.0-3.5 and Python 3.6-3.8.

All other modifiers (which means c, r and s) present difficulties:

- c Complement the SEARCHLIST. (need to be emulated Complement search list also need to be translated

manually. via deletion from full set of characters, the characters present in the string.

- d Delete found but unreplaced characters (can be translated).

- r Return the modified string and leave the original

string untouched. This is a tricky case which depends on context. In Perl normally tr is operation that modifies the

source string. With Python 'r' behaviour is default. So in most cases no translation is needed. This option is used very

rarely in Perl in any case and most Perl programmers do not suspect that it exists

- ($HOST = $host) =~ tr/a-z/A-Z/;

- $HOST = $host =~ tr/a-z/A-Z/r; # same thing

- @stripped = map tr/a-zA-Z/ /csr, @original; # /r with map

- s Squash duplicate replaced characters.If order to replicate squash modifier (s) you need to write you own tr

function which iterates over the string as there is no direct mapping.

Some idioms like

tr [\200-\377] [\000-\177]; # delete 8th bit

generally are translatable.

But in Python there is no way to know how many replacements in the string were made by the translate function so idioms like

if ($line=~tr/a/a/) {...}

Need to be translated into a custom written function.

Regular expressions

the standard way of using regex in Python is recompiling them via re.compile function. This is the only way to pass

regex modifiers to the regex.

Here’s a table of the available flags for re-complie , followed by a more detailed explanation of each one.

|

Flag |

Meaning |

|---|---|

|

|

Makes several escapes like |

|

|

Make |

|

|

Do case-insensitive matches. Corresponds to Perl i |

|

|

Do a locale-aware match. |

|

|

Multi-line matching, affecting |

|

|

Enable verbose REs, which can be organized more cleanly and understandably. |

in Perl modifier are as following

mTreat the string being matched

against as multiple lines. That is, change "^" and "$" from

matching the start of the string's first line and the end of its last line to matching the start and end of each line within the

string.

sTreat the string as single line.

That is, change "." to

match any character whatsoever, even a newline, which normally it would not match.

Used together, as /ms ,

they let the "." match

any character whatsoever, while still allowing "^" and "$" to

match, respectively, just after and just before newlines within the string.

i

Do case-insensitive pattern matching. For example, "A" will match "a" under /i .

x and xxExtend your pattern's legibility by permitting whitespace and comments. Details in /x and /xx

p

Preserve the string matched such that ${^PREMATCH} , ${^MATCH} ,

and ${^POSTMATCH} are

available for use after matching.

In Perl 5.20 and higher this is ignored. Due to a new

copy-on-write mechanism, ${^PREMATCH} , ${^MATCH} ,

and ${^POSTMATCH} will

be available after the match regardless of the modifier.

a , d , l ,

and uThese modifiers, all new in 5.14, affect which character-set rules (Unicode, etc.) are used, as described below in Character set modifiers.

n

Prevent the grouping metacharacters () from

capturing. This modifier, new in 5.22, will stop $1 , $2 , etc...

from being filled in.

There are a number of flags that can be found at the end of regular expression constructs that are not generic

regular expression flags, but apply to the operation being performed, like matching or substitution (m// or s/// respectively).

Flags described further in Using regular expressions in Perl in perlretut are:

Substitution-specific modifiers described in s/PATTERN/REPLACEMENT/msixpodualngcer in perlop are:

Bothe language deviate from classic C-language control structures but is a different ways. In booth language a better language and a set of control structure is hidden and strives to get our but can not.

Python introduced a new syntax for the for loop by using function range instead of specifying the condition for exit and increment like in classic C-loop. In most cases this is OK, although this is less flexible control structure than classic for loop.

Perl brazenly renamed C loop control statements (next instead of continue and last instead of break) under the false pretext of being more "English language like" -- the latter is fake justification that is often used to justify numerous Perl warts). Nothing can be further from English language then a programming language.

It also tried to innovate and introduced a new one -- continue block, which proved to be only marginally useful and is not used much to matter.

Python has just for loop and while loop. While loop is pretty much traditional:

my $j = 1;

while $j < 3 {

say $j;

$j += 1

}

Python for loop is idiosyncratic and deviates from C-language tradition:for i in range(1,3):

print i

j = 1

# 1, 2, 1, 2

Perl has traditional C-style for loops and while loops, which are well understood but suffers from a classic C-wart -- using round bracket to delimit conditionals. For loop in Perl allows multiple counters, the feature which proved to be a flop and never used.

(Perl also has until loop: repeat...until repeat...while which need to be emulated in Python, but they are rarely use, so this is not a problem.

Python implements C-style addition control operators within the loop: last leaves a loop in Perl, and is analogous to break in Python. continue in Python corresponds to the next in Perl.

Python

for i in range(10):

if i == 3:

continue

if i == 5:

break

print i

Using if as a statement modifier (as above) is acceptable in Perl, even outside of a list comprehension.

The yield statement within a for loop in Python, which produces a generator, is not available in Perl 5.

Python

def count():

for i in 1, 2, 3:

yield i

Both language has postfix conditionals, only postfix loop for some very strange reason called list comprehensions (may be because they are not that comprehensible).

Absence of postfix conditions is not a big deal, as prefix conditions are more clear, so instead of

return if( $i < $limit );

you can write

if i>limit: return

Python wants to be idiosyncratic here too. But, at least, such a construct is present (BTW it is not needed for novices at all ;-):

$imax=($a>$b) ? $a : $b;

imax=a if (a<b) else b

SyntaxError: invalid syntax >>> a=1 if True File "", line 1 a=1 if True ^ SyntaxError: invalid syntax >>> a=1 if True else 0

Declaring a function (subroutine) with def in Python is accomplished with sub in Perl.

def add(a, b):

return a + b

In Perl all arguments are converted into the array with the name @_ and are referenced by index. This is approach is similar to bash and it automatically provides variable number of parameters as well as the ability to check that number of parameters actually passed and set defaults fro missing without any additional language constructs.

The return in Perl is optional; if it is absent that the value of the last expression is used as the return value:

sub add {

$_[0]+$_[1]; # equivalent to return $_[0]+$_[1]; This idiosyncrasy is rarely used.

}

Python 2 functions can be called with positional arguments or keyword arguments. These are determined by the caller. In Python 3, some arguments may be "keyword only".

def speak(word, times):

for i in range(times):

print word

speak('hi', 2)

speak(word='hi', times=2)

sub speak

{

my ($work, $times)=@_;

say $word for ^$times

}

speak('hi', 2);

In Python 3, the input keyword is used to prompt the user. This keyword can be provided with an optional argument which is written to standard output without a trailing newline:

user_input = input("Say hi → ")

print(user_input)

looping over files given on the command line or stdin

The useful Perl idiom of:

while (<>) {

... # code for each line

}

loops over each line of every file named on the commandline when executing the script; or, if no files are named, it will loop over

every line of the standard input file descriptor. The Python fileinput module does a similar task:

import fileinput

for line in fileinput.input():

... # code to process each line

The fileinput module also allows in place editing or editing with the creation of a backup of the files.In Python 3 versions, open can act as iterator, so you would just write:

for line in open(filename):

... # code to process each line

If you want to read from standard in, then use it as the filename:

import sys

for line in open(sys.stdin):

... # code to process each line

If you want to loop over several filenames given on the command line, then you need to write an outer loop over the command line. (You

might also choose to use the fileinput module as noted above).

Perl does this implistly

import sys

for fname in sys.argv[1:]

for line in open(fname):

... # code to process each line

Tom Limoncelli in his post Python for Perl Programmers observed

There are certain Perl idioms that every Perl programmer uses: "while (<>) { foo; }" and "foo ~= s/old/new/g" both come to mind.When I was learning Python I was pretty peeved that certain Python books don't get to that kind of thing until much later chapters. One didn't cover that kind of thing until the end! As [a long-time Perl user]( https://everythingsysadmin.com/2011/03/overheard-at-the-office-perl-e.html ) this annoyed and confused me.

While they might have been trying to send a message that Python has better ways to do those things, I think the real problem was that the audience for a general Python book is a lot bigger than the audience for a book for Perl people learning Python. Imagine how confusing it would be to a person learning their first programming language if their book started out comparing one language you didn't know to a different language you didn't know!

So here are the idioms I wish were in Chapter 1. I'll be updating this document as I think of new ones, but I'm trying to keep this to be a short list.

Processing every line in a file

Perl:

while (<>) { print $_; }Python:

for line in file('filename.txt'): print lineTo emulate the Perl <> technique that reads every file on the command line or stdin if there is none:

import fileinput for line in fileinput.input(): print lineIf you must access stdin directly, that is in the "sys" module:

import sys for line in sys.stdin: print lineHowever, most Python programmers tend to just read the entire file into one huge string and process it that way. I feel funny doing that. Having used machines with very limited amounts of RAM, I tend to try to keep my file processing to a single line at a time. However, that method is going the way of the dodo.

contents = file('filename.txt').read() all_input = sys.stdin.read()If you want the file to be one string per line, with the newline removed just change read() to readlines()

list_of_strings = file('filename.txt').readlines() all_input_as_list = sys.stdin.readlines()Regular expressions

Python has a very powerful RE system, you just have to enable it with "import re". Any place you can use a regular expression you can also use a compiled regular expression. Python people tend to always compile their regular expressions; I guess they aren't used to writing throw-away scripts like in Perl:

import re RE_DATE = re.compile(r'\d\d\d\d-\d{1,2}-\d{1,2}') for line in sys.stdin: mo = re.search(RE_DATE, line) if mo: print mo.group(0)There is re.search() and re.match(). re.match() only matches if the string starts with the regular expression. It is like putting a "^" at the front of your regex. re.search() is like putting a ".*" at the front of your regex. Since match comes before search alphabetically, most Perl users find "match" in the documentation, try to use it, and get confused that r'foo' does not match 'i foo you'. My advice? Pretend match doesn't exist (just kidding).

The big change you'll have to get used to is that the result of a match is an object, and you pull various bits of information from the object. If nothing is found, you don't get an object, you get None, which makes it easy to test for in a if/then. An object is always True, None is always false. Now that code above makes more sense, right?

Yes, you can put parenthesis around parts of the regular expression to extract out data. That's where the match object that gets returned is pretty cool:

import re for line in sys.stdin: mo = re.search(r'(\d\d\d\d)-(\d{1,2})-(\d{1,2})', line) if mo: print mo.group(0)The first thing you'll notice is that the "mo =" and the "if" are on separate lines. There is no "if x = re.search() then" idiom in Python like there is in Perl. It is annoying at first, but eventually I got used to it and now I appreciate that I can't accidentally assign a variable that I meant to compare.

Let's look at that match object that we assigned to the variable "mo" earlier:

- mo.group(0) -- The part of the string that matched the regex.

- mo.group(1) -- The first ()'ed part

- mo.group(2) -- The second ()'ed part

- mo.group(1,3) -- The first and third matched parts (as a tuple)

- mo.groups() -- A tuple containing all the matched parts.

The perl s// substitutions are easily done with re.sub() but if you don't require a regular expression "replace" is much faster:

>>> re.sub(r'\d\d+', r'', '1 22 333 4444 55555') '1 ' >>> re.sub(r'\d+', r'', '9876 and 1234') ' and ' >>> re.sub(r'remove', r'', 'can you remove from') 'can you from' >>> 'can you remove from'.replace('remove', '') 'can you from'You can even do multiple parenthesis substitutions as you would expect:

>>> re.sub(r'(\d+) and (\d+)', r'yours=\1 mine=\2', '9876 and 1234') 'yours=9876 mine=1234'After you get used to that, read the ""pydoc re" page":http://docs.python.org/library/re.html for more information.

String manipulations

I found it odd that Python folks don't use regular expressions as much as Perl people. At first I though this was due to the fact that Python makes it more cumbersome ('cause I didn't like to have to do 'import re').

It turns out that Python string handling can be more powerful. For example the common Perl idiom "s/foo/bar" (as long as "foo" is not a regex) is as simple as:

credit = 'i made this' print credit.replace('made', 'created')or

print 'i made this'.replace('made', 'created')It is kind of fun that strings are objects that have methods. It looks funny at first.

Notice that replace returns a string. It doesn't modify the string. In fact, strings can not be modified, only created. Python cleans up for automatically, and it can't do that very easily if things change out from under it. This is very Lisp-like. This is odd at first but you get used to it. Wait... by "odd" I mean "totally fucking annoying". However, I assure you that eventually you'll see the benefits of string de-duplication and (I'm told) speed.

It does mean, however, that accumulating data in a string is painfully slow:

s = 'this is the first part\n' s += 'i added this.\n' s += 'and this.\n' s += 'and then this.\n'The above code is bad. Each assignment copies all the previous data just to make a new string. The more you accumulate, the more copying is needed. The Pythonic way is to accumulate a list of the strings and join them later.

s = [] s.append('this is the first part\n') s.append('i added this.\n') s.append('and this.\n') s.append('and then this.\n') print ''.join(s)It seems slower, but it is actually faster. The strings stay in their place. Each addition to "s" is just adding a pointer to where the strings are in memory. You've essentially built up a linked list of pointers, which are much more light-weight and faster to manage than copying those strings around. At the end, you join the strings. Python makes one run through all the strings, copying them to a buffer, a pointer to which is sent to the "print" routine. This is about the same amount of work as Perl, which internally was copying the strings into a buffer along the way. Perl did copy-bytes, copy-bytes, copy-bytes, copy-bytes, pass pointer to print. Python did append-pointer 4 times then a highly optimized copy-bytes, copy-bytes, copy-bytes, copy-bytes, pass pointer to print.

joining and splitting.

This killed me until I got used to it. The join string is not a parameter to join but is a method of the string type.

Perl:

new = join('|', str1, str2, str3)Python:

new = '|'.join([str1, str2, str3])Python's join is a function of the delimiter string. It hurt my brain until I got used to it.

Oh, the join() function only takes one argument. What? It's joining a list of things... why does it take only one argument? Well, that one argument is a list. (see example above). I guess that makes the syntax more uniform.

Splitting strings is much more like Perl...

Kind of. The parameter is what you split on, or leave it blank for "awk-like splitting" (which heathens call "perl-like splitting" but they are forgetting their history).

Perl:

my @values = split('|', $data);Python:

values = data.split('|');You can split a string literal too. In this example we don't give split() any parameters so that it does "awk-like splitting".

print 'one two three four'.split() ['one', 'two', 'three', 'four']If you have a multi-line string that you want to break into its individual lines, bigstring.splitlines() will do that for you.

Getting help

pydoc fooexcept it doesn't work half the time because you need to know the module something is in . I prefer the "quick search" box on http://docs.python.org or "just use Google".

I have not read ""Python for Unix and Linux System Administration":http://www.amazon.com/dp/0596515820/safocus-20" but the table of contents looks excellent. I have read most of Python Cookbook (the first edition, there is a 2nd edition out too) and learned a lot. Both are from O'Reilly and can be read on Safari Books Online.

That's it!

That's it! Those few idioms make up most of the Perl code I usually wrote. Learning Python would have been so much easier if someone had showed me the Python equivalents early on.

One last thing... As a sysadmin there are a few modules that I've found useful:

- subprocess -- Replaces the need to figure out Popen(), system() and a ton of other error-prone system calls.

- logging -- very nice way to log debug info

- os -- OS-independent ways to do things like copy files, look at ENV variables, etc.

- sys -- argv, stdio, etc.

- gflags -- My fav. flag/getopt replacement http://code.google.com/p/python-gflags/

- pexpect -- Like Expect.PM http://pexpect.sourceforge.net/

- paramiko -- Python access to ssh/scp/sftp. http://www.lag.net/paramiko/

See also

- PerlPhrasebook - http://wiki.python.org/moin/PerlPhrasebook is kind of ok, but hasn't been updated in a while.

Variables such as $_ (the result of operation, such as reading a line from a file) and $. (line number) gives a lot of troubles. Python implements neither. So they need to be emulated.

With mapping Perl functions to Python we also run into multiple problems. For example, while string function index exists in Python as method in string class, its behaviour is different from behaviour of Perl index function. It raises exception is substring is not found. You need to use a different string class methods find (and rfind) to imitate Perl behavior in which if substring is not found the function simply returns -1 making it compatible with Perl index behaviour.

Python 2.7 does not allow assignments in conditional expressions (for a good reason, as they can be a source of of subtle bugs) so in this case you iether need to translate the script into Python 3.8 or factored out of conditional expressions and convert them into statements the precede comparison:

61 | 0 | |dotpos=SCRIPT_NAME.find( '.') #Perl: if( ($dotpos=index($SCRIPT_NAME,'.'))>-1 ) {

61 | 0 | |if dotpos>-1:

62 | 1 | | SCRIPT_NAME=SCRIPT_NAME[0:dotpos] #Perl: $SCRIPT_NAME=substr($SCRIPT_NAME,0,$dotpos);

64 | 0 | |

Perl left hand substr function does not have direct Python analogs and needs to be emulated iether via concatenation or via join function.

Even larger problems exists with translation of double quoted and HERE strings. The closest analog are Python 3 f-strings. The latter is a literal string, prefixed with ‘f’, which contains expressions inside curvy braces. Each expression is replaced with their values. Like any Perl double quoted string, an f-string is really an expression evaluated at run time, not a constant value. (Python f-string tutorial - formatting strings in Python with f-string):

print(f'{name} is {age} years old')Python f-strings are available since Python 3.6. The string has the

fprefix and uses{}to evaluate variables.$ python formatting_st...

We can work with dictionaries in f-strings.

dicts.py#!/usr/bin/env python3 user = {'name': 'John Doe', 'occupation': 'gardener'} print(f"{user['name']} is a {user['occupation']}")...Python 3.8 introduced the self-documenting expression with the

debug.py=character.#!/usr/bin/env python3 import math x = 0.8 print(f'{math.cos(x) = }') print(f'{math.sin(x) = }')

The self-documenting expression are re-invention of PL/1 put data statement. They makes code more concise, readable, and less prone to error when printing variables with their names (and that is useful not only in debugging by in many "production" cases such as printing options, etc. )

To take advantage of this new feature, type your f-string as follows:

f'{expr=}'where

expris the variable that you want to expose. In this way, you get to generate a string that will show both your expression and its output.

And this is only the beginning of troubles with conversion from Perl to Python. Generally the more rich subset of Perl is used, the lessee are chances that semiautomatic translation is useful.

Several emulators of Perl features exist. for example fileinput.py that comes with the standard Python distribution allows to iterate between all files supplied as the argument (implicit cat for the files), while glob.py allow to return list of files for given regular expression.

So Perl behaviour when by default default arguments re viewed as files that are concatenated can be emulated this way

In addition, tempfile.py help to create temp files.

NOTE: BTW Python 3 (and to a lesser extent Python 2.5+) supports coroutines, so in many cases of sysadmins related scripts a large Perl script might be broken into several "passes" to make them simpler and make debugging more transparent. That's a distinct advantage which allows to create more manageable easier understandable programs.

While having there own set of warts, Python avoids three major Perl pitfalls and that also complicates translation:

Coercing type of operands by the operand, when any operator can convert the variable into a new type

(often with disastrous results as in case converting text string to a number). In reality most Perl programmers imitate "strong

typing" -- the type of a variable never changes during execution of the script. That helps a lot. But you need somehow to

figure this out for semi-automatic translations. Which means it requires preliminary pass (which is desirable from several other

standpoints)

Assignment of arrays and hashes ion Perl is copy method in Python.

There are many subtle differences is treating each of "composite" data types as as lists and

hashes/dictionaries between Perl and Python. For example the size of the list is one of "fixed" properties of the list in

Python (list can be extended only via append operator) but it is implicit operation in Perl. Which means that such simple

statement as $a[$i]=5

should be translated differently depending on whether $i is within the bound of the list or in upside them.

Perl operations with pointers generally are untranslatable directly into Python.

While we discuss the conversion of Perl to Python as something already decided, often it is better not to move to Python. Much depends on the skillset available on the floor. Outside simple scripts a direct conversion of reasonably complex medium size script (over 1K lines) is a very complex task even for high level professional who devoted much of his/her professional career to studying programming languages

A useful discussion can be found at Conversion of Perl to Python page and for details) and often requires restructuring of the code. If you need a small change in existing Perl code, usually correcting it directly is a better deal. Maybe adding use strict, and running the script via perltidy. You might benefit from buying some books like Perl Cookbook, or talking to your co-workers.

If you plan to re-write existing, working Perl code because you're worried about Perl being not-quite-as-purely OO as Python, then there is no help. You need to pay the price. That's actually a good punishment for their folly ;-). OO fundamentalists is the worst type of co-workers you can have in any case and the decision to occupy them with some semi-useless task is a good management decision.

The same is true for those who are worried about Perl obsolesce. Please note that many people who predicted the demise of Fortran are already gone, but Fortran is here to stay. This also can be a "programming fashion addicts" with the beta addicts mentality: "Ooh! I found this cool new OO language and want to share its coolness with everyone by converting old scripts to it!" Of course, programming is driven by fashion, much like woman clothing, but an excessive zeal in following fashion can cost you...

Learning Python typically requires forgetting Perl: few programmers are able to write fluently in both languages as are they sufficiently similar and at the same time require different code practices. Also the volume and the complexity of each language is so big that you just can't have enough memory cells for both. Of course, we ned to admin that games with Perl 6 (when people who decided to go this way knew in advance that they have neither resources, not talent to do a proper job) undermines Perl, and pushed many people to Python.

Perl is more close to Unix culture while Python looks like a completely foreign entity with its own idiosyncratic vision. Also the cost of running Python in many case is excessive and that's why it is now squeezes by Go language.

From the point of view of history of evolution of programming language, Python represents a descendant of Nicklaus Wirth family of languages (Pascal, Modula, Modula 2). Or more correctly it is a direct descendant of ABC ( white space for indentation, syntax of if statements with semicolon as the delimiter (bad idea if you ask me) as in if expression: syntax, etc) with some Perl ideas incorporated in the process (Perl 4 existed during the first several year of Python development and greatly influenced Python development)

Most system administrators who know Perl are quite satisfied with it. In Unix sysadmin domain Perl is slightly higher language then Python in a sense that you can write the same utility with less lines of code. Those sysadmin usually started their small projects in Python in order to better understand the needs and problem that users which use Python as their primary (and often the only) programming language face. This page is written for them. I assume high to medium level of proficiency in Perl on this page.

Actually studying Python allow better understand strong points of Perl. After using Python such thing that people generally do not value much like double quoted strings or integration of regular expression engine into the language now look in completely different light.

|

|

Switchboard | ||||

| Latest | |||||

| Past week | |||||

| Past month | |||||

Sep 29, 2020 | drjohnstechtalk.com

Pythonizer

If you fit a certain profile: been in IT for > 20 years, managed to crate a few utility scripts in Perl, ut never wrapped your head around the newer and flashier Python, this blog post is for you.

Conversely, if you have grown up with Python and find yourself stuck maintaining some obscure legacy Perl code, this post is also for you.

A friend of mine has written a conceptually cool program that converts Perl programs into Python which he calls a Pythonizer .

I'm sure it won't do well with special Perl packages and such. In fact it is an alpha release I think. But perhaps for those scripts which use the basic built-in Perl functions and operations, it will do the job.

When I get a chance to try it myself I will give some more feedback here. I have a perfect example in mind, i.e., a self-contained little Perl script which ought to work if anything will.

Conclusion

Old Perl programs have been given new life by Pythonizer, which can convert Perl programs into Python.

References and related

h ttps://github.com/softpano/pythonizer

Perl is not a dead language after all. Work continues on Perl 7, which will be known as v5.32. Should be ready next year: https://www.perl.com/article/announcing-perl-7/?ref=alian.info

Jan 01, 2014 | stackoverflow.com

Ask Question Asked 6 years, 3 months ago Active 7 months ago Viewed 77k times

Juicy ,

I am using:

grepOut = subprocess.check_output("grep " + search + " tmp", shell=True)To run a terminal command, I know that I can use a try/except to catch the error but how can I get the value of the error code?

I found this on the official documentation:

exception subprocess.CalledProcessError Exception raised when a process run by check_call() or check_output() returns a non-zero exit status. returncode Exit status of the child process.But there are no examples given and Google was of no help.

jfs ,

"Google was of no help" : the first link (almost there it showse.output), the second link is the exact match (it showse.returncode) the search term:CalledProcessError. – jfs May 2 '14 at 15:06DanGar , 2014-05-02 05:07:05

You can get the error code and results from the exception that is raised.

This can be done through the fields

returncodeandoutput.For example:

import subprocess try: grepOut = subprocess.check_output("grep " + "test" + " tmp", shell=True) except subprocess.CalledProcessError as grepexc: print "error code", grepexc.returncode, grepexc.outputDanGar ,

Thank you exactly what I wanted. But now I am wondering, is there a way to get a return code without a try/except? IE just get the return code of the check_output, whether it is 0 or 1 or other is not important to me and I don't actually need to save the output. – Juicy May 2 '14 at 5:12jfs , 2014-05-02 16:09:20

is there a way to get a return code without a try/except?

check_outputraises an exception if it receives non-zero exit status because it frequently means that a command failed.grepmay return non-zero exit status even if there is no error -- you could use.communicate()in this case:from subprocess import Popen, PIPE pattern, filename = 'test', 'tmp' p = Popen(['grep', pattern, filename], stdin=PIPE, stdout=PIPE, stderr=PIPE, bufsize=-1) output, error = p.communicate() if p.returncode == 0: print('%r is found in %s: %r' % (pattern, filename, output)) elif p.returncode == 1: print('%r is NOT found in %s: %r' % (pattern, filename, output)) else: assert p.returncode > 1 print('error occurred: %r' % (error,))You don't need to call an external command to filter lines, you could do it in pure Python:

with open('tmp') as file: for line in file: if 'test' in line: print line,If you don't need the output; you could use

subprocess.call():import os from subprocess import call try: from subprocess import DEVNULL # Python 3 except ImportError: # Python 2 DEVNULL = open(os.devnull, 'r+b', 0) returncode = call(['grep', 'test', 'tmp'], stdin=DEVNULL, stdout=DEVNULL, stderr=DEVNULL)mkobit , 2017-09-15 14:52:56

Python 3.5 introduced the

subprocess.run()method. The signature looks like:subprocess.run( args, *, stdin=None, input=None, stdout=None, stderr=None, shell=False, timeout=None, check=False )The returned result is a

subprocess.CompletedProcess. In 3.5, you can access theargs,returncode,stdout, andstderrfrom the executed process.Example:

>>> result = subprocess.run(['ls', '/tmp'], stdout=subprocess.DEVNULL) >>> result.returncode 0 >>> result = subprocess.run(['ls', '/nonexistent'], stderr=subprocess.DEVNULL) >>> result.returncode 2Dean Kayton ,

I reckon this is the most up-to-date approach. The syntax is much more simple and intuitive and was probably added for just that reason. – Dean Kayton Jul 22 '19 at 11:46Noam Manos ,

In Python 2 - use commands module:

import command rc, out = commands.getstatusoutput("ls missing-file") if rc != 0: print "Error occurred: %s" % outIn Python 3 - use subprocess module:

import subprocess rc, out = subprocess.getstatusoutput("ls missing-file") if rc != 0: print ("Error occurred:", out)Error occurred: ls: cannot access missing-file: No such file or directory

Jan 01, 2014 | stackoverflow.com

subprocess.check_output return code Ask Question Asked 6 years, 3 months ago Active 7 months ago Viewed 77k times

Juicy ,

I am using:

grepOut = subprocess.check_output("grep " + search + " tmp", shell=True)To run a terminal command, I know that I can use a try/except to catch the error but how can I get the value of the error code?

I found this on the official documentation:

exception subprocess.CalledProcessError Exception raised when a process run by check_call() or check_output() returns a non-zero exit status. returncode Exit status of the child process.But there are no examples given and Google was of no help.

jfs ,

"Google was of no help" : the first link (almost there it showse.output), the second link is the exact match (it showse.returncode) the search term:CalledProcessError. – jfs May 2 '14 at 15:06DanGar , 2014-05-02 05:07:05

You can get the error code and results from the exception that is raised.

This can be done through the fields

returncodeandoutput.For example:

import subprocess try: grepOut = subprocess.check_output("grep " + "test" + " tmp", shell=True) except subprocess.CalledProcessError as grepexc: print "error code", grepexc.returncode, grepexc.outputDanGar ,

Thank you exactly what I wanted. But now I am wondering, is there a way to get a return code without a try/except? IE just get the return code of the check_output, whether it is 0 or 1 or other is not important to me and I don't actually need to save the output. – Juicy May 2 '14 at 5:12jfs , 2014-05-02 16:09:20

is there a way to get a return code without a try/except?

check_outputraises an exception if it receives non-zero exit status because it frequently means that a command failed.grepmay return non-zero exit status even if there is no error -- you could use.communicate()in this case:from subprocess import Popen, PIPE pattern, filename = 'test', 'tmp' p = Popen(['grep', pattern, filename], stdin=PIPE, stdout=PIPE, stderr=PIPE, bufsize=-1) output, error = p.communicate() if p.returncode == 0: print('%r is found in %s: %r' % (pattern, filename, output)) elif p.returncode == 1: print('%r is NOT found in %s: %r' % (pattern, filename, output)) else: assert p.returncode > 1 print('error occurred: %r' % (error,))You don't need to call an external command to filter lines, you could do it in pure Python:

with open('tmp') as file: for line in file: if 'test' in line: print line,If you don't need the output; you could use

subprocess.call():import os from subprocess import call try: from subprocess import DEVNULL # Python 3 except ImportError: # Python 2 DEVNULL = open(os.devnull, 'r+b', 0) returncode = call(['grep', 'test', 'tmp'], stdin=DEVNULL, stdout=DEVNULL, stderr=DEVNULL)> ,

add a commentmkobit , 2017-09-15 14:52:56

Python 3.5 introduced the

subprocess.run()method. The signature looks like:subprocess.run( args, *, stdin=None, input=None, stdout=None, stderr=None, shell=False, timeout=None, check=False )The returned result is a

subprocess.CompletedProcess. In 3.5, you can access theargs,returncode,stdout, andstderrfrom the executed process.Example:

>>> result = subprocess.run(['ls', '/tmp'], stdout=subprocess.DEVNULL) >>> result.returncode 0 >>> result = subprocess.run(['ls', '/nonexistent'], stderr=subprocess.DEVNULL) >>> result.returncode 2Dean Kayton ,

I reckon this is the most up-to-date approach. The syntax is much more simple and intuitive and was probably added for just that reason. – Dean Kayton Jul 22 '19 at 11:46simfinite , 2019-07-01 14:36:06

To get both output and return code (without try/except) simply use subprocess.getstatusoutput (Python 3 required)

electrovir ,

please read stackoverflow.com/help/how-to-answer on how to write a good answer. – DjSh Jul 1 '19 at 14:45> ,

In Python 2 - use commands module:

import command rc, out = commands.getstatusoutput("ls missing-file") if rc != 0: print "Error occurred: %s" % outIn Python 3 - use subprocess module:

import subprocess rc, out = subprocess.getstatusoutput("ls missing-file") if rc != 0: print ("Error occurred:", out)Error occurred: ls: cannot access missing-file: No such file or directory

Aug 19, 2020 | stackoverflow.com

n This question already has answers here : subprocess.check_output return code (5 answers) Closed 5 years ago .

While developing python wrapper library for Android Debug Bridge (ADB), I'm using subprocess to execute adb commands in shell. Here is the simplified example:

import subprocess ... def exec_adb_command(adb_command): return = subprocess.call(adb_command)If command executed propery exec_adb_command returns 0 which is OK.

But some adb commands return not only "0" or "1" but also generate some output which I want to catch also. adb devices for example:

D:\git\adb-lib\test>adb devices List of devices attached 07eeb4bb deviceI've already tried subprocess.check_output() for that purpose, and it does return output but not the return code ("0" or "1").

Ideally I would want to get a tuple where t[0] is return code and t[1] is actual output.

Am I missing something in subprocess module which already allows to get such kind of results?

Thanks! python subprocess adb share improve this question follow asked Jun 19 '15 at 12:10 Viktor Malyi 1,761 2 2 gold badges 18 18 silver badges 34 34 bronze badges

> ,

add a comment 1 Answer Active Oldest VotesPadraic Cunningham ,

Popen and communicate will allow you to get the output and the return code.

from subprocess import Popen,PIPE,STDOUT out = Popen(["adb", "devices"],stderr=STDOUT,stdout=PIPE) t = out.communicate()[0],out.returncode print(t) ('List of devices attached \n\n', 0)check_output may also be suitable, a non-zero exit status will raise a CalledProcessError:

from subprocess import check_output, CalledProcessError try: out = check_output(["adb", "devices"]) t = 0, out except CalledProcessError as e: t = e.returncode, e.messageYou also need to redirect stderr to store the error output:

from subprocess import check_output, CalledProcessError from tempfile import TemporaryFile def get_out(*args): with TemporaryFile() as t: try: out = check_output(args, stderr=t) return 0, out except CalledProcessError as e: t.seek(0) return e.returncode, t.read()Just pass your commands:

In [5]: get_out("adb","devices") Out[5]: (0, 'List of devices attached \n\n') In [6]: get_out("adb","devices","foo") Out[6]: (1, 'Usage: adb devices [-l]\n')Noam Manos ,

Thank you for the broad answer! – Viktor Malyi Jun 19 '15 at 12:48

Aug 18, 2020 | axialcorps.wordpress.com

Don't Slurp: How to Read Files in Python Posted on September 27, 2013 by mssaxm

A few weeks ago, a well-intentioned Python programmer asked a straight-forward question to a LinkedIn group for professional Python programmers:

What's the best way to read file in Python?

Invariably a few programmers jumped in and told our well-intentioned programmer to just read the whole thing into memory:

f = open('/path/to/file', 'r+') contents = f.read()Just to mix things up, someone followed-up to demonstrate the exact same technique using 'with' (a great improvement as it ensures the file is properly closed in all cases):