|

|

Home | Switchboard | Unix Administration | Red Hat | TCP/IP Networks | Neoliberalism | Toxic Managers |

| (slightly skeptical) Educational society promoting "Back to basics" movement against IT overcomplexity and bastardization of classic Unix | |||||||

|

|

"Languages come and go,

but algorithms stand the test of time"

"An algorithm must be seen to be believed."

Donald Knuth

The quality of CS education is by-and-large determined by the knowledge of algorithms as well as OS and compilers internals (which utilize several rather complex algorithms). Unfortunately since late 70th the USA the level of CS education gradually deteriorated to the extent that even bright students graduate with rather superficial knowledge of those topics. As such they are second class programmers no matter how hard they try to learn the latest fancy OO-oriented language.

Why should you want to know more about algorithms? There are a number of possible reasons:

Those formulas mean that for sorting a small number of items simpler algorithms (or shellsort) are better than quicksort and for sorting large number of items mergesort is better as it can utilize natural orderness of the data ( can benefit from the fact that most "real" files are partially sorted; quicksort does not take into account this property; moreover it demonstrates worst case behavior on some nearly sorted files). Partially ordered files probably represent the most important in practice case of sorting that is often overlooked in superficial books. Often sorting is initiated just to put a few new records in proper place of the already sorted file.

BTW this is a good litmus test of a quality of any book that covers sorting;

if a book does not provide this information you might try to find another.

|

|

For all these reasons it is useful to have a broad familiarity with a range of algorithms, and to understand what types of problems they can help to solve. This is especially important for open source developers. Algorithms are intrinsically connected with data structures because data structures are dreaming to become elegant algorithms the same way ordinary people are dreaming about Hollywood actors ;-)

I would like to note that the value of browsing the WEB in search of algorithms is somewhat questionable :-). One probably will be much better off buying Donald Knuth's TAOCP and ... disconnecting from the Internet for a couple of months. Internet contains way too much noise that suppress useful signal... This is especially true about algorithms.

Note: MIX assembler is not very essential :-). Actually it represent class of computers which became obsolete even before the first volume Knuth book was published (with the release of IBM 360 which took world by storm). You can use Intel assembler if you wish. I used to teach TAOCP on mainframes and IBM assembler was pretty much OK. Knuth probably would be better off using a derivative of S/360 architecture and assembler from the very beginning ;-).Later he updated MIX to MMIX with a new instruction set for use in future volumes, but still existing three volumes use an old one. There are pages on Internet that have them rewritten in IBM 260 or other more modern instruction set.

An extremely important and often overlooked class of algorithms are compiler algorithms (lex analysis, parsing and code generation). They actually represent a very efficient paradigm for solving wide class of tasks similar to compilation of the program. For example complex conversions from one format to another. Also any complex program usually has its own command language and knowledge of compiler algorithms can prevent authors from making typical for amateurs blunders (which are common in open source software). In a way Greenspun's tenth rule - Wikipedia, the free encyclopedia

Any sufficiently complicated C or Fortran program contains an ad hoc, informally-specified, bug-ridden, slow implementation of half of Common Lisp.

can be reformulated to:

Any sufficiently complicated contains an ad hoc, informally-specified, bug-ridden, slow implementation of half of a typical programming language interpreter.

Many authors writing about algorithms try to hide their lack of competence with abuse of mathematical symbolic. Excessive use of mathematics in presentation of algorithms is often counterproductive (verification fiasco can serve as a warning for all future generation; it buried such talented authors as E. Dijkstra, David Gries and many others). Please remember Famous Donald Knuth's citation about correctness proofs:

On March 22, 1977, as I was drafting Section 7.1 of The Art of Computer Programming, I read four papers by Peter van Emde Boas that turned out to be more appropriate for Chapter 8 than Chapter 7. I wrote a five-page memo entitled ``Notes on the van Emde Boas construction of priority deques: An instructive use of recursion,'' and sent it to Peter on March 29 (with copies also to Bob Tarjan and John Hopcroft). The final sentence was this: ``Beware of bugs in the above code; I have only proved it correct, not tried it.''

See also Softpanorama Computer Books Reviews / Algorithms

Dr. Nikolai Bezroukov

P.S: In order to save the bandwidth for humans (as opposed to robots ;-), previous years of Old News section were converted into separate pages.

|

|

Switchboard | ||||

| Latest | |||||

| Past week | |||||

| Past month | |||||

| 2005 | 2004 | 2003 | 2002 | 2001 | 2000 | 1999 |

Nov 21, 2019 | perlmonks.org

This code was written as a solution to the problem posed in Search for identical substrings . As best I can tell it runs about 3 million times faster than the original code.

The code reads a series of strings and searches them for the longest substring between any pair of strings. In the original problem there were 300 strings about 3K long each. A test set comprising 6 strings was used to test the code with the result given below.

Someone with Perl module creation and publication experience could wrap this up and publish it if they wish.

use strict; use warnings; use Time::HiRes; use List::Util qw(min max); my $allLCS = 1; my $subStrSize = 8; # Determines minimum match length. Should be a power of 2 # and less than half the minimum interesting match length. The larger this value # the faster the search runs. if (@ARGV != 1) { print "Finds longest matching substring between any pair of test strings\n"; print "the given file. Pairs of lines are expected with the first of a\n"; print "pair being the string name and the second the test string."; exit (1); } # Read in the strings my @strings; while () { chomp; my $strName = $_; $_ = ; chomp; push @strings, [$strName, $_]; } my $lastStr = @strings - 1; my @bestMatches = [(0, 0, 0, 0, 0)]; # Best match details my $longest = 0; # Best match length so far (unexpanded) my $startTime = [Time::HiRes::gettimeofday ()]; # Do the search for (0..$lastStr) { my $curStr = $_; my @subStrs; my $source = $strings[$curStr][1]; my $sourceName = $strings[$curStr][0]; for (my $i = 0; $i 0; push @localBests, [@test] if $dm >= 0; $offset = $test[3] + $test[4]; next if $test[4] 0; push @bestMatches, [@test]; } continue {++$offset;} } next if ! $allLCS; if (! @localBests) { print "Didn't find LCS for $sourceName and $targetName\n"; next; } for (@localBests) { my @curr = @$_; printf "%03d:%03d L[%4d] (%4d %4d)\n", $curr[0], $curr[1], $curr[4], $curr[2], $curr[3]; } } } print "Completed in " . Time::HiRes::tv_interval ($startTime) . "\n"; for (@bestMatches) { my @curr = @$_; printf "Best match: %s - %s. %d characters starting at %d and %d.\n", $strings[$curr[0]][0], $strings[$curr[1]][0], $curr[4], $curr[2], $curr[3]; } sub expandMatch { my ($index1, $index2, $str1Start, $str2Start, $matchLen) = @_; my $maxMatch = max (0, min ($str1Start, $subStrSize + 10, $str2Start)); my $matchStr1 = substr ($strings[$index1][1], $str1Start - $maxMatch, $maxMatch); my $matchStr2 = substr ($strings[$index2][1], $str2Start - $maxMatch, $maxMatch); ($matchStr1 ^ $matchStr2) =~ /\0*$/; my $adj = $+[0] - $-[0]; $matchLen += $adj; $str1Start -= $adj; $str2Start -= $adj; return ($index1, $index2, $str1Start, $str2Start, $matchLen); }Output using bioMan 's six string sample:

Completed in 0.010486 Best match: >string 1 - >string 3 . 1271 characters starting at 82 an + d 82. [download]

Oct 13, 2019 | www.quora.com

Eugene Miya , A friend/colleague. Sometimes driver. Other shared experiences. Updated Mar 22 2017 · Author has 11.2k answers and 7.9m answer views

He mostly writes in C today.

I can assure you he at least knows about Python. Guido's office at Dropbox is 1 -- 2 blocks by a backdoor gate from Don's house.

I would tend to doubt that he would use R (I've used S before as one of my stat packages). Don would probably write something for himself.

Don is not big on functional languages, so I would doubt either Haskell (sorry Paul) or LISP (but McCarthy lived just around the corner from Don; I used to drive him to meetings; actually, I've driven all 3 of us to meetings, and he got his wife an electric version of my car based on riding in my car (score one for friend's choices)). He does use emacs and he does write MLISP macros, but he believes in being closer to the hardware which is why he sticks with MMIX (and MIX) in his books.

Don't discount him learning the machine language of a given architecture.

I'm having dinner with Don and Jill and a dozen other mutual friends in 3 weeks or so (our quarterly dinner). I can ask him then, if I remember (either a calendar entry or at job). I try not to bother him with things like this. Don is well connected to the hacker community

Don's name was brought up at an undergrad architecture seminar today, but Don was not in the audience (an amazing audience; I took a photo for the collection of architects and other computer scientists in the audience (Hennessey and Patterson were talking)). I came close to biking by his house on my way back home.

We do have a mutual friend (actually, I introduced Don to my biology friend at Don's request) who arrives next week, and Don is my wine drinking proxy. So there is a chance I may see him sooner.

Steven de Rooij , Theoretical computer scientist Answered Mar 9, 2017 · Author has 4.6k answers and 7.7m answer views

Nice question :-)

Don Knuth would want to use something that’s low level, because details matter . So no Haskell; LISP is borderline. Perhaps if the Lisp machine ever had become a thing.

He’d want something with well-defined and simple semantics, so definitely no R. Python also contains quite a few strange ad hoc rules, especially in its OO and lambda features. Yes Python is easy to learn and it looks pretty, but Don doesn’t care about superficialities like that. He’d want a language whose version number is converging to a mathematical constant, which is also not in favor of R or Python.

What remains is C. Out of the five languages listed, my guess is Don would pick that one. But actually, his own old choice of Pascal suits him even better. I don’t think any languages have been invented since was written that score higher on the Knuthometer than Knuth’s own original pick.

And yes, I feel that this is actually a conclusion that bears some thinking about. 24.1k views ·

Dan Allen , I've been programming for 34 years now. Still not finished. Answered Mar 9, 2017 · Author has 4.5k answers and 1.8m answer views

In The Art of Computer Programming I think he'd do exactly what he did. He'd invent his own architecture and implement programs in an assembly language targeting that theoretical machine.

He did that for a reason because he wanted to reveal the detail of algorithms at the lowest level of detail which is machine level.

He didn't use any available languages at the time and I don't see why that would suit his purpose now. All the languages above are too high-level for his purposes.

Sep 07, 2019 | archive.computerhistory.org

Knuth: Yeah. That's absolutely true. I've got to get another thought out of my mind though. That is, early on in the TeX project I also had to do programming of a completely different type. I told you last week that this was my first real exercise in structured programming, which was one of Dijkstra's huge... That's one of the few breakthroughs in the history of computer science, in a way. He was actually responsible for maybe two of the ten that I know.

So I'm doing structured programming as I'm writing TeX. I'm trying to do it right, the way I should've been writing programs in the 60s. Then I also got this typesetting machine, which had, inside of it, a tiny 8080 chip or something. I'm not sure exactly. It was a Zilog, or some very early Intel chip. Way before the 386s. A little computer with 8-bit registers and a small number of things it could do. I had to write my own assembly language for this, because the existing software for writing programs for this little micro thing were so bad. I had to write actually thousands of lines of code for this, in order to control the typesetting. Inside the machine I had to control a stepper motor, and I had to accelerate it.

Every so often I had to give another [command] saying, "Okay, now take a step," and then continue downloading a font from the mainframe.

I had six levels of interrupts in this program. I remember talking to you at this time, saying, "Ed, I'm programming in assembly language for an 8-bit computer," and you said "Yeah, you've been doing the same thing and it's fun again."

You know, you'll remember. We'll undoubtedly talk more about that when I have my turn interviewing you in a week or so. This is another aspect of programming: that you also feel that you're in control and that there's not a black box separating you. It's not only the power, but it's the knowledge of what's going on; that nobody's hiding something. It's also this aspect of jumping levels of abstraction. In my opinion, the thing that computer scientists are best at is seeing things at many levels of detail: high level, intermediate levels, and lowest levels. I know if I'm adding 1 to a certain number, that this is getting me towards some big goal at the top. People enjoy most the things that they're good at. Here's a case where if you're working on a machine that has only this 8-bit capability, but in order to do this you have to go through levels, of not only that machine, but also to the next level up of the assembler, and then you have a simulator in which you can help debug your programs, and you have higher level languages that go through, and then you have the typesetting at the top. There are these six or seven levels all present at the same time. A computer scientist is in heaven in a situation like this.

Feigenbaum: Don, to get back, I want to ask you about that as part of the next question. You went back into programming in a really serious way. It took you, as I said before, ten years, not one year, and you didn't quit. As soon as you mastered one part of it, you went into Metafont, which is another big deal. To what extent were you doing that because you needed to, what I might call expose yourself to, or upgrade your skills in, the art that had emerged over the decade-and-a-half since you had done RUNCIBLE? And to what extent did you do it just because you were driven to be a programmer? You loved programming.

Knuth: Yeah. I think your hypothesis is good. It didn't occur to me at the time that I just had to program in order to be a happy man. Certainly I didn't find my other roles distasteful, except for fundraising. I enjoyed every aspect of being a professor except dealing with proposals, which I did my share of, but that was a necessary evil sort of in my own thinking, I guess. But the fact that now I'm still compelled to I wake up in the morning with an idea, and it makes my day to think of adding a couple of lines to my program. Gives me a real high. It must be the way poets feel, or musicians and so on, and other people, painters, whatever. Programming does that for me. It's certainly true. But the fact that I had to put so much time in it was not totally that, I'm sure, because it became a responsibility. It wasn't just for Phyllis and me, as it turned out. I started working on it at the AI lab, and people were looking at the output coming out of the machine and they would say, "Hey, Don, how did you do that?" Guy Steele was visiting from MIT that summer and he said, "Don, I want to port this to take it to MIT." I didn't have two users.

First I had 10, and then I had 100, and then I had 1000. Every time it went to another order of magnitude I had to change the system, because it would almost match their needs but then they would have very good suggestions as to something it wasn't covering. Then when it went to 10,000 and when it went to 100,000, the last stage was 10 years later when I made it friendly for the other alphabets of the world, where people have accented letters and Russian letters. <p>I had started out with only 7-bit codes. I had so many international users by that time, I saw that was a fundamental error. I started out with the idea that nobody would ever want to use a keyboard that could generate more than about 90 characters. It was going to be too complicated. But I was wrong. So it [TeX] was a burden as well, in the sense that I wanted to do a responsible job.

I had actually consciously planned an end-game that would take me four years to finish, and [then] not continue maintaining it and adding on, so that I could have something where I could say, "And now it's done and it's never going to change." I believe this is one aspect of software that, not for every system, but for TeX, it was vital that it became something that wouldn't be a moving target after while.

Feigenbaum: The books on TeX were a period. That is, you put a period down and you said, "This is it."

Sep 07, 2019 | archive.computerhistory.org

Dijkstra said he was proud to be a programmer. Unfortunately he changed his attitude completely, and I think he wrote his last computer program in the 1980s. At this conference I went to in 1967 about simulation language, Chris Strachey was going around asking everybody at the conference what was the last computer program you wrote. This was 1967. Some of the people said, "I've never written a computer program." Others would say, "Oh yeah, here's what I did last week." I asked Edsger this question when I visited him in Texas in the 90s and he said, "Don, I write programs now with pencil and paper, and I execute them in my head." He finds that a good enough discipline.

I think he was mistaken on that. He taught me a lot of things, but I really think that if he had continued... One of Dijkstra's greatest strengths was that he felt a strong sense of aesthetics, and he didn't want to compromise his notions of beauty. They were so intense that when he visited me in the 1960s, I had just come to Stanford. I remember the conversation we had. It was in the first apartment, our little rented house, before we had electricity in the house.

We were sitting there in the dark, and he was telling me how he had just learned about the specifications of the IBM System/360, and it made him so ill that his heart was actually starting to flutter.

He intensely disliked things that he didn't consider clean to work with. So I can see that he would have distaste for the languages that he had to work with on real computers. My reaction to that was to design my own language, and then make Pascal so that it would work well for me in those days. But his response was to do everything only intellectually.

So, programming.

I happened to look the other day. I wrote 35 programs in January, and 28 or 29 programs in February. These are small programs, but I have a compulsion. I love to write programs and put things into it. I think of a question that I want to answer, or I have part of my book where I want to present something. But I can't just present it by reading about it in a book. As I code it, it all becomes clear in my head. It's just the discipline. The fact that I have to translate my knowledge of this method into something that the machine is going to understand just forces me to make that crystal-clear in my head. Then I can explain it to somebody else infinitely better. The exposition is always better if I've implemented it, even though it's going to take me more time.

Sep 07, 2019 | archive.computerhistory.org

So I had a programming hat when I was outside of Cal Tech, and at Cal Tech I am a mathematician taking my grad studies. A startup company, called Green Tree Corporation because green is the color of money, came to me and said, "Don, name your price. Write compilers for us and we will take care of finding computers for you to debug them on, and assistance for you to do your work. Name your price." I said, "Oh, okay. $100,000.", assuming that this was In that era this was not quite at Bill Gate's level today, but it was sort of out there.

The guy didn't blink. He said, "Okay." I didn't really blink either. I said, "Well, I'm not going to do it. I just thought this was an impossible number."

At that point I made the decision in my life that I wasn't going to optimize my income; I was really going to do what I thought I could do for well, I don't know. If you ask me what makes me most happy, number one would be somebody saying "I learned something from you". Number two would be somebody saying "I used your software". But number infinity would be Well, no. Number infinity minus one would be "I bought your book". It's not as good as "I read your book", you know. Then there is "I bought your software"; that was not in my own personal value. So that decision came up. I kept up with the literature about compilers. The Communications of the ACM was where the action was. I also worked with people on trying to debug the ALGOL language, which had problems with it. I published a few papers, like "The Remaining Trouble Spots in ALGOL 60" was one of the papers that I worked on. I chaired a committee called "Smallgol" which was to find a subset of ALGOL that would work on small computers. I was active in programming languages.

Sep 07, 2019 | conservancy.umn.edu

Frana: You have made the comment several times that maybe 1 in 50 people have the "computer scientist's mind." Knuth: Yes. Frana: I am wondering if a large number of those people are trained professional librarians? [laughter] There is some strangeness there. But can you pinpoint what it is about the mind of the computer scientist that is....

Knuth: That is different?

Frana: What are the characteristics?

Knuth: Two things: one is the ability to deal with non-uniform structure, where you have case one, case two, case three, case four. Or that you have a model of something where the first component is integer, the next component is a Boolean, and the next component is a real number, or something like that, you know, non-uniform structure. To deal fluently with those kinds of entities, which is not typical in other branches of mathematics, is critical. And the other characteristic ability is to shift levels quickly, from looking at something in the large to looking at something in the small, and many levels in between, jumping from one level of abstraction to another. You know that, when you are adding one to some number, that you are actually getting closer to some overarching goal. These skills, being able to deal with nonuniform objects and to see through things from the top level to the bottom level, these are very essential to computer programming, it seems to me. But maybe I am fooling myself because I am too close to it.

Frana: It is the hardest thing to really understand that which you are existing within.

Knuth: Yes.

Sep 07, 2019 | conservancy.umn.edu

Knuth: Well, certainly it seems the way things are going. You take any particular subject that you are interested in and you try to see if somebody with an American high school education has learned it, and you will be appalled. You know, Jesse Jackson thinks that students know nothing about political science, and I am sure the chemists think that students don't know chemistry, and so on. But somehow they get it when they have to later. But I would say certainly the students now have been getting more of a superficial idea of mathematics than they used to. We have to do remedial stuff at Stanford that we didn't have to do thirty years ago.

Frana: Gio [Wiederhold] said much the same thing to me.

Knuth: The most scandalous thing was that Stanford's course in linear algebra could not get to eigenvalues because the students didn't know about complex numbers. Now every course at Stanford that takes linear algebra as a prerequisite does so because they want the students to know about eigenvalues. But here at Stanford, with one of the highest admission standards of any university, our students don't know complex numbers. So we have to teach them that when they get to college. Yes, this is definitely a breakdown.

Frana: Was your mathematics training in high school particularly good, or was it that you spent a lot of time actually doing problems?

Knuth: No, my mathematics training in high school was not good. My teachers could not answer my questions and so I decided I'd go into physics. I mean, I had played with mathematics in high school. I did a lot of work drawing graphs and plotting points and I used pi as the radix of a number system, and explored what the world would be like if you wanted to do logarithms and you had a number system based on pi. And I had played with stuff like that. But my teachers couldn't answer questions that I had.

... ... ... Frana: Do you have an answer? Are American students different today? In one of your interviews you discuss the problem of creativity versus gross absorption of knowledge.

Knuth: Well, that is part of it. Today we have mostly a sound byte culture, this lack of attention span and trying to learn how to pass exams. Frana: Yes,

Sep 07, 2019 | conservancy.umn.edu

Knuth: I can be a writer, who tries to organize other people's ideas into some kind of a more coherent structure so that it is easier to put things together. I can see that I could be viewed as a scholar that does his best to check out sources of material, so that people get credit where it is due. And to check facts over, not just to look at the abstract of something, but to see what the methods were that did it and to fill in holes if necessary. I look at my role as being able to understand the motivations and terminology of one group of specialists and boil it down to a certain extent so that people in other parts of the field can use it. I try to listen to the theoreticians and select what they have done that is important to the programmer on the street; to remove technical jargon when possible.

But I have never been good at any kind of a role that would be making policy, or advising people on strategies, or what to do. I have always been best at refining things that are there and bringing order out of chaos. I sometimes raise new ideas that might stimulate people, but not really in a way that would be in any way controlling the flow. The only time I have ever advocated something strongly was with literate programming; but I do this always with the caveat that it works for me, not knowing if it would work for anybody else.

When I work with a system that I have created myself, I can always change it if I don't like it. But everybody who works with my system has to work with what I give them. So I am not able to judge my own stuff impartially. So anyway, I have always felt bad about if anyone says, 'Don, please forecast the future,'...

Sep 06, 2019 | archive.computerhistory.org

...I showed the second version of this design to two of my graduate students, and I said, "Okay, implement this, please, this summer. That's your summer job." I thought I had specified a language. I had to go away. I spent several weeks in China during the summer of 1977, and I had various other obligations. I assumed that when I got back from my summer trips, I would be able to play around with TeX and refine it a little bit. To my amazement, the students, who were outstanding students, had not competed [it]. They had a system that was able to do about three lines of TeX. I thought, "My goodness, what's going on? I thought these were good students." Well afterwards I changed my attitude to saying, "Boy, they accomplished a miracle."

Because going from my specification, which I thought was complete, they really had an impossible task, and they had succeeded wonderfully with it. These students, by the way, [were] Michael Plass, who has gone on to be the brains behind almost all of Xerox's Docutech software and all kind of things that are inside of typesetting devices now, and Frank Liang, one of the key people for Microsoft Word.

He did important mathematical things as well as his hyphenation methods which are quite used in all languages now. These guys were actually doing great work, but I was amazed that they couldn't do what I thought was just sort of a routine task. Then I became a programmer in earnest, where I had to do it. The reason is when you're doing programming, you have to explain something to a computer, which is dumb.

When you're writing a document for a human being to understand, the human being will look at it and nod his head and say, "Yeah, this makes sense." But then there's all kinds of ambiguities and vagueness that you don't realize until you try to put it into a computer. Then all of a sudden, almost every five minutes as you're writing the code, a question comes up that wasn't addressed in the specification. "What if this combination occurs?"

It just didn't occur to the person writing the design specification. When you're faced with implementation, a person who has been delegated this job of working from a design would have to say, "Well hmm, I don't know what the designer meant by this."

If I hadn't been in China they would've scheduled an appointment with me and stopped their programming for a day. Then they would come in at the designated hour and we would talk. They would take 15 minutes to present to me what the problem was, and then I would think about it for a while, and then I'd say, "Oh yeah, do this. " Then they would go home and they would write code for another five minutes and they'd have to schedule another appointment.

I'm probably exaggerating, but this is why I think Bob Floyd's Chiron compiler never got going. Bob worked many years on a beautiful idea for a programming language, where he designed a language called Chiron, but he never touched the programming himself. I think this was actually the reason that he had trouble with that project, because it's so hard to do the design unless you're faced with the low-level aspects of it, explaining it to a machine instead of to another person.

Forsythe, I think it was, who said, "People have said traditionally that you don't understand something until you've taught it in a class. The truth is you don't really understand something until you've taught it to a computer, until you've been able to program it." At this level, programming was absolutely important

Sep 06, 2019 | www-cs-faculty.stanford.edu

Having just celebrated my 10000th birthday (in base three), I'm operating a little bit in history mode. Every once in awhile, people have asked me to record some of my memories of past events --- I guess because I've been fortunate enough to live at some pretty exciting times, computersciencewise. These after-the-fact recollections aren't really as reliable as contemporary records; but they do at least show what I think I remember. And the stories are interesting, because they involve lots of other people.

So, before these instances of oral history themselves begin to fade from my memory, I've decided to record some links to several that I still know about:

- Interview by Philip L Frana at the Charles Babbage Institute, November 2001

- transcript of OH 332

audio file (2:00:33)- Interviews commissioned by Peoples Archive, taped in March 2006

- playlist for 97 videos (about 2--8 minutes each)

- Interview by Ed Feigenbaum at the Computer History Museum, March 2007

- Part 1 (3:07:25) Part 2 (4:02:46)

(transcript)- Interview by Susan Schofield for the Stanford Historical Society, May 2018

- (audio files, 2:20:30 and 2:14:25; transcript)

- Interview by David Brock and Hansen Hsu about the computer programs that I wrote during the 1950s, July 2018

- video (1:30:0)

(texts of the actual programs)Some extended interviews, not available online, have also been published in books, notably in Chapters 7--17 of Companion to the Papers of Donald Knuth (conversations with Dikran Karagueuzian in the summer of 1996), and in two books by Edgar G. Daylight, The Essential Knuth (2013), Algorithmic Barriers Falling (2014).

Sep 06, 2019 | conservancy.umn.edu

Knuth: No, I stopped going to conferences. It was too discouraging. Computer programming keeps getting harder because more stuff is discovered. I can cope with learning about one new technique per day, but I can't take ten in a day all at once. So conferences are depressing; it means I have so much more work to do. If I hide myself from the truth I am much happier.

Sep 06, 2019 | archive.computerhistory.org

Knuth: This is, of course, really the story of my life, because I hope to live long enough to finish it. But I may not, because it's turned out to be such a huge project. I got married in the summer of 1961, after my first year of graduate school. My wife finished college, and I could use the money I had made -- the $5000 on the compiler -- to finance a trip to Europe for our honeymoon.

We had four months of wedded bliss in Southern California, and then a man from Addison-Wesley came to visit me and said "Don, we would like you to write a book about how to write compilers."

The more I thought about it, I decided "Oh yes, I've got this book inside of me."

I sketched out that day -- I still have the sheet of tablet paper on which I wrote -- I sketched out 12 chapters that I thought ought to be in such a book. I told Jill, my wife, "I think I'm going to write a book."

As I say, we had four months of bliss, because the rest of our marriage has all been devoted to this book. Well, we still have had happiness. But really, I wake up every morning and I still haven't finished the book. So I try to -- I have to -- organize the rest of my life around this, as one main unifying theme. The book was supposed to be about how to write a compiler. They had heard about me from one of their editorial advisors, that I knew something about how to do this. The idea appealed to me for two main reasons. One is that I did enjoy writing. In high school I had been editor of the weekly paper. In college I was editor of the science magazine, and I worked on the campus paper as copy editor. And, as I told you, I wrote the manual for that compiler that we wrote. I enjoyed writing, number one.

Also, Addison-Wesley was the people who were asking me to do this book; my favorite textbooks had been published by Addison Wesley. They had done the books that I loved the most as a student. For them to come to me and say, "Would you write a book for us?", and here I am just a secondyear gradate student -- this was a thrill.

Another very important reason at the time was that I knew that there was a great need for a book about compilers, because there were a lot of people who even in 1962 -- this was January of 1962 -- were starting to rediscover the wheel. The knowledge was out there, but it hadn't been explained. The people who had discovered it, though, were scattered all over the world and they didn't know of each other's work either, very much. I had been following it. Everybody I could think of who could write a book about compilers, as far as I could see, they would only give a piece of the fabric. They would slant it to their own view of it. There might be four people who could write about it, but they would write four different books. I could present all four of their viewpoints in what I would think was a balanced way, without any axe to grind, without slanting it towards something that I thought would be misleading to the compiler writer for the future. I considered myself as a journalist, essentially. I could be the expositor, the tech writer, that could do the job that was needed in order to take the work of these brilliant people and make it accessible to the world. That was my motivation. Now, I didn't have much time to spend on it then, I just had this page of paper with 12 chapter headings on it. That's all I could do while I'm a consultant at Burroughs and doing my graduate work. I signed a contract, but they said "We know it'll take you a while." I didn't really begin to have much time to work on it until 1963, my third year of graduate school, as I'm already finishing up on my thesis. In the summer of '62, I guess I should mention, I wrote another compiler. This was for Univac; it was a FORTRAN compiler. I spent the summer, I sold my soul to the devil, I guess you say, for three months in the summer of 1962 to write a FORTRAN compiler. I believe that the salary for that was $15,000, which was much more than an assistant professor. I think assistant professors were getting eight or nine thousand in those days.

Feigenbaum: Well, when I started in 1960 at [University of California] Berkeley, I was getting $7,600 for the nine-month year.

Knuth: Knuth: Yeah, so you see it. I got $15,000 for a summer job in 1962 writing a FORTRAN compiler. One day during that summer I was writing the part of the compiler that looks up identifiers in a hash table. The method that we used is called linear probing. Basically you take the variable name that you want to look up, you scramble it, like you square it or something like this, and that gives you a number between one and, well in those days it would have been between 1 and 1000, and then you look there. If you find it, good; if you don't find it, go to the next place and keep on going until you either get to an empty place, or you find the number you're looking for. It's called linear probing. There was a rumor that one of Professor Feller's students at Princeton had tried to figure out how fast linear probing works and was unable to succeed. This was a new thing for me. It was a case where I was doing programming, but I also had a mathematical problem that would go into my other [job]. My winter job was being a math student, my summer job was writing compilers. There was no mix. These worlds did not intersect at all in my life at that point. So I spent one day during the summer while writing the compiler looking at the mathematics of how fast does linear probing work. I got lucky, and I solved the problem. I figured out some math, and I kept two or three sheets of paper with me and I typed it up. ["Notes on 'Open' Addressing', 7/22/63] I guess that's on the internet now, because this became really the genesis of my main research work, which developed not to be working on compilers, but to be working on what they call analysis of algorithms, which is, have a computer method and find out how good is it quantitatively. I can say, if I got so many things to look up in the table, how long is linear probing going to take. It dawned on me that this was just one of many algorithms that would be important, and each one would lead to a fascinating mathematical problem. This was easily a good lifetime source of rich problems to work on. Here I am then, in the middle of 1962, writing this FORTRAN compiler, and I had one day to do the research and mathematics that changed my life for my future research trends. But now I've gotten off the topic of what your original question was.

Feigenbaum: We were talking about sort of the.. You talked about the embryo of The Art of Computing. The compiler book morphed into The Art of Computer Programming, which became a seven-volume plan.

Knuth: Exactly. Anyway, I'm working on a compiler and I'm thinking about this. But now I'm starting, after I finish this summer job, then I began to do things that were going to be relating to the book. One of the things I knew I had to have in the book was an artificial machine, because I'm writing a compiler book but machines are changing faster than I can write books. I have to have a machine that I'm totally in control of. I invented this machine called MIX, which was typical of the computers of 1962.

In 1963 I wrote a simulator for MIX so that I could write sample programs for it, and I taught a class at Caltech on how to write programs in assembly language for this hypothetical computer. Then I started writing the parts that dealt with sorting problems and searching problems, like the linear probing idea. I began to write those parts, which are part of a compiler, of the book. I had several hundred pages of notes gathering for those chapters for The Art of Computer Programming. Before I graduated, I've already done quite a bit of writing on The Art of Computer Programming.

I met George Forsythe about this time. George was the man who inspired both of us [Knuth and Feigenbaum] to come to Stanford during the '60s. George came down to Southern California for a talk, and he said, "Come up to Stanford. How about joining our faculty?" I said "Oh no, I can't do that. I just got married, and I've got to finish this book first." I said, "I think I'll finish the book next year, and then I can come up [and] start thinking about the rest of my life, but I want to get my book done before my son is born." Well, John is now 40-some years old and I'm not done with the book. Part of my lack of expertise is any good estimation procedure as to how long projects are going to take. I way underestimated how much needed to be written about in this book. Anyway, I started writing the manuscript, and I went merrily along writing pages of things that I thought really needed to be said. Of course, it didn't take long before I had started to discover a few things of my own that weren't in any of the existing literature. I did have an axe to grind. The message that I was presenting was in fact not going to be unbiased at all. It was going to be based on my own particular slant on stuff, and that original reason for why I should write the book became impossible to sustain. But the fact that I had worked on linear probing and solved the problem gave me a new unifying theme for the book. I was going to base it around this idea of analyzing algorithms, and have some quantitative ideas about how good methods were. Not just that they worked, but that they worked well: this method worked 3 times better than this method, or 3.1 times better than this method. Also, at this time I was learning mathematical techniques that I had never been taught in school. I found they were out there, but they just hadn't been emphasized openly, about how to solve problems of this kind.

So my book would also present a different kind of mathematics than was common in the curriculum at the time, that was very relevant to analysis of algorithm. I went to the publishers, I went to Addison Wesley, and said "How about changing the title of the book from 'The Art of Computer Programming' to 'The Analysis of Algorithms'." They said that will never sell; their focus group couldn't buy that one. I'm glad they stuck to the original title, although I'm also glad to see that several books have now come out called "The Analysis of Algorithms", 20 years down the line.

But in those days, The Art of Computer Programming was very important because I'm thinking of the aesthetical: the whole question of writing programs as something that has artistic aspects in all senses of the word. The one idea is "art" which means artificial, and the other "art" means fine art. All these are long stories, but I've got to cover it fairly quickly.

I've got The Art of Computer Programming started out, and I'm working on my 12 chapters. I finish a rough draft of all 12 chapters by, I think it was like 1965. I've got 3,000 pages of notes, including a very good example of what you mentioned about seeing holes in the fabric. One of the most important chapters in the book is parsing: going from somebody's algebraic formula and figuring out the structure of the formula. Just the way I had done in seventh grade finding the structure of English sentences, I had to do this with mathematical sentences.

Chapter ten is all about parsing of context-free language, [which] is what we called it at the time. I covered what people had published about context-free languages and parsing. I got to the end of the chapter and I said, well, you can combine these ideas and these ideas, and all of a sudden you get a unifying thing which goes all the way to the limit. These other ideas had sort of gone partway there. They would say "Oh, if a grammar satisfies this condition, I can do it efficiently." "If a grammar satisfies this condition, I can do it efficiently." But now, all of a sudden, I saw there was a way to say I can find the most general condition that can be done efficiently without looking ahead to the end of the sentence. That you could make a decision on the fly, reading from left to right, about the structure of the thing. That was just a natural outgrowth of seeing the different pieces of the fabric that other people had put together, and writing it into a chapter for the first time. But I felt that this general concept, well, I didn't feel that I had surrounded the concept. I knew that I had it, and I could prove it, and I could check it, but I couldn't really intuit it all in my head. I knew it was right, but it was too hard for me, really, to explain it well.

So I didn't put in The Art of Computer Programming. I thought it was beyond the scope of my book. Textbooks don't have to cover everything when you get to the harder things; then you have to go to the literature. My idea at that time [is] I'm writing this book and I'm thinking it's going to be published very soon, so any little things I discover and put in the book I didn't bother to write a paper and publish in the journal because I figure it'll be in my book pretty soon anyway. Computer science is changing so fast, my book is bound to be obsolete.

It takes a year for it to go through editing, and people drawing the illustrations, and then they have to print it and bind it and so on. I have to be a little bit ahead of the state-of-the-art if my book isn't going to be obsolete when it comes out. So I kept most of the stuff to myself that I had, these little ideas I had been coming up with. But when I got to this idea of left-to-right parsing, I said "Well here's something I don't really understand very well. I'll publish this, let other people figure out what it is, and then they can tell me what I should have said." I published that paper I believe in 1965, at the end of finishing my draft of the chapter, which didn't get as far as that story, LR(k). Well now, textbooks of computer science start with LR(k) and take off from there. But I want to give you an idea of

Dec 27, 2018 | en.wikipedia.org

Comb sort is a relatively simple sorting algorithm originally designed by Włodzimierz Dobosiewicz in 1980. [1] [2] Later it was rediscovered by Stephen Lacey and Richard Box in 1991. [3] Comb sort improves on bubble sort . Contents

Algorithm [ edit ]The basic idea is to eliminate turtles , or small values near the end of the list, since in a bubble sort these slow the sorting down tremendously. Rabbits , large values around the beginning of the list, do not pose a problem in bubble sort.

In bubble sort , when any two elements are compared, they always have a gap (distance from each other) of 1. The basic idea of comb sort is that the gap can be much more than 1. The inner loop of bubble sort, which does the actual swap , is modified such that gap between swapped elements goes down (for each iteration of outer loop) in steps of a "shrink factor" k : [ n / k , n / k 2 , n / k 3 , ..., 1 ].

The gap starts out as the length of the list n being sorted divided by the shrink factor k (generally 1.3; see below) and one pass of the aforementioned modified bubble sort is applied with that gap. Then the gap is divided by the shrink factor again, the list is sorted with this new gap, and the process repeats until the gap is 1. At this point, comb sort continues using a gap of 1 until the list is fully sorted. The final stage of the sort is thus equivalent to a bubble sort, but by this time most turtles have been dealt with, so a bubble sort will be efficient.

The shrink factor has a great effect on the efficiency of comb sort. k = 1.3 has been suggested as an ideal shrink factor by the authors of the original article after empirical testing on over 200,000 random lists. A value too small slows the algorithm down by making unnecessarily many comparisons, whereas a value too large fails to effectively deal with turtles, making it require many passes with 1 gap size.

The pattern of repeated sorting passes with decreasing gaps is similar to Shellsort , but in Shellsort the array is sorted completely each pass before going on to the next-smallest gap. Comb sort's passes do not completely sort the elements. This is the reason that Shellsort gap sequences have a larger optimal shrink factor of about 2.2.

Pseudocode

function combsort(array input) gap := input.size // Initialize gap size shrink := 1.3 // Set the gap shrink factor sorted := false loop while sorted = false // Update the gap value for a next comb gap := floor(gap / shrink) if gap ≤ 1 gap := 1 sorted := true // If there are no swaps this pass, we are done end if // A single "comb" over the input list i := 0 loop while i + gap < input.size // See Shell sort for a similar idea if input[i] > input[i+gap] swap(input[i], input[i+gap]) sorted := false // If this assignment never happens within the loop, // then there have been no swaps and the list is sorted. end if i := i + 1 end loop end loop end function

Dec 25, 2018 | news.ycombinator.com

Slightly Skeptical View on Sorting Algorithms ( softpanorama.org ) 17 points by nkurz on Oct 14, 2014 | hide | past | web | favorite | 12 comments

flebron on Oct 14, 2014 [-]

>Please note that O matters. Even if both algorithms belong to O(n log n) class, algorithm for which O=1 is 100 times faster then algorithm for which O=100.Wait, what? O = 100? That's just... not how it works.

nightcracker on Oct 14, 2014 [-]

I think they meant to illustrate that O(100) == O(1), despite a runtime of 100 being a hundred times slower than 1.

parados on Oct 14, 2014 [-]

I think what the author meant was that the elapsed time to sort: t = k * O(f(n)). So given two algorithms that have the same O(f(n)) then the smaller the k, the faster the sort.

mqsiuser on Oct 14, 2014 [-]

> Among complex algorithms Mergesort is stable> Among non-stable algorithms [...] quicksort [...]

QuickSort can be implemented so that it is stable: http://www.mqseries.net/phpBB2/viewtopic.php?p=273722&highli... (I am author, AMA)

Why is gnu-core-utils-sort implemented as mergesort (also in place, but slower) ?

Edit: And sorry: In-Place matters: Quicksort is fastest AND uses least memory.

Who the heck can say sth about the imput (that it may be like "pre-sorted" ?!)

wffurr on Oct 14, 2014 [-]

>> Who the heck can say sth about the imput (that it may be like "pre-sorted" ?!)Lots of real world (as opposed to synthetic random test data) may be already ordered or contain ordered subsequences. One wants to run a sorting algorithm to guarantee the data is sorted, and thus performance on pre-sorted data is important.

This is why Timsort is the default sort in Python and Java. http://en.m.wikipedia.org/wiki/Timsort

It is the worst case performance for quick sort.

abetusk on Oct 14, 2014 [-]

As far as I know, Quicksort cannot be implemented to be stable without an auxiliary array. So implementing Quicksort to be stable destroys the in-place feature.If you want something in-place and stable, you'll have to use something like WikiSort [1] or GrailSort [2].

mqsiuser on Oct 14, 2014 [-]

> Quicksort cannot be implemented to be stable without an auxiliary arrayOkay, you need an additional array (I am using a separate array, the "result array") [1]: But that doesn't matter, since the additional array can just grow (while the partitions/other arrays shrink).

Though my implementation is not cache-aware, which is very interesting and pretty relevant for performance.

[1] Actually I am using a linked tree data structure: "In-place"... which IS HIGHLY relevant: It can occur that the input data is large ((already) filling up (almost) all RAM) and these programs ("Execution Groups") terminate "the old way", so just 'abend'.

And hence it stands: By the way I have proven that you can implement QuickSort STABLE AND IN-PLACE

Thank you :) and fix you wording, when saying "Quicksort is..."

TheLoneWolfling on Oct 14, 2014 [-]

The problem with radix sort is the same problem in general with big-O notation:A factor of log(n) (or less) difference can often be overwhelmed for all practical inputs by a constant factor.

sika_grr on Oct 14, 2014 [-]

No. For radix sort this constant factor is quite low, so it outperforms std::sort in most cases.Try sorting a million integers, my results are: std::sort: 60 ms; radix-sort (LSB, hand coded, less than 20 lines): 11 ms. It gets even better when you mix MSB with LSB for better locality.

No, there are no problems with doubles or negative integers. For sizeof(key_value)<=16 B (8 for key, 8 for pointer), radix is the best sort on desktop computers.

sika_grr on Oct 14, 2014 [-]

Please note that radix sort is easy to implement to work correctly with doubles, Table 1 is misleading.

whoisjuan on Oct 14, 2014 [-]

timsort?

cbd1984 on Oct 14, 2014 [-]

It was invented by Tim Peters for Python, and has been the default there since 2.3. The Java runtime uses it to sort arrays of non-primitive type (that is, arrays of values that can be treated as Objects).http://en.wikipedia.org/wiki/Timsort

OpenJDK implementation. Good notes in the comments:

http://cr.openjdk.java.net/~martin/webrevs/openjdk7/timsort/...

Dec 04, 2016 | ask.slashdot.org

EditorDavidIn 1962, 24-year-old Donald Knuth began writing The Art of Computer Programming, publishing three volumes by 1973, with volume 4 arriving in 2005. (Volume 4A appeared in 2011 , with new paperback fascicles planned for every two years, and fascicle 6, "Satisfiability," arriving last December).

"You should definitely send me a resume if you can read the whole thing," Bill Gates once said, in a column where he described working through the book . "If somebody is so brash that they think they know everything, Knuth will help them understand that the world is deep and complicated."

But now long-time Slashdot reader Qbertino has a question:

I've had The Art of Computer Programming on my book-buying list for just about two decades now and I'm still torn...about actually getting it. I sometimes believe I would mutate into some programming demi-god if I actually worked through this beast, but maybe I'm just fooling myself...

Have any of you worked through or with TAOCP or are you perhaps working through it? And is it worthwhile? I mean not just for bragging rights. And how long can it reasonably take? A few years?

Share your answers and experiences in the comments. Have you read The Art of Computer Programming ?

Jun 13, 2018 | stackoverflow.com

David Holm ,Oct 15, 2008 at 15:08

You can use IDA Pro by Hex-Rays . You will usually not get good C++ out of a binary unless you compiled in debugging information. Prepare to spend a lot of manual labor reversing the code.If you didn't strip the binaries there is some hope as IDA Pro can produce C-alike code for you to work with. Usually it is very rough though, at least when I used it a couple of years ago.

davenpcj ,May 5, 2012 at

To clarify, IDA will only give the disassembly. There's an add-on to it called Hex-Rays that will decompile the rest of the way into C/C++ source, to the extent that's possible. – davenpcj May 5 '12 atDustin Getz ,Oct 15, 2008 at 15:15

information is discarded in the compiling process. Even if a decompiler could produce the logical equivalent code with classes and everything (it probably can't), the self-documenting part is gone in optimized release code. No variable names, no routine names, no class names - just addresses.Darshan Chaudhary ,Aug 14, 2017 at 17:36

"the soul" of the program is gone, just an empty shell of it's former self..." – Darshan Chaudhary Aug 14 '17 at 17:36,

Yes, but none of them will manage to produce readable enough code to worth the effort. You will spend more time trying to read the decompiled source with assembler blocks inside, than rewriting your old app from scratch.

May 22, 2018 | cs.brandeis.edu

The Data Compression Conference (DCC) is an international forum for current work on data compression and related applications.

- Compression of specific types of data (text, images, video, etc.).

- Compression in networking, communications, and storage.

- Applications to bioinformatics.

- Applications to mobile computing.

- Applications to information retrieval.

- Computational issues for compression related applications.

- Inpainting-based compression, perceptual coding.

- Compressed data structures.

- Quantization theory, and vector quantization (VQ).

- Joint source-channel coding.

- Compression related standards.

Both theoretical and experimental work are of interest. More >

Contact UsIf you would like to be added to, or removed from the DCC email list, please contact us.

May 21, 2018 | superuser.com

I find myself having to compress a number of very large files (80-ish GB), and I am surprised at the (lack of) speed my system is exhibiting. I get about 500 MB / min conversion speed; using

top, I seem to be using a single CPU at approximately 100%.I am pretty sure it's not (just) disk access speed, since creating a

tarfile (that's how the 80G file was created) took just a few minutes (maybe 5 or 10), but after more than 2 hours my simple gzip command is still not done.In summary:

tar -cvf myStuff.tar myDir/*Took <5 minutes to create an 87 G tar file

gzip myStuff.tarTook two hours and 10 minutes, creating a 55G zip file.

My question: Is this normal? Are there certain options in

gzipto speed things up?Would it be faster to concatenate the commands and use

tar -cvfz?I saw reference to

pigz- Parallel Implementation of GZip - but unfortunatly I cannot install software on the machine I am using, so that is not an option for me. See for example this earlier question .I am intending to try some of these options myself and time them - but it is quite likely that I will not hit "the magic combination" of options. I am hoping that someone on this site knows the right trick to speed things up.

When I have the results of other trials available I will update this question - but if anyone has a particularly good trick available, I would really appreciate it. Maybe the gzip just takes more processing time than I realized...

UPDATE

As promised, I tried the tricks suggested below: change the amount of compression, and change the destination of the file. I got the following results for a tar that was about 4.1GB:

flag user system size sameDisk -1 189.77s 13.64s 2.786G +7.2s -2 197.20s 12.88s 2.776G +3.4s -3 207.03s 10.49s 2.739G +1.2s -4 223.28s 13.73s 2.735G +0.9s -5 237.79s 9.28s 2.704G -0.4s -6 271.69s 14.56s 2.700G +1.4s -7 307.70s 10.97s 2.699G +0.9s -8 528.66s 10.51s 2.698G -6.3s -9 722.61s 12.24s 2.698G -4.0sSo yes, changing the flag from the default

-6to the fastest-1gives me a 30% speedup, with (for my data) hardly any change to the size of the zip file. Whether I'm using the same disk or another one makes essentially no difference (I would have to run this multiple times to get any statistical significance).If anyone is interested, I generated these timing benchmarks using the following two scripts:

#!/bin/bash # compare compression speeds with different options sameDisk='./' otherDisk='/tmp/' sourceDir='/dirToCompress' logFile='./timerOutput' rm $logFile for i in {1..9} do /usr/bin/time -a --output=timerOutput ./compressWith $sourceDir $i $sameDisk $logFile do /usr/bin/time -a --output=timerOutput ./compressWith $sourceDir $i $otherDisk $logFile doneAnd the second script (

compressWith):#!/bin/bash # use: compressWith sourceDir compressionFlag destinationDisk logFile echo "compressing $1 to $3 with setting $2" >> $4 tar -c $1 | gzip -$2 > $3test-$2.tar.gzThree things to note:

- Using

/usr/bin/timerather thantime, since the built-in command ofbashhas many fewer options than the GNU command- I did not bother using the

--formatoption although that would make the log file easier to read- I used a script-in-a-script since

timeseemed to operate only on the first command in a piped sequence (so I made it look like a single command...).With all this learnt, my conclusions are

- Speed things up with the

-1flag (accepted answer)- Much more time is spend compressing the data than reading from disk

- Invest in faster compression software (

pigzseems like a good choice).Thanks everyone who helped me learn all this! You can change the speed of gzip using

--fast--bestor-#where # is a number between 1 and 9 (1 is fastest but less compression, 9 is slowest but more compression). By default gzip runs at level 6.The reason tar takes so little time compared to gzip is that there's very little computational overhead in copying your files into a single file (which is what it does). gzip on the otherhand, is actually using compression algorithms to shrink the tar file.The problem is that gzip is constrained (as you discovered) to a single thread.

Enter pigz , which can use multiple threads to perform the compression. An example of how to use this would be:

tar -c --use-compress-program=pigz -f tar.file dir_to_zipThere is a nice succint summary of the --use-compress-program option over on a sister site .

Thanks for your answer and links. I actually mentioned pigz in the question. –

David Spillett 21.6k 39 62

I seem to be using a single CPU at approximately 100%.

That implies there isn't an I/O performance issue but that the compression is only using one thread (which will be the case with gzip).

If you manage to achieve the access/agreement needed to get other tools installed, then 7zip also supports multiple threads to take advantage of multi core CPUs, though I'm not sure if that extends to the gzip format as well as its own.

If you are stuck to using just gzip for the time being and have multiple files to compress, you could try compressing them individually - that way you'll use more of that multi-core CPU by running more than one process in parallel.

Be careful not to overdo it though because as soon as you get anywhere near the capacity of your I/O subsystem performance will drop off precipitously (to lower than if you were using one process/thread) as the latency of head movements becomes a significant bottleneck.

Thanks for your input. You gave me an idea (for which you get an upvote): since I have multiple archives to create I can just write the individual commands followed by a

&- then let the system take care of it from there. Each will run on its own processor, and since I spend far more time on compression than on I/O, it will take the same time to do one as to do all 10 of them. So I get "multi core performance" from an executable that's single threaded... –

May 04, 2018 | unix.stackexchange.com

のbるしtyぱんky ,Jan 6, 2014 at 18:39

More and moretararchives use thexzformat based on LZMA2 for compression instead of the traditionalbzip2(bz2)compression. In fact kernel.org made a late " Good-bye bzip2 " announcement, 27th Dec. 2013 , indicating kernel sources would from this point on be released in both tar.gz and tar.xz format - and on the main page of the website what's directly offered is intar.xz.Are there any specific reasons explaining why this is happening and what is the relevance of

gzipin this context?> ,

For distributing archives over the Internet, the following things are generally a priority:

- Compression ratio (i.e., how small the compressor makes the data);

- Decompression time (CPU requirements);

- Decompression memory requirements; and

- Compatibility (how wide-spread the decompression program is)

Compression memory & CPU requirements aren't very important, because you can use a large fast machine for that, and you only have to do it once.

Compared to bzip2, xz has a better compression ratio and lower (better) decompression time. It, however -- at the compression settings typically used -- requires more memory to decompress [1] and is somewhat less widespread. Gzip uses less memory than either.

So, both gzip and xz format archives are posted, allowing you to pick:

- Need to decompress on a machine with very limited memory (<32 MB): gzip. Given, not very likely when talking about kernel sources.

- Need to decompress minimal tools available: gzip

- Want to save download time and/or bandwidth: xz

There isn't really a realistic combination of factors that'd get you to pick bzip2. So its being phased out.

I looked at compression comparisons in a blog post . I didn't attempt to replicate the results, and I suspect some of it has changed (mostly, I expect

xzhas improved, as its the newest.)(There are some specific scenarios where a good bzip2 implementation may be preferable to xz: bzip2 can compresses a file with lots of zeros and genome DNA sequences better than xz. Newer versions of xz now have an (optional) block mode which allows data recovery after the point of corruption and parallel compression and [in theory] decompression. Previously, only bzip2 offered these. [2] However none of these are relevant for kernel distribution)

1: In archive size,

xz -3is aroundbzip -9. Then xz uses less memory to decompress. Butxz -9(as, e.g., used for Linux kernel tarballs) uses much more thanbzip -9. (And evenxz -0needs more thangzip -9).2: F21 System Wide Change: lbzip2 as default bzip2 implementation

> ,

First of all, this question is not directly related totar. Tar just creates an uncompressed archive, the compression is then applied later on.Gzip is known to be relatively fast when compared to LZMA2 and bzip2. If speed matters,

gzip(especially the multithreaded implementationpigz) is often a good compromise between compression speed and compression ratio. Although there are alternatives if speed is an issue (e.g. LZ4).However, if a high compression ratio is desired LZMA2 beats

bzip2in almost every aspect. The compression speed is often slower, but it decompresses much faster and provides a much better compression ratio at the cost of higher memory usage.There is not much reason to use

bzip2any more, except of backwards compatibility. Furthermore, LZMA2 was desiged with multithreading in mind and many implementations by default make use of multicore CPUs (unfortunatelyxzon Linux does not do this, yet). This makes sense since the clock speeds won't increase any more but the number of cores will.There are multithreaded

bzip2implementations (e.g.pbzip), but they are often not installed by default. Also note that multithreadedbzip2only really pay off while compressing whereas decompression uses a single thread if the file was compress using a single threadedbzip2, in contrast to LZMA2. Parallelbzip2variants can only leverage multicore CPUs if the file was compressed using a parallelbzip2version, which is often not the case.Slyx ,Jan 6, 2014 at 19:14

Short answer : xz is more efficient in terms of compression ratio. So it saves disk space and optimizes the transfer through the network.

You can see this Quick Benchmark so as to discover the difference by practical tests.Mark Warburton ,Apr 14, 2016 at 14:15

LZMA2 is a block compression system whereas gzip is not. This means that LZMA2 lends itself to multi-threading. Also, if corruption occurs in an archive, you can generally recover data from subsequent blocks with LZMA2 but you cannot do this with gzip. In practice, you lose the entire archive with gzip subsequent to the corrupted block. With an LZMA2 archive, you only lose the file(s) affected by the corrupted block(s). This can be important in larger archives with multiple files.,

May 04, 2018 | www.rootusers.com

Gzip vs Bzip2 vs XZ Performance Comparison Posted by Jarrod on September 17, 2015 Leave a comment (21) Go to comments Gzip, Bzip2 and XZ are all popular compression tools used in UNIX based operating systems, but which should you use? Here we are going to benchmark and compare them against each other to get an idea of the trade off between the level of compression and time taken to achieve it.

For further information on how to use gzip, bzip2 or xz see our guides below:

The Test ServerThe test server was running CentOS 7.1.1503 with kernel 3.10.0-229.11.1 in use, all updates to date are fully applied. The server had 4 CPU cores and 16GB of available memory, during the tests only one CPU core was used as all of these tools run single threaded by default, while testing this CPU core would be fully utilized. With XZ it is possible to specify the amount of threads to run which can greatly increase performance , for further information see example 9 here .

All tests were performed on linux-3.18.19.tar, a copy of the Linux kernel from kernel.org . This file was 580,761,600 Bytes in size prior to compression.

The Benchmarking ProcessThe linux-3.18.19.tar file was compressed and decompressed 9 times each by gzip, bzip2 and xz at each available compression level from 1 to 9. A compression level of 1 indicates that the compression will be fastest but the compression ratio will not be as high so the file size will be larger. Compression level 9 on the other hand is the best possible compression level, however it will take the longest amount of time to complete.

There is an important trade off here between the compression levels between CPU processing time and the compression ratio. To get a higher compression ratio and save a greater amount of disk space, more CPU processing time will be required. To save and reduce CPU processing time a lower compression level can be used which will result in a lower compression ratio, using more disk space.

Each time the compression or decompression command was run, the 'time' command was placed in front so that we could accurately measure how long the command took to execute.

Below are the commands that were run for compression level 1:

time bzip2 -1v linux-3.18.19.tar time gzip -1v linux-3.18.19.tar time xz -1v linux-3.18.19.tarAll commands were run with the time command, verbosity and the compression level of -1 which was stepped through incrementally up to -9. To decompress, the same command was used with the -d flag.

The versions pf these tools were gzip 1.5, bzip2 1.0.6, and xz (XZ Utils) 5.1.2alpha.

ResultsThe raw data that the below graphs have been created from has been provided in tables below and can also be accessed in this spreadsheet .

Compressed SizeThe table below indicates the size in bytes of the linux-3.18.19.tar file after compression, the first column numbered 1..9 shows the compression level passed in to the compression tool.

Compression Time

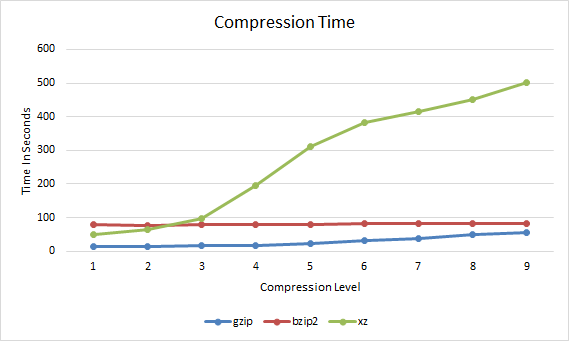

gzip bzip2 xz 1 153617925 115280806 105008672 2 146373307 107406491 100003484 3 141282888 103787547 97535320 4 130951761 101483135 92377556 5 125581626 100026953 85332024 6 123434238 98815384 83592736 7 122808861 97966560 82445064 8 122412099 97146072 81462692 9 122349984 96552670 80708748 First we'll start with the compression time, this graph shows how long it took for the compression to complete at each compression level 1 through to 9.

gzip bzip2 xz 1 13.213 78.831 48.473 2 14.003 77.557 65.203 3 16.341 78.279 97.223 4 17.801 79.202 196.146 5 22.722 80.394 310.761 6 30.884 81.516 383.128 7 37.549 82.199 416.965 8 48.584 81.576 451.527 9 54.307 82.812 500.859

Gzip vs Bzip2 vs XZ Compression Time

So far we can see that gzip takes slightly longer to complete as the compression level increases, bzip2 does not change very much, while xz increases quite significantly after a compression level of 3.

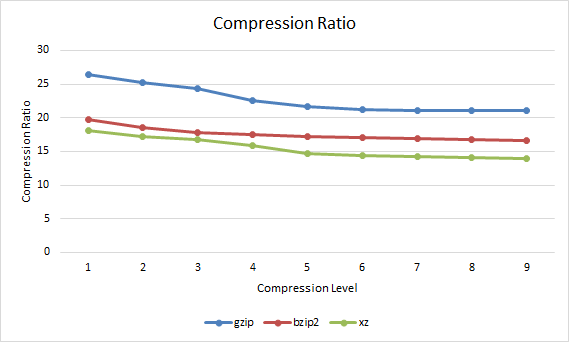

Compression RatioNow that we have an idea of how long the compression took we can compare this with how well the file compressed. The compression ratio represents the percentage that the file has been reduced to. For example if a 100mb file has been compressed with a compression ratio of 25% it would mean that the compressed version of the file is 25mb.

gzip bzip2 xz 1 26.45 19.8 18.08 2 25.2 18.49 17.21 3 24.32 17.87 16.79 4 22.54 17.47 15.9 5 21.62 17.22 14.69 6 21.25 17.01 14.39 7 21.14 16.87 14.19 8 21.07 16.73 14.02 9 21.06 16.63 13.89

Gzip vs Bzip2 vs XZ Compression Ratio

The overall trend here is that with a higher compression level applied, the lower the compression ratio indicating that the overall file size is smaller. In this case xz is always providing the best compression ratio, closely followed by bzip2 with gzip coming in last, however as shown in the compression time graph xz takes a lot longer to get these results after compression level 3.

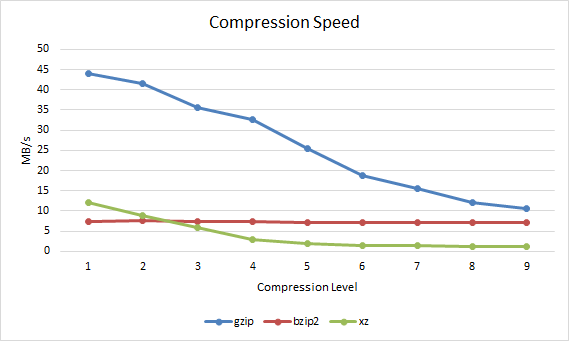

Compression SpeedThe compression speed in MB per second can also be observed.

gzip bzip2 xz 1 43.95 7.37 11.98 2 41.47 7.49 8.9 3 35.54 7.42 5.97 4 32.63 7.33 2.96 5 25.56 7.22 1.87 6 18.8 7.12 1.52 7 15.47 7.07 1.39 8 11.95 7.12 1.29 9 10.69 7.01 1.16

Gzip vs Bzip2 vs XZ Compression Speed Decompression Time

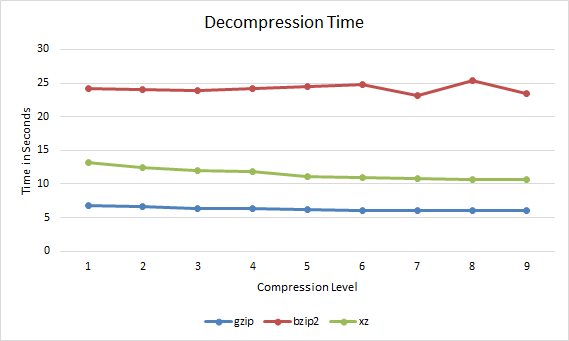

Next up is how long each file compressed at a particular compression level took to decompress.

gzip bzip2 xz 1 6.771 24.23 13.251 2 6.581 24.101 12.407 3 6.39 23.955 11.975 4 6.313 24.204 11.801 5 6.153 24.513 11.08 6 6.078 24.768 10.911 7 6.057 23.199 10.781 8 6.033 25.426 10.676 9 6.026 23.486 10.623

Gzip vs Bzip2 vs XZ Decompression Time

In all cases the file decompressed faster if it had been compressed with a higher compression level. Therefore if you are going to be serving out a compressed file over the Internet multiple times it may be worth compressing it with xz with a compression level of 9 as this will both reduce bandwidth over time when transferring the file, and will also be faster for everyone to decompress.

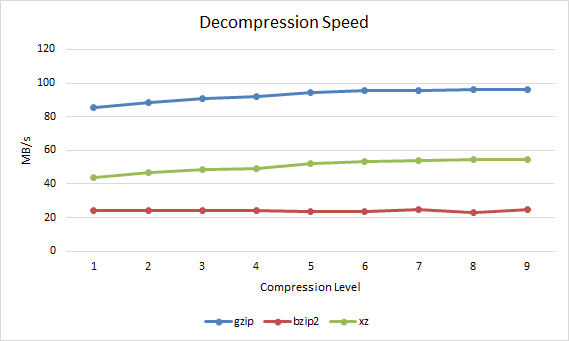

Decompression SpeedThe decompression speed in MB per second can also be observed.

1 85.77 23.97 43.83 2 88.25 24.1 46.81 3 90.9 24.24 48.5 4 91.99 24 49.21 5 94.39 23.7 52.42 6 95.55 23.45 53.23 7 95.88 25.03 53.87 8 96.26 22.84 54.4 9 96.38 24.72 54.67

Gzip vs Bzip2 vs XZ Decompression Speed Performance Differences and Comparison

By default when the compression level is not specified, gzip uses -6, bzip2 uses -9 and xz uses -6. The reason for this is pretty clear based on the results. For gzip and xz -6 as a default compression method provides a good level of compression yet does not take too long to complete, it's a fair trade off point as higher compression levels take longer to process. Bzip2 on the other hand is best used with the default compression level of 9 as is also recommended in the manual page, the results here confirm this, the compression ratio increases but the time taken is almost the same and differs by less than a second between levels 1 to 9.

In general xz achieves the best compression level, followed by bzip2 and then gzip. In order to achieve better compression however xz usually takes the longest to complete, followed by bzip2 and then gzip.

xz takes a lot more time with its default compression level of 6 while bzip2 only takes a little longer than gzip at compression level 9 and compresses a fair amount better, while the difference between bzip2 and xz is less than the difference between bzip2 and gzip making bzip2 a good trade off for compression.

Interestingly the lowest xz compression level of 1 results in a higher compression ratio than gzip with a compression level of 9 and even completes faster. Therefore using xz with a compression level of 1 instead of gzip for a better compression ratio in a faster time.

Based on these results, bzip2 is a good middle ground for compression, gzip is only a little faster while xz may not really worth it at its higher default compression ratio of 6 as it takes much longer to complete for little extra gain.

However decompressing with bzip2 takes much longer than xz or gzip, xz is a good middle ground here while gzip is again the fastest.

ConclusionSo which should you use? It's going to come down to using the right tool for the job and the particular data set that you are working with.

If you are interactively compressing files on the fly then you may want to do this quickly with gzip -6 (default compression level) or xz -1, however if you're configuring log rotation which will run automatically over night during a low resource usage period then it may be acceptable to use more CPU resources with xz -9 to save the greatest amount of space possible. For instance kernel.org compress the Linux kernel with xz, in this case spending extra time to compress the file well once makes sense when it will be downloaded and decompressed thousands of times resulting in bandwidth savings yet still decent decompression speeds.

Based on the results here, if you're simply after being able to compress and decompress files as fast as possible with little regard to the compression ratio, then gzip is the tool for you. If you want a better compression ratio to save more disk space and are willing to spend extra processing time to get it then xz will be best to use. Although xz takes the longest to compress at higher compression levels, it has a fairly good decompression speed and compresses quite fast at lower levels. Bzip2 provides a good trade off between compression ratio and processing speed however it takes the longest to decompress so it may be a good option if the content that is being compressed will be infrequently decompressed.