
Functions in shell are essentially scripts that run in the context of the current shell instance: no secondary shell is forked to run a function; it runs within the current shell.


Functions provide a lot more flexibility than aliases. Here are two of the simplest possible functions, a "quit" function that just calls exit and a "Hello world" function:

function quit {

exit

}

function hello {

print "Hello world !"

}

hello

quit

Declaring a function is just a matter of writing function my_func { my_code; }. Functions can be declared in any order relative to one another, but each must be declared before it is invoked in the script.
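A minimal bash sketch of the ordering rule (outer and inner are hypothetical names): outer refers to inner, which appears later in the file but is still defined before outer is called, so the lookup succeeds when outer runs.

```shell
#!/usr/bin/env bash
# outer refers to inner, which is defined later in the file.
outer() {
  echo "outer calls: $(inner)"
}

# inner is defined before outer is ever invoked, so the call below works.
inner() {
  echo "inner"
}

outer   # prints: outer calls: inner
```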

Calling a function is just like calling another program: you write its name followed (optionally) by arguments separated by spaces.

NOTE: you can't enclose the arguments in parentheses; that would be a syntax error. Nor can you separate arguments with commas, as you might be inclined to after using C or Perl: a comma simply becomes part of the argument.
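The following bash sketch, using a hypothetical show_args helper that prints each argument in brackets, shows how the shell splits a call: spaces separate arguments, a comma stays glued to the word before it, and quotes group words.

```shell
#!/usr/bin/env bash
# Print each argument on its own line, wrapped in brackets,
# so it is easy to see how the shell split the command line.
show_args() {
  printf '[%s]\n' "$@"
}

show_args one two three   # [one] [two] [three], one per line
show_args a, b            # [a,] then [b]: the comma is part of the argument
show_args "one two"       # [one two]: quotes make a single argument
```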

The best way is to create a set of useful shell functions in a separate file. You can then source that file with the dot (.) command, either interactively or from your startup scripts. You can also just enter a function at the command line.

To create a function from the command line, you would do something like this:

$ psgrep() {

> ps -ef | grep $1

> }

This is a pretty simple function, and could be implemented as an alias as well. Let's try something a bit more useful: displaying the files larger than 100K bytes. awk can pick out of the ls -la listing every file whose size (field 5) exceeds 100000 bytes:

largesize() {

ls -la | awk '$5 > 100000 { print $NF }'

}
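To check the corrected awk filter without depending on the contents of the current directory, this sketch (large_from_listing is a hypothetical name) feeds it two canned lines in ls -l format instead of real ls output. Only the line whose size field, $5, exceeds 100000 is printed. Note that $NF is the last field, so file names containing spaces would be cut short.

```shell
#!/usr/bin/env bash
# Same awk filter as largesize, but reading stdin so it can be tried
# on sample data. In `ls -l` output, field 5 is the size in bytes
# and the last field ($NF) is the file name.
large_from_listing() {
  awk '$5 > 100000 { print $NF }'
}

printf '%s\n' \
  '-rw-r--r-- 1 user group    512 Jan  1 10:00 small.txt' \
  '-rw-r--r-- 1 user group 204800 Jan  1 10:00 big.iso' |
  large_from_listing    # prints: big.iso
```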

As in almost any programming language, you can use functions to group pieces of code in a more logical way or practice the divine art of recursion.
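As an illustration of recursion, here is a hypothetical factorial function in bash. Because return can only carry a status between 0 and 255, the sketch passes each result back on stdout and captures it with $(...), at the cost of one subshell per level.

```shell
#!/usr/bin/env bash
# Recursive factorial; results travel back on stdout, not via return.
factorial() {
  local n=$1
  if (( n <= 1 )); then
    echo 1
  else
    local sub
    sub=$(factorial $(( n - 1 )))   # recursive call in a subshell
    echo $(( n * sub ))
  fi
}

factorial 5   # 120
```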

The bash function facility is pretty primitive: it is just a slightly enhanced version of a similar facility in the System V Bourne shell. You can create a collection of functions that help you in your work and store them in your .profile file.

Functions are faster than invoking a script, which requires starting a new process: when you invoke a function, it is already in the shell's memory. Modern computers have plenty of RAM, so there is no need to worry about the amount of space a typical function takes up.

The other advantage of functions is that they can, and should, be used for organizing shell scripts into modular "chunks" of related functions that are easier to develop and maintain. To define a function, you can use the following form (there is also a second, C-like form of function definition, function_name() { ... }, which we will not discuss here):

function function_name {

shell commands

}

You can also delete a function definition with the command unset -f function_name.
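A short bash sketch of unset -f, assuming a hypothetical greet that is both a variable and a function (the two live in separate namespaces). declare -F name succeeds only if a function of that name exists.

```shell
#!/usr/bin/env bash
greet="a variable"          # a variable named greet...
greet() { echo "hi"; }      # ...and a function with the same name

unset -f greet              # -f: remove only the function

declare -F greet >/dev/null || echo "function greet is gone"
echo "variable greet is still: $greet"
```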

Just as typing alias lists your aliases, in ksh you can find out what functions are defined in your login session by typing functions. The shell will print not just the names but the definitions of all functions, in alphabetical order by function name. Since this may result in long output, you might want to pipe the output through more or redirect it to a file and view it with the view command.

Note: in ksh, functions is actually a predefined alias for typeset -f. In bash, use declare -f (or typeset -f) for the full definitions and declare -F for just the names.
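In bash there is no predefined functions alias; the sketch below uses declare -F to list function names and declare -f to print one definition. The hello and psgrep definitions are just sample functions taken from this page.

```shell
#!/usr/bin/env bash
hello() { echo "Hello world !"; }
psgrep() { ps -ef | grep "$1"; }

declare -F          # names only, one "declare -f name" line per function
declare -f hello    # the full definition of hello
```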

There are two important differences between functions and scripts. First, functions do not run in separate processes, as scripts are when you invoke them by name; the "semantics" of running a function are more like those of your .profile when you log in or any script when invoked with the "dot" command. Second, if a function has the same name as a script or executable program, the function takes precedence.
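Both differences can be observed with a small bash sketch; counter, bump, and the uname override are illustrative assumptions. The function's assignment persists because it runs in the current shell, the same assignment in a child bash process is lost, and a function named uname is found before the uname program in PATH.

```shell
#!/usr/bin/env bash
counter=0

# A function runs in the current shell, so its assignment sticks.
bump() { counter=$((counter + 1)); }
bump
echo "after function: $counter"          # after function: 1

# The same assignment in a separate bash process cannot touch our variable.
bash -c 'counter=$((counter + 1))'
echo "after child process: $counter"     # after child process: 1

# A function named like an external program is found first.
uname() { echo "function uname, not the uname program"; }
uname
```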


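This is a good time to show the order of precedence for the various sources of commands. When you type a command to the shell, it looks in the following places (this is the ksh93 order) until it finds a match:

1. Keywords, such as function, if, and for
2. Aliases
3. Special built-ins, such as break, continue, exit, and return
4. Functions
5. Non-special built-ins, such as cd and whence
6. Scripts and executable programs, found by searching the directories listed in PATH

Note: this means that you can override non-special built-ins, such as cd, with functions. In bash, functions take precedence over all built-ins unless bash is running in POSIX mode.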

If you need to know the exact source of a command, you can use the ksh whence built-in command. If you type whence -v command_name, you also get information about how a particular command is implemented, for example:

$ whence -v cd
cd is a shell builtin
$ whence -v function
function is a keyword
$ whence -v man
man is /usr/bin/man
$ whence -v ll
ll is an alias for ls -l
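bash has no whence built-in; its type built-in plays the same role. type name prints a description similar to whence -v, and type -t prints a single word. This sketch assumes env is installed as an external program, which is the case on typical systems; ll is defined as a function here because bash does not expand aliases in scripts by default.

```shell
#!/usr/bin/env bash
ll() { ls -l "$@"; }   # a function; scripts don't expand aliases by default

type cd         # cd is a shell builtin
type -t cd      # builtin
type -t if      # keyword
type -t ll      # function
type -t env     # file (an external program found via PATH)
```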

The statement return N causes the surrounding function (or a script invoked with the dot command) to exit with exit status N. N is optional; if it is omitted, the return status is that of the last command executed.

A function that finishes without a return statement (like every one we have seen so far) likewise returns the exit status (success or failure) of the last statement it executed.

exit is similar to return, but the statement exit N exits the entire script, no matter how deeply you are nested in functions.
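The sketch below (function names are hypothetical) checks these rules in bash: an explicit return value, a function that simply falls off the end, a bare return, and an exit buried two calls deep. The exit case runs in a ( ) subshell so that it terminates only the subshell, not the demo itself.

```shell
#!/usr/bin/env bash
explicit() { return 3; }
implicit() { true; false; }     # no return: status of the last command, false (1)
bare()     { false; return; }   # bare return: also the last command's status

explicit; echo "explicit: $?"   # explicit: 3
implicit; echo "implicit: $?"   # implicit: 1
bare;     echo "bare: $?"       # bare: 1

# exit ends the whole (sub)shell, however deeply it is nested.
inner() { exit 7; }
outer() { inner; echo "never printed"; }
( outer ); echo "subshell status: $?"   # subshell status: 7
```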

For example, if we need a function that implements an enhanced cd, we can write something like:

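function cd {
    command cd "$@"
    rs=$?
    print "$OLDPWD -> $PWD"
    return $rs
}

Here we first save the exit status of cd in the variable rs and then return it as the return value of the function. Two details matter: command cd calls the built-in cd rather than this function (a plain cd or "cd" would call the function itself recursively in shells where functions override built-ins), and the quotes around the print argument keep -> from being parsed as an output redirection.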

# Capture value returned by last command

ret=$?

return $ret

return [n], exit [n]

Without an explicit return [n], a function returns when it reaches the end, and the value is the exit status of the last command it ran.

Example:

die()

{

# Print an error message and exit with given status

# call as: die status "message" ["message" ...]

exitstat=$1; shift

for i in "$@"; do

print -R "$i" >&2

done

exit $exitstat

}

[ -w "$filename" ] || die 1 "$filename not writable" "check permissions"
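For comparison, here is a hedged bash port of die. It assumes messages belong on stderr (>&2), the usual place for diagnostics, and uses printf in place of the ksh-only print -R. The demo calls die in a ( ) subshell so that it doesn't end the example script.

```shell
#!/usr/bin/env bash
# Print each message on its own line to stderr, then exit with the given status.
# Call as: die status "message" ["message" ...]
die() {
  local status=$1
  shift
  printf '%s\n' "$@" >&2
  exit "$status"
}

# Run die in a subshell so that only the subshell exits, not this demo.
( die 3 "something went wrong" "check permissions" )
echo "die exited with status $?"   # die exited with status 3
```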

Example:

logme()

{

# Print or not depending on global "$verbosity"

# Change the verbosity with a single variable.

# Arg. 1 is the level for this message.

level=$1; shift

if [[ $level -le $verbosity ]]; then

print -R $*

fi

}

verbosity=2

logme 1 This message will appear

logme 3 This only appears if verbosity is 3 or higher
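A bash version of logme, sketched under the assumption that printf '%s\n' "$*" is an acceptable stand-in for ksh's print -R $*. The arithmetic test (( level <= verbosity )) replaces [[ ... -le ... ]]; both compare numerically.

```shell
#!/usr/bin/env bash
# Print the message only if its level is at or below the global $verbosity.
logme() {
  local level=$1
  shift
  if (( level <= verbosity )); then
    printf '%s\n' "$*"
  fi
}

verbosity=2
logme 1 This message will appear
logme 3 This only appears if verbosity is 3 or higher
```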

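Two common errors with declaring and using functions are omitting the parentheses () when defining a function with the name() form, and including them when calling the function.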

The following example illustrates the first type of error:

lsl { ls -l ; }

Here, the parentheses are missing after lsl. This is an invalid function definition and will result in an error message similar to the following:

sh: syntax error: '}' unexpected

The following command illustrates the second type of error:

$ lsl()

Here, the function lsl is invoked with parentheses, (). This will not work because the shell interprets it as the start of a new definition of the function lsl. Usually such an invocation results in a prompt similar to the following:

>

This is a prompt produced by the shell when it expects you to provide more input. The input it expects is the body of the function lsl.

NOTES:

For example:

name_error()

{

echo " $@ contains errors, it must contain only letters"

}

Here is an example of a function that takes an arbitrary number of parameters and prints some information about them:

function invocation_inform {

print "The first argument is:" $1

print "List of arguments is:" $@

print "Number of arguments is:" $#

}

The command shift shifts the positional parameters to the left; arguments shifted out past position 1 are discarded. Without a numeric argument, shift has this effect:

1=$2 2=$3 ...

for every argument, regardless of how many there are. If you supply a numeric argument to shift, it will shift the arguments that many times over; for example, shift 3 has this effect:

1=$4 2=$5 ...

This leads immediately to some code that handles a single option (call it -o) and arbitrarily many arguments:

if [[ $1 = -o ]]; then
    # process the -o option
    shift
fi
# normal processing of arguments...

After the if construct, $1, $2, etc., are set to the correct arguments.
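A runnable bash sketch of that pattern; process and o_flag are hypothetical names. It also shows shift with a count.

```shell
#!/usr/bin/env bash
# Handle an optional leading -o, then report what is left.
process() {
  local o_flag=no
  if [[ $1 = -o ]]; then
    o_flag=yes
    shift               # drop -o so that $1 is the first real argument
  fi
  echo "o_flag=$o_flag args=$# first=$1"
}

process -o alpha beta   # o_flag=yes args=2 first=alpha
process alpha beta      # o_flag=no args=2 first=alpha

set -- a b c d e
shift 3
echo "after shift 3: $1 $2"   # after shift 3: d e
```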

Messages a function writes to stdout may be captured by command substitution (`myfunction` or $(myfunction)), which provides another way for a function to return information to the calling script. Beware of side effects (and reduced reusability) in functions that perform I/O.


arg1_echo test
all_arg_echo test 1 test 2

The set command, run without arguments, displays all shell variables together with the functions loaded into the shell:

$ set

USER=dave

findit=()

{

if [ $# -lt 1 ]; then

echo "usage :findit file";

return 1;

fi;

find / -name $1 -print

}

...

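You can use the unset command to remove functions. With -f the name is treated as a function name; without it, unset first looks for a variable of that name:

unset -f function_name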

Traditionally the .bash_profile file contained aliases, but nowadays they are often separated out and grouped in a file of their own, for example .aliases. For functions it might also be beneficial to use a separate file called, for example, .functions.

Let's consider a very artificial (and admittedly bad) example: a function that calls find for each of its arguments, so that we can find several different files with one command (a useless exercise). The function now looks like this:

$ pg .functions

#!/bin/sh

findit()
{
# findit
if [ $# -lt 1 ]; then
    echo "usage :findit file"
    return 1
fi
for member
do
    find / -name $member -print
done
}

Now source the file again:

. ./.functions

Using the set command to see that it is indeed loaded, you will notice that the shell has correctly interpreted the for loop to take in all parameters.

$ set

findit=()

{

if [ $# -lt 1 ]; then

echo "usage :findit file";

return 1;

fi;

for member in "$@";

do

find / -name $member -print;

done

}

...

Now execute the changed function, supplying a couple of files to find:

$ findit LPSO.doc passwd
/usr/local/accounts/LPSO.doc
/etc/passwd
...

By default all variables, except for the special variables associated with function arguments, have global scope. In ksh, bash, and zsh, variables with local scope can be declared using the typeset command, described below. This command is not supported in the Bourne shell, so it is not possible to have programmer-defined local variables in scripts that rely strictly on the Bourne shell.

Local variables are defined using the typeset command (in bash you would usually use local, or declare, instead):

typeset var1[=val1] ... varN[=valN]

Here, var1 ... varN are variable names and val1 ... valN are values to assign to the variables. The values are optional as the following example illustrates:

typeset fruit1 fruit2=banana

Without -v, whence prints the pathname of a command only if the command is a script or executable program; for anything else it just parrots the command's name back.

We will refer mainly to scripts throughout the remainder of this book, but unless we note otherwise, you should assume that whatever we say applies equally to functions.

A shell assignment statement has the form varname=value, with no spaces around the equals sign, e.g.:

$ greeting="hello world!"
$ print "$greeting"
hello world!

Some environment variables are predefined by the shell when you log in. Several built-in variables are vital to shell programming, such as HOME, HOSTNAME, PWD, OLDPWD, etc.

There is also a special class of built-in variables called positional parameters. These hold the command-line arguments to scripts when they are invoked. Positional parameters have names 1, 2, 3, etc., meaning that their values are denoted by $1, $2, $3, etc. There is also a positional parameter 0, whose value is the name of the script (i.e., the command typed in to invoke it).

Two special variables contain all of the positional parameters (except positional parameter 0): * and @. The difference between them is subtle but important, and it's apparent only when they are used within double quotes.

"$*" is a single string that consists of all of the positional parameters, separated by the first character of the variable IFS (internal field separator). IFS is space, TAB, and NEWLINE by default, so the separator is normally a space. "$@" is equal to "$1" "$2" ... "$N", where N is the number of positional parameters. That is, it's equal to N separate double-quoted strings, which are separated by spaces. We'll explore the ramifications of this difference in a little while.

The variable $# holds the number of positional parameters (as a character string). All of these variables are "read-only," meaning that you can't assign new values to them within scripts (although set -- can replace the positional parameters as a whole).
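The difference is easy to see by counting arguments. In this bash sketch, count_args (a hypothetical helper) just prints $#: "$*" collapses everything into one word, "$@" keeps the original arguments, and an unquoted $* is re-split on whitespace.

```shell
#!/usr/bin/env bash
count_args() { echo "$#"; }

demo() {
  echo "with \"\$*\": $(count_args "$*") argument(s)"   # 1
  echo "with \"\$@\": $(count_args "$@") argument(s)"   # 2
  echo "unquoted \$*: $(count_args $*) argument(s)"     # 3
}

demo "first arg" second
```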

For example, assume that you create a file testme that contains the following simple shell script:

print "testme: $@"
print "$0: Arg 1 is '$1' and Arg 2 is '$2'"
print "$# arguments"

Then if you type testme bash 3.2, you will see the following output:

testme: bash 3.2
testme: Arg 1 is 'bash' and Arg 2 is '3.2'
2 arguments

In this case, $3, $4, etc., are all unset, which means that the shell will substitute the empty (or null) string for them (unless the nounset option is turned on, in which case referring to them is an error).

Shell functions use positional parameters and special variables like * and # in exactly the same way as shell scripts do. If you wanted to define testme as a function, you could put the following in your .profile or environment file:

function testme {

print "testme: $*"

print "$0: $1 and $2"

print "$# arguments"

}

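You will get similar output if you type testme bash 3.2. (In ksh, $0 inside a function defined with the function keyword is the function's name; in bash, $0 inside a function is still the name of the shell or script.)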

Typically, several shell functions are defined within a single shell script. Therefore each function will need to handle its own arguments, which in turn means that each function needs to keep track of positional parameters separately. Sure enough, each function has its own copies of these variables (even though functions don't run in their own subshells, as scripts do); we say that such variables are local to the function.
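A small bash sketch of that separation; the argument values are arbitrary. set -- gives the script its own positional parameters, and calling show does not disturb them.

```shell
#!/usr/bin/env bash
set -- script-arg1 script-arg2   # pretend the script was given two arguments

show() { echo "inside: \$1=$1 \$#=$#"; }

show func-arg                    # inside: $1=func-arg $#=1
echo "outside: \$1=$1 \$#=$#"    # outside: $1=script-arg1 $#=2
```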

However, other variables defined within functions are not local (they are global), meaning that their values are known throughout the entire shell script. For example, assume that you have a shell script called ascript that contains this:

Note: typeset can be used for making variables local to functions.
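A minimal bash sketch of global versus local variables inside a function (f, counter, and name are hypothetical). In bash, typeset is a synonym for declare, and inside a function it makes a variable local, just as local does.

```shell
#!/usr/bin/env bash
counter=0
name="global"

f() {
  counter=5              # not declared: this assigns the global variable
  typeset name="local"   # typeset (like declare/local) makes name local to f
  echo "inside f: name=$name"
}

f                                             # inside f: name=local
echo "after f: counter=$counter name=$name"   # after f: counter=5 name=global
```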

Let's take a closer look at "$@" and "$*". These variables are two of the shell's greatest idiosyncrasies, so we'll discuss some of the most common sources of confusion.

The elements of "$*" are separated by the first character of IFS instead of spaces to give you some output flexibility. As a simple example, let's say you want to print a list of positional parameters separated by commas. This script would do it:

OLD_IFS=$IFS
IFS=,
print "$*"
IFS=$OLD_IFS

Generally you should restore IFS to its old value as soon as you have finished using the new one.
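In bash, a function can avoid the save-and-restore dance by declaring IFS local, so the caller's IFS comes back automatically when the function returns. A sketch, with join_commas as a hypothetical name:

```shell
#!/usr/bin/env bash
# Join all arguments with commas. Because IFS is local to the function,
# the caller's IFS is restored automatically when the function returns.
join_commas() {
  local IFS=,
  echo "$*"
}

join_commas a b c   # a,b,c
```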

Before we show the many things you can do with shell variables, we have to make a confession: the syntax of $varname for taking the value of a variable is not quite accurate. Actually, it's the simple form of the more general syntax, which is ${varname}.

Why two syntaxes? For one thing, the more general syntax is necessary if your code refers to more than nine positional parameters: you must use ${10} for the tenth instead of $10.

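The braces are also needed when the variable name is followed by a character that could be part of a name. For example, to set your prompt to your login name followed by an underscore, you would write:

PS1="${LOGNAME}_ "

This gives the desired yourname_ prompt; without the braces, the shell would look for a variable called LOGNAME_, which is normally unset. It is safe to omit the curly brackets ({}) if the variable name is followed by a character that isn't a letter, digit, or underscore.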
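A bash sketch of both cases where the braces matter; tenth, not_tenth, and user are illustrative names.

```shell
#!/usr/bin/env bash
tenth()     { echo "${10}"; }   # braces: the tenth positional parameter
not_tenth() { echo "$10"; }     # no braces: $1 followed by a literal 0

tenth a b c d e f g h i j       # j
not_tenth a b c d e f g h i j   # a0

user=dave
echo "${user}_"   # dave_
echo "$user_"     # empty line: the shell looks up a variable named user_
```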


Jul 08, 2020 | www.tldp.org

Appendix E. Exit Codes With Special Meanings

Table E-1. Reserved Exit Codes

Exit Code Number | Meaning | Example | Comments
1 | Catchall for general errors | let "var1 = 1/0" | Miscellaneous errors, such as "divide by zero" and other impermissible operations
2 | Misuse of shell builtins (according to Bash documentation) | empty_function() {} | Missing keyword or command, or permission problem (and diff return code on a failed binary file comparison)
126 | Command invoked cannot execute | /dev/null | Permission problem or command is not an executable
127 | "command not found" | illegal_command | Possible problem with $PATH or a typo
128 | Invalid argument to exit | exit 3.14159 | exit takes only integer args in the range 0 - 255 (see first footnote)
128+n | Fatal error signal "n" | kill -9 $PPID of script | $? returns 137 (128 + 9)
130 | Script terminated by Control-C | Ctl-C | Control-C is fatal error signal 2 (130 = 128 + 2, see above)
255* | Exit status out of range | exit -1 | exit takes only integer args in the range 0 - 255

According to the above table, exit codes 1 - 2, 126 - 165, and 255 [1] have special meanings, and should therefore be avoided for user-specified exit parameters. Ending a script with exit 127 would certainly cause confusion when troubleshooting (is the error code a "command not found" or a user-defined one?). However, many scripts use an exit 1 as a general bailout-upon-error. Since exit code 1 signifies so many possible errors, it is not particularly useful in debugging.

There has been an attempt to systematize exit status numbers (see /usr/include/sysexits.h ), but this is intended for C and C++ programmers. A similar standard for scripting might be appropriate. The author of this document proposes restricting user-defined exit codes to the range 64 - 113 (in addition to 0 , for success), to conform with the C/C++ standard. This would allot 50 valid codes, and make troubleshooting scripts more straightforward. [2] All user-defined exit codes in the accompanying examples to this document conform to this standard, except where overriding circumstances exist, as in Example 9-2 .

Notes

Issuing a $? from the command-line after a shell script exits gives results consistent with the table above only from the Bash or sh prompt. Running the C-shell or tcsh may give different values in some cases.

[1] Out of range exit values can result in unexpected exit codes. An exit value greater than 255 returns an exit code modulo 256 . For example, exit 3809 gives an exit code of 225 (3809 % 256 = 225). [2] An update of /usr/include/sysexits.h allocates previously unused exit codes from 64 - 78 . It may be anticipated that the range of unallotted exit codes will be further restricted in the future. The author of this document will not do fixups on the scripting examples to conform to the changing standard. This should not cause any problems, since there is no overlap or conflict in usage of exit codes between compiled C/C++ binaries and shell scripts.
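Several rows of the table, and footnote [1], can be checked directly from bash. This sketch runs each case in a child bash process; no_such_command_hopefully is assumed not to exist on the system.

```shell
#!/usr/bin/env bash
no_such_command_hopefully 2>/dev/null
echo "command not found: $?"   # command not found: 127

bash -c 'kill -9 $$'
echo "killed by signal 9: $?"  # killed by signal 9: 137 (128 + 9)

bash -c 'exit 3809'
echo "exit 3809: $?"           # exit 3809: 225 (3809 % 256)

bash -c 'exit -1'
echo "exit -1: $?"             # exit -1: 255
```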

Jul 08, 2020 | zwischenzugs.com

Not everyone knows that every time you run a shell command in bash, an 'exit code' is returned to bash.

Generally, if a command 'succeeds' you get an exit code of 0. If it doesn't succeed, you get a non-zero code. 1 is a 'general error', and others can give you more information (e.g. which signal killed it). 255 is the upper limit and is an "internal error".

grep joeuser /etc/passwd # in case of success returns 0, otherwise 1

or

grep not_there /dev/null
echo $?

$? is a special bash variable that's set to the exit code of each command after it runs. Grep uses exit codes to indicate whether it matched or not. I have to look up every time which way round it goes: does finding a match or not return 0?
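For the record, grep's convention is 0 when a line matches, 1 when nothing matches, and 2 when an error occurs (for example, an unreadable file). A quick bash check:

```shell
#!/usr/bin/env bash
printf 'joeuser\n' | grep -q joeuser;   echo "match: $?"      # match: 0
printf 'joeuser\n' | grep -q not_there; echo "no match: $?"   # no match: 1
grep -q anything /no/such/file 2>/dev/null; echo "error: $?"  # error: 2
```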

Sep 11, 2009 | www.linuxjournal.com

Bash functions, unlike functions in most programming languages, do not allow you to return a value to the caller. When a bash function ends, its return value is its status: zero for success, non-zero for failure. To return values, you can set a global variable with the result, or use command substitution, or you can pass in the name of a variable to use as the result variable. The examples below describe these different mechanisms.

Although bash has a return statement, the only thing you can specify with it is the function's status, which is a numeric value like the value specified in an exit statement. The status value is stored in the $? variable. If a function does not contain a return statement, its status is set based on the status of the last statement executed in the function. To actually return arbitrary values to the caller you must use other mechanisms.

The simplest way to return a value from a bash function is to just set a global variable to the result. Since all variables in bash are global by default this is easy:

function myfunc()
{
    myresult='some value'
}

myfunc
echo $myresult

The code above sets the global variable myresult to the function result. Reasonably simple, but as we all know, using global variables, particularly in large programs, can lead to difficult to find bugs.

A better approach is to use local variables in your functions. The problem then becomes how do you get the result to the caller. One mechanism is to use command substitution:

function myfunc()
{
    local myresult='some value'
    echo "$myresult"
}

result=$(myfunc)   # or result=`myfunc`
echo $result

Here the result is output to the stdout and the caller uses command substitution to capture the value in a variable. The variable can then be used as needed.

The other way to return a value is to write your function so that it accepts a variable name as part of its command line and then set that variable to the result of the function:

function myfunc()
{
    local __resultvar=$1
    local myresult='some value'
    eval $__resultvar="'$myresult'"
}

myfunc result
echo $result

Since we have the name of the variable to set stored in a variable, we can't set the variable directly; we have to use eval to actually do the setting. The eval statement basically tells bash to interpret the line twice: the first interpretation above results in the string result='some value', which is then interpreted once more and ends up setting the caller's variable.

When you store the name of the variable passed on the command line, make sure you store it in a local variable with a name that won't be (unlikely to be) used by the caller (which is why I used __resultvar rather than just resultvar ). If you don't, and the caller happens to choose the same name for their result variable as you use for storing the name, the result variable will not get set. For example, the following does not work:

function myfunc()
{
    local result=$1
    local myresult='some value'
    eval $result="'$myresult'"
}

myfunc result
echo $result

The reason it doesn't work is because when eval does the second interpretation and evaluates result='some value', result is now a local variable in the function, and so it gets set rather than setting the caller's result variable.

For more flexibility, you may want to write your functions so that they combine both result variables and command substitution:

function myfunc()
{
    local __resultvar=$1
    local myresult='some value'
    if [[ "$__resultvar" ]]; then
        eval $__resultvar="'$myresult'"
    else
        echo "$myresult"
    fi
}

myfunc result
echo $result
result2=$(myfunc)
echo $result2

Here, if no variable name is passed to the function, the value is output to the standard output.
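On bash 4.3 or later, a nameref (declare -n or local -n) is an alternative to eval for the result-variable technique; this is a different mechanism from the ones in the article, shown only as a hedged sketch. The reference variable gets an unusual name for the same reason as __resultvar above: if the caller passes a name that collides with one of the function's locals, the assignment lands on the wrong variable.

```shell
#!/usr/bin/env bash
# Requires bash 4.3 or later (local -n creates a nameref).
myfunc() {
  local -n __resultref=$1      # __resultref now refers to the caller's variable
  local myresult='some value'
  __resultref=$myresult        # assigns through the reference, no eval needed
}

myfunc result
echo "$result"   # some value
```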

Mitch Frazier is an embedded systems programmer at Emerson Electric Co. Mitch has been a contributor to and a friend of Linux Journal since the early 2000s.

lxw (replying to David Krmpotic): This is the best way: http://stackoverflow.com/a/... return by reference:

function pass_back_a_string() {
    eval "$1='foo bar rab oof'"
}

return_var=''
pass_back_a_string return_var
echo $return_var

phil: I agree. After reading this passage, the same idea with yours occurred to me.

lxw: Since this page is a top hit on google:

The only real issue I see with returning via echo is that forking the process means no longer allowing it access to set 'global' variables. They are still global in the sense that you can retrieve them and set them within the new forked process, but as soon as that process is done, you will not see any of those changes.

e.g.

#!/bin/bash
myGlobal="very global"

call1() {
    myGlobal="not so global"
    echo "${myGlobal}"
}

tmp=$(call1)         # keep in mind '$()' starts a new process
echo "${tmp}"        # prints "not so global"
echo "${myGlobal}"   # prints "very global"

code_monk: Hello everyone,

In the 3rd method, I don't think the local variable __resultvar is necessary to use. Any problems with the following code?

function myfunc()
{
    local myresult='some value'
    eval "$1"="'$myresult'"
}

myfunc result
echo $result

Emil Vikström (replying to code_monk): i would caution against returning integers with "return $int". My code was working fine until it came across a -2 (negative two), and treated it as if it were 254, which tells me that bash functions return 8-bit unsigned ints that are not protected from overflow

A function behaves as any other Bash command, and indeed POSIX processes. That is, they can write to stdout, read from stdin and have a return code. The return code is, as you have already noticed, a value between 0 and 255. By convention 0 means success while any other return code means failure.

This is also why Bash "if" statements treat 0 as success and non-zero as failure (most other programming languages do the opposite).
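The wrap-around described in the comment comes from bash keeping only the low 8 bits of the value given to return (or exit). A sketch with a hypothetical ret helper:

```shell
#!/usr/bin/env bash
ret() { return "$1"; }

ret 300; echo "return 300 gives $?"   # return 300 gives 44 (300 - 256)
ret -2;  echo "return -2 gives $?"    # return -2 gives 254 (256 - 2)
ret 255; echo "return 255 gives $?"   # return 255 gives 255
```

If a function needs to hand back arbitrary integers, printing them and capturing the output with $(...) avoids the wrap-around.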

Jun 12, 2020 | opensource.com

Source is like a Python import or a Java include. Learn it to expand your Bash prowess. Seth Kenlon (Red Hat)

When you log into a Linux shell, you inherit a specific working environment. An environment, in the context of a shell, means that there are certain variables already set for you, which ensures your commands work as intended. For instance, the PATH environment variable defines where your shell looks for commands. Without it, nearly everything you try to do in Bash would fail with a command not found error. Your environment, while mostly invisible to you as you go about your everyday tasks, is vitally important.

There are many ways to affect your shell environment. You can make modifications in configuration files, such as ~/.bashrc and ~/.profile, you can run services at startup, and you can create your own custom commands or script your own Bash functions.

Add to your environment with source

Bash (along with some other shells) has a built-in command called source. And here's where it can get confusing: source performs the same function as the command . (yes, that's but a single dot), and it's not the same source as the Tcl command (which may come up on your screen if you type man source). The built-in source command isn't in your PATH at all, in fact. It's a command that comes included as a part of Bash, and to get further information about it, you can type help source.

The . command is POSIX-compliant. The source command is not defined by POSIX but is interchangeable with the . command.

According to Bash help, the source command executes a file in your current shell. The clause "in your current shell" is significant, because it means it doesn't launch a sub-shell; therefore, whatever you execute with source happens within and affects your current environment.

Before exploring how source can affect your environment, try source on a test file to ensure that it executes code as expected. First, create a simple Bash script and save it as a file called hello.sh:

#!/usr/bin/env bash
echo "hello world"

Using source, you can run this script even without setting the executable bit:

$ source hello.sh
hello world

You can also use the built-in . command for the same results:

$ . hello.sh
hello world

The source and . commands successfully execute the contents of the test file.

Set variables and import functions

You can use source to "import" a file into your shell environment, just as you might use the include keyword in C or C++ to reference a library or the import keyword in Python to bring in a module. This is one of the most common uses for source, and it's a common default inclusion in .bashrc files to source a file called .bash_aliases so that any custom aliases you define get imported into your environment when you log in.

Here's an example of importing a Bash function. First, create a function in a file called myfunctions. This prints your public IP address and your local IP address:

function myip () {
    curl http://icanhazip.com

    ip addr | grep inet $IP | \
        cut -d "/" -f 1 | \
        grep -v 127\.0 | \
        grep -v \:\:1 | \
        awk '{$1=$1};1'
}

Import the function into your shell:

$ source myfunctions

Test your new function:

$ myip
93.184.216.34
inet 192.168.0.23
inet6 fbd4:e85f:49c:2121:ce12:ef79:0e77:59d1
inet 10.8.42.38

Search for source

When you use source in Bash with a file name that doesn't contain a slash, Bash searches the directories in your PATH for the file you reference; if it doesn't find it there, it also searches your current directory (unless Bash is running in POSIX mode). Neither behavior is the default in all shells, so check your documentation if you're not using Bash.

These are both nice convenience features in Bash. This behavior is surprisingly powerful because it allows you to store common functions in a centralized location on your drive and then treat your environment like an integrated development environment (IDE). You don't have to worry about where your functions are stored, because you know they're in your local equivalent of /usr/include, so no matter where you are when you source them, Bash finds them.

For instance, you could create a directory called ~/.local/include as a storage area for common functions and then put this block of code into your .bashrc file:

for i in $HOME/.local/include/*; do
    source "$i"
done

This "imports" any file containing custom functions in ~/.local/include into your shell environment.

Bash searches both the current directory and your PATH when you use either the source or the . command; not every shell does.

Using source for open source

Using source or . to execute files can be a convenient way to affect your environment while keeping your alterations modular. The next time you're thinking of copying and pasting big blocks of code into your .bashrc file, consider placing related functions or groups of aliases into dedicated files, and then use source to ingest them.
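To see the difference between sourcing a file and running it, this hedged bash sketch writes a throwaway library to a temporary file (via mktemp; GREETING and lib_greet are hypothetical names), runs it once in a child bash process, and then sources it.

```shell
#!/usr/bin/env bash
# Write a throwaway "library" to a temporary file.
lib=$(mktemp)
cat > "$lib" <<'EOF'
GREETING="hello from the library"
lib_greet() { echo "$GREETING"; }
EOF

# Running it in a child bash process leaves this shell untouched...
bash "$lib"
declare -F lib_greet >/dev/null || echo "after bash: lib_greet is not defined here"

# ...while sourcing it runs the same code in the current shell.
source "$lib"
lib_greet    # hello from the library

rm -f "$lib"
```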


Jul 25, 2017 | wiki.bash-hackers.org

Intro

The day will come when you want to give arguments to your scripts. These arguments are known as positional parameters. Some relevant special parameters are described below:

Parameter(s) | Description
$0 | the first positional parameter, equivalent to argv[0] in C, see the first argument
$FUNCNAME | the function name (attention: inside a function, $0 is still the $0 of the shell, not the function name)
$1 ... $9 | the argument list elements from 1 to 9
${10} ... ${N} | the argument list elements beyond 9 (note the parameter expansion syntax!)
$* | all positional parameters except $0, see mass usage
$@ | all positional parameters except $0, see mass usage
$# | the number of arguments, not counting $0

These positional parameters reflect exactly what was given to the script when it was called.
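A quick bash check of the $FUNCNAME note in the table (report_name is a hypothetical function):

```shell
#!/usr/bin/env bash
report_name() {
  echo "FUNCNAME=$FUNCNAME"   # the name of the running function
  echo "\$0=$0"               # still the script's (or shell's) name
}

report_name
```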

Option-switch parsing (e.g. -h for displaying help) is not performed at this point.

See also the dictionary entry for "parameter".

The first argument

The very first argument you can access is referenced as $0. It is usually set to the script's name exactly as called, and it's set on shell initialization:

Testscript: it just echoes $0:

#!/bin/bash
echo "$0"

You see, $0 is always set to the name the script is called with (> is the prompt):

> ./testscript
./testscript
> /usr/bin/testscript
/usr/bin/testscript

However, this isn't true for login shells:

> echo "$0"
-bash

In other words, $0 is not a positional parameter; it's a special parameter independent from the positional parameter list. It can be set to anything. In the ideal case it's the pathname of the script, but since this gets set on invocation, the invoking program can easily influence it (the login program does that for login shells, by prefixing a dash, for example).

Inside a function, $0 still behaves as described above. To get the function name, use $FUNCNAME.

Shifting

The builtin command shift is used to change the positional parameter values:

- $1 will be discarded
- $2 will become $1
- $3 will become $2
- in general: $N will become $N-1

The command can take a number as argument: the number of positions to shift. For example, shift 4 shifts $5 to $1.

Using them

Enough theory, you want to access your script arguments. Well, here we go.

One by one

One way is to access specific parameters:

#!/bin/bash
echo "Total number of arguments: $#"
echo "Argument 1: $1"
echo "Argument 2: $2"
echo "Argument 3: $3"
echo "Argument 4: $4"
echo "Argument 5: $5"

While useful in other situations, this approach lacks flexibility. The maximum number of arguments is a fixed value, which is a bad idea if you write a script that takes many filenames as arguments.
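To see what shift does to the numbering, here is a small sketch; show_shift is a hypothetical function name. Because it runs inside a function, $FUNCNAME reports the function's name, while $# and $1 refer to the function's own arguments, not the script's.

```shell
#!/usr/bin/env bash
# Sketch: how shift renumbers the positional parameters inside a function.
# show_shift is an illustrative name, not part of the original text.
show_shift() {
    echo "$FUNCNAME got $# args, \$1 is '$1'"
    shift 2                      # drop $1 and $2; the old $3 becomes $1
    echo "after shift 2: $# args, \$1 is '$1'"
}

show_shift alpha beta gamma delta
# show_shift got 4 args, $1 is 'alpha'
# after shift 2: 2 args, $1 is 'gamma'
```

If fewer parameters remain than the shift count, bash leaves them unchanged and shift returns a non-zero status, so a construct like shift 2 || break can serve as a guard inside loops.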

⇒ forget that one

Loops

There are several ways to loop through the positional parameters.

You can code a C-style for-loop using $# as the end value. On every iteration, the shift command is used to shift the argument list:

numargs=$#
for ((i=1 ; i <= numargs ; i++))
do
    echo "$1"
    shift
done

Not very stylish, but usable. The numargs variable is used to store the initial value of $# because the shift command will change it as the script runs.
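If you want a C-style loop that leaves the positional parameters untouched, bash's indirect expansion ${!i} is one option (this variant is not part of the original text): with i=2 it expands to the value of $2, so no shift is needed. print_by_index is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: C-style loop over the positional parameters without shifting.
# ${!i} is bash indirect expansion: it expands the parameter named by $i.
print_by_index() {
    local i
    for ((i = 1; i <= $#; i++)); do
        printf '%d=%s\n' "$i" "${!i}"
    done
    echo "still $# args"          # nothing was shifted away
}

print_by_index first "second arg"
# 1=first
# 2=second arg
# still 2 args
```

Because $# never changes here, the loop bound can use it directly instead of a saved copy like numargs.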

Another way to iterate one argument at a time is the for loop without a given wordlist. The loop uses the positional parameters as a wordlist:

for arg
do
    echo "$arg"
done

Advantage: The positional parameters will be preserved.

The next method is similar to the first example (the for loop), but it doesn't test for reaching $#. It shifts and checks if $1 still expands to something, using the test command:

while [ "$1" ]
do
    echo "$1"
    shift
done

Looks nice, but has the disadvantage of stopping when $1 is empty (null string). Let's modify it to run as long as $1 is defined (but may be null), using parameter expansion for an alternate value:

while [ "${1+defined}" ]; do
    echo "$1"
    shift
done

Getopts

There is a small tutorial dedicated to getopts (under construction).

Mass usage

All Positional Parameters

Sometimes it's necessary to just "relay" or "pass" given arguments to another program. Doing that in one of these loops is inefficient and will most likely destroy the integrity of the arguments (spaces!).

The shell developers created $* and $@ for this purpose.

As an overview:

| Syntax | Effective result |
|---|---|
| $* | $1 $2 $3 ... ${N} |
| $@ | $1 $2 $3 ... ${N} |
| "$*" | "$1c$2c$3c...c${N}" |
| "$@" | "$1" "$2" "$3" ... "${N}" |

Without being quoted (double quotes), both have the same effect: All positional parameters from $1 to the last one used are expanded without any special handling, so the results are subject to word splitting and pathname expansion.

When the $* special parameter is double quoted, it expands to the equivalent of "$1c$2c$3c$4c...$N", where 'c' is the first character of IFS.

But when the $@ special parameter is used inside double quotes, it expands to the equivalent of

"$1" "$2" "$3" "$4" ... "$N"

which reflects all positional parameters as they were set initially and passed to the script or function. If you want to re-use your positional parameters to call another program (for example in a wrapper script), then this is the choice for you: use double-quoted "$@".

Well, let's just say: You almost always want a quoted "$@"!

Range Of Positional Parameters

Another way to mass expand the positional parameters is similar to what is possible for a range of characters using substring expansion on normal parameters and the mass expansion range of arrays.

${@:START:COUNT}
${*:START:COUNT}
"${@:START:COUNT}"
"${*:START:COUNT}"

The rules for using @ or * and quoting are the same as above. This will expand COUNT number of positional parameters beginning at START. COUNT can be omitted (${@:START}), in which case all positional parameters beginning at START are expanded.

If START is negative, the positional parameters are numbered in reverse starting with the last one.

COUNT may not be negative, i.e. the element count may not be decremented.

Example: START at the last positional parameter:

echo "${@: -1}"

Attention: As of Bash 4, a START of 0 includes the special parameter $0, i.e. the shell name or whatever $0 is set to, when the positional parameters are in use. A START of 1 begins at $1. In Bash 3 and older, both 0 and 1 began at $1.

Setting Positional Parameters

Setting positional parameters with command line arguments is not the only way to set them. The builtin command set may be used to "artificially" change the positional parameters from inside the script or function:

set "This is" my new "set of" positional parameters

# RESULTS IN
# $1: This is
# $2: my
# $3: new
# $4: set of
# $5: positional
# $6: parameters

It's wise to signal "end of options" when setting positional parameters this way. If not, the dashes might be interpreted as an option switch by set itself:

# both ways work, but behave differently. See the article about the set command!
set -- ...
set - ...

Alternatively, this form will also preserve any verbose (-v) or tracing (-x) flags, which may otherwise be reset by set:

set -$- ...
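The following sketch ties the mass-usage rules together: it fakes arguments with set --, then counts how many words each form produces. count_args is a hypothetical helper; the IFS change runs in a subshell so the caller's IFS is left alone.

```shell
#!/usr/bin/env bash
# Sketch: "$@" vs "$*" vs unquoted $*, plus range expansion.
# count_args is a hypothetical helper that reports how many words it received.
count_args() { echo "$#"; }

set -- "This is" my new "set of" words

count_args "$@"          # 5: every parameter stays a separate word
count_args "$*"          # 1: all parameters joined into a single word
count_args $*            # 7: unquoted, word splitting breaks up "This is" and "set of"

(IFS='-'; echo "$*")     # This is-my-new-set of-words  (joined with first char of IFS)

echo "${@:2:2}"          # my new
echo "${@: -1}"          # words
```

The unquoted count of 7 is exactly the "destroyed integrity" problem described above, which is why a wrapper should pass "$@".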

Production examples

Using a while loop

To make your program accept options as standard command syntax:

COMMAND [options] <params>   # Like 'cat -A file.txt'

See the simple option parsing code below. It's not that flexible. It doesn't auto-interpret combined options (-fu USER) but it works and is a good rudimentary way to parse your arguments.

#!/bin/sh
# Keeping options in alphabetical order makes it easy to add more.

while :
do
    case "$1" in
      -f | --file)
          file="$2"     # You may want to check validity of $2
          shift 2
          ;;
      -h | --help)
          display_help  # Call your function
          # no shifting needed here, we're done.
          exit 0
          ;;
      -u | --user)
          username="$2" # You may want to check validity of $2
          shift 2
          ;;
      -v | --verbose)
          # It's better to assign a string, than a number like "verbose=1"
          # because if you're debugging the script with "bash -x" code like this:
          #
          #    if [ "$verbose" ] ...
          #
          # You will see:
          #
          #    if [ "verbose" ] ...
          #
          # Instead of cryptic
          #
          #    if [ "1" ] ...
          #
          verbose="verbose"
          shift
          ;;
      --) # End of all options
          shift
          break
          ;;
      -*)
          echo "Error: Unknown option: $1" >&2
          exit 1
          ;;
      *)  # No more options
          break
          ;;
    esac
done

# End of file

Filter unwanted options with a wrapper script

This simple wrapper enables filtering unwanted options (here: -a and --all for ls) out of the command line. It reads the positional parameters and builds a filtered array consisting of them, then calls ls with the new option set. It also respects the -- as "end of options" for ls and doesn't change anything after it:

#!/bin/bash

# simple ls(1) wrapper that doesn't allow the -a option

options=()  # the buffer array for the parameters
eoo=0       # end of options reached

while [[ $1 ]]
do
    if ! ((eoo)); then
        case "$1" in
          -a)
              shift
              ;;
          --all)
              shift
              ;;
          -[^-]*a*|-a?*)
              options+=("${1//a}")
              shift
              ;;
          --)
              eoo=1
              options+=("$1")
              shift
              ;;
          *)
              options+=("$1")
              shift
              ;;
        esac
    else
        options+=("$1")

        # Another (worse) way of doing the same thing:
        # options=("${options[@]}" "$1")
        shift
    fi
done

/bin/ls "${options[@]}"

Using getopts

There is a small tutorial dedicated to getopts (under construction).

See also

- Internal: Small getopts tutorial
- Internal: The while-loop
- Internal: The C-style for-loop
- Internal: Arrays (for equivalent syntax for mass-expansion)
- Internal: Substring expansion on a parameter (for equivalent syntax for mass-expansion)
- Dictionary, internal: Parameter

Discussion

2010/04/14 14:20

The shell-developers invented $* and $@ for this purpose. Without being quoted (double-quoted), both have the same effect: All positional parameters from $1 to the last used one are expanded, separated by the first character of IFS (represented by "c" here, but usually a space):

$1c$2c$3c$4c........$N

Without double quotes, $* and $@ expand the positional parameters separated by only a space, not by IFS.

#!/bin/bash
export IFS='-'
echo -e $*
echo -e $@

$ ./test "This is" 2 3
This is 2 3
This is 2 3

2010/04/14 17:12 Thank you very much for this finding. I know how $* works, thus I can't understand why I described it that wrong. I guess it was in some late night session.

Thanks again.

2011/02/18 16:11

#!/bin/bash
OLDIFS="$IFS"
IFS='-'
#export IFS='-'

#echo -e $*
#echo -e $@
#should be
echo -e "$*"
echo -e "$@"
IFS="$OLDIFS"

2011/02/18 16:14 #should be echo -e "$*"

Dave Carlton, 2010/05/18 15:23 I would suggest using a different prompt as the $ is confusing to newbies. Otherwise, an excellent treatise on use of positional parameters.

2010/05/24 10:48 Thanks for the suggestion, I use "> " here now, and I'll change it in whatever text I edit in future (whole wiki). Let's see if "> " is okay.

2012/04/20 10:32 Here's yet another non-getopts way. http://bsdpants.blogspot.de/2007/02/option-ize-your-shell-scripts.html

2012/07/16 14:48 Hi there! What if I use "$@" in subsequent function calls, but arguments are strings?

I mean, having:

#!/bin/bash
echo "$@"
echo n: $#

If you use it

mypc$ script arg1 arg2 "asd asd" arg4
arg1 arg2 asd asd arg4
n: 4

But having

#!/bin/bash
myfunc()
{
    echo "$@"
    echo n: $#
}
echo "$@"
echo n: $#
myfunc "$@"

you get:

mypc$ myscrpt arg1 arg2 "asd asd" arg4
arg1 arg2 asd asd arg4
4
arg1 arg2 asd asd arg4
5

As you can see, there is no way to let the function know that a parameter is a string and not a space-separated list of arguments.

Any idea how to solve it? I've tested calling functions and doing expansion in almost all ways with no results.

2012/08/12 09:11 I don't know why it fails for you. It should work if you use "$@", of course. See the example I used your second script with:

$ ./args1 a b c "d e" f
a b c d e f
n: 5
a b c d e f
n: 5

2015/06/10 10:00 Thanks a lot for this tutorial. Especially the first example is very helpful.

Mar 30, 2018 | sookocheff.com

Parsing bash script options with getopts

Posted on January 4, 2015 | Kevin Sookocheff

A common task in shell scripting is to parse command line arguments to your script. Bash provides the getopts built-in function to do just that. This tutorial explains how to use the getopts built-in function to parse arguments and options to a bash script.

The getopts function takes three parameters. The first is a specification of which options are valid, listed as a sequence of letters. For example, the string 'ht' signifies that the options -h and -t are valid.

The second argument to getopts is a variable that will be populated with the option or argument to be processed next. In the following loop, opt will hold the value of the current option that has been parsed by getopts.

while getopts ":ht" opt; do
  case ${opt} in
    h ) # process option h
      ;;
    t ) # process option t
      ;;
    \? ) echo "Usage: cmd [-h] [-t]"
      ;;
  esac
done

This example shows a few additional features of getopts. First, if an invalid option is provided, the option variable is assigned the value ?. You can catch this case and provide an appropriate usage message to the user. Second, prefixing the list of valid options with : turns on silent error reporting: getopts then prints no error messages of its own and, for an invalid option, also stores the offending option letter in OPTARG. It is recommended to always disable the default error handling in your scripts.

The third argument to getopts is the list of arguments and options to be processed. When not provided, this defaults to the arguments and options provided to the application ($@). You can provide this third argument to use getopts to parse any list of arguments and options you provide.

Shifting processed options

The variable OPTIND holds the index of the next argument to be processed by getopts, so OPTIND - 1 is the number of arguments consumed so far. It is common practice to call the shift command at the end of your processing loop to remove options that have already been handled from $@.

shift $((OPTIND -1))

Parsing options with arguments

Options that themselves have arguments are signified with a :. The argument to an option is placed in the variable OPTARG. In the following example, the option t takes an argument. When the argument is provided, we copy its value to the variable target. If no argument is provided, getopts will set opt to : (because the option string starts with :). We can recognize this error condition by catching the : case and printing an appropriate error message.

while getopts ":t:" opt; do
  case ${opt} in
    t )
      target=$OPTARG
      ;;
    \? )
      echo "Invalid option: $OPTARG" 1>&2
      ;;
    : )
      echo "Invalid option: $OPTARG requires an argument" 1>&2
      ;;
  esac
done
shift $((OPTIND -1))

An extended example – parsing nested arguments and options

Let's walk through an extended example of processing a command that takes options, has a sub-command, and whose sub-command takes an additional option that has an argument. This is a mouthful so let's break it down using an example. Let's say we are writing our own version of the pip command. In this version you can call pip with the -h option to display a help message.

> pip -h
Usage:
    pip -h                      Display this help message.
    pip install                 Install a Python package.

We can use getopts to parse the -h option with the following while loop. In it we catch invalid options with \? and shift all arguments that have been processed with shift $((OPTIND -1)).

while getopts ":h" opt; do
  case ${opt} in
    h )
      echo "Usage:"
      echo "    pip -h                      Display this help message."
      echo "    pip install                 Install a Python package."
      exit 0
      ;;
    \? )
      echo "Invalid Option: -$OPTARG" 1>&2
      exit 1
      ;;
  esac
done
shift $((OPTIND -1))

Now let's add the sub-command install to our script. install takes as an argument the Python package to install.

> pip install urllib3

install also takes an option, -t. -t takes as an argument the location to install the package to relative to the current directory.

> pip install urllib3 -t ./src/lib

To process this line we must find the sub-command to execute. This value is the first argument to our script.

subcommand=$1
shift # Remove the sub-command (install) from the argument list

Now we can process the sub-command install. In our example, the option -t is actually an option that follows the package argument, so we begin by saving the package name and removing it from the argument list, then process the remainder of the line.

case "$subcommand" in
  install)
    package=$1
    shift # Remove the package name from the argument list
    ;;
esac

After shifting the argument list we can process the remaining arguments as if they are of the form -t src/lib. The -t option takes an argument itself. This argument will be stored in the variable OPTARG and we save it to the variable target for further work.

case "$subcommand" in
  install)
    package=$1
    shift # Remove the package name from the argument list

    while getopts ":t:" opt; do
      case ${opt} in
        t )
          target=$OPTARG
          ;;
        \? )
          echo "Invalid Option: -$OPTARG" 1>&2
          exit 1
          ;;
        : )
          echo "Invalid Option: -$OPTARG requires an argument" 1>&2
          exit 1
          ;;
      esac
    done
    shift $((OPTIND -1))
    ;;
esac

Putting this all together, we end up with the following script that parses arguments to our version of pip and its sub-command install.

package=""  # Default to empty package
target=""   # Default to empty target

# Parse options to the `pip` command
while getopts ":h" opt; do
  case ${opt} in
    h )
      echo "Usage:"
      echo "    pip -h                      Display this help message."
      echo "    pip install <package>       Install <package>."
      exit 0
      ;;
    \? )
      echo "Invalid Option: -$OPTARG" 1>&2
      exit 1
      ;;
  esac
done
shift $((OPTIND -1))

subcommand=$1; shift  # Remove the sub-command from the argument list
case "$subcommand" in
  # Parse options to the install sub command
  install)
    package=$1; shift  # Remove the package name from the argument list

    # Process package options
    while getopts ":t:" opt; do
      case ${opt} in
        t )
          target=$OPTARG
          ;;
        \? )
          echo "Invalid Option: -$OPTARG" 1>&2
          exit 1
          ;;
        : )
          echo "Invalid Option: -$OPTARG requires an argument" 1>&2
          exit 1
          ;;
      esac
    done
    shift $((OPTIND -1))
    ;;
esac

After processing the above sequence of commands, the variable package will hold the package to install and the variable target will hold the target to install the package to. You can use this as a template for processing any set of arguments and options to your scripts.
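One caveat when reusing this template: getopts keeps its position in OPTIND, so a second parse in the same shell (for example, a function called twice) has to start again from 1. Below is a sketch that wraps the install parsing in a hypothetical function parse_install, declaring OPTIND local and setting it to 1 so every call starts fresh; it returns instead of exiting so the caller decides what to do.

```shell
#!/usr/bin/env bash
# Sketch: the install sub-command parser as a reusable function.
# parse_install is a hypothetical name; package and target are left global
# on purpose so the caller can read them afterwards.
parse_install() {
    local OPTIND=1 opt           # fresh getopts state for every call
    package=$1
    shift                        # remove the package name
    target=""
    while getopts ":t:" opt; do
        case $opt in
            t)  target=$OPTARG ;;
            :)  echo "Invalid Option: -$OPTARG requires an argument" >&2; return 1 ;;
            \?) echo "Invalid Option: -$OPTARG" >&2; return 1 ;;
        esac
    done
    shift $((OPTIND - 1))        # whatever is left are extra operands
    echo "package=$package target=$target rest=$*"
}

parse_install urllib3 -t ./src/lib    # package=urllib3 target=./src/lib rest=
parse_install requests                # package=requests target= rest=
```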

Jul 10, 2017 | stackoverflow.com

Livven, Jul 10, 2017 at 8:11

Update: It's been more than 5 years since I started this answer. Thank you for LOTS of great edits/comments/suggestions. In order to save maintenance time, I've modified the code block to be 100% copy-paste ready. Please do not post comments like "What if you changed X to Y". Instead, copy-paste the code block, see the output, make the change, rerun the script, and comment "I changed X to Y and ...". I don't have time to test your ideas and tell you if they work.

Method #1: Using bash without getopt[s]

Two common ways to pass key-value-pair arguments are:

Bash Space-Separated (e.g., --option argument) (without getopt[s])

Usage

demo-space-separated.sh -e conf -s /etc -l /usr/lib /etc/hosts

cat >/tmp/demo-space-separated.sh <<'EOF'
#!/bin/bash

POSITIONAL=()
while [[ $# -gt 0 ]]
do
    key="$1"

    case $key in
        -e|--extension)
            EXTENSION="$2"
            shift # past argument
            shift # past value
            ;;
        -s|--searchpath)
            SEARCHPATH="$2"
            shift # past argument
            shift # past value
            ;;
        -l|--lib)
            LIBPATH="$2"
            shift # past argument
            shift # past value
            ;;
        --default)
            DEFAULT=YES
            shift # past argument
            ;;
        *)    # unknown option
            POSITIONAL+=("$1") # save it in an array for later
            shift # past argument
            ;;
    esac
done
set -- "${POSITIONAL[@]}" # restore positional parameters

echo "FILE EXTENSION = ${EXTENSION}"
echo "SEARCH PATH = ${SEARCHPATH}"
echo "LIBRARY PATH = ${LIBPATH}"
echo "DEFAULT = ${DEFAULT}"
echo "Number files in SEARCH PATH with EXTENSION:" $(ls -1 "${SEARCHPATH}"/*."${EXTENSION}" | wc -l)
if [[ -n $1 ]]; then
    echo "Last line of file specified as non-opt/last argument:"
    tail -1 "$1"
fi
EOF

chmod +x /tmp/demo-space-separated.sh

/tmp/demo-space-separated.sh -e conf -s /etc -l /usr/lib /etc/hosts

output from copy-pasting the block above:

FILE EXTENSION = conf
SEARCH PATH = /etc
LIBRARY PATH = /usr/lib
DEFAULT =
Number files in SEARCH PATH with EXTENSION: 14
Last line of file specified as non-opt/last argument:
#93.184.216.34 example.com

Bash Equals-Separated (e.g., --option=argument) (without getopt[s])

Usage

demo-equals-separated.sh -e=conf -s=/etc -l=/usr/lib /etc/hosts

cat >/tmp/demo-equals-separated.sh <<'EOF'
#!/bin/bash

for i in "$@"
do
    case $i in
        -e=*|--extension=*)
            EXTENSION="${i#*=}"
            shift # past argument=value
            ;;
        -s=*|--searchpath=*)
            SEARCHPATH="${i#*=}"
            shift # past argument=value
            ;;
        -l=*|--lib=*)
            LIBPATH="${i#*=}"
            shift # past argument=value
            ;;
        --default)
            DEFAULT=YES
            shift # past argument with no value
            ;;
        *)
            # unknown option
            ;;
    esac
done
echo "FILE EXTENSION = ${EXTENSION}"
echo "SEARCH PATH = ${SEARCHPATH}"
echo "LIBRARY PATH = ${LIBPATH}"
echo "DEFAULT = ${DEFAULT}"
echo "Number files in SEARCH PATH with EXTENSION:" $(ls -1 "${SEARCHPATH}"/*."${EXTENSION}" | wc -l)
if [[ -n $1 ]]; then
    echo "Last line of file specified as non-opt/last argument:"
    tail -1 "$1"
fi
EOF

chmod +x /tmp/demo-equals-separated.sh

/tmp/demo-equals-separated.sh -e=conf -s=/etc -l=/usr/lib /etc/hosts

output from copy-pasting the block above:

FILE EXTENSION = conf
SEARCH PATH = /etc
LIBRARY PATH = /usr/lib
DEFAULT =
Number files in SEARCH PATH with EXTENSION: 14
Last line of file specified as non-opt/last argument:
#93.184.216.34 example.com

To better understand ${i#*=} search for "Substring Removal" in this guide. It is functionally equivalent to `sed 's/[^=]*=//' <<< "$i"` which calls a needless subprocess or `echo "$i" | sed 's/[^=]*=//'` which calls two needless subprocesses.

Method #2: Using bash with getopt[s]

from: http://mywiki.wooledge.org/BashFAQ/035#getopts

getopt(1) limitations (older, relatively-recent getopt versions):

- can't handle arguments that are empty strings

- can't handle arguments with embedded whitespace

More recent getopt versions don't have these limitations.

Additionally, the POSIX shell (and others) offer getopts which doesn't have these limitations. I've included a simplistic getopts example.

Usage

demo-getopts.sh -vf /etc/hosts foo bar

cat >/tmp/demo-getopts.sh <<'EOF'
#!/bin/sh

# A POSIX variable
OPTIND=1         # Reset in case getopts has been used previously in the shell.

# Initialize our own variables:
output_file=""
verbose=0

# Minimal placeholder; replace with your own help text.
show_help() {
    echo "Usage: $0 [-h] [-v] [-f output_file] [args...]"
}

while getopts "h?vf:" opt; do
    case "$opt" in
    h|\?)
        show_help
        exit 0
        ;;
    v)  verbose=1
        ;;
    f)  output_file=$OPTARG
        ;;
    esac
done

shift $((OPTIND-1))

[ "${1:-}" = "--" ] && shift

echo "verbose=$verbose, output_file='$output_file', Leftovers: $@"
EOF

chmod +x /tmp/demo-getopts.sh

/tmp/demo-getopts.sh -vf /etc/hosts foo bar

output from copy-pasting the block above:

verbose=1, output_file='/etc/hosts', Leftovers: foo bar

The advantages of getopts are:

- It's more portable, and will work in other shells like dash.
- It can handle multiple single options like -vf filename in the typical Unix way, automatically.

The disadvantage of getopts is that it can only handle short options (-h, not --help) without additional code.

There is a getopts tutorial which explains what all of the syntax and variables mean. In bash, there is also help getopts, which might be informative.

johncip, Jul 23, 2018 at 15:15

No answer mentions enhanced getopt. And the top-voted answer is misleading: It either ignores -vfd style short options (requested by the OP) or options after positional arguments (also requested by the OP); and it ignores parsing errors. Instead:

- Use enhanced getopt from util-linux or formerly GNU glibc.¹
- It works with getopt_long(), the C function of GNU glibc.
- Has all useful distinguishing features (the others don't have them):
  - handles spaces, quoting characters and even binary in arguments² (non-enhanced getopt can't do this)
  - it can handle options at the end: script.sh -o outFile file1 file2 -v (getopts doesn't do this)
  - allows =-style long options: script.sh --outfile=fileOut --infile fileIn (allowing both is lengthy if self parsing)
  - allows combined short options, e.g. -vfd (real work if self parsing)
  - allows touching option-arguments, e.g. -oOutfile or -vfdoOutfile
- Is so old already³ that no GNU system is missing this (e.g. any Linux has it).
- You can test for its existence with: getopt --test → return value 4.
- Other getopt or shell-builtin getopts are of limited use.

The following calls

myscript -vfd ./foo/bar/someFile -o /fizz/someOtherFile
myscript -v -f -d -o/fizz/someOtherFile -- ./foo/bar/someFile
myscript --verbose --force --debug ./foo/bar/someFile -o/fizz/someOtherFile
myscript --output=/fizz/someOtherFile ./foo/bar/someFile -vfd
myscript ./foo/bar/someFile -df -v --output /fizz/someOtherFile

all return

verbose: y, force: y, debug: y, in: ./foo/bar/someFile, out: /fizz/someOtherFile

with the following myscript:

#!/bin/bash
# saner programming env: these switches turn some bugs into errors
set -o errexit -o pipefail -o noclobber -o nounset

# -allow a command to fail with !'s side effect on errexit
# -use return value from ${PIPESTATUS[0]}, because ! hosed $?
! getopt --test > /dev/null
if [[ ${PIPESTATUS[0]} -ne 4 ]]; then
    echo "I'm sorry, 'getopt --test' failed in this environment."
    exit 1
fi

OPTIONS=dfo:v
LONGOPTS=debug,force,output:,verbose

# -regarding ! and PIPESTATUS see above
# -temporarily store output to be able to check for errors
# -activate quoting/enhanced mode (e.g. by writing out "--options")
# -pass arguments only via   -- "$@"   to separate them correctly
! PARSED=$(getopt --options=$OPTIONS --longoptions=$LONGOPTS --name "$0" -- "$@")
if [[ ${PIPESTATUS[0]} -ne 0 ]]; then
    # e.g. return value is 1
    #  then getopt has complained about wrong arguments to stderr
    exit 2
fi
# read getopt's output this way to handle the quoting right:
eval set -- "$PARSED"

d=n f=n v=n outFile=-
# now enjoy the options in order and nicely split until we see --
while true; do
    case "$1" in
        -d|--debug)
            d=y
            shift
            ;;
        -f|--force)
            f=y
            shift
            ;;
        -v|--verbose)
            v=y
            shift
            ;;
        -o|--output)
            outFile="$2"
            shift 2
            ;;
        --)
            shift
            break
            ;;
        *)
            echo "Programming error"
            exit 3
            ;;
    esac
done

# handle non-option arguments
if [[ $# -ne 1 ]]; then
    echo "$0: A single input file is required."
    exit 4
fi

echo "verbose: $v, force: $f, debug: $d, in: $1, out: $outFile"

¹ enhanced getopt is available on most "bash-systems", including Cygwin; on OS X try brew install gnu-getopt or sudo port install getopt

² the POSIX exec() conventions have no reliable way to pass binary NULL in command line arguments; those bytes prematurely end the argument

³ first version released in 1997 or before (I only tracked it back to 1997)

Tobias Kienzler, Mar 19, 2016 at 15:23

from: digitalpeer.com with minor modifications

Usage

myscript.sh -p=my_prefix -s=dirname -l=libname

#!/bin/bash
for i in "$@"
do
    case $i in
        -p=*|--prefix=*)
            PREFIX="${i#*=}"
            ;;
        -s=*|--searchpath=*)
            SEARCHPATH="${i#*=}"
            ;;
        -l=*|--lib=*)
            DIR="${i#*=}"
            ;;
        --default)
            DEFAULT=YES
            ;;
        *)
            # unknown option
            ;;
    esac
done
echo PREFIX = ${PREFIX}
echo SEARCH PATH = ${SEARCHPATH}
echo DIRS = ${DIR}
echo DEFAULT = ${DEFAULT}

To better understand ${i#*=} search for "Substring Removal" in this guide. It is functionally equivalent to `sed 's/[^=]*=//' <<< "$i"` which calls a needless subprocess or `echo "$i" | sed 's/[^=]*=//'` which calls two needless subprocesses.

Robert Siemer, Jun 1, 2018 at 1:57

getopt()/getopts() is a good option. Stolen from here:

The simple use of "getopt" is shown in this mini-script:

#!/bin/bash
echo "Before getopt"
for i
do
  echo $i
done
args=`getopt abc:d $*`
set -- $args
echo "After getopt"
for i
do
  echo "-->$i"
done

What we have said is that any of -a, -b, -c or -d will be allowed, but that -c is followed by an argument (the "c:" says that).

If we call this "g" and try it out:

bash-2.05a$ ./g -abc foo
Before getopt
-abc
foo
After getopt
-->-a
-->-b
-->-c
-->foo
-->--

We start with two arguments, and "getopt" breaks apart the options and puts each in its own argument. It also added "--".
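For comparison, a sketch of the same option string abc:d handled with the getopts builtin instead of the external getopt; parse_g is a hypothetical name. Because getopts reads the arguments directly, an option argument containing a space, such as "foo bar", stays a single word, which the unquoted set -- $args in the mini-script cannot guarantee.

```shell
#!/usr/bin/env bash
# Sketch: getopts counterpart of the "g" mini-script (option string abc:d).
# parse_g is an illustrative name, not from the original answer.
parse_g() {
    local OPTIND=1 opt
    while getopts "abc:d" opt; do
        case $opt in
            c)  echo "-c with '$OPTARG'" ;;
            \?) return 1 ;;              # getopts already printed an error message
            *)  echo "-$opt" ;;
        esac
    done
    shift $((OPTIND - 1))                # drop everything getopts consumed
    for arg; do echo "operand: $arg"; done
}

parse_g -abc "foo bar" baz
# -a
# -b
# -c with 'foo bar'
# operand: baz
```

Unlike getopt, getopts does not insert a "--" marker; the loop simply stops at the first non-option, and OPTIND tells you where that was.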

hfossli ,Jan 31 at 20:05

More succinct way

script.sh

#!/bin/bash

while [[ "$#" -gt 0 ]]; do
    case $1 in
        -d|--deploy) deploy="$2"; shift;;
        -u|--uglify) uglify=1;;
        *) echo "Unknown parameter passed: $1"; exit 1;;
    esac
    shift
done

echo "Should deploy? $deploy"
echo "Should uglify? $uglify"

Usage:

./script.sh -d dev -u

# OR:

./script.sh --deploy dev --uglify

bronson, Apr 27 at 23:22

At the risk of adding another example to ignore, here's my scheme.

- handles -n arg and --name=arg
- allows arguments at the end

- shows sane errors if anything is misspelled

- compatible, doesn't use bashisms

- readable, doesn't require maintaining state in a loop

Hope it's useful to someone.

while [ "$#" -gt 0 ]; do
  case "$1" in
    -n) name="$2"; shift 2;;
    -p) pidfile="$2"; shift 2;;
    -l) logfile="$2"; shift 2;;

    --name=*) name="${1#*=}"; shift 1;;
    --pidfile=*) pidfile="${1#*=}"; shift 1;;
    --logfile=*) logfile="${1#*=}"; shift 1;;
    --name|--pidfile|--logfile) echo "$1 requires an argument" >&2; exit 1;;

    -*) echo "unknown option: $1" >&2; exit 1;;
    *) handle_argument "$1"; shift 1;;
  esac
done

Robert Siemer, Jun 6, 2016 at 19:28

I'm about 4 years late to this question, but want to give back. I used the earlier answers as a starting point to tidy up my old adhoc param parsing. I then refactored out the following template code. It handles both long and short params, using = or space separated arguments, as well as multiple short params grouped together. Finally it re-inserts any non-param arguments back into the $1, $2.. variables. I hope it's useful.

#!/usr/bin/env bash

# NOTICE: Uncomment if your script depends on bashisms.
#if [ -z "$BASH_VERSION" ]; then bash $0 $@ ; exit $? ; fi

echo "Before"
for i ; do echo - $i ; done

# Code template for parsing command line parameters using only portable shell
# code, while handling both long and short params, handling '-f file' and
# '-f=file' style param data and also capturing non-parameters to be inserted
# back into the shell positional parameters.

while [ -n "$1" ]; do
    # Copy so we can modify it (can't modify $1)
    OPT="$1"
    # Detect argument termination
    if [ x"$OPT" = x"--" ]; then
        shift
        for OPT ; do
            REMAINS="$REMAINS \"$OPT\""
        done
        break
    fi
    # Parse current opt
    while [ x"$OPT" != x"-" ] ; do
        case "$OPT" in
            # Handle --flag=value opts like this
            -c=* | --config=* )
                CONFIGFILE="${OPT#*=}"
                shift
                ;;
            # and --flag value opts like this
            -c* | --config )
                CONFIGFILE="$2"
                shift
                ;;
            -f* | --force )
                FORCE=true
                ;;
            -r* | --retry )
                RETRY=true
                ;;
            # Anything unknown is recorded for later
            * )
                REMAINS="$REMAINS \"$OPT\""
                break
                ;;
        esac
        # Check for multiple short options
        # NOTICE: be sure to update this pattern to match valid options
        NEXTOPT="${OPT#-[cfr]}" # try removing single short opt
        if [ x"$OPT" != x"$NEXTOPT" ] ; then
            OPT="-$NEXTOPT"  # multiple short opts, keep going
        else
            break  # long form, exit inner loop
        fi
    done
    # Done with that param. move to next
    shift
done
# Set the non-parameters back into the positional parameters ($1 $2 ..)
eval set -- $REMAINS

echo -e "After: \n configfile='$CONFIGFILE' \n force='$FORCE' \n retry='$RETRY' \n remains='$REMAINS'"
for i ; do echo - $i ; done

I have found writing portable argument parsing in scripts so frustrating that I have written Argbash - a FOSS code generator that can generate the arguments-parsing code for your script plus it has some nice features:

May 10, 2013 | stackoverflow.com

An example of how to use getopts in bash

chepner ,May 10, 2013 at 13:42

I want to call myscript file in this way:

$ ./myscript -s 45 -p any_string

or

$ ./myscript -h  >>> should display help
$ ./myscript     >>> should display help

My requirements are:

- getopt here to get the input arguments
- check that -s exists, if not return error
- check that the value after the -s is 45 or 90
- check that the -p exists and there is an input string after
- if the user enters ./myscript -h or just ./myscript then display help

I tried so far this code:

#!/bin/bash
while getopts "h:s:" arg; do
  case $arg in
    h)
      echo "usage"
      ;;
    s)
      strength=$OPTARG
      echo $strength
      ;;
  esac
done

But with that code I get errors. How to do it with Bash and getopt?

#!/bin/bash

usage() { echo "Usage: $0 [-s <45|90>] [-p <string>]" 1>&2; exit 1; }

while getopts ":s:p:" o; do
    case "${o}" in
        s)
            s=${OPTARG}
            ((s == 45 || s == 90)) || usage
            ;;
        p)
            p=${OPTARG}
            ;;
        *)
            usage
            ;;
    esac
done
shift $((OPTIND-1))

if [ -z "${s}" ] || [ -z "${p}" ]; then
    usage
fi

echo "s = ${s}"
echo "p = ${p}"

Example runs:

$ ./myscript.sh
Usage: ./myscript.sh [-s <45|90>] [-p <string>]

$ ./myscript.sh -h
Usage: ./myscript.sh [-s <45|90>] [-p <string>]

$ ./myscript.sh -s "" -p ""
Usage: ./myscript.sh [-s <45|90>] [-p <string>]

$ ./myscript.sh -s 10 -p foo
Usage: ./myscript.sh [-s <45|90>] [-p <string>]

$ ./myscript.sh -s 45 -p foo
s = 45
p = foo

$ ./myscript.sh -s 90 -p bar
s = 90
p = bar

Aug 07, 2016 | shapeshed.com

Tutorial on using exit codes from Linux or UNIX commands. Examples of how to get the exit code of a command, how to set the exit code and how to suppress exit codes.

What is an exit code in the UNIX or Linux shell?

An exit code, sometimes known as a return code, is the code returned to a parent process by an executable. On POSIX systems the standard exit code is 0 for success and any number from 1 to 255 for anything else.

Exit codes can be interpreted by machine scripts to adapt in the event of successes or failures. If exit codes are not set the exit code will be the exit code of the last run command.

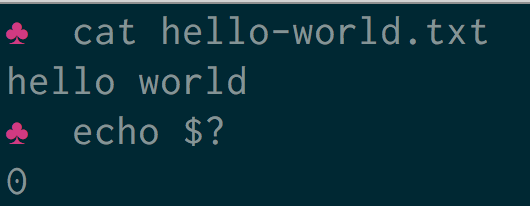

How to get the exit code of a command

To get the exit code of a command, type echo $? at the command prompt. In the following example a file is printed to the terminal using the cat command.

$ cat file.txt
hello world
$ echo $?
0

The command was successful. The file exists and there are no errors in reading the file or writing it to the terminal. The exit code is therefore 0.

In the following example the file does not exist.

$ cat doesnotexist.txt
cat: doesnotexist.txt: No such file or directory
$ echo $?
1

The exit code is 1 as the operation was not successful.

How to use exit codes in scripts

To use exit codes in scripts, an if statement can be used to see if an operation was successful.

#!/bin/bash
cat file.txt
if [ $? -eq 0 ]
then
  echo "The script ran ok"
  exit 0
else
  echo "The script failed" >&2
  exit 1
fi

If the command was successful, the exit code will be 0 and 'The script ran ok' will be printed to the terminal. Otherwise 'The script failed' is printed to standard error and the script exits with 1.

How to set an exit code

To set an exit code in a script, use exit 0, where 0 is the number you want to return. In the following example a shell script exits with a 1. This file is saved as exit.sh.

#!/bin/bash
exit 1

Executing this script shows that the exit code is correctly set.

$ bash exit.sh
$ echo $?
1

What exit code should I use?

The Linux Documentation Project has a list of reserved codes that also offers advice on what code to use for specific scenarios. These are the standard error codes in Linux or UNIX.

1 - Catchall for general errors
2 - Misuse of shell builtins (according to Bash documentation)
126 - Command invoked cannot execute
127 - "command not found"
128 - Invalid argument to exit
128+n - Fatal error signal "n"
130 - Script terminated by Control-C
255 - Exit status out of range

How to suppress exit statuses

Sometimes there may be a requirement to suppress an exit status. It may be that a command is being run within another script and that anything other than a 0 status is undesirable.

In the following example a file is printed to the terminal using cat. This file does not exist, so it will cause an exit status of 1. To suppress the error message, any output to standard error is sent to /dev/null using 2>/dev/null.

If the cat command fails, an OR operation can be used to provide a fallback: cat file.txt || exit 0. In this case an exit code of 0 is returned even if there is an error.

Combining both the suppression of error output and the OR operation, the following script returns a status code of 0 with no output even though the file does not exist.

#!/bin/bash
cat 'doesnotexist.txt' 2>/dev/null || exit 0
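Two details round off this section. First, $? is overwritten by every command, including [ itself. Save it immediately if you need it more than once, or test the command directly with if cmd; then, which avoids the separate $? check altogether. Second, the 128+n row of the table can be observed directly: a child shell killed by SIGTERM (signal 15) reports 128 + 15 = 143 to its parent. This is a minimal bash sketch. The names show_file and status_after_signal are hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: print a file and report the outcome. The command is
# tested directly by "if", so there is no separate "$?" comparison.
show_file() {
    if cat -- "$1" 2>/dev/null; then
        echo "The script ran ok"
    else
        local rc=$?          # still cat's status: nothing else has run yet
        echo "cat failed with status $rc" >&2
        return "$rc"
    fi
}

# Hypothetical helper: print the status a parent shell sees when a child
# shell is terminated by the named signal (e.g. TERM -> 128 + 15 = 143).
status_after_signal() {
    local rc=0
    bash -c 'kill -s "$1" "$$"' _ "$1" || rc=$?
    echo "$rc"
}
```

The "|| rc=$?" form records a non-zero status without letting it end a script that runs under set -e.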

Aug 26, 2019 | www.shellscript.sh

Exit codes are a number between 0 and 255, which is returned by any Unix command when it returns control to its parent process.

Other numbers can be used, but these are treated modulo 256, so exit -10 is equivalent to exit 246, and exit 257 is equivalent to exit 1. These can be used within a shell script to change the flow of execution depending on the success or failure of commands executed. This was briefly introduced in Variables - Part II. Here we shall look in more detail at the available interpretations of exit codes.
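The modulo-256 rule is easy to check from a subshell, because ( exit N ) sets $? without ending the current shell. This is a minimal bash sketch. The helper name status_of is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: run "exit N" in a subshell and print the status the
# parent shell actually receives. "|| rc=$?" records a non-zero status
# without tripping "set -e".
status_of() {
    local rc=0
    ( exit "$1" ) || rc=$?
    echo "$rc"
}

status_of 1      # 1
status_of 256    # 0   (256 mod 256)
status_of 257    # 1   (257 mod 256)
status_of 300    # 44  (300 - 256)
```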

Success is traditionally represented with exit 0; failure is normally indicated with a non-zero exit code. This value can indicate different reasons for failure.

For example, GNU grep returns 0 on success, 1 if no matches were found, and 2 for other errors (syntax errors, non-existent input files, etc.).

We shall look at three different methods for checking error status, and discuss the pros and cons of each approach.
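Because grep distinguishes "no match" (1) from a real error (2 or more), a script can save the status once and branch on it with case, rather than treating every non-zero value the same way. This is a minimal sketch, assuming a grep that follows the POSIX exit-status conventions. find_user is a hypothetical name, and the optional second argument (defaulting to /etc/passwd) exists only to make the function easy to test:

```shell
#!/bin/bash
# Hypothetical helper: look up a user in a passwd-format file and tell
# "not found" (grep status 1) apart from an error such as a missing file.
find_user() {
    local user=$1 file=${2:-/etc/passwd} rc=0
    grep -q "^${user}:" "$file" 2>/dev/null || rc=$?
    case $rc in
        0) echo "found" ;;
        1) echo "not found" ;;
        *) echo "error (grep status $rc)" ;;
    esac
    return "$rc"
}
```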

Firstly, the simple approach:

#!/bin/sh
# First attempt at checking return codes
USERNAME=`grep "^${1}:" /etc/passwd|cut -d":" -f1`
if [ "$?" -ne "0" ]; then
  echo "Sorry, cannot find user ${1} in /etc/passwd"
  exit 1
fi
NAME=`grep "^${1}:" /etc/passwd|cut -d":" -f5`
HOMEDIR=`grep "^${1}:" /etc/passwd|cut -d":" -f6`

echo "USERNAME: $USERNAME"
echo "NAME: $NAME"
echo "HOMEDIR: $HOMEDIR"

This script works fine if you supply a valid username in /etc/passwd. However, if you enter an invalid username, it does not do what you might at first expect. It keeps running, and just shows:

USERNAME:
NAME:
HOMEDIR:

Why is this? As mentioned, the $? variable is set to the return code of the last executed command, and the status of a pipeline is that of its last command. In this case, that is cut. cut had no problems which it feels like reporting; as far as I can tell from testing it and reading the documentation, cut returns zero whatever happens! It was fed an empty string, and did its job: it returned the first field of its input, which just happened to be the empty string.

So what do we do? If we have an error here, grep will report it, not cut. Therefore, we have to test grep's return code, not cut's.

#!/bin/sh
# Second attempt at checking return codes
grep "^${1}:" /etc/passwd > /dev/null 2>&1
if [ "$?" -ne "0" ]; then
  echo "Sorry, cannot find user ${1} in /etc/passwd"
  exit 1
fi
USERNAME=`grep "^${1}:" /etc/passwd|cut -d":" -f1`
NAME=`grep "^${1}:" /etc/passwd|cut -d":" -f5`
HOMEDIR=`grep "^${1}:" /etc/passwd|cut -d":" -f6`

echo "USERNAME: $USERNAME"
echo "NAME: $NAME"
echo "HOMEDIR: $HOMEDIR"

This fixes the problem for us, though at the expense of slightly longer code.

That is the basic way which textbooks might show you, but it is far from being all there is to know about error-checking in shell scripts. This method may not be the most suitable for your particular command sequence, or may be unmaintainable. Below, we shall investigate two alternative approaches.

As a second approach, we can tidy this somewhat by putting the test into a separate function, instead of littering the code with lots of 4-line tests:

#!/bin/sh
# A tidier approach

check_errs()
{
  # Function. Parameter 1 is the return code
  # Para. 2 is text to display on failure.
  if [ "${1}" -ne "0" ]; then
    echo "ERROR # ${1} : ${2}"
    # as a bonus, make our script exit with the right error code.
    exit ${1}
  fi
}

### main script starts here ###

grep "^${1}:" /etc/passwd > /dev/null 2>&1
check_errs $? "User ${1} not found in /etc/passwd"
USERNAME=`grep "^${1}:" /etc/passwd|cut -d":" -f1`
check_errs $? "Cut returned an error"
echo "USERNAME: $USERNAME"
check_errs $? "echo returned an error - very strange!"

This allows us to test for errors 3 times, with customised error messages, without having to write 3 individual tests. By writing the test routine once, we can call it as many times as we wish, creating a more intelligent script at very little expense to the programmer. Perl programmers will recognise this as being similar to the die command in Perl.

As a third approach, we shall look at a simpler and cruder method. I tend to use this for building Linux kernels: simple automations which, if they go well, should just get on with it, but when things go wrong, tend to require the operator to do something intelligent (i.e., that which a script cannot do!):

#!/bin/sh
cd /usr/src/linux && \
make dep && make bzImage && make modules && make modules_install && \
cp arch/i386/boot/bzImage /boot/my-new-kernel && cp System.map /boot && \
echo "Your new kernel awaits, m'lord."

This script runs through the various tasks involved in building a Linux kernel (which can take quite a while), and uses the && operator to check for success. To do this with if would involve:

#!/bin/sh
cd /usr/src/linux
if [ "$?" -eq "0" ]; then
  make dep
  if [ "$?" -eq "0" ]; then
    make bzImage
    if [ "$?" -eq "0" ]; then
      make modules
      if [ "$?" -eq "0" ]; then
        make modules_install
        if [ "$?" -eq "0" ]; then
          cp arch/i386/boot/bzImage /boot/my-new-kernel
          if [ "$?" -eq "0" ]; then
            cp System.map /boot/
            if [ "$?" -eq "0" ]; then
              echo "Your new kernel awaits, m'lord."
            fi
          fi
        fi
      fi
    fi
  fi
fi

... which I, personally, find pretty difficult to follow.
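A flatter way to get the same stop-at-the-first-failure behaviour without nesting is to follow each step with || exit in a script (or || return in a function). With no argument, both use the status of the command that just failed. Here is a minimal sketch of the pattern on a harmless task. copy_into_dir is a hypothetical example, not part of the original kernel script:

```shell
#!/bin/bash
# Hypothetical example of the guard-clause style: each step either
# succeeds or the function returns that step's exit status at once.
copy_into_dir() {
    local src=$1 dir=$2
    [ -r "$src" ]     || return      # status 1: source is not readable
    mkdir -p "$dir"   || return
    cp "$src" "$dir/" || return
    echo "copied $src to $dir"
}
```

Applied to the kernel build, each make or cp line would end in || exit, which keeps the sequence as easy to read as the && chain.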

The && and || operators are the shell's equivalent of AND and OR tests. These can be thrown together as above, or:

#!/bin/sh
cp /foo /bar && echo Success || echo Failed

This code will either echo Success or Failed depending on whether or not the cp command was successful. Look carefully at this; the construct is:

command && command-to-execute-on-success || command-to-execute-on-failure

Only one command can be in each part. This method is handy for simple success/fail scenarios, but if you want to check on the status of the echo commands themselves, it is easy to quickly become confused about which && and || applies to which command. It is also very difficult to maintain. Therefore this construct is only recommended for simple sequencing of commands.

In earlier versions, I had suggested that you can use a subshell to execute multiple commands depending on whether the cp command succeeded or failed:

cp /foo /bar && ( echo Success ; echo Success part II; ) || ( echo Failed ; echo Failed part II )

But in fact, Marcel found that this does not work properly. The syntax for a subshell is:

( command1 ; command2; command3 )

The return code of the subshell is the return code of the final command (command3 in this example). That return code will affect the overall command. So the output of this script:

cp /foo /bar && ( echo Success ; echo Success part II; /bin/false ) || ( echo Failed ; echo Failed part II )

is that it runs the Success part (because cp succeeded), and then, because /bin/false returns failure, it also executes the Failure part:

Success
Success part II
Failed
Failed part II

So if you need to execute multiple commands as a result of the status of some other condition, it is better (and much clearer) to use the standard if, then, else syntax.
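The difference can be shown side by side. This is a minimal sketch: and_or_form and if_else_form are hypothetical names, the file names are passed as arguments instead of the fixed /foo and /bar, and a { ...; } group stands in for the subshell (its status is likewise that of its last command):

```shell
#!/bin/bash
# && / || form: if the "success" group ends with a failing command,
# the "failure" command runs as well.
and_or_form() {
    cp "$1" "$2" 2>/dev/null && { echo "Success"; false; } || echo "Failed"
}

# if / then / else form: exactly one branch runs, chosen by cp's status
# alone, and each branch may contain as many commands as needed.
if_else_form() {
    if cp "$1" "$2" 2>/dev/null; then
        echo "Success"
        false          # does not cause the else branch to run
    else
        echo "Failed"
    fi
}
```

When the copy succeeds, and_or_form prints both Success and Failed, while if_else_form prints only Success.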

Jun 18, 2019 | linuxconfig.org

Before proceeding further, let me give you one tip. In the example above the shell tried to expand a non-existing variable, producing a blank result. This can be very dangerous, especially when working with path names. Therefore, when writing scripts, it's always recommended to use the nounset option, which causes the shell to exit with an error whenever a non-existing variable is referenced:

$ set -o nounset
$ echo "You are reading this article on $site_!"
bash: site_: unbound variable

Working with indirection

The use of the ${!parameter} syntax adds a level of indirection to our parameter expansion. What does it mean? The parameter which the shell will try to expand is not parameter; instead it will use the value of parameter as the name of the variable to be expanded. Let's explain this with an example. We all know the HOME variable expands to the path of the user's home directory on the system, right?

$ echo "${HOME}"
/home/egdoc

Very well: if we now assign the string "HOME" to another variable and use this type of expansion, we obtain:

$ variable_to_inspect="HOME"
$ echo "${!variable_to_inspect}"
/home/egdoc

As you can see in the example above, instead of obtaining "HOME" as a result, as would have happened with a simple expansion, the shell used the value of variable_to_inspect as the name of the variable to expand. That's why we talk about a level of indirection.

Case modification expansion

This parameter expansion syntax lets us change the case of the alphabetic characters inside the string resulting from the expansion of the parameter. Say we have a variable called name; to capitalize the text returned by the expansion of the variable we would use the ${parameter^} syntax:

$ name="egidio"
$ echo "${name^}"
Egidio

What if we want to uppercase the entire string, instead of capitalizing it? Easy! We use the ${parameter^^} syntax:

$ echo "${name^^}"
EGIDIO

Similarly, to lowercase the first character of a string, we use the ${parameter,} expansion syntax:

$ name="EGIDIO"
$ echo "${name,}"
eGIDIO

To lowercase the entire string, instead, we use the ${parameter,,} syntax:

$ name="EGIDIO"
$ echo "${name,,}"
egidio

In all cases a pattern to match a single character can also be provided. When the pattern is provided, the operation is applied only to the parts of the original string that match it:

$ name="EGIDIO"
$ echo "${name,,[DIO]}"
EGidio

In the example above we enclose the characters in square brackets: this causes any one of them to be matched as a pattern.
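A common practical use of these expansions is case-insensitive input handling. You can lowercase a reply once with ${var,,} before comparing it, and use ${var^} when formatting a name for display. This is a minimal sketch for bash 4.0 or later, the version that introduced these expansions. The names is_yes, greet and word are hypothetical:

```shell
#!/bin/bash
# Hypothetical helpers; case-modification expansions need bash 4.0+.

# Accept y / yes in any mix of upper and lower case.
is_yes() {
    local reply=${1,,}            # lowercase the whole argument
    [[ $reply == y || $reply == yes ]]
}

# Normalise a name for display: lowercase everything, then
# uppercase only the first character.
greet() {
    local name=${1,,}
    echo "Hello, ${name^}"
}

# A pattern limits the change to matching characters (here: vowels).
word="egidio"
echo "${word^^[aeiou]}"           # EgIdIO
```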

When using the expansions we explained in this paragraph and the parameter is an array subscripted by @ or *, the operation is applied to all the elements contained in it:

$ my_array=(one two three)
$ echo "${my_array[@]^^}"
ONE TWO THREE

When the index of a specific element in the array is referenced, instead, the operation is applied only to it:

$ my_array=(one two three)
$ echo "${my_array[2]^^}"
THREE

Substring removal

The next syntax we will examine allows us to remove a pattern from the beginning or from the end of the string resulting from the expansion of a parameter.

Remove matching pattern from the beginning of the string

The first form, ${parameter#pattern}, allows us to remove a pattern from the beginning of the string resulting from the parameter expansion:

$ name="Egidio"
$ echo "${name#Egi}"
dio

A similar result can be obtained by using the