GCC was and still is the main programming achievement of RMS. I think that the period when RMS wrote gcc by reusing the Pastel compiler developed at the Lawrence Livermore Lab constitutes the most productive part of RMS's programming career, much more important than his Emacs efforts, although he is still fanatically attached to Emacs.


He started writing the compiler when he was almost thirty years old, that is, at a time when his programming abilities had started to decline. Therefore gcc can be considered his "swan song" as a programmer. The prototype that he used was the Pastel compiler developed at the Lawrence Livermore Lab. Here is his version of the history of GCC's creation:

Shortly before beginning the GNU project, I heard about the Free University Compiler Kit, also known as VUCK. (The Dutch word for "free" is written with a V.) This was a compiler designed to handle multiple languages, including C and Pascal, and to support multiple target machines. I wrote to its author asking if GNU could use it.

He responded derisively, stating that the university was free but the compiler was not. I therefore decided that my first program for the GNU project would be a multi-language, multi-platform compiler.

Hoping to avoid the need to write the whole compiler myself, I obtained the source code for the Pastel compiler, which was a multi-platform compiler developed at Lawrence Livermore Lab. It supported, and was written in, an extended version of Pascal, designed to be a system-programming language. I added a C front end, and began porting it to the Motorola 68000 computer. But I had to give that up when I discovered that the compiler needed many megabytes of stack space, and the available 68000 Unix system would only allow 64k.

I then realized that the Pastel compiler functioned by parsing the entire input file into a syntax tree, converting the whole syntax tree into a chain of "instructions", and then generating the whole output file, without ever freeing any storage. At this point, I concluded I would have to write a new compiler from scratch. That new compiler is now known as GCC; none of the Pastel compiler is used in it, but I managed to adapt and use the C front end that I had written.

Of course there were collaborators, because RMS seems to be far from the cutting edge of compiler technology and never wrote any significant paper about compiler construction. But he was still the major driving force behind the project, and its success was achieved to a large extent through his personal efforts.

From the political standpoint it was a very bright idea: a person who controls the compiler has enormous influence on everybody who uses it. Although at the beginning it was just a C compiler, the "after-Stallman" GCC (GNU cc) eventually became one of the most flexible, most powerful and most portable C, C++, FORTRAN and (now) even Java compilers. Together with its libraries, it constitutes a powerful development platform that makes it possible to write code that is portable to almost any computer platform you can imagine, from handhelds to supercomputers.

The first version of GCC seems to have been finished around 1985, when RMS was 32 years old. Here is the first mention of gcc in the GNU Manifesto:

So far we have an Emacs text editor with Lisp for writing editor commands, a source level debugger, a yacc-compatible parser generator, a linker, and around 35 utilities. A shell (command interpreter) is nearly completed. A new portable optimizing C compiler has compiled itself and may be released this year. An initial kernel exists but many more features are needed to emulate Unix. When the kernel and compiler are finished, it will be possible to distribute a GNU system suitable for program development. We will use TeX as our text formatter, but an nroff is being worked on. We will use the free, portable X window system as well. After this we will add a portable Common Lisp, an Empire game, a spreadsheet, and hundreds of other things, plus on-line documentation. We hope to supply, eventually, everything useful that normally comes with a Unix system, and more.

GNU will be able to run Unix programs, but will not be identical to Unix. We will make all improvements that are convenient, based on our experience with other operating systems. In particular, we plan to have longer file names, file version numbers, a crashproof file system, file name completion perhaps, terminal-independent display support, and perhaps eventually a Lisp-based window system through which several Lisp programs and ordinary Unix programs can share a screen. Both C and Lisp will be available as system programming languages. We will try to support UUCP, MIT Chaosnet, and Internet protocols for communication.

Compilers take a long time to mature. The first more or less stable release seems to be 1.17 (January 9, 1988). That was pure luck, as in 1989 the Emacs/XEmacs split started, which consumed all his energy. RMS used a kind of Microsoft "embrace and extend" policy in GCC development: extensions to the C language were enabled by default.

It looks like RMS personally participated in the development of the compiler at least until the GCC/egcs split (i.e., 1997). Being a former compiler writer myself, I can attest that it is physically quite challenging to run such a project for more than ten years, even if you are mostly a manager.

Development was not without problems, with Cygnus emerging as an alternative force. Cygnus, the first company devoted to providing commercial support for GNU software, and especially for the GCC compiler, was co-founded by Michael Tiemann in 1989, and Tiemann became the major driving force behind GCC from then on.

In 1997 RMS was so tired from his marathon that he just wanted the compiler to be stable, because it was the most important publicity vehicle for the FSF. But with the GNU license you cannot stop: it enforces the law of the jungle, and the strongest takes it all. The interests of other, fresher and more motivated developers (as well as RMS's personal qualities, first demonstrated in the Emacs/XEmacs saga) led to a painful fork:

Subject: A new compiler project to merge the existing GCC forks

A bunch of us (including Fortran, Linux, Intel and RTEMS hackers) have decided to start a more experimental development project, just like Cygnus and the FSF started the gcc2 project about 6 years ago. Only this time the net community with which we are working is larger! We are calling this project 'egcs' (pronounced 'eggs').

Why are we doing this? It's become increasingly clear in the course of hacking events that the FSF's needs for gcc2 are at odds with the objectives of many in the community who have done lots of hacking and improvement over the years. GCC is part of the FSF's publicity for the GNU project, as well as being the GNU system's compiler, so stability is paramount for them. On the other hand, Cygnus, the Linux folks, the pgcc folks, the Fortran folks and many others have done development work which has not yet gone into the GCC2 tree despite years of efforts to make it possible.

This situation has resulted in a lot of strong words on the gcc2 mailing list which really is a shame since at the heart we all want the same thing: the continued success of gcc, the FSF, and Free Software in general. Apart from ill will, this is leading to great divergence which is increasingly making it harder for us all to work together -- It is almost as if we each had a proprietary compiler! Thus we are merging our efforts, building something that won't damage the stability of gcc2, so that we can have the best of both worlds.

As you can see from the list below, we represent a diverse collection of streams of GCC development. These forks are painful and waste time; we are bringing our efforts together to simplify the development of new features. We expect that the gcc2 and egcs communities will continue to overlap to a great extent, since they're both working on GCC and both working on Free Software. All code will continue to be assigned to the FSF exactly as before and will be passed on to the gcc2 maintainers for ultimate inclusion into the gcc2 tree.

Because the two projects have different objectives, there will be different sets of maintainers. Provisionally we have agreed that Jim Wilson is to act as the egcs maintainer and Jason Merrill as the maintainer of the egcs C++ front end. Craig Burley will continue to maintain the Fortran front end code in both efforts.

What new features will be coming up soon? There is such a backlog of tested, un-merged-in features that we have been able to pick a useful initial set:

- New alias analysis support from John F. Carr.
- g77 (with some performance patches).
- A C++ repository for G++.
- A new instruction scheduler from IBM Haifa.
- A regmove pass (2-address machine optimizations that in future will help with compilation for the x86 and for now will help with some RISC machines).

This will use the development snapshot of 3 August 97 as its base -- in other words we're not starting from the 18 month old gcc-2.7 release, but from a recent development snapshot with all the last 18 months' improvements, including major work on G++.

We plan an initial release for the end of August. The second release will include some subset of the following:

- global cse and partial redundancy elimination.
- live range splitting.
- More features of IBM Haifa's instruction scheduling, including software pipelining, and branch scheduling.
- sibling call opts.
- various new embedded targets.
- Further work on regmove.

The egcs mailing list at cygnus.com will be used to discuss and prioritize these features.

How to join: send mail to egcs-request at cygnus.com. That list is under majordomo. We have a web page that describes the various mailing lists and has this information at: http://www.cygnus.com/egcs.

Alternatively, look for these releases as they spread through other projects such as RTEMS, Linux, etc. Come join us!

David Henkel-Wallace (for the egcs members, who currently include, among others):

Per Bothner, Joe Buck, Craig Burley, John F. Carr, Stan Cox, David Edelsohn, Kaveh R. Ghazi, Richard Henderson, David Henkel-Wallace, Gordon Irlam, Jakub Jelinek, Kim Knuttila, Gavin Koch, Jeff Law, Marc Lehmann, H.J. Lu, Jason Merrill, Michael Meissner, David S. Miller, Toon Moene, Jason Molenda, Andreas Schwab, Joel Sherrill, Ian Lance Taylor, Jim Wilson

After version 2.8.1, GCC development split into FSF GCC on the one hand and Cygnus EGCS on the other. The first EGCS version (1.0.0) was released by Cygnus on December 3, 1997, and that instantly put the FSF version on the back burner:

- March 15, 1999: egcs-1.1.2 is released.
- March 10, 1999: Cygnus donates improved global constant propagation and lazy code motion optimizer framework.
- March 7, 1999: The egcs project now has additional online documentation.
- February 26, 1999: Richard Henderson of Cygnus Solutions has donated a major rewrite of the control flow analysis pass of the compiler.
- February 25, 1999: Marc Espie has donated support for OpenBSD on the Alpha, SPARC, x86, and m68k platforms. Additional targets are expected in the future.
- January 21, 1999: Cygnus donates support for the PowerPC 750 processor. The PPC750 is a 32bit superscalar implementation of the PowerPC family manufactured by both Motorola and IBM. The PPC750 is targeted at high end Macs as well as high end embedded applications.
- January 18, 1999: Christian Bruel and Jeff Law donate improved local dead store elimination.
- January 14, 1999: Cygnus donates support for Hypersparc (SS20) and Sparclite86x (embedded) processors.
- December 7, 1998: Cygnus donates support for demangling of HP aCC symbols.
- December 4, 1998: egcs-1.1.1 is released.
- November 26, 1998: A database with test results is now available online, thanks to Marc Lehmann.
- November 23, 1998: egcs now can dump flow graph information usable for graphical representation. Contributed by Ulrich Drepper.
- November 21, 1998: Cygnus donates support for the SH4 processor.
- November 10, 1998: An official steering committee has been formed. Here is the original announcement.
- November 5, 1998: The third snapshot of the rewritten libstdc++ is available. You can read some more on http://sources.redhat.com/libstdc++/.
- October 27, 1998: Bernd Schmidt donates localized spilling support.
- September 22, 1998: IBM Corporation delivers an update to the IBM Haifa instruction scheduler and new software pipelining and branch optimization support.
- September 18, 1998: Michael Hayes donates c4x port.
- September 6, 1998: Cygnus donates Java front end.
- September 3, 1998: egcs-1.1 is released.
- August 29, 1998: Cygnus donates Chill front end and runtime.
- August 25, 1998: David Miller donates rewritten sparc backend.
- August 19, 1998: Mark Mitchell donates load hoisting and store sinking support.
- July 15, 1998: The first snapshot of the rewritten libstdc++ is available. You can read some more here.
- June 29, 1998: Mark Mitchell donates alias analysis framework.
- May 26, 1998: We have added two new mailing lists for the egcs project: gcc-cvs and egcs-patches. When a patch is checked into the CVS repository, a check-in notification message is automatically sent to the gcc-cvs mailing list. This will allow developers to monitor changes as they are made. Patch submissions should be sent to egcs-patches instead of the main egcs list. This is primarily to help ensure that patch submissions do not get lost in the large volume of the main mailing list.
- May 18, 1998: Cygnus donates gcse optimization pass.
- May 15, 1998: egcs-1.0.3 released!
- March 18, 1998: egcs-1.0.2 released!
- February 26, 1998: The egcs web pages are now supported by egcs project hardware and are searchable with webglimpse. The CVS sources are browsable with the free cvsweb package.
- February 7, 1998: Stanford has volunteered to host a high speed mirror for egcs. This should significantly improve download speeds for releases and snapshots. Thanks Stanford and Tobin Brockett for the use of their network, disks and computing facilities!
- January 12, 1998: Remote access to CVS sources is available!
- January 6, 1998: egcs-1.0.1 released!
- December 3, 1997: egcs-1.0 released!
- August 15, 1997: The egcs project is announced publicly and the first snapshot is put on-line.

See the egcs mailing list archives for details. I've also heard assertions that the only reason gcc-2.8 was released as quickly as it was is the pressure of the egcs release. Here is a Slashdot discussion that contains some additional info. After the fork, the egcs team proved to be definitely stronger, and development of the original branch stagnated.

This was a pretty painful fork, especially for RMS personally, and its consequences are still felt today. For example, Linus Torvalds still prefers an old GCC version, and recompiling the kernel with a newer version led to some subtle bugs, because the kernel code relied on the incomplete standards compliance of the old GCC compiler. Alan Cox said for years that 2.0.x kernels are to be compiled by gcc, not egcs.

As FSF GCC died a silent death from malnutrition, the two were (formally) reunited in April 1999, with version 2.95 as the first release of the merged project. With a simple renaming trick, egcs became gcc, and formally the split was over:

Re: egcs to take over gcc maintenance


- Subject: Re: egcs to take over gcc maintenance

- From: Theodore Papadopoulo

- Date: Fri, 16 Apr 1999 18:35:00 +0200

The announcement said:

> I'm pleased to announce that the egcs team is taking over as the collective GCC maintainer of GCC. This means that the egcs steering committee is changing its name to the gcc steering committee and future gcc releases will be made by the egcs (then gcc) team. This also means that the open development style is also carried over to gcc (a good thing).

That's a great piece of news...

Congratulations !!!

--------------------------------------------------------------------

Theodore Papadopoulo

Tel: (33) 04 92 38 76 01

More information about the event can be found in the following Slashdot post:

yes, it's true; egcs is gcc. Some details (Score:4)

by JoeBuck (7947) on Tuesday April 20, @12:22PM (#1925069)

(http://www.welsh-buck.org/jbuck/)

As a member of the egcs steering committee, which will become the gcc steering committee, I can confirm that yes, the merger is official ... sometime in the near future there will be a gcc 3.0 from the egcs code base. The steering committee has been talking to RMS about doing this for months now; at times it's been contentious but now that we understand each other better, things are going much better. The important thing to understand is that when we started egcs, this is what we were planning all along (well, OK, what some of us were planning). We wanted to change the way gcc worked, not just create a variant. That's why assignments always went to the FSF, why GNU coding style is rigorously followed.

Technically, egcs/gcc will run the same way as before. Since we are now fully GNU, we'll be making some minor changes to reflect that, but we've been doing them gradually in the past few months anyway so nothing that significant will change. Jeff Law remains the release manager; a number of other people have CVS write access; the steering committee handles the "political" and other nontechnical stuff and "hires" the release manager.

egcs/gcc is at this point considerably more bazaar-like than the Linux kernel in that many more people have the ability to get something into the official code (for Linux, only Linus can do that). Jeff Law decides what goes in the release, but he delegates major areas to other maintainers.

The reason for the delay in the announcement is that we were waiting for RMS to announce it (he sent a message to the gnu.*.announce lists), but someone cracked an important FSF machine and did an rm -rf / command. It was noticed and someone powered off the machine, but it appears that this machine hosted the GNU mailing lists, if I understand correctly, so there's nothing on gnu.announce. I don't know why there's still nothing on www.gnu.org (which was not cracked). Why do people do things like this?

Currently the GCC Release Manager is Mark Mitchell, CodeSourcery's President and Chief Technical Officer. He received an MS in Computer Science from Stanford in 1999 and a BA from Harvard in 1994. His research interests centered on computational complexity and computer security. Mark worked at CenterLine Software as a software engineer before co-founding CodeSourcery. In a recent interview he provided some interesting facts about the current problems and prospects of GCC development, as well as the reasons for the product's growing independence from the FSF (for example, the interesting fact that GCC version 2.96 was not an FSF version at all):

JB: There has been a problem with so called gcc-2.96. Why did several distributors create this version?

It's important for everyone to know that there was no version of GCC 2.96 from the FSF. I know Red Hat distributed a version that it called 2.96, and other companies may have done that too. I only know about the Red Hat version.

It is too bad that this version was released. It is essentially a development snapshot of the GCC tree, with a lot of Red Hat fixes. There are a lot of bugs in it, relative to either 2.95 or 3.0, and the C++ code generated is incompatible (at the binary level) with either 2.95 or 3.0. It's been very confusing to users, especially because the error messages generated by the 2.96 release refer users back to the FSF GCC bug-reporting address, even though a lot of the bugs in that release don't exist in the FSF releases. The saddest part is that a lot of people at Red Hat knew that using that release was a bad idea, and they couldn't convince their management of that fact.

Partly, this release is our fault, as GCC maintainers. There was a lot of frustration because it took so long to produce a new GCC release. I'm currently leading an effort to reduce the time between GCC releases so that this kind of thing is less likely to happen again. I can understand why a company might need to put out an intermediate release of GCC if we are not able to do it ourselves. That's why I think it's important for people to support independent development of GCC, which is, of course, what CodeSourcery does. We're not affiliated with any of the distributors, and so we can act to try to improve the FSF version of GCC directly. When people financially support our work on the releases, that helps to make sure that there are frequent enough releases to avoid these problems.

JB: Do you feel, that the 2.96 release speeded up the development and allowed gcc-3.0 to be ready faster?

That is a very difficult question to answer. On the one hand, Red Hat certainly fixed some bugs in Red Hat's 2.96 version, and some of those improvements were contributed back for GCC 3.0. (I do not know if all of them were contributed back, or not.) On the other hand, GCC developers at Red Hat must have spent a lot of time on testing and improving their 2.96 version, and therefore that time was not spent on GCC 3.0.

The problem is that for a company like Red Hat (or CodeSourcery) you can't choose between helping out with the FSF release of GCC and doing something else based just on what would be good for the FSF release. You have to try to make the best business decision, which might mean that you have to do something to please a customer, even though it doesn't help the FSF release.

If people would like to keep companies from making their own releases, there are two things to do: a) make that sentiment known to the companies, since companies like to please their customers, and b) hire companies like CodeSourcery to help work on the FSF releases.

JB: How many developers are currently working on GCC?

It's impossible to count. Hundreds, probably -- but there is definitely a group of ten or twenty that is responsible for most of the changes.

... ... ...

JB: Do you see compiling java to native code as a drawback when using free (speech) code? (compared to using p-code only)

I'm not so moralistic about these issues as some people. I think it's good that we support compiling from byte-code because that's how lots of Java code is distributed. Whether or not that code is free, we're providing a useful product. I suspect the FSF has a different viewpoint.

JB: What are future plans in gcc development?

I think the number one issue is the performance of the generated code. People are looking into different ways to optimize better. I'd also like to see a more robust compiler that issues better error messages and never crashes on incorrect input.

JB: Often, the major problem with hardware vendors is that they don't want to provide the technical documentation for their hardware (forcing you to use their or third party proprietary code). Is this also true with processor documentation?

Most popular workstation processors are well-documented from the point of view of their instruction set. There often isn't as much information available about timing and scheduling information. And some embedded vendors never make any information about their chips available, which means that they can't really distribute a version of GCC for their chips because the GCC source code would give away information about the chip.

AMD is a great example of a company trying to work closely with GCC up front. They made a lot of information about their new chip available very early in the process.

JB: Which systems do currently use GCC as their primary compiler set (not counting *BSD and GNU/Linux)?

Apple's OS X. If Apple succeeds, there will probably be more OS X developers using GCC than there are GNU/Linux developers.

... ... ...

Having RMS as a member of the GCC steering committee (SC) has its problems and still invites forking ;-). As one of the participants in the Slashdot discussion noted:

Re:Speaking as a GCC maintainer, I call bullshit (Score:3, Informative)

by devphil (51341) on Sunday August 15, @02:16PM (#9974914)

(http://www.devphil.com/)

You're not completely right, and not completely wrong. The politics are exceedingly complicated, and I regret it every time I learn more about them.

RMS doesn't have dictatorial power over the SC, nor a formal veto vote.

He does hold the copyright to GCC. (Well, the FSF holds the copyright, but he is the FSF.) That's a lot more important than many people realize.

Choice of implementation language is, strictly speaking, a purely technical issue. But it has so many consequences that it gets special attention.

The SC specifically avoids getting involved in technical issues whenever possible. Even when the SC is asked to decide something, they never go to RMS when they can help it, because he's so unaware of modern real-world technical issues and the bigger picture. It's far, far better to continue postponing a question than to ask it, when RMS is involved, because he will make a snap decision based on his own bizarre technical ideas, and then never change his mind in time for the new decision to be worth anything.

He can be convinced. Eventually. It took the SC over a year to explain and demonstrate that Java bytecode could not easily be used to subvert the GPL, therefore permitting GCJ to be checked in to the official repository was okay. I'm sure that someday we'll be using C++ in core code. Just not anytime soon.

As for forking again... well, yeah, I personally happen to be a proponent of that path. But I'm keenly aware of the damage that would do to GCC's reputation -- beyond the short-sighted typical /. viewpoint of "always disobey every authority" -- and I'm still probably underestimating the problems.

Some additional information about gcc development can be found at History - GCC Wiki

For the history of GCC development, see The Short History of GCC development. The first version of gcc was released in March 1987:

Date: Sun, 22 Mar 87 10:56:56 EST

From: rms (Richard M. Stallman)

The GNU C compiler is now available for ftp from the file /u2/emacs/gcc.tar on prep.ai.mit.edu. This includes machine descriptions for vax and sun, 60 pages of documentation on writing machine descriptions (internals.texinfo, internals.dvi and Info file internals).

This also contains the ANSI standard (Nov 86) C preprocessor and 30 pages of reference manual for it.

This compiler compiles itself correctly on the 68020 and did so recently on the vax. It recently compiled Emacs correctly on the 68020, and has also compiled tex-in-C and Kyoto Common Lisp. However, it probably still has numerous bugs that I hope you will find for me.

I will be away for a month, so bugs reported now will not be handled until then.

If you can't ftp, you can order a compiler beta-test tape from the Free Software Foundation for $150 (plus 5% sales tax in Massachusetts, or plus $15 overseas if you want air mail).

Free Software Foundation

1000 Mass Ave

Cambridge, MA 02138

Sunfreeware.com has packages for gcc-3.4.2 and gcc-3.3.2 for Solaris 9 and a package for gcc-3.3.2 for Solaris 10. Usually one needs only the gcc_small package, which has ONLY the C and C++ compilers and is a much smaller download, but sunfreeware.com does not provide it.

If you use gcc it's very convenient to use Midnight Commander on Solaris as a command line pseudo IDE.

Please note that Sun Studio 11 is free for both Solaris and Linux and might be a better option than GCC for compilation on UltraSPARC (it produces 10% or more faster code).


Jun 17, 2020 | opensource.com

Knowing how Linux uses libraries, including the difference between static and dynamic linking, can help you fix dependency problems.


Linux, in a way, is a series of static and dynamic libraries that depend on each other. For new users of Linux-based systems, the whole handling of libraries can be a mystery. But with experience, the massive amount of shared code built into the operating system can be an advantage when writing new applications.

To help you get in touch with this topic, I prepared a small application example that shows the most common methods that work on common Linux distributions (these have not been tested on other systems). To follow along with this hands-on tutorial using the example application, open a command prompt and type:

$ git clone https://github.com/hANSIc99/library_sample

$ cd library_sample/

$ make

cc -c main.c -Wall -Werror

cc -c libmy_static_a.c -o libmy_static_a.o -Wall -Werror

cc -c libmy_static_b.c -o libmy_static_b.o -Wall -Werror

ar -rsv libmy_static.a libmy_static_a.o libmy_static_b.o

ar: creating libmy_static.a

a - libmy_static_a.o

a - libmy_static_b.o

cc -c -fPIC libmy_shared.c -o libmy_shared.o

cc -shared -o libmy_shared.so libmy_shared.o

$ make clean

rm *.o

After executing these commands, these files should be added to the directory (run ls to see them):

my_app

libmy_static.a

libmy_shared.so

About static linking

When your application links against a static library, the library's code becomes part of the resulting executable. This is performed only once at linking time, and these static libraries usually end with a .a extension.

A static library is an archive (ar) of object files. The object files are usually in the ELF format. ELF is short for Executable and Linkable Format, which is compatible with many operating systems.

The output of the file command tells you that the static library libmy_static.a is the ar archive type:

$ file libmy_static.a

libmy_static.a: current ar archive

With ar -t, you can look into this archive; it shows two object files:

$ ar -t libmy_static.a

libmy_static_a.o

libmy_static_b.o

You can extract the archive's files with ar -x <archive-file>. The extracted files are object files in ELF format:

$ ar -x libmy_static.a

$ file libmy_static_a.o

libmy_static_a.o: ELF 64-bit LSB relocatable, x86-64, version 1 (SYSV), not stripped

About dynamic linking

Dynamic linking means the use of shared libraries. Shared libraries usually end with .so (short for "shared object").

Shared libraries are the most common way to manage dependencies on Linux systems. These shared resources are loaded into memory before the application starts, and when several processes require the same library, it will be loaded only once on the system. This feature saves on memory usage by the application.

Another thing to note is that when a bug is fixed in a shared library, every application that references this library will profit from it. This also means that if the bug remains undetected, each referencing application will suffer from it (if the application uses the affected parts).

It can be very hard for beginners when an application requires a specific version of the library, but the linker only knows the location of an incompatible version. In this case, you must help the linker find the path to the correct version.

Although this is not an everyday issue, understanding dynamic linking will surely help you in fixing such problems.

Fortunately, the mechanics for this are quite straightforward.

To detect which libraries are required for an application to start, you can use ldd, which will print out the shared libraries used by a given file:

$ ldd my_app
linux-vdso.so.1 (0x00007ffd1299c000)
libmy_shared.so => not found
libc.so.6 => /lib64/libc.so.6 (0x00007f56b869b000)
/lib64/ld-linux-x86-64.so.2 (0x00007f56b8881000)

Note that the library libmy_shared.so is part of the repository but is not found. This is because the dynamic linker, which is responsible for loading all dependencies into memory before executing the application, cannot find this library in the standard locations it searches.

Errors associated with linkers finding incompatible versions of common libraries (like bzip2, for example) can be quite confusing for a new user. One way around this is to add the repository folder to the environment variable LD_LIBRARY_PATH to tell the dynamic linker where to look for the correct version. In this case, the right version is in this folder, so you can export it:

$ LD_LIBRARY_PATH=$(pwd):$LD_LIBRARY_PATH
$ export LD_LIBRARY_PATH

Now the dynamic linker knows where to find the library, and the application can be executed. You can rerun ldd to invoke the dynamic linker, which inspects the application's dependencies and loads them into memory. The memory address is shown after the object path:

$ ldd my_app
linux-vdso.so.1 (0x00007ffd385f7000)
libmy_shared.so => /home/stephan/library_sample/libmy_shared.so (0x00007f3fad401000)
libc.so.6 => /lib64/libc.so.6 (0x00007f3fad21d000)
/lib64/ld-linux-x86-64.so.2 (0x00007f3fad408000)

To find out which linker is invoked, you can use file:

$ file my_app
my_app: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=26c677b771122b4c99f0fd9ee001e6c743550fa6, for GNU/Linux 3.2.0, not stripped

The linker /lib64/ld-linux-x86-64.so.2 is a symbolic link to ld-2.31.so, which is the default linker for my Linux distribution:

$ file /lib64/ld-linux-x86-64.so.2
/lib64/ld-linux-x86-64.so.2: symbolic link to ld-2.31.so

Looking back to the output of ldd, you can also see that, apart from libmy_shared.so, each dependency ends with a number (e.g., /lib64/libc.so.6). The usual naming scheme of shared objects is:

libXYZ.so.<MAJOR>.<MINOR>

On my system, libc.so.6 is also a symbolic link to the shared object libc-2.31.so in the same folder:

$ file /lib64/libc.so.6
/lib64/libc.so.6: symbolic link to libc-2.31.so

If you are facing the issue that an application will not start because the loaded library has the wrong version, it is very likely that you can fix this issue by inspecting and rearranging the symbolic links or specifying the correct search path (see "The dynamic loader: ld.so" below).
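As a rough illustration of the libXYZ.so.<MAJOR>.<MINOR> naming scheme, the MAJOR number can be pulled out of a file name with a few lines of C. This sketch looks only at the name string. In practice, the name ld.so searches for is the SONAME recorded in the executable at link time, which is why files such as libc.so.6 exist as symbolic links. The function name so_major_version is hypothetical.

```c
#include <stdlib.h>
#include <string.h>

/* Return the MAJOR number from a name following the
 * libXYZ.so.<MAJOR>.<MINOR> convention, or -1 if the name carries no
 * version suffix (e.g. "libmy_shared.so" or "libc-2.31.so"). */
int so_major_version(const char *name)
{
    /* Ignore any directory part: "/lib64/libc.so.6" -> "libc.so.6". */
    const char *base = strrchr(name, '/');
    base = (base != NULL) ? base + 1 : name;

    /* The version numbers follow the ".so." marker. */
    const char *so = strstr(base, ".so.");
    if (so == NULL)
        return -1;

    const char *digits = so + 4;
    if (*digits < '0' || *digits > '9')
        return -1;

    /* strtol stops at the '.' before MINOR, or at the end of the string. */
    return (int)strtol(digits, NULL, 10);
}
```

For the names in the ldd output above, "/lib64/libc.so.6" yields 6 and "/lib64/ld-linux-x86-64.so.2" yields 2. The unversioned "libmy_shared.so" yields -1.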

For more information, look on the ldd man page.

Dynamic loading

Dynamic loading means that a library (e.g., a .so file) is loaded during a program's runtime. Rather than being resolved by the dynamic linker at startup, the library is opened explicitly by the program's own code through a programming interface. Dynamic loading is applied when an application uses plugins that can be modified during runtime.
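A minimal sketch of dynamic loading in C, using the POSIX dlopen(), dlsym(), dlclose() and dlerror() functions. Here the math library libm.so.6 and its cos function stand in for a plugin. That choice and the helper name call_double_fn are assumptions for illustration. On glibc versions before 2.34, the program must be linked with -ldl.

```c
#include <dlfcn.h>
#include <stdio.h>

/* Load a shared object at runtime, look up a function of type
 * double (*)(double) by name, call it, and unload the library again.
 * Returns 0 on success and -1 on failure (the reason is printed). */
int call_double_fn(const char *lib, const char *symbol, double arg, double *result)
{
    void *handle = dlopen(lib, RTLD_NOW);    /* resolve all symbols now */
    if (handle == NULL) {
        fprintf(stderr, "dlopen: %s\n", dlerror());
        return -1;
    }

    dlerror();                               /* clear any stale error */
    double (*fn)(double);
    *(void **)(&fn) = dlsym(handle, symbol); /* cast idiom from the dlopen man page */
    const char *err = dlerror();
    if (err != NULL) {
        fprintf(stderr, "dlsym: %s\n", err);
        dlclose(handle);
        return -1;
    }

    *result = fn(arg);
    dlclose(handle);
    return 0;
}
```

A plugin host would follow the same pattern, with the library path coming from configuration rather than being hardcoded.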

See the dlopen man page for more information.

The dynamic loader: ld.so

On Linux, you mostly are dealing with shared objects, so there must be a mechanism that detects an application's dependencies and loads them into memory.

ld.so looks for shared objects in these places in the following order:

- The relative or absolute path in the application (hardcoded with the -rpath linker option, which GCC passes through as -Wl,-rpath,<dir>)
- In the environment variable LD_LIBRARY_PATH
- In the file /etc/ld.so.cache
- In the default directories /lib and /usr/lib (on some 64-bit systems, /lib64 and /usr/lib64 for 64-bit objects)

Keep in mind that adding a library to the system's library directory /usr/lib64 requires administrator privileges. You could copy libmy_shared.so manually to that directory and make the application work without setting LD_LIBRARY_PATH:

$ unset LD_LIBRARY_PATH
$ sudo cp libmy_shared.so /usr/lib64/

When you run ldd, you can see the path to the library directory shows up now:

$ ldd my_app
linux-vdso.so.1 (0x00007ffe82fab000)
libmy_shared.so => /lib64/libmy_shared.so (0x00007f0a963e0000)
libc.so.6 => /lib64/libc.so.6 (0x00007f0a96216000)
/lib64/ld-linux-x86-64.so.2 (0x00007f0a96401000)

Customize the shared library at compile time

If you want your application to use your shared libraries, you can specify an absolute or relative path during compile time.

Modify the makefile (line 10) and recompile the program by invoking make -B. Then, the output of ldd shows that libmy_shared.so is listed with its absolute path.

Change this:

CFLAGS =-Wall -Werror -Wl,-rpath,$(shell pwd)

To this (be sure to edit the username):

CFLAGS =/home/stephan/library_sample/libmy_shared.so

Then recompile:

$ make

Confirm it is using the absolute path you set, which you can see on the third line of the output:

$ ldd my_app
linux-vdso.so.1 (0x00007ffe143ed000)
libmy_shared.so => /lib64/libmy_shared.so (0x00007fe50926d000)
/home/stephan/library_sample/libmy_shared.so (0x00007fe509268000)
libc.so.6 => /lib64/libc.so.6 (0x00007fe50909e000)
/lib64/ld-linux-x86-64.so.2 (0x00007fe50928e000)

This is a good example, but how would this work if you were making a library for others to use? New library locations can be registered by writing them to /etc/ld.so.conf or by creating a <library-name>.conf file containing the location under /etc/ld.so.conf.d/. Afterward, ldconfig must be executed to rewrite the ld.so.cache file. This step is sometimes necessary after you install a program that brings some special shared libraries with it.

See the ld.so man page for more information.

How to handle multiple architectures

Usually, there are different libraries for the 32-bit and 64-bit versions of applications. The following list shows their standard locations for different Linux distributions:

Red Hat family

- 32 bit: /usr/lib
- 64 bit: /usr/lib64

Debian family

- 32 bit: /usr/lib/i386-linux-gnu
- 64 bit: /usr/lib/x86_64-linux-gnu

Arch Linux family

- 32 bit: /usr/lib32
- 64 bit: /usr/lib64

FreeBSD (technically not a Linux distribution)

- 32 bit: /usr/lib32
- 64 bit: /usr/lib

Knowing where to look for these key libraries can make broken library links a problem of the past.
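Here is a minimal C sketch of one step of the ld.so search order. It scans a colon-separated list such as LD_LIBRARY_PATH for a file name, one directory at a time, and stops at the first hit. This is a simplified model, not ld.so's actual code:

- It skips empty entries.
- It does not check that a candidate has the right architecture (32-bit vs 64-bit), which the real loader does. That check matters because of the separate library directories listed above.

The name search_dirs is hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Look for NAME in each directory of the colon-separated DIRLIST, in order.
 * On the first readable match the full path is written to OUT and 0 is
 * returned; -1 means no directory contained the file. */
int search_dirs(const char *dirlist, const char *name, char *out, size_t outsz)
{
    const char *start = dirlist;
    for (;;) {
        const char *end = strchr(start, ':');
        size_t len = (end != NULL) ? (size_t)(end - start) : strlen(start);

        if (len > 0) {   /* this sketch simply skips empty entries */
            int n = snprintf(out, outsz, "%.*s/%s", (int)len, start, name);
            if (n > 0 && (size_t)n < outsz && access(out, R_OK) == 0)
                return 0;
        }
        if (end == NULL)
            return -1;
        start = end + 1;
    }
}
```

To try it with the real variable, pass getenv("LD_LIBRARY_PATH"), but only after checking that it is not NULL.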

While it may be confusing at first, understanding dependency management in Linux libraries is a way to feel in control of the operating system. Run through these steps with other applications to become familiar with common libraries, and continue to learn how to fix any library challenges that could come up along your way.

Sep 04, 2012 | sanctum.geek.nz

If you don't have root access on a particular GNU/Linux system that you use, or if you don't want to install anything to the system directories and potentially interfere with others' work on the machine, one option is to build your favourite tools in your $HOME directory.

This can be useful if there's some particular piece of software that you really need for whatever reason, particularly on legacy systems that you share with other users or developers. The process can include not just applications, but libraries as well; you can link against a mix of your own libraries and the system's libraries as you need.

Preparation

In most cases this is actually quite a straightforward process, as long as you're allowed to use the system's compiler and any relevant build tools such as autoconf. If the ./configure script for your application allows a --prefix option, this is generally a good sign; you can normally test this with --help:

$ mkdir src
$ cd src
$ wget -q http://fooapp.example.com/fooapp-1.2.3.tar.gz
$ tar -xf fooapp-1.2.3.tar.gz
$ cd fooapp-1.2.3
$ pwd
/home/tom/src/fooapp-1.2.3
$ ./configure --help | grep -- --prefix
  --prefix=PREFIX    install architecture-independent files in PREFIX

Don't do this if the security policy on your shared machine explicitly disallows compiling programs! However, it's generally quite safe, as you never need root privileges at any stage of the process.

Naturally, this is not a one-size-fits-all process; the build process will vary for different applications, but it's a workable general approach to the task.

Installing

Configure the application or library with the usual call to ./configure, but use your home directory for the prefix:

$ ./configure --prefix=$HOME

If you want to include headers or link against libraries in your home directory, it may be appropriate to add definitions for CFLAGS and LDFLAGS to refer to those directories:

$ CFLAGS="-I$HOME/include" \
> LDFLAGS="-L$HOME/lib" \
> ./configure --prefix=$HOME

Some configure scripts instead allow you to specify the path to particular libraries. Again, you can generally check this with --help.

$ ./configure --prefix=$HOME --with-foolib=$HOME/lib

You should then be able to install the application with the usual make and make install, needing root privileges for neither:

$ make
$ make install

If successful, this process will insert files into directories like $HOME/bin and $HOME/lib. You can then try to call the application by its full path:

$ $HOME/bin/fooapp -v
fooapp v1.2.3

Environment setup

To make this work smoothly, it's best to add to a couple of environment variables, probably in your .bashrc file, so that you can use the home-built application transparently.

First of all, if you linked the application against libraries also in your home directory, it will be necessary to add the library directory to LD_LIBRARY_PATH, so that the correct libraries are found and loaded at runtime:

$ /home/tom/bin/fooapp -v
/home/tom/bin/fooapp: error while loading shared libraries: libfoo.so: cannot open shared...
Could not load library foolib
$ export LD_LIBRARY_PATH=$HOME/lib
$ /home/tom/bin/fooapp -v
fooapp v1.2.3

An obvious one is adding the $HOME/bin directory to your $PATH so that you can call the application without typing its path:

$ fooapp -v
-bash: fooapp: command not found
$ export PATH="$HOME/bin:$PATH"
$ fooapp -v
fooapp v1.2.3

Similarly, defining MANPATH so that calls to man will read the manual for your build of the application first is worthwhile. You may find that $MANPATH is empty by default, so you will need to append other manual locations to it. An easy way to do this is by appending the output of the manpath utility:

$ man -k fooapp
$ manpath
/usr/local/man:/usr/local/share/man:/usr/share/man
$ export MANPATH="$HOME/share/man:$(manpath)"
$ man -k fooapp
fooapp (1) - Fooapp, the programmer's foo apper

This done, you should be able to use your private build of the software comfortably, and all without ever needing to reach for root.

Caveats

This tends to work best for userspace tools like editors or other interactive command-line apps; it even works for shells. However, this is not a typical use case for most applications, which expect to be packaged or compiled into /usr/local, so there are no guarantees it will work exactly as expected. I have found that Vim and Tmux work very well like this, even with Tmux linked against a home-compiled instance of libevent, on which it depends.

In particular, if any part of the install process requires root privileges, such as making a setuid binary, then things are likely not to work as expected.
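The export PATH="$HOME/bin:$PATH" idiom from the environment setup can also be reproduced inside a program. One example is a hypothetical wrapper that prepares the environment before launching a home-built tool. Here is a minimal C sketch, assuming the POSIX getenv() and setenv() functions; the name prepend_path_var is illustrative only.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Prepend DIR to the colon-separated list held in environment variable
 * NAME, like the shell idiom  export PATH="$HOME/bin:$PATH".
 * If NAME is unset or empty it is simply set to DIR.
 * Returns 0 on success and -1 on failure. */
int prepend_path_var(const char *name, const char *dir)
{
    const char *old = getenv(name);
    if (old == NULL || old[0] == '\0')
        return setenv(name, dir, 1);

    size_t len = strlen(dir) + 1 + strlen(old) + 1;   /* dir ':' old NUL */
    char *joined = malloc(len);
    if (joined == NULL)
        return -1;
    snprintf(joined, len, "%s:%s", dir, old);

    int rc = setenv(name, joined, 1);   /* setenv() stores its own copy */
    free(joined);
    return rc;
}
```

One design caveat: a running program's dynamic linker has already read LD_LIBRARY_PATH at startup. Changing that variable this way therefore only affects child processes started afterwards, for example through exec.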

Feb 14, 2012 | sanctum.geek.nz

When unexpected behaviour is noticed in a program, GNU/Linux provides a wide variety of command-line tools for diagnosing problems. The use of gdb, the GNU debugger, and related tools like the lesser-known Perl debugger will be familiar to those using IDEs to set breakpoints in their code and to examine program state as it runs. Other tools of interest are available, however, to observe in more detail how a program is interacting with a system and using its resources.

Debugging with gdb

You can use gdb in a very similar fashion to the built-in debuggers in modern IDEs like Eclipse and Visual Studio.

If you are debugging a program that you've just compiled, it makes sense to compile it with its debugging symbols added to the binary, which you can do with a gcc call containing the -g option. If you're having problems with some code, it also helps to use -Wall to show any warnings you might otherwise have missed:

$ gcc -g -Wall example.c -o example

The classic way to use gdb is as the shell for a running program compiled in C or C++, to allow you to inspect the program's state as it proceeds towards its crash.

$ gdb example
...
Reading symbols from /home/tom/example...done.
(gdb)

At the (gdb) prompt, you can type run to start the program, and it may provide you with more detailed information about the causes of errors such as segmentation faults, including the source file and line number at which the problem occurred. If you're able to compile the code with debugging symbols as above and inspect its running state like this, it makes figuring out the cause of a particular bug a lot easier.

(gdb) run
Starting program: /home/tom/gdb/example

Program received signal SIGSEGV, Segmentation fault.
0x000000000040072e in main () at example.c:43
43 printf("%d\n", *segfault);

After an error terminates the program within the (gdb) shell, you can type backtrace to see what the calling function was, which can include the specific parameters passed that may have something to do with what caused the crash.

(gdb) backtrace
#0 0x000000000040072e in main () at example.c:43

You can set breakpoints for gdb using the break command to halt the program's run if it reaches a matching line number or function call:

(gdb) break 42
Breakpoint 1 at 0x400722: file example.c, line 42.
(gdb) break malloc
Breakpoint 1 at 0x4004c0
(gdb) run
Starting program: /home/tom/gdb/example

Breakpoint 1, 0x00007ffff7df2310 in malloc () from /lib64/ld-linux-x86-64.so.2

Thereafter it's helpful to step through successive lines of code using step. You can repeat this, like any gdb command, by pressing Enter repeatedly to step through lines one at a time:

(gdb) step
Single stepping until exit from function _start,
which has no line number information.
0x00007ffff7a74db0 in __libc_start_main () from /lib/x86_64-linux-gnu/libc.so.6

You can even attach gdb to a process that is already running, by finding the process ID and passing it to gdb:

$ pgrep example
1524
$ gdb -p 1524

This can be useful for redirecting streams of output for a task that is taking an unexpectedly long time to run.

Debugging withvalgrindThe much newer valgrind can be used as a debugging tool in a similar way. There are many different checks and debugging methods this program can run, but one of the most useful is its Memcheck tool, which can be used to detect common memory errors like buffer overflow:

$ valgrind --leak-check=yes ./example ==29557== Memcheck, a memory error detector ==29557== Copyright (C) 2002-2011, and GNU GPL'd, by Julian Seward et al. ==29557== Using Valgrind-3.7.0 and LibVEX; rerun with -h for copyright info ==29557== Command: ./example ==29557== ==29557== Invalid read of size 1 ==29557== at 0x40072E: main (example.c:43) ==29557== Address 0x0 is not stack'd, malloc'd or (recently) free'd ==29557== ...The

Tracing system and library calls withgdbandvalgrindtools can be used together for a very thorough survey of a program's run. Zed Shaw's Learn C the Hard Way includes a really good introduction for elementary use ofvalgrindwith a deliberately broken program.ltraceThe

straceandltracetools are designed to allow watching system calls and library calls respectively for running programs, and logging them to the screen or, more usefully, to files.You can run

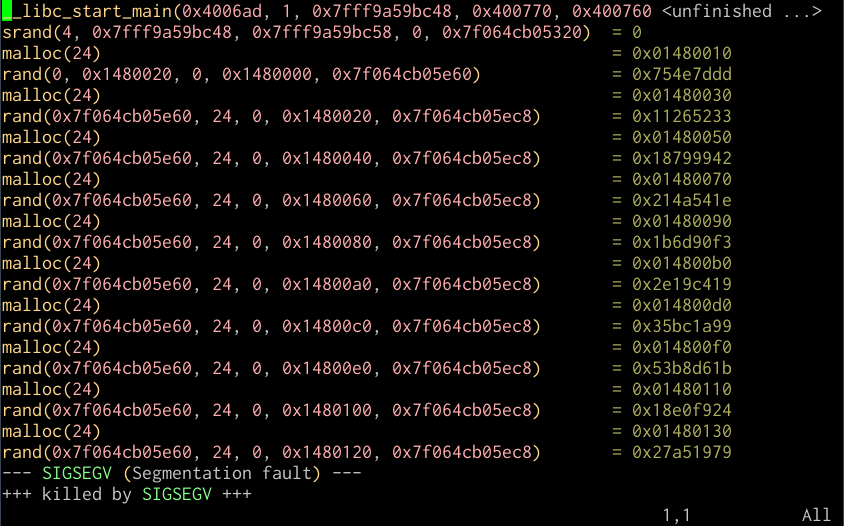

ltraceand have it run the program you want to monitor in this way for you by simply providing it as the sole parameter. It will then give you a listing of all the system and library calls it makes until it exits.$ ltrace ./example __libc_start_main(0x4006ad, 1, 0x7fff9d7e5838, 0x400770, 0x400760 srand(4, 0x7fff9d7e5838, 0x7fff9d7e5848, 0, 0x7ff3aebde320) = 0 malloc(24) = 0x01070010 rand(0, 0x1070020, 0, 0x1070000, 0x7ff3aebdee60) = 0x754e7ddd malloc(24) = 0x01070030 rand(0x7ff3aebdee60, 24, 0, 0x1070020, 0x7ff3aebdeec8) = 0x11265233 malloc(24) = 0x01070050 rand(0x7ff3aebdee60, 24, 0, 0x1070040, 0x7ff3aebdeec8) = 0x18799942 malloc(24) = 0x01070070 rand(0x7ff3aebdee60, 24, 0, 0x1070060, 0x7ff3aebdeec8) = 0x214a541e malloc(24) = 0x01070090 rand(0x7ff3aebdee60, 24, 0, 0x1070080, 0x7ff3aebdeec8) = 0x1b6d90f3 malloc(24) = 0x010700b0 rand(0x7ff3aebdee60, 24, 0, 0x10700a0, 0x7ff3aebdeec8) = 0x2e19c419 malloc(24) = 0x010700d0 rand(0x7ff3aebdee60, 24, 0, 0x10700c0, 0x7ff3aebdeec8) = 0x35bc1a99 malloc(24) = 0x010700f0 rand(0x7ff3aebdee60, 24, 0, 0x10700e0, 0x7ff3aebdeec8) = 0x53b8d61b malloc(24) = 0x01070110 rand(0x7ff3aebdee60, 24, 0, 0x1070100, 0x7ff3aebdeec8) = 0x18e0f924 malloc(24) = 0x01070130 rand(0x7ff3aebdee60, 24, 0, 0x1070120, 0x7ff3aebdeec8) = 0x27a51979 --- SIGSEGV (Segmentation fault) --- +++ killed by SIGSEGV +++You can also attach it to a process that's already running:

$ pgrep example 5138 $ ltrace -p 5138Generally, there's quite a bit more than a couple of screenfuls of text generated by this, so it's helpful to use the

-ooption to specify an output file to which to log the calls:$ ltrace -o example.ltrace ./exampleYou can then view this trace in a text editor like Vim, which includes syntax highlighting for

ltraceoutput:

Vim session with ltrace output

I've found ltrace very useful for debugging problems where I suspect improper linking may be at fault, or the absence of some needed resource in a chroot environment, since among its output it shows you its search for libraries at dynamic linking time and opening configuration files in /etc, and the use of devices like /dev/random or /dev/zero.

Tracking open files with lsof

If you want to view what devices, files, or streams a running process has open, you can do that with lsof:

$ pgrep example
5051
$ lsof -p 5051

For example, the first few lines of the apache2 process running on my home server are:

# lsof -p 30779
COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME
apache2 30779 root cwd DIR 8,1 4096 2 /
apache2 30779 root rtd DIR 8,1 4096 2 /
apache2 30779 root txt REG 8,1 485384 990111 /usr/lib/apache2/mpm-prefork/apache2
apache2 30779 root DEL REG 8,1 1087891 /lib/x86_64-linux-gnu/libgcc_s.so.1
apache2 30779 root mem REG 8,1 35216 1079715 /usr/lib/php5/20090626/pdo_mysql.so
...

Interestingly, another way to list the open files for a process is to check the corresponding entry for the process in the dynamic /proc directory:

# ls -l /proc/30779/fd

This can be very useful in confusing situations with file locks, or identifying whether a process is holding open files that it needn't.
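The /proc/PID/fd directory can also be read from C. Here is a minimal sketch, assuming a Linux /proc filesystem. Passing "self" inspects the calling process, and the function name count_open_fds is hypothetical. While the directory is being read, opendir() holds a descriptor of its own, and that descriptor is included in the count.

```c
#define _POSIX_C_SOURCE 200809L
#include <dirent.h>
#include <stdio.h>

/* Count the open file descriptors of process PID (a decimal PID string,
 * or "self") by listing /proc/PID/fd, the directory that
 * "ls -l /proc/PID/fd" shows. Returns -1 if it cannot be read. */
int count_open_fds(const char *pid)
{
    char path[64];
    snprintf(path, sizeof path, "/proc/%s/fd", pid);

    DIR *dir = opendir(path);
    if (dir == NULL)
        return -1;

    int count = 0;
    struct dirent *entry;
    while ((entry = readdir(dir)) != NULL) {
        if (entry->d_name[0] != '.')   /* skip "." and ".." */
            count++;
    }
    closedir(dir);
    return count;
}
```

Calling this before and after opening a file is a quick way to check for the "holding open files it needn't" situation described above, such as a descriptor leaked in a loop.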

Viewing memory allocation with pmap

As a final debugging tip, you can view the memory allocations for a particular process with pmap:

# pmap 30779
30779: /usr/sbin/apache2 -k start
00007fdb3883e000 84K r-x-- /lib/x86_64-linux-gnu/libgcc_s.so.1 (deleted)
00007fdb38853000 2048K ----- /lib/x86_64-linux-gnu/libgcc_s.so.1 (deleted)
00007fdb38a53000 4K rw--- /lib/x86_64-linux-gnu/libgcc_s.so.1 (deleted)
00007fdb38a54000 4K ----- [ anon ]
00007fdb38a55000 8192K rw--- [ anon ]
00007fdb392e5000 28K r-x-- /usr/lib/php5/20090626/pdo_mysql.so
00007fdb392ec000 2048K ----- /usr/lib/php5/20090626/pdo_mysql.so
00007fdb394ec000 4K r---- /usr/lib/php5/20090626/pdo_mysql.so
00007fdb394ed000 4K rw--- /usr/lib/php5/20090626/pdo_mysql.so
...
total 152520K

This will show you what libraries a running process is using, including those in shared memory. The total given at the bottom is a little misleading, as for loaded shared libraries the running process is not necessarily the only one using the memory; determining "actual" memory usage for a given process is a little more in-depth than it might seem with shared libraries added to the picture.

Unix as IDE: Building

Posted on February 13, 2012 by Tom Ryder

Because compiling projects can be such a complicated and repetitive process, a good IDE provides a means to abstract, simplify, and even automate software builds. Unix and its descendants accomplish this process with a Makefile, a prescribed recipe in a standard format for generating executable files from source and object files, taking account of changes to only rebuild what's necessary to prevent costly recompilation.

One interesting thing to note about make is that while it's generally used for compiled software build automation and has many shortcuts to that effect, it can actually effectively be used for any situation in which it's required to generate one set of files from another. One possible use is to generate web-friendly optimised graphics from source files for deployment for a website; another use is for generating static HTML pages from code, rather than generating pages on the fly. It's on the basis of this more flexible understanding of software "building" that modern takes on the tool like Ruby's rake have become popular, automating the general tasks for producing and installing code and files of all kinds.

Anatomy of a Makefile

The general pattern of a Makefile is a list of variables and a list of targets, and the sources and/or objects used to provide them. Targets may not necessarily be linked binaries; they could also constitute actions to perform using the generated files, such as install to instate built files into the system, and clean to remove built files from the source tree.

It's this flexibility of targets that enables make to automate any sort of task relevant to assembling a production build of software; not just the typical parsing, preprocessing, compiling proper and linking steps performed by the compiler, but also running tests (make test), compiling documentation source files into one or more appropriate formats, or automating deployment of code into production systems, for example, uploading to a website via a git push or similar content-tracking method.

An example Makefile for a simple software project might look something like the below:

all: example

example: main.o example.o library.o
	gcc main.o example.o library.o -o example

main.o: main.c
	gcc -c main.c -o main.o

example.o: example.c
	gcc -c example.c -o example.o

library.o: library.c
	gcc -c library.c -o library.o

clean:
	rm *.o example

install: example
	cp example /usr/bin

The above isn't the most optimal Makefile possible for this project, but it provides a means to build and install a linked binary simply by typing make. Each target definition contains a list of the dependencies required for the command that follows; this means that the definitions can appear in any order, and the call to make will call the relevant commands in the appropriate order.

Much of the above is needlessly verbose or repetitive; for example, if an object file is built directly from a single C file of the same name, then we don't need to include the target at all, and make will sort things out for us. Similarly, it would make sense to put some of the more repeated calls into variables so that we would not have to change them individually if our choice of compiler or flags changed. A more concise version might look like the following:

CC = gcc
OBJECTS = main.o example.o library.o
BINARY = example

all: example

example: $(OBJECTS)
	$(CC) $(OBJECTS) -o $(BINARY)

clean:
	rm -f $(BINARY) $(OBJECTS)

install: example
	cp $(BINARY) /usr/bin

More general uses of make

In the interests of automation, however, it's instructive to think of this a bit more generally than just code compilation and linking. An example could be a simple web project involving deploying PHP to a live webserver. This is not normally a task people associate with the use of make, but the principles are the same; with the source in place and ready to go, we have certain targets to meet for the build.

PHP files don't require compilation, of course, but web assets often do. An example that will be familiar to web developers is the generation of scaled and optimised raster images from vector source files, for deployment to the web. You keep and version your original source file, and when it comes time to deploy, you generate a web-friendly version of it.

Let's assume for this particular project that there's a set of four icons used throughout the site, sized to 64 by 64 pixels. We have the source files to hand in SVG vector format, safely tucked away in version control, and now need to generate the smaller bitmaps for the site, ready for deployment. We could therefore define a target icons, set the dependencies, and type out the commands to perform. This is where command line tools in Unix really begin to shine in use with Makefile syntax:

icons: create.png read.png update.png delete.png

create.png: create.svg
	convert create.svg create.raw.png && \
	pngcrush create.raw.png create.png

read.png: read.svg
	convert read.svg read.raw.png && \
	pngcrush read.raw.png read.png

update.png: update.svg
	convert update.svg update.raw.png && \
	pngcrush update.raw.png update.png

delete.png: delete.svg
	convert delete.svg delete.raw.png && \
	pngcrush delete.raw.png delete.png

With the above done, typing make icons will go through each of the source icon files, convert them from SVG to PNG using ImageMagick's convert, and optimise them with pngcrush, to produce images ready for upload.

A similar approach can be used for generating help files in various forms, for example, generating HTML files from Markdown source:

docs: README.html credits.html

README.html: README.md
	markdown README.md > README.html

credits.html: credits.md
	markdown credits.md > credits.html

And perhaps finally deploying a website with git push web, but only after the icons are rasterized and the documents converted:

deploy: icons docs
	git push web

For a more compact and abstract formula for turning a file of one suffix into another, you can use the .SUFFIXES special target to define suffix rules using special symbols. The code for converting icons could look like this; in this case, $< refers to the source file, $* to the filename with no extension, and $@ to the target.

icons: create.png read.png update.png delete.png

.SUFFIXES: .svg .png

.svg.png:
	convert $< $*.raw.png && \
	pngcrush $*.raw.png $@

Tools for building a Makefile

A variety of tools exist in the GNU Autotools toolchain for the construction of configure scripts and makefiles for larger software projects at a higher level, in particular autoconf and automake. The use of these tools allows generating configure scripts and makefiles covering very large source bases, reducing the necessity of building otherwise extensive makefiles manually, and automating steps taken to ensure the source remains compatible and compilable on a variety of operating systems.

Covering this complex process would be a series of posts in its own right, and is out of scope of this survey.

Thanks to user samwyse for the .SUFFIXES suggestion in the comments.

Unix as IDE: Compiling

Posted on February 12, 2012 by Tom Ryder

There are a lot of tools available for compiling and interpreting code on the Unix platform, and they tend to be used in different ways. However, conceptually many of the steps are the same. Here I'll discuss compiling C code with gcc from the GNU Compiler Collection, and briefly the use of perl as an example of an interpreter.

GCC

GCC is a very mature GPL-licensed collection of compilers, perhaps best-known for working with C and C++ programs. Its free software license and near ubiquity on free Unix-like systems like GNU/Linux and BSD has made it enduringly popular for these purposes, though more modern alternatives are available in compilers using the LLVM infrastructure, such as Clang.

The frontend binaries for GNU Compiler Collection are best thought of less as a set of complete compilers in their own right, and more as drivers for a set of discrete programming tools, performing parsing, compiling, and linking, among other steps. This means that while you can use GCC with a relatively simple command line to compile straight from C sources to a working binary, you can also inspect in more detail the steps it takes along the way and tweak it accordingly.

I won't be discussing the use of makefiles here, though you'll almost certainly be wanting them for any C project of more than one file; that will be discussed in the next article on build automation tools.

Compiling and assembling object code

You can compile object code from a C source file like so:

$ gcc -c example.c -o example.o

Assuming it's a valid C program, this will generate an unlinked binary object file called example.o in the current directory, or tell you the reasons it can't. You can inspect its assembler contents with the objdump tool:

$ objdump -D example.o

Alternatively, you can get gcc to output the appropriate assembly code for the object directly with the -S parameter:

$ gcc -c -S example.c -o example.s

This kind of assembly output can be particularly instructive, or at least interesting, when printed inline with the source code itself, which you can do with:

$ gcc -c -g -Wa,-a,-ad example.c > example.lst

Preprocessor

The C preprocessor cpp is generally used to include header files and define macros, among other things. It's a normal part of gcc compilation, but you can view the C code it generates by invoking cpp directly:

$ cpp example.c

This will print out the complete code as it would be compiled, with includes and relevant macros applied.
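To try the commands above on something concrete, here is a tiny hypothetical example.c (not the author's file). It contains one function-like macro, so the cpp output has something visible to expand, and it includes stdio.h, which shows how much header text an #include pulls in. The file has no main(), so it is meant for the -c, -S and cpp steps only. Linking it into an executable would also require a main().

```c
#include <stdio.h>

/* A function-like macro: in the output of "cpp example.c" this definition
 * is gone and each use below appears expanded to ((x) * (x)). */
#define SQUARE(x) ((x) * (x))

/* Sum of the squares of two integers. */
int sum_of_squares(int a, int b)
{
    return SQUARE(a) + SQUARE(b);
}
```

In the output of cpp example.c, the function body should read return ((a) * (a)) + ((b) * (b)); and is preceded by the expanded contents of stdio.h.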

Linking objects

One or more objects can be linked into appropriate binaries like so:

$ gcc example.o -o example

In this example, GCC is not doing much more than abstracting a call to ld, the GNU linker. The command produces an executable binary called example.

Compiling, assembling, and linking

All of the above can be done in one step with:

$ gcc example.c -o example

This is a little simpler, but compiling objects independently turns out to have some practical performance benefits in not recompiling code unnecessarily, which I'll discuss in the next article.

Including and linkingC files and headers can be explicitly included in a compilation call with the

-Iparameter:$ gcc -I/usr/include/somelib.h example.c -o exampleSimilarly, if the code needs to be dynamically linked against a compiled system library available in common locations like

/libor/usr/lib, such asncurses, that can be included with the-lparameter:$ gcc -lncurses example.c -o exampleIf you have a lot of necessary inclusions and links in your compilation process, it makes sense to put this into environment variables:

    $ export CFLAGS=-I/usr/include/somelib
    $ export CLIBS=-lncurses
    $ gcc $CFLAGS example.c -o example $CLIBS

This very common step is another thing that a Makefile is designed to abstract away for you.

Compilation plan

To inspect in more detail what gcc is doing with any call, you can add the -v switch to prompt it to print its compilation plan on the standard error stream:

    $ gcc -v -c example.c -o example.o

If you don't want it to actually generate object files or linked binaries, it's sometimes tidier to use -### instead:

    $ gcc -### -c example.c -o example.o

This is mostly instructive to see what steps the gcc binary is abstracting away for you, but in specific cases it can be useful to identify steps the compiler is taking that you may not necessarily want it to.

More verbose error checking

You can add the -Wall and/or -pedantic options to the gcc call to prompt it to warn you about things that may not necessarily be errors, but could be:

    $ gcc -Wall -pedantic -c example.c -o example.o

This is good for including in your Makefile or in your makeprg definition in Vim, as it works well with the quickfix window discussed in the previous article. It will also help you write more readable, compatible, and less error-prone code, as it warns you more extensively about potential problems.

Profiling compilation time

You can pass the flag -time to gcc to generate output showing how long each step is taking:

    $ gcc -time -c example.c -o example.o

Optimisation

You can pass generic optimisation options to gcc to make it attempt to build more efficient object files and linked binaries, at the expense of compilation time. I find -O2 is usually a happy medium for code going into production:

    gcc -O1
    gcc -O2
    gcc -O3

Like any other Bash command, all of this can be called from within Vim by:

    :!gcc % -o example

Interpreters

The approach to interpreted code on Unix-like systems is very different. In these examples I'll use Perl, but most of these principles will be applicable to interpreted Python or Ruby code, for example.

Inline

You can feed a string of Perl code directly to the interpreter in any one of the following ways, in this case printing the single line "Hello, world." to the screen, followed by a linebreak. The first one is perhaps the tidiest and most standard way to work with Perl; the second uses a here-string (supported by Bash), and the third a classic Unix shell pipe.

    $ perl -e 'print "Hello, world.\n";'
    $ perl <<<'print "Hello, world.\n";'
    $ echo 'print "Hello, world.\n";' | perl

Of course, it's more typical to keep the code in a file, which can be run directly:

    $ perl hello.pl

In either case, you can check the syntax of the code without actually running it with the -c switch:

    $ perl -c hello.pl

But to use the script as a logical binary, so you can invoke it directly without knowing or caring what the script is, you can add a special first line to the file called the "shebang" that does some magic to specify the interpreter through which the file should be run.
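The "magic" is done by the kernel rather than the shell: when execve(2) is asked to run a file whose first two bytes are #!, it reads the interpreter path from that first line and runs the interpreter instead, passing it the script's path. As a rough illustration only (parse_shebang is a hypothetical helper, not the kernel's actual code), the parsing step might look like this in C, assuming Linux's behaviour of passing everything after the interpreter path as a single optional argument:

```c
#include <string.h>

/* Hypothetical helper, not the kernel's code: split a "#!" line into the
   interpreter path and an optional argument. Like Linux, everything after
   the interpreter path (minus surrounding blanks) is kept as ONE argument.
   Returns 1 on success, 0 if the line is not a usable shebang. */
int parse_shebang(const char *line, char *interp, size_t isz,
                  char *arg, size_t asz)
{
    if (strncmp(line, "#!", 2) != 0)
        return 0;                               /* no magic number: not a script */

    const char *p = line + 2;
    while (*p == ' ' || *p == '\t')
        p++;                                    /* blanks after "#!" are allowed */
    const char *start = p;
    while (*p != '\0' && *p != ' ' && *p != '\t' && *p != '\n')
        p++;
    size_t n = (size_t)(p - start);
    if (n == 0 || n >= isz)
        return 0;
    memcpy(interp, start, n);
    interp[n] = '\0';

    while (*p == ' ' || *p == '\t')
        p++;
    start = p;
    while (*p != '\0' && *p != '\n')
        p++;
    while (p > start && (p[-1] == ' ' || p[-1] == '\t'))
        p--;                                    /* trim trailing blanks */
    n = (size_t)(p - start);
    if (n >= asz)
        return 0;
    memcpy(arg, start, n);
    arg[n] = '\0';                              /* empty string if no argument */
    return 1;
}
```

For a first line of #!/usr/bin/env perl this yields /usr/bin/env as the interpreter and perl as its argument, so running ./hello amounts to running /usr/bin/env perl ./hello, and env then finds perl on your PATH.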

    #!/usr/bin/env perl
    print "Hello, world.\n";

The script then needs to be made executable with a chmod call. It's also good practice to rename it to remove the extension, since it is now taking the shape of a logical binary:

    $ mv hello{.pl,}
    $ chmod +x hello

It can thereafter be invoked directly, as if it were a compiled binary:

    $ ./hello

This works so transparently that many of the common utilities on modern GNU/Linux systems, such as the adduser frontend to useradd, are actually Perl or even Python scripts.

In the next post, I'll describe the use of make for defining and automating building projects in a manner comparable to IDEs, with a nod to newer takes on the same idea with Ruby's rake.

Unix as IDE: Editing

Posted on February 11, 2012 by Tom Ryder

The text editor is the core tool for any programmer, which is why choice of editor evokes such tongue-in-cheek zealotry in debate among programmers. Unix is the operating system most strongly linked with two enduring favourites, Emacs and Vi, and their modern versions in GNU Emacs and Vim, two editors with very different editing philosophies but comparable power.

Being a Vim heretic myself, here I'll discuss the indispensable features of Vim for programming, and in particular the use of shell tools called from within Vim to complement the editor's built-in functionality. Some of the principles discussed here will be applicable to those using Emacs as well, but probably not for underpowered editors like Nano.

This will be a very general survey, as Vim's toolset for programmers is enormous, and it'll still end up being quite long. I'll focus on the essentials and the things I feel are most helpful, and try to provide links to articles with a more comprehensive treatment of the topic. Don't forget that Vim's :help has surprised many people new to the editor with its high quality and usefulness.

Filetype detection

Vim has built-in settings to adjust its behaviour, in particular its syntax highlighting, based on the filetype being loaded, which it detects automatically and generally does a good job of. In particular, this allows you to set an indenting style conformant with the way a particular language is usually written. This should be one of the first things in your .vimrc file.

    if has("autocmd")
      filetype on
      filetype indent on
      filetype plugin on
    endif

Syntax highlighting

Even if you're just working with a 16-color terminal, include the following in your .vimrc if it isn't there already:

    syntax on

The colorschemes with a default 16-color terminal are not pretty, largely by necessity, but they do the job, and for most languages syntax definition files are available that work very well. There's a tremendous array of colorschemes available, and it's not hard to tweak them to suit or even to write your own. Using a 256-color terminal or gVim will give you more options. Good syntax highlighting files will show you definite syntax errors with a glaring red background.

Line numbering

To turn line numbers on if you use them a lot in your traditional IDE:

    set number

You might like to try this as well, if you have at least Vim 7.3 and are keen to try numbering lines relative to the current line rather than absolutely:

    set relativenumber

Tags files

Vim works very well with the output from the ctags utility. This allows you to jump quickly to the definition of a particular identifier anywhere in the project, or to navigate straight to the declaration of a variable from one of its uses, regardless of whether it's in the same file. For large C projects in multiple files this can save huge amounts of otherwise wasted time, and is probably Vim's best answer to similar features in mainstream IDEs.

You can run :!ctags -R on the root directory of projects in many popular languages to generate a tags file filled with definitions and locations for identifiers throughout your project. Once a tags file for your project is available, you can jump to where a tag is defined like so:

    :tag someClass

The commands :tn and :tp will allow you to iterate through successive matches for the tag elsewhere in the project. The built-in tags functionality for this already covers most of the bases you'll probably need, but for features such as a tag list window, you could try installing the very popular Taglist plugin. Tim Pope's Unimpaired plugin also contains a couple of useful relevant mappings.

Calling external programs

Until 2017, there were three major methods of calling external programs during a Vim session:

- :!<command> -- Useful for issuing commands from within a Vim context, particularly in cases where you intend to record output in a buffer.
- :shell -- Drop to a shell as a subprocess of Vim. Good for interactive commands.
- Ctrl-Z -- Suspend Vim and issue commands from the shell that called it.
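The first method hands the command string to the shell named in Vim's 'shell' option (using 'shellcmdflag', which is -c by default on Unix), and :r! additionally reads the command's output back into the buffer. As a loose analogy rather than a description of Vim's internals, C's popen(3) does much the same job: it runs a command through /bin/sh -c and lets the caller read its output. The helper name capture_output below is hypothetical:

```c
#define _POSIX_C_SOURCE 200809L  /* expose popen()/pclose() under strict ISO C modes */
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Run cmd through /bin/sh -c and return its standard output as a
   NUL-terminated, heap-allocated string (caller frees), or NULL on error. */
char *capture_output(const char *cmd)
{
    FILE *fp = popen(cmd, "r");
    if (fp == NULL)
        return NULL;

    size_t cap = 256, len = 0;
    char *buf = malloc(cap);
    if (buf == NULL) {
        pclose(fp);
        return NULL;
    }

    int c;
    while ((c = fgetc(fp)) != EOF) {
        if (len + 1 >= cap) {                  /* keep room for the terminator */
            char *tmp = realloc(buf, cap * 2);
            if (tmp == NULL) {
                free(buf);
                pclose(fp);
                return NULL;
            }
            buf = tmp;
            cap *= 2;
        }
        buf[len++] = (char)c;
    }
    buf[len] = '\0';
    pclose(fp);                                /* waits for the shell to exit */
    return buf;
}
```

Calling capture_output("ls") gives you roughly the text that :r!ls would paste into a buffer.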

Since 2017, Vim 8.x now includes a

Lint programs and syntax checkers:terminalcommand to bring up a terminal emulator buffer in a window. This seems to work better than previous plugin-based attempts at doing this, such as Conque . For the moment I still strongly recommend using one of the older methods, all of which also work in othervi-type editors.Checking syntax or compiling with an external program call (e.g.

perl -c,gcc) is one of the calls that's good to make from within the editor using:!commands. If you were editing a Perl file, you could run this like so::!perl -c % /home/tom/project/test.pl syntax OK Press Enter or type command to continueThe

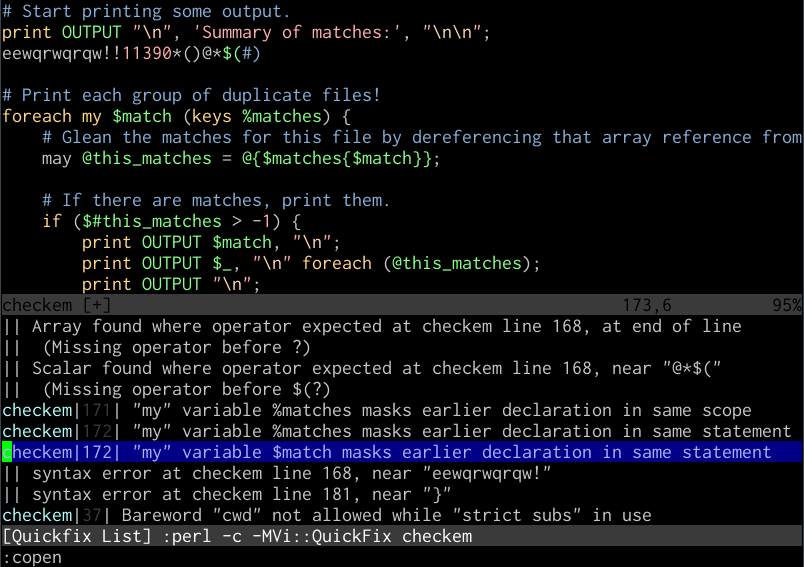

%symbol is shorthand for the file loaded in the current buffer. Running this prints the output of the command, if any, below the command line. If you wanted to call this check often, you could perhaps map it as a command, or even a key combination in your.vimrcfile. In this case, we define a command:PerlLintwhich can be called from normal mode with\l:command PerlLint !perl -c % nnoremap <leader>l :PerlLint<CR>For a lot of languages there's an even better way to do this, though, which allows us to capitalise on Vim's built-in quickfix window. We can do this by setting an appropriate

makeprgfor the filetype, in this case including a module that provides us with output that Vim can use for its quicklist, and a definition for its two formats::set makeprg=perl\ -c\ -MVi::QuickFix\ % :set errorformat+=%m\ at\ %f\ line\ %l\. :set errorformat+=%m\ at\ %f\ line\ %lYou may need to install this module first via CPAN, or the Debian package

libvi-quickfix-perl. This done, you can type:makeafter saving the file to check its syntax, and if errors are found, you can open the quicklist window with:copento inspect the errors, and:cnand:cpto jump to them within the buffer.

Vim quickfix working on a Perl file
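To make the errorformat lines above more concrete: in each pattern, %m stands for the message, %f for the file name and %l for the line number. As a rough sketch of that matching (not Vim's actual implementation; parse_perl_error and struct qf_entry are hypothetical names), this C function pulls the same three pieces out of a typical Perl diagnostic such as syntax error at hello.pl line 3, near "print":

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical quickfix entry: the three things errorformat captures. */
struct qf_entry {
    char file[256];  /* %f */
    long line;       /* %l */
    char msg[256];   /* %m */
};

/* Rough stand-in for the pattern "%m at %f line %l": split a Perl
   diagnostic at the first " at " that is followed by "<file> line <digits>".
   Returns 1 on a match, 0 otherwise. */
int parse_perl_error(const char *s, struct qf_entry *e)
{
    for (const char *at = strstr(s, " at "); at != NULL; at = strstr(at + 1, " at ")) {
        const char *file = at + 4;
        const char *ln = strstr(file, " line ");
        if (ln == NULL || ln == file || !isdigit((unsigned char)ln[6]))
            continue;                         /* not "<file> line <n>": keep looking */
        size_t mlen = (size_t)(at - s), flen = (size_t)(ln - file);
        if (mlen >= sizeof e->msg || flen >= sizeof e->file)
            return 0;                         /* too long for this sketch's buffers */
        memcpy(e->msg, s, mlen);
        e->msg[mlen] = '\0';
        memcpy(e->file, file, flen);
        e->file[flen] = '\0';
        e->line = strtol(ln + 6, NULL, 10);
        return 1;
    }
    return 0;
}
```

Vim applies its patterns to every line of :make output, and the resulting file, line and message triples are what :copen lists and what :cn and :cp step through.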

This also works for output from gcc, and pretty much any other compiler or syntax checker that you might want to use that includes filenames, line numbers, and error strings in its error output. It's even possible to do this with web-focused languages like PHP, and for tools like JSLint for JavaScript. There's also an excellent plugin named Syntastic that does something similar.

Reading output from other commands

You can use :r! to call commands and paste their output directly into the buffer with which you're working. For example, to pull a quick directory listing for the current folder into the buffer, you could type:

    :r!ls

This doesn't just work for commands, of course; you can simply read in other files this way with just :r, like public keys or your own custom boilerplate:

    :r ~/.ssh/id_rsa.pub
    :r ~/dev/perl/boilerplate/copyright.pl

Filtering output through other commands

You can extend this to actually filter text in the buffer through external commands, perhaps selected by a range or visual mode, and replace it with the command's output. While Vim's visual block mode is great for working with columnar data, it's very often helpful to bust out tools like column, cut, sort, or awk.

For example, you could sort the entire file in reverse by the second column by typing:

    :%!sort -k2,2r

You could print only the third column of some selected text where the line matches the pattern /vim/ with:

    :'<,'>!awk '/vim/ {print $3}'

You could arrange keywords from lines 1 to 10 in nicely formatted columns like:

    :1,10!column -t

Really any kind of text filter or command can be manipulated like this in Vim, a simple interoperability feature that expands what the editor can do by an order of magnitude. It effectively makes the Vim buffer into a text stream, which is a language that all of these classic tools speak.
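What makes these tools interchangeable is that each one reads lines on standard input and writes lines on standard output. As a small sketch of that contract, here is a C function (the name third_field_of_vim_lines is hypothetical) that does the same job as the awk '/vim/ {print $3}' example, splitting fields on runs of spaces and tabs and, like awk, printing an empty line when a matching line has fewer than three fields. It assumes lines shorter than 4096 bytes:

```c
#include <stdio.h>
#include <string.h>

/* Write the third blank-separated field of every line of `in` that
   contains "vim" to `out`, one per line, like awk '/vim/ {print $3}'.
   Assumes input lines are shorter than 4096 bytes. */
void third_field_of_vim_lines(FILE *in, FILE *out)
{
    char line[4096];
    while (fgets(line, sizeof line, in) != NULL) {
        if (strstr(line, "vim") == NULL)
            continue;                          /* pattern doesn't match: skip */

        const char *field = "";                /* awk prints "" if $3 is missing */
        size_t len = 0;
        int n = 0;
        const char *p = line;
        while (*p != '\0') {
            while (*p == ' ' || *p == '\t' || *p == '\n')
                p++;                           /* skip the separator run */
            if (*p == '\0')
                break;
            const char *start = p;
            while (*p != '\0' && *p != ' ' && *p != '\t' && *p != '\n')
                p++;
            if (++n == 3) {
                field = start;
                len = (size_t)(p - start);
                break;
            }
        }
        fprintf(out, "%.*s\n", (int)len, field);
    }
}
```

Wrapped in a main() that calls third_field_of_vim_lines(stdin, stdout), the compiled program could be used from Vim exactly like the awk call, with :'<,'>! followed by the program's path.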

There is a lot more detail on this in my "Shell from Vi" post.

Built-in alternatives

It's worth noting that for really common operations like sorting and searching, Vim has built-in methods in :sort and :vimgrep, which can be helpful if you're stuck using Vim on Windows, but which don't have nearly the adaptability of shell calls.

Diffing

Vim has a diffing mode, vimdiff, which allows you not only to view the differences between different versions of a file, but also to resolve conflicts via a three-way merge and to move differences to and fro with commands like :diffput and :diffget for ranges of text. You can call vimdiff from the command line directly with at least two files to compare like so:

    $ vimdiff file-v1.c file-v2.c

Vim diffing a .vimrc file

Version control

You can call version control methods directly from within Vim, which is probably all you need most of the time. It's useful to remember here that % is always a shortcut for the buffer's current file:

    :!svn status
    :!svn add %
    :!git commit -a

Recently a clear winner for Git functionality with Vim has come up with Tim Pope's Fugitive, which I highly recommend to anyone doing Git development with Vim. There'll be a more comprehensive treatment of version control's basis and history in Unix in Part 7 of this series.

The difference

Part of the reason Vim is thought of as a toy or relic by a lot of programmers used to GUI-based IDEs is that it's seen as just a tool for editing files on servers, rather than a very capable editing component for the shell in its own right. Because its built-in features compose so well with external tools on Unix-friendly systems, it becomes a text editing powerhouse that sometimes surprises even experienced users.

Oct 30, 2017 | sanctum.geek.nz

In programming, a library is an assortment of pre-compiled pieces of code that can be reused in a program. Libraries simplify life for programmers, in that they provide reusable functions, routines, classes, data structures and so on (written by another programmer), which they can use in their programs.

For instance, if you are building an application that needs to perform math operations, you don't have to create a new math function for that; you can simply use existing functions in libraries for that programming language.
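In C, the most familiar case is the standard C library itself, which gcc links into every C program by default. As a small sketch (sort_ints and compare_ints are illustrative names), rather than writing a sorting routine yourself you can hand the work to the library's qsort() and supply only a comparison callback:

```c
#include <stdlib.h>

/* Comparison callback for qsort(): negative, zero or positive, like strcmp. */
static int compare_ints(const void *a, const void *b)
{
    int x = *(const int *)a;
    int y = *(const int *)b;
    return (x > y) - (x < y);   /* avoids the overflow that x - y could cause */
}

/* Sort an int array in place using the C library's qsort(). */
void sort_ints(int *values, size_t count)
{
    qsort(values, count, sizeof *values, compare_ints);
}
```

The sorting algorithm itself lives in libc; your program only contributes the small comparison function.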

Examples of libraries in Linux include libc (the standard C library) or glibc (GNU version of the standard C library), libcurl (multiprotocol file transfer library), libcrypt (library used for encryption, hashing, and encoding in C) and many more.

Linux supports two classes of libraries, namely:

- Static libraries – are bound to a program statically at link time.

- Dynamic or shared libraries – are loaded into memory when a program is launched, and binding occurs at run time.

Dynamic or shared libraries can further be categorized into:

- Dynamically linked libraries – here a program is linked with the shared library, and the dynamic linker loads the library (in case it's not already in memory) upon execution.

- Dynamically loaded libraries – the program takes full control, loading the library and calling functions within it by itself at run time (for example with dlopen()).

Shared Library Naming Conventions

Shared libraries are named in two ways: the library name (a.k.a. soname) and a "filename" (the absolute path to the file which stores the library code).

For example, the soname for libc is libc.so.6, where lib is the prefix, c is a descriptive name, so means shared object, and 6 is the version. Its filename is /lib64/libc.so.6. Note that the soname is typically a symbolic link to the file that actually holds the library code.
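The lib<name>.so.<version> convention is regular enough to take apart mechanically. A minimal sketch, assuming the simple pattern described above (split_soname is a hypothetical helper; names that don't start with lib, such as the dynamic linker's own soname, are rejected):

```c
#include <string.h>

/* Hypothetical helper: split a soname such as "libc.so.6" into its
   descriptive name ("c") and version ("6"), following the
   lib<name>.so.<version> convention. Returns 1 on success, 0 if the
   string doesn't follow that pattern or a buffer is too small. */
int split_soname(const char *soname, char *name, size_t nsz,
                 char *version, size_t vsz)
{
    if (strncmp(soname, "lib", 3) != 0)
        return 0;                              /* missing "lib" prefix */
    const char *base = soname + 3;
    const char *so = strstr(base, ".so.");
    if (so == NULL || so == base)
        return 0;                              /* no ".so." or empty name */

    size_t nlen = (size_t)(so - base);
    const char *ver = so + 4;
    size_t vlen = strlen(ver);
    if (vlen == 0 || nlen >= nsz || vlen >= vsz)
        return 0;

    memcpy(name, base, nlen);
    name[nlen] = '\0';
    memcpy(version, ver, vlen + 1);            /* copies the terminator too */
    return 1;
}
```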

Locating Shared Libraries in Linux

Shared libraries are loaded by the ld.so (or ld.so.x) and ld-linux.so (or ld-linux.so.x) programs, where x is the version. In Linux, /lib/ld-linux.so.x searches for and loads all shared libraries used by a program.
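The dynamically loaded libraries described earlier go through the same dynamic linker, but at the program's own request. A minimal sketch using the POSIX dlopen(3) and dlsym(3) interface, assuming a glibc-based system where the maths library's soname is libm.so.6 (call_from_library is an illustrative name; glibc versions older than 2.34 also need -ldl at link time):

```c
#include <dlfcn.h>
#include <stdio.h>

/* Load library `lib` at run time, look up a function of type
   double(double) named `sym`, call it with x and store the result in
   *result. Returns 0 on success, -1 on failure. */
int call_from_library(const char *lib, const char *sym, double x, double *result)
{
    void *handle = dlopen(lib, RTLD_NOW);      /* resolve all symbols now */
    if (handle == NULL) {
        fprintf(stderr, "dlopen: %s\n", dlerror());
        return -1;
    }

    dlerror();                                 /* clear any stale error */
    double (*fn)(double);
    *(void **)(&fn) = dlsym(handle, sym);      /* cast idiom from dlsym(3) */
    const char *err = dlerror();
    if (err != NULL) {
        fprintf(stderr, "dlsym: %s\n", err);
        dlclose(handle);
        return -1;
    }

    *result = fn(x);
    dlclose(handle);
    return 0;
}
```

Because the library is named only by its soname, the dynamic linker searches its usual locations for it, just as it would for a library linked in at build time.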