
(slightly skeptical) Educational society promoting "Back to basics" movement against IT overcomplexity and bastardization of classic Unix


No matter how secure and robust your web, mail and other servers are, compromised and/or corrupted DNS systems can prevent customers and legitimate users from ever reaching them.


RFC 2541 and the NSA, CERT and Center for Internet Security (CIS) guidelines are good documents, and administrators are expected to be familiar with them.

The original DNS design focused on availability, not security, and included no authentication. Still, DNS is used as a key component of security infrastructure, as firewall rules usually operate with DNS names, not actual IP addresses. Because of a lack of understanding of the critical role of DNS in the security infrastructure, companies often neglect to manage and configure DNS properly. “It is clear that a stunning number of companies have serious DNS configuration problems which can lead to failure at any time,” states Cricket Liu, co-author of the O'Reilly Nutshell Handbook ‘DNS and BIND’. “It’s unfortunately widely known that DNS health on a global scale is poor. Anyone doing business on the Internet needs to take DNS outages seriously.”

There are four major points of attack: cache spoofing, traffic diversion, distributed denial-of-service (DDoS) attacks on the top-level domain servers themselves, and buffer overflow attacks (see, for example, Attacking_the_DNS_Protocol). Add to this attacks on the underlying OS. Such attacks put critical enterprise infrastructure, such as web presence and e-mail traffic, at risk. Therefore adequate attention needs to be paid to securing DNS servers and the OS on which they run. Generally, the OS should be secured to the level typical of a bastion host and have its internal firewall enabled.

Approximately 80% of DNS servers run BIND, so it might make sense to stay away from the crowd. While abandoning BIND might be unwise, the first line of defense is to use a platform other than x86, an OS other than Linux (Solaris, AIX or OpenBSD), and to compile BIND with a compiler other than gcc (for example, Studio 11 on Solaris). You can also buy a DNS appliance, but this is a mixed blessing.

Solaris x86 can be run under Oracle VM, as Oracle servers are way too expensive for this purpose.

At the 2001 ICANN security meeting, Patrick Thibodeau said:

“Some of the vulnerabilities potentially affecting the domain name system include its heavy reliance on Berkeley Internet Name Domain (BIND) software, which is freely distributed by the Internet Software Consortium.”

In the summer of 1997, Eugene Kashpureff demonstrated the vulnerability of DNS quite convincingly by performing (as a protest against the InterNIC's monopoly over top-level domain names) the mother of all DNS hacks: the redirection of all traffic destined for the InterNIC to his own Alternic.net service (see Kashpureff to face federal charges, CNET News.com). More commonly, a DNS system that is not properly hardened or configured tells the world quite a lot, including things you do not want the world to know. This can simplify attacks on other elements of the IS infrastructure.

DNS vulnerabilities often make it to the very top of lists of the most critical security vulnerabilities, but that does not mean that understanding of DNS server security improves as a result :-). See the SANS Institute paper "How To Eliminate The Ten Most Critical Internet Security Threats: The Experts’ Consensus," 12 July 2000, Version 1.25, URL: http://www.sans.org/topten.htm (23 July 2000).

DNS, like many of the older protocols, was developed at a time when the Internet was a research network and was meant to provide a simple and unlimited way to provide information about what computers you have to anyone else. Obviously the Internet has changed, and changes to BIND 9 have improved our ability to lock down DNS.

Among important DNS security practices are:

In a firewalled environment, you need to split DNS servers into internal and external ones.

The simplest DNS checks can be done using "dig" (Domain Information Groper). For instance, the command

dig -t txt -c chaos VERSION.BIND @yourcompany.com

will query for the version of BIND running on your DNS name server. You can hide the real version string by overriding it with the version option in named.conf:

options {
    ...
    version "0.1";
};
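To confirm that the decoy string is what each server actually reports, you can loop over your name servers with dig. The following is a minimal sketch, assuming bash and a dig that supports +short. check_version_hidden is a hypothetical helper: you pass it the decoy you configured (0.1 above) and your own server names, and it treats an empty answer as hidden.

```shell
#!/usr/bin/env bash
# Sketch: report which name servers disclose something other than the decoy
# string in the CHAOS-class TXT record version.bind.
# Usage: check_version_hidden DECOY NS...   (hypothetical helper)
check_version_hidden() {
  local decoy=$1 ns answer
  shift
  for ns in "$@"; do
    # +short prints only the TXT data, wrapped in double quotes, e.g. "0.1"
    answer=$(dig +short -c chaos -t txt version.bind "@$ns" 2>/dev/null)
    answer=${answer//\"/}    # strip the surrounding quotes
    if [[ -z $answer || $answer == "$decoy" ]]; then
      echo "$ns: ok (${answer:-no answer})"
    else
      echo "$ns: EXPOSED ($answer)"
    fi
  done
}
```

For example, check_version_hidden 0.1 ns1.example.com ns2.example.com prints one line per server. The host names in that call are placeholders.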

But there are a lot of subtle things that you may miss in manual checks, so using hardening tools is desirable. They do not guarantee anything, as your understanding of the DNS infrastructure cannot be matched by the tools, but they can pinpoint some blunders and inconsistencies, and they are much more diligent than an individual sysadmin ;-).

Protecting DNS has several dimensions.

Since DNS security is one of the most complicated topics in DNS, and you can definitely go too far and get a zero or negative return on investment, it is critical to understand first what you want to secure, or rather what threat level you want to secure against. This will be very different if you run a large corporate DNS server versus a modest in-house DNS server for a couple of low-volume web sites. Security is always a blend of real threat and paranoia, but remember: just because you are naturally paranoid does not mean that they are not after you... Some security measures, like running BIND in a chrooted environment and split DNS, are universal. Solaris Zones are an even better solution. Others, like blocking zone transfers, make more sense for external DNS.

If DNS is installed on Windows 2000, it should comply with the NSA guidelines in Guide to Securing Microsoft Windows 2000 DNS. This document contains a lot of useful information about securing Unix-based DNS servers too.

Zone transfers pose a significant risk for organizations running DNS. While there are legitimate and necessary reasons for zone transfers, an attacker may request a zone transfer from any domain name server or secondary name server on the Internet. Attackers do this to gather intimate details of an organization’s internal network and use this information for further reconnaissance or as a launch pad for an attack. So the set of IP addresses from which zone transfers are accepted should be restricted.
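In BIND this restriction is expressed with the allow-transfer statement, either per zone or in the global options block. The sketch below is a hypothetical bash helper (zone_stanza is not part of BIND) that only prints a master zone stanza listing the secondaries allowed to pull the zone. Called with no addresses, it emits allow-transfer { none; }. The zone name, file path and addresses in the usage line are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: print a master zone stanza whose AXFR access is limited to the
# listed secondary servers. Usage: zone_stanza ZONE FILE [SECONDARY_IP...]
zone_stanza() {
  local zone=$1 file=$2 acl="" ip
  shift 2
  for ip in "$@"; do acl+="$ip; "; done
  [[ -z $acl ]] && acl="none; "    # no secondaries: refuse all transfers
  cat <<EOF
zone "$zone" {
    type master;
    file "$file";
    allow-transfer { ${acl}};
};
EOF
}
```

For example, zone_stanza example.com /etc/bind/db.example.com 192.0.2.2 prints a stanza that only 192.0.2.2 may transfer. Paste the output into named.conf and reload.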

The attacker’s job is made even easier by the existence of legitimate websites that host DNS tools that ease reconnaissance.

The risk that zone transfers pose may be reduced by adopting a split-DNS architecture. Split DNS uses a public DNS server for publicly reachable services within the DMZ, and a private DNS server for the private internal network. The public DNS server and, usually, the public www and mail servers are the only servers defined in the public DNS server’s database, while the internal DNS server contains all the private server and workstation information for the internal network in its DNS databases.
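Once the split is in place, it is worth checking that internal names really do not resolve on the public server. A minimal sketch, assuming you keep your own list of internal host names and know the external server's address. find_leaks is a hypothetical helper. An empty +short answer is treated as "not published", although it can also mean the server did not answer at all.

```shell
#!/usr/bin/env bash
# Sketch: print every internal host name that the external (public) DNS
# server answers for. Usage: find_leaks EXTERNAL_NS NAME...
find_leaks() {
  local ns=$1 name answer
  shift
  for name in "$@"; do
    answer=$(dig +short "@$ns" "$name" A 2>/dev/null)
    if [[ -n $answer ]]; then
      # join multi-line answers onto one line
      echo "LEAK: $name -> ${answer//$'\n'/ }"
    fi
  done
}
```

Silence means that none of the listed names resolved on the external server.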

If internal users are allowed to access the Internet, the firewall should allow the internal DNS server to query the Internet. All DNS queries from the Internet should use the external DNS server. Outbound DNS queries from the external DNS server should also be permitted.

BIND version 8.1 (and higher) uses the “allow-query” directive, set in named.conf, to restrict by IP address which clients may query the server.
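Here is a minimal sketch of what such access lists can look like in named.conf, printed by a hypothetical bash helper. It assumes a server meant only for internal clients. For a public authoritative server you would normally leave queries open and turn recursion off instead. The acl name trusted and the networks are placeholders, and allow-recursion is shown alongside allow-query because the two are usually restricted together. Merge the printed statements into your existing options block rather than adding a second one.

```shell
#!/usr/bin/env bash
# Sketch: emit an acl plus options statements that answer (and recurse) only
# for the listed networks. Usage: query_acl NETWORK...
query_acl() {
  local net
  echo 'acl "trusted" {'
  for net in "$@"; do echo "    $net;"; done
  echo '    localhost;'
  echo '};'
  echo 'options {'
  echo '    allow-query { trusted; };'
  echo '    allow-recursion { trusted; };'
  echo '};'
}
```

For example, query_acl 192.168.0.0/24 10.0.0.0/8 prints the acl with both networks plus localhost.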

The BIND tar file includes the tool “dig” (Domain Information Groper), which may be used to debug DNS servers and test security by generating queries that run against the server. For instance, the command “dig -t txt -c chaos VERSION.BIND @yourcompany.com” will query for the version of BIND running on the DNS name server. BIND also comes with an older tool called “nslookup”, which is useful for inverse (IP address to host name) DNS queries. For instance, the command “nslookup 10.5.4.3” will actually perform a PTR query for the domain name 3.4.5.10.in-addr.arpa (the octets of the IP address are reversed).
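To make the reversal concrete, here is a small bash sketch that builds the in-addr.arpa name a reverse lookup asks for. reverse_name is a hypothetical helper and handles IPv4 only. In practice, dig -x 10.5.4.3 constructs this name for you.

```shell
#!/usr/bin/env bash
# Sketch: build the in-addr.arpa name queried by a reverse (PTR) lookup
# of an IPv4 address: octets reversed, suffix appended.
reverse_name() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"    # split the address on dots
  if (( ${#o[@]} != 4 )); then
    echo "not an IPv4 address: $1" >&2
    return 1
  fi
  echo "${o[3]}.${o[2]}.${o[1]}.${o[0]}.in-addr.arpa"
}
```

For example, reverse_name 10.5.4.3 prints 3.4.5.10.in-addr.arpa, the name from the nslookup example above.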

A stateful firewall should enforce packet filtering for UDP/TCP port 53 (DNS). By doing so, IP packets bound for UDP port 53 from the Internet are limited to authorized replies to queries made from the internal network. If such a packet is not a reply to a request from the internal DNS server, the firewall denies it. The firewall should also deny IP packets bound for TCP port 53 on the internal DNS server to prevent unauthorized zone transfers. This is redundant if access control has been established in BIND, but it provides “defense in depth”.
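The policy above can be sketched for a Linux host with iptables. This is a dry-run sketch: dns_rules is a hypothetical helper that only prints commands for the DNS server's own INPUT chain. It assumes the conntrack match is available, and you supply the internal network as a parameter. One caution: TCP port 53 also carries ordinary queries whose answers do not fit in UDP. For that reason the sketch admits TCP from internal clients and drops it from everyone else, rather than dropping all TCP.

```shell
#!/usr/bin/env bash
# Dry-run sketch: print (do not apply) iptables rules for an internal DNS
# server. Usage: dns_rules INTERNAL_NET   e.g. dns_rules 192.168.0.0/16
dns_rules() {
  local net=$1 proto
  # Replies to traffic this host initiated (e.g. its own outbound queries).
  echo "iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT"
  for proto in udp tcp; do
    # Internal clients may query over UDP and TCP.
    echo "iptables -A INPUT -p $proto --dport 53 -s $net -j ACCEPT"
  done
  for proto in udp tcp; do
    # Anything else aimed at port 53, including outside AXFR attempts, is dropped.
    echo "iptables -A INPUT -p $proto --dport 53 -j DROP"
  done
}
```

Review the printed commands before running them as root. Rule order matters, because the ACCEPT lines must come before the DROP lines.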

Dr. Nikolai Bezroukov


May 08, 2020 | www.redhat.com

In part 1 of this article, I introduced you to Unbound, a great name resolution option for home labs and small network environments. We looked at what Unbound is, and we discussed how to install it. In this section, we'll work on the basic configuration of Unbound.

Basic configuration

First find and uncomment these two entries in unbound.conf:

interface: 0.0.0.0
interface: ::0

Here, the 0 entry indicates that we'll be accepting DNS queries on all interfaces. If you have more than one interface in your server and need to manage where DNS is available, you would put the address of the interface here.

Next, we may want to control who is allowed to use our DNS server. We're going to limit access to the local subnets we're using. It's a good basic practice to be specific when we can:

access-control: 127.0.0.0/8 allow # (allow queries from the local host)
access-control: 192.168.0.0/24 allow
access-control: 192.168.1.0/24 allow

We also want to add an exception for local, unsecured domains that aren't using DNSSEC validation:

domain-insecure: "forest.local"

Now I'm going to add my local authoritative BIND server as a stub-zone:

stub-zone:
    name: "forest"
    stub-addr: 192.168.0.220
    stub-first: yes

If you want or need to use your Unbound server as an authoritative server, you can add a set of local-zone entries that look like this:

local-zone: "forest.local." static
local-data: "jupiter.forest IN A 192.168.0.200"
local-data: "callisto.forest IN A 192.168.0.222"

These can be any type of record you need locally, but note again that since these are all in the main configuration file, you might want to configure them as stub zones if you need authoritative records for more than a few hosts (see above).
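If the list of local hosts grows, typing local-data lines by hand gets tedious. A minimal sketch, assuming a hosts-style input of "name address" pairs that you maintain yourself (a hypothetical format) and IPv4 addresses only. It emits the whole resource record inside one pair of quotes, which is the form unbound.conf documents for local-data.

```shell
#!/usr/bin/env bash
# Sketch: turn "name address" lines on stdin into Unbound local-data entries.
# Blank lines and lines starting with '#' are skipped; IPv4 A records only.
to_local_data() {
  local name addr
  while read -r name addr _; do
    [[ -z $name || $name == \#* ]] && continue
    # Normalize to exactly one trailing dot on the owner name.
    printf 'local-data: "%s. IN A %s"\n' "${name%.}" "$addr"
  done
}
```

For example, to_local_data < lab-hosts.txt >> /etc/unbound/unbound.conf would append the generated entries. The input file name here is hypothetical.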

If you were going to use this Unbound server to resolve names itself, starting from the root servers, you would also want to make sure you have a root hints file, which is the zone file for the root DNS servers. Get the file from InterNIC. It is easiest to download it directly where you want it. My preference is usually to put it where the other Unbound-related files are, in /etc/unbound:

wget https://www.internic.net/domain/named.root -O /etc/unbound/root.hints

Then add an entry to your unbound.conf file to let Unbound know where the hints file is:

# file to read root hints from.
root-hints: "/etc/unbound/root.hints"

Finally, we want to add at least one entry that tells Unbound where to forward requests for recursion. Note that we could forward specific domains to specific DNS servers. In this example, I'm just going to forward everything out to a couple of DNS servers on the Internet:

forward-zone:
    name: "."
    forward-addr: 1.1.1.1
    forward-addr: 8.8.8.8

Now, as a sanity check, we want to run the unbound-checkconf command, which checks the syntax of our configuration file. We then resolve any errors we find.

[root@callisto ~]# unbound-checkconf
/etc/unbound/unbound_server.key: No such file or directory
[1584658345] unbound-checkconf[7553:0] fatal error: server-key-file: "/etc/unbound/unbound_server.key" does not exist

This error indicates that a key file which is generated at startup does not exist yet, so let's start Unbound and see what happens:

[root@callisto ~]# systemctl start unbound

With no fatal errors found, we can go ahead and make it start by default at server startup:

[root@callisto ~]# systemctl enable unbound
Created symlink from /etc/systemd/system/multi-user.target.wants/unbound.service to /usr/lib/systemd/system/unbound.service.

And you should be all set. Next, let's apply some of our DNS troubleshooting skills to see if it's working correctly.

First, we need to set our DNS resolver to use the new server:

[root@showme1 ~]# nmcli con mod ext ipv4.dns 192.168.0.222
[root@showme1 ~]# systemctl restart NetworkManager
[root@showme1 ~]# cat /etc/resolv.conf
# Generated by NetworkManager
nameserver 192.168.0.222
[root@showme1 ~]#

Let's run dig and see who we can see:

[root@showme1 ~]# dig

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-9.P2.el7 <<>>
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 36486
;; flags: qr rd ra ad; QUERY: 1, ANSWER: 13, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;. IN NS

;; ANSWER SECTION:
. 508693 IN NS i.root-servers.net.
<snip>

Excellent! We are getting a response from the new server, and it's recursing us to the root domains. We don't see any errors so far. Now to check on a local host:

;; ANSWER SECTION:
jupiter.forest. 5190 IN A 192.168.0.200

Great! We are getting the A record from the authoritative server back, and the IP address is correct. What about external domains?

;; ANSWER SECTION:
redhat.com. 3600 IN A 209.132.183.105

Perfect! If we rerun it, will we get it from the cache?

;; ANSWER SECTION:
redhat.com. 3531 IN A 209.132.183.105

;; Query time: 0 msec
;; SERVER: 192.168.0.222#53(192.168.0.222)

Note the Query time of 0 msec: this indicates that the answer lives on the caching server, so it wasn't necessary to go ask elsewhere. This is the main benefit of a local caching server, as we discussed earlier.

Wrapping up

While we did not discuss some of the more advanced features that are available in Unbound, one thing that deserves mention is DNSSEC. DNSSEC is becoming a standard for DNS servers, as it provides an additional layer of protection for DNS transactions. DNSSEC establishes a trust relationship that helps prevent things like spoofing and injection attacks. It's worth looking into if you are using a DNS server that faces the public, even though it's beyond the scope of this article.


Dec 01, 2019 | www.2daygeek.com

The common syntax for the dig command is as follows:

dig [Options] [TYPE] [Domain_Name.com]

1) How to Lookup a Domain "A" Record (IP Address) on Linux Using the dig Command

Use the dig command followed by the domain name to find the given domain "A" record (IP address).

$ dig 2daygeek.com

; <<>> DiG 9.14.7 <<>> 2daygeek.com
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 7777
;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 512
;; QUESTION SECTION:
;2daygeek.com. IN A

;; ANSWER SECTION:
2daygeek.com. 299 IN A 104.27.157.177
2daygeek.com. 299 IN A 104.27.156.177

;; Query time: 29 msec
;; SERVER: 192.168.1.1#53(192.168.1.1)
;; WHEN: Thu Nov 07 16:10:56 IST 2019
;; MSG SIZE rcvd: 73

It used the local DNS caching server (192.168.1.1) to obtain the domain information via port 53.
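When you script around dig, the footer lines are often what you want: which server answered and how long it took. A near-zero time usually means a cache hit. A minimal sketch that reads full dig output on stdin. It relies on the ";; SERVER:" and ";; Query time:" footer format shown above, which is dig's human-readable output rather than a stable interface. It prints nothing useful if a ~/.digrc with +noall suppresses that footer.

```shell
#!/usr/bin/env bash
# Sketch: print "server query_time_ms" from dig's default output on stdin.
dig_stats() {
  awk '
    /^;; Query time:/ { ms = $4 }                        # ";; Query time: 29 msec"
    /^;; SERVER:/     { split($3, a, "#"); srv = a[1] }  # ";; SERVER: 192.168.1.1#53(...)"
    END               { print srv, ms }
  '
}
```

For example, dig 2daygeek.com | dig_stats would print the server address followed by the query time in milliseconds.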

2) How to Only Lookup a Domain "A" Record (IP Address) on Linux Using the dig Command

Use the dig command followed by the domain name with additional query options to filter only the required values of the domain name.

In this example, we are only going to filter the Domain A record (IP address).

$ dig 2daygeek.com +nocomments +noquestion +noauthority +noadditional +nostats

; <<>> DiG 9.14.7 <<>> 2daygeek.com +nocomments +noquestion +noauthority +noadditional +nostats
;; global options: +cmd
2daygeek.com. 299 IN A 104.27.157.177
2daygeek.com. 299 IN A 104.27.156.177

3) How to Only Lookup a Domain "A" Record (IP Address) on Linux Using the +answer Option

Alternatively, only the "A" record (IP address) can be obtained using the "+answer" option.

$ dig 2daygeek.com +noall +answer
2daygeek.com. 299 IN A 104.27.156.177
2daygeek.com. 299 IN A 104.27.157.177

4) How Can I Only View a Domain "A" Record (IP address) on Linux Using the "+short" Option?

This is similar to the output above, but it only shows the IP address.

$ dig 2daygeek.com +short
104.27.157.177
104.27.156.177

5) How to Lookup a Domain "MX" Record on Linux Using the dig Command

Add the MX query type in the dig command to get the MX record of the domain.

# dig 2daygeek.com MX +noall +answer
or
# dig -t MX 2daygeek.com +noall +answer

2daygeek.com. 299 IN MX 0 dc-7dba4d3ea8cd.2daygeek.com.

According to the above output, it only has one MX record and the priority is 0.
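When a domain has several MX records, senders try the lowest preference value first. A short bash sketch that sorts dig +short MX output (lines such as "10 mx1.example.com.") into that order. mx_in_order is a hypothetical helper, and it ignores the random choice senders make between equal preferences.

```shell
#!/usr/bin/env bash
# Sketch: list a domain's mail exchangers in the order senders try them
# (lowest preference value first). Usage: mx_in_order DOMAIN
mx_in_order() {
  dig +short MX "$1" | sort -n -k1,1 | awk '{ print $2 }'
}
```

For example, mx_in_order 2daygeek.com would list the single exchanger shown above.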

6) How to Lookup a Domain "NS" Record on Linux Using the dig Command

Add the NS query type in the dig command to get the Name Server (NS) record of the domain.

# dig 2daygeek.com NS +noall +answer
or
# dig -t NS 2daygeek.com +noall +answer

2daygeek.com. 21588 IN NS jean.ns.cloudflare.com.
2daygeek.com. 21588 IN NS vin.ns.cloudflare.com.

7) How to Lookup a Domain "TXT (SPF)" Record on Linux Using the dig Command

Add the TXT query type in the dig command to get the TXT (SPF) record of the domain.

# dig 2daygeek.com TXT +noall +answer
or
# dig -t TXT 2daygeek.com +noall +answer

2daygeek.com. 288 IN TXT "ca3-8edd8a413f634266ac71f4ca6ddffcea"

8) How to Lookup a Domain "SOA" Record on Linux Using the dig Command

Add the SOA query type in the dig command to get the SOA record of the domain.

# dig 2daygeek.com SOA +noall +answer
or
# dig -t SOA 2daygeek.com +noall +answer

2daygeek.com. 3599 IN SOA jean.ns.cloudflare.com. dns.cloudflare.com. 2032249144 10000 2400 604800 3600

9) How to Lookup a Domain Reverse DNS "PTR" Record on Linux Using the dig Command

Enter the domain's IP address with the dig -x option to find the domain's reverse DNS (PTR) record.

# dig -x 182.71.233.70 +noall +answer

70.233.71.182.in-addr.arpa. 21599 IN PTR nsg-static-070.233.71.182.airtel.in.

10) How to Find All Possible Records for a Domain on Linux Using the dig Command

Input the domain name followed by the dig command to find all possible records for a domain (A, NS, PTR, MX, SPF, TXT). Note that many servers now answer ANY queries with a deliberately minimal response (RFC 8482), so you may see far fewer records than the older output below.

# dig 2daygeek.com ANY +noall +answer

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.23.rc1.el6_5.1 <<>> 2daygeek.com ANY +noall +answer
;; global options: +cmd
2daygeek.com. 12922 IN TXT "v=spf1 ip4:182.71.233.70 +a +mx +ip4:49.50.66.31 ?all"
2daygeek.com. 12693 IN MX 0 2daygeek.com.
2daygeek.com. 12670 IN A 182.71.233.70
2daygeek.com. 84670 IN NS ns2.2daygeek.in.
2daygeek.com. 84670 IN NS ns1.2daygeek.in.

11) How to Lookup a Particular Name Server for a Domain Name

Also, you can look up a specific name server for a domain name using the dig command.

# dig jean.ns.cloudflare.com 2daygeek.com

; <<>> DiG 9.14.7 <<>> jean.ns.cloudflare.com 2daygeek.com
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 10718
;; flags: qr rd ra ad; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 512
;; QUESTION SECTION:
;jean.ns.cloudflare.com. IN A

;; ANSWER SECTION:
jean.ns.cloudflare.com. 21599 IN A 173.245.58.121

;; Query time: 23 msec
;; SERVER: 192.168.1.1#53(192.168.1.1)
;; WHEN: Tue Nov 12 11:22:50 IST 2019
;; MSG SIZE rcvd: 67

;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 45300
;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 512
;; QUESTION SECTION:
;2daygeek.com. IN A

;; ANSWER SECTION:
2daygeek.com. 299 IN A 104.27.156.177
2daygeek.com. 299 IN A 104.27.157.177

;; Query time: 23 msec
;; SERVER: 192.168.1.1#53(192.168.1.1)
;; WHEN: Tue Nov 12 11:22:50 IST 2019
;; MSG SIZE rcvd: 73

As the output shows, without an @ prefix dig simply looks up both names as A records through the default resolver (192.168.1.1). To send the query to a particular name server, prefix the server with @, for example: dig @jean.ns.cloudflare.com 2daygeek.com

12) How To Query Multiple Domains DNS Information Using the dig Command

You can query DNS information for multiple domains at once using the dig command.

# dig 2daygeek.com NS +noall +answer linuxtechnews.com TXT +noall +answer magesh.co.in SOA +noall +answer

2daygeek.com. 21578 IN NS jean.ns.cloudflare.com.
2daygeek.com. 21578 IN NS vin.ns.cloudflare.com.
linuxtechnews.com. 299 IN TXT "ca3-e9556bfcccf1456aa9008dbad23367e6"
linuxtechnews.com. 299 IN TXT "google-site-verification=a34OylEd_vQ7A_hIYWQ4wJ2jGrMgT0pRdu_CcvgSp4w"
magesh.co.in. 3599 IN SOA jean.ns.cloudflare.com. dns.cloudflare.com. 2032053532 10000 2400 604800 3600

13) How To Query DNS Information for Multiple Domains Using the dig Command from a text File

To do so, first create a file and add to it the list of domains you want to check for DNS records.

In my case, I've created a file named dig-demo.txt and added some domains to it.

# vi dig-demo.txt

2daygeek.com
linuxtechnews.com
magesh.co.in

Once you have done the above operation, run the dig command to view DNS information.

# dig -f /home/daygeek/shell-script/dig-test.txt NS +noall +answer

2daygeek.com. 21599 IN NS jean.ns.cloudflare.com.
2daygeek.com. 21599 IN NS vin.ns.cloudflare.com.
linuxtechnews.com. 21599 IN NS jean.ns.cloudflare.com.
linuxtechnews.com. 21599 IN NS vin.ns.cloudflare.com.
magesh.co.in. 21599 IN NS jean.ns.cloudflare.com.
magesh.co.in. 21599 IN NS vin.ns.cloudflare.com.

14) How to use the .digrc File

You can control the behavior of the dig command by adding the ".digrc" file to the user's home directory.

If you want the dig command to show only the answer section, create the .digrc file in the user's home directory and save the default options +noall and +answer in it.

# vi ~/.digrc

+noall +answer

Once you have done that, simply run the dig command and see the magic.

# dig 2daygeek.com NS

2daygeek.com. 21478 IN NS jean.ns.cloudflare.com.
2daygeek.com. 21478 IN NS vin.ns.cloudflare.com.
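The PTR lookup from section 9 is often combined with a forward lookup to check that an address and its name agree. This is called forward-confirmed reverse DNS, and many mail servers apply it to connecting hosts. A minimal sketch using dig +short. fcrdns is a hypothetical helper, and only IPv4 A records are compared.

```shell
#!/usr/bin/env bash
# Sketch: forward-confirmed reverse DNS for an IPv4 address.
# Succeeds if some PTR name for IP resolves back (A record) to the same IP.
fcrdns() {
  local ip=$1 name
  for name in $(dig +short -x "$ip"); do
    # -x: exact whole-line match, -F: treat the IP as a fixed string
    if dig +short A "$name" | grep -qxF "$ip"; then
      echo "$ip <-> $name"
      return 0
    fi
  done
  echo "$ip: no matching forward record" >&2
  return 1
}
```

For example, fcrdns 182.71.233.70 checks whether the PTR name from section 9 resolves back to that address.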

zwischenzugs

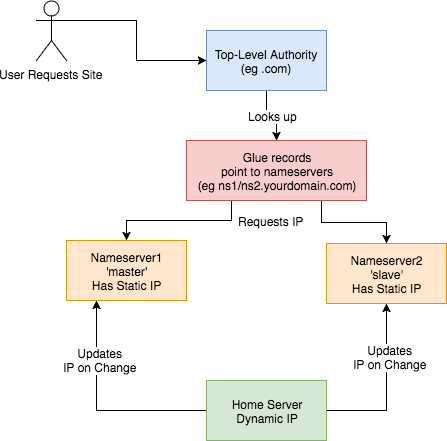

Introduction

Despite my woeful knowledge of networking, I run my own DNS servers on my own websites run from home. I achieved this through trial and error and now it requires almost zero maintenance, even though I don't have a static IP at home.

Here I share how (and why) I persist in this endeavour.

Overview

This is an overview of the setup:

This is how I set up my DNS. I:

- got a domain from an authority (a .tk domain in my case)
- set up glue records to defer DNS queries to my nameservers
- set up nameservers with static IPs
- set up a dynamic DNS updater from home

How?

Walking through step-by-step how I did it:

1) Set up two Virtual Private Servers (VPSes)

You will need two stable machines with static IP addresses. If you're not lucky enough to have these in your possession, then you can set one up on the cloud. I used this site, but there are plenty out there. NB I asked them, and their IPs are static per VPS. I use the cheapest cloud VPS ($1/month) and set up Debian on there.

NOTE: Replace any mention of DNSIP1 and DNSIP2 below with the first and second static IP addresses you are given.

Log on and set up root password

SSH to the servers and set up a strong root password.

2) Set up domains

You will need two domains: one for your DNS servers, and one for the application running on your host. I use dot.tk to get free throwaway domains. In this case, I might set up a myuniquedns.tk DNS domain and a myuniquesite.tk site domain. Whatever you choose, replace your DNS domain when you see YOURDNSDOMAIN below. Similarly, replace your app domain when you see YOURSITEDOMAIN below.

3) Set up a 'glue' record

If you use dot.tk as above, then to allow you to manage the YOURDNSDOMAIN domain you will need to set up a 'glue' record. What this does is tell the current domain authority (dot.tk) to defer to your nameservers (the two servers you've set up) for this specific domain. Otherwise it keeps referring back to the .tk domain for the IP. See here for a fuller explanation. Another good explanation is here. To do this you need to check with the authority responsible how this is done, or become the authority yourself. dot.tk has a web interface for setting up a glue record, so I used that. There, you need to go to 'Manage Domains' => 'Manage Domain' => 'Management Tools' => 'Register Glue Records' and fill out the form. Your two hosts will be called ns1.YOURDNSDOMAIN and ns2.YOURDNSDOMAIN, and the glue records will point to DNSIP1 and DNSIP2 respectively. Note, you may need to wait a few hours (or longer) for this to take effect. If really unsure, give it a day.
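While waiting for the glue records to take effect, a small check can tell you when the delegation is visible. A minimal sketch, assuming the name server names ns1 and ns2 under YOURDNSDOMAIN as above. delegation_ok is a hypothetical helper. It asks the resolver configured in /etc/resolv.conf, so cached answers may lag behind the registry.

```shell
#!/usr/bin/env bash
# Sketch: succeed once the NS records for DOMAIN are exactly the expected set.
# Usage: delegation_ok DOMAIN NS...   (host names with trailing dots)
delegation_ok() {
  local domain=$1 have want
  shift
  have=$(dig +short NS "$domain" | sort)
  want=$(printf '%s\n' "$@" | sort)
  [[ -n $have && $have == "$want" ]]
}
```

You could poll with, for example, until delegation_ok YOURDNSDOMAIN ns1.YOURDNSDOMAIN. ns2.YOURDNSDOMAIN.; do sleep 600; done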

If you like this post, you might be interested in my book Learn Bash the Hard Way, available here for just $5.

4) Install bind on the DNS Servers

On a Debian machine (for example), and as root, type:

apt install bind9

bind is the domain name server software you will be running.

5) Configure bind on the DNS Servers

Now, this is the hairy bit. There are two parts to this, with two files involved: named.conf.local, and the db.YOURDNSDOMAIN file. They are both in the /etc/bind folder. Navigate there and edit these files.

Part 1 – named.conf.local

This file lists the 'zone's (domains) served by your DNS servers. It also defines whether this bind instance is the 'master' or the 'slave'. I'll assume ns1.YOURDNSDOMAIN is the 'master' and ns2.YOURDNSDOMAIN is the 'slave'.

Part 1a – the master

On the master/ns1.YOURDNSDOMAIN, the named.conf.local should be changed to look like this:

zone "YOURDNSDOMAIN" {
    type master;
    file "/etc/bind/db.YOURDNSDOMAIN";
    allow-transfer { DNSIP2; };
};
zone "YOURSITEDOMAIN" {
    type master;
    file "/etc/bind/YOURDNSDOMAIN";
    allow-transfer { DNSIP2; };
};
zone "14.127.75.in-addr.arpa" {
    type master;
    notify no;
    file "/etc/bind/db.75";
    allow-transfer { DNSIP2; };
};
logging {
    channel query.log {
        file "/var/log/query.log";
        // Set the severity to dynamic to see all the debug messages.
        severity debug 3;
    };
    category queries { query.log; };
};

The logging at the bottom is optional (I think). I added it a while ago, and I leave it in here for interest. I don't know what the 14.127 zone stanza is about.

Part 1b – the slave

Jan 26, 2019 | zwischenzugs.com

On the slave/ns2.YOURDNSDOMAIN, the named.conf.local should be changed to look like this:

zone "YOURDNSDOMAIN" {
    type slave;
    file "/var/cache/bind/db.YOURDNSDOMAIN";
    masters { DNSIP1; };
};
zone "YOURSITEDOMAIN" {
    type slave;
    file "/var/cache/bind/db.YOURSITEDOMAIN";
    masters { DNSIP1; };
};
zone "14.127.75.in-addr.arpa" {
    type slave;
    file "/var/cache/bind/db.75";
    masters { DNSIP1; };
};

Part 2 – db.YOURDNSDOMAIN

Now we get to the meat – your DNS database is stored in this file.

On the master/ns1.YOURDNSDOMAIN the db.YOURDNSDOMAIN file looks like this:

$TTL 4800
@ IN SOA ns1.YOURDNSDOMAIN. YOUREMAIL.YOUREMAILDOMAIN. (
    2018011615 ; Serial
    604800 ; Refresh
    86400 ; Retry
    2419200 ; Expire
    604800 ) ; Negative Cache TTL
;
@ IN NS ns1.YOURDNSDOMAIN.
@ IN NS ns2.YOURDNSDOMAIN.
ns1 IN A DNSIP1
ns2 IN A DNSIP2
YOURSITEDOMAIN. IN A YOURDYNAMICIP

On the slave/ns2.YOURDNSDOMAIN it's very similar, but has ns1 in the SOA line, and the IN NS lines reversed. I can't remember if this reversal is needed or not:

$TTL 4800
@ IN SOA ns1.YOURDNSDOMAIN. YOUREMAIL.YOUREMAILDOMAIN. (
    2018011615 ; Serial
    604800 ; Refresh
    86400 ; Retry
    2419200 ; Expire
    604800 ) ; Negative Cache TTL
;
@ IN NS ns1.YOURDNSDOMAIN.
@ IN NS ns2.YOURDNSDOMAIN.
ns1 IN A DNSIP1
ns2 IN A DNSIP2
YOURSITEDOMAIN. IN A YOURDYNAMICIP

A few notes on the above:

- The dots at the end of lines are not typos – this is how domains are written in bind files. So google.com is written google.com.
- The YOUREMAIL.YOUREMAILDOMAIN. part must be replaced by your own email. For example, my email address, ian.miell@gmail.com, becomes ianmiell.gmail.com. (with the trailing dot). Note also that the dot between first and last name is dropped: an unescaped dot there would be read as a domain separator, and Gmail ignores dots in addresses anyway!
- YOURDYNAMICIP is the IP address your domain should be pointed to (i.e. the IP address returned by the DNS server). It doesn't matter what it is at this point, because the next step is to dynamically update the DNS server with your dynamic IP address whenever it changes.
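Because the slave only transfers the zone when the serial increases, you can confirm that both servers are in step by asking each of them directly for the SOA and comparing serials. A minimal sketch. serials_match is a hypothetical helper, and it relies on the serial being the third field of dig +short SOA output.

```shell
#!/usr/bin/env bash
# Sketch: print each server's SOA serial for ZONE; fail on the first mismatch
# or missing answer. Usage: serials_match ZONE SERVER...
serials_match() {
  local zone=$1 ns serial first=""
  shift
  for ns in "$@"; do
    # +short SOA prints: mname rname serial refresh retry expire minimum
    serial=$(dig +short SOA "$zone" "@$ns" | awk '{ print $3 }')
    echo "$ns $serial"
    [[ -z $first ]] && first=$serial
    [[ -n $serial && $serial == "$first" ]] || return 1
  done
}
```

For example, serials_match YOURDNSDOMAIN DNSIP1 DNSIP2 prints both serials and returns non-zero if they differ.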

6) Copy ssh keys

Before setting up your dynamic DNS you need to set up your ssh keys so that your home server can access the DNS servers.

NOTE: This is not security advice. Use at your own risk.

First, check whether you already have an ssh key generated:

ls ~/.ssh/id_rsa

If that returns a file, you're all set up. Otherwise, type:

ssh-keygen

and accept the defaults.

Then, once you have a key set up, copy your ssh ID to the nameservers:

ssh-copy-id root@DNSIP1
ssh-copy-id root@DNSIP2

Input your root password when each command prompts for it.

7) Create an IP updater script

Now ssh to both servers and place this script in /root/update_ip.sh:

#!/bin/bash
set -o nounset
sed -i "s/^\(YOURSITEDOMAIN\.\) IN A .*$/\1 IN A $1/" /etc/bind/db.YOURDNSDOMAIN
sed -i "s/.*Serial$/ $(date +%Y%m%d%H) ; Serial/" /etc/bind/db.YOURDNSDOMAIN
/etc/init.d/bind9 restart

Make it executable by running:

chmod +x /root/update_ip.sh

Going through it line by line:

set -o nounset

This line throws an error if the IP is not passed in as the argument to the script.

sed -i "s/^\(YOURSITEDOMAIN\.\) IN A .*$/\1 IN A $1/" /etc/bind/db.YOURDNSDOMAIN

Replaces the IP address on the YOURSITEDOMAIN. A record with the contents of the first argument to the script. Matching on the site name matters: a pattern that matches every "IN A" line would also overwrite the ns1 and ns2 addresses.

sed -i "s/.*Serial$/ $(date +%Y%m%d%H) ; Serial/" /etc/bind/db.YOURDNSDOMAIN

Ups the 'serial number'.

/etc/init.d/bind9 restart

Restart the bind service on the host.
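The serial here is built from the date and hour. As a result, two IP changes within the same hour produce the same serial, and the slave will not transfer the second update. Below is a sketch of a variant that always increments the serial and rewrites only the line for the site name, leaving the ns1 and ns2 A records alone. next_serial and update_zone are hypothetical helpers. They assume the zone file layout shown in Part 2 (one line ending in "; Serial" and the site record written as "NAME. IN A ADDRESS"). You would still restart bind, or run rndc reload, afterwards.

```shell
#!/usr/bin/env bash
# Sketch: update the site's A record and bump the SOA serial so that it
# always increases, even for several changes within one hour.

# next_serial OLD -> prints a YYYYMMDDnn serial strictly greater than OLD
next_serial() {
  local old=${1:-0} today
  today="$(date +%Y%m%d)00"
  if (( old >= today )); then
    echo $(( old + 1 ))
  else
    echo "$today"
  fi
}

# update_zone ZONEFILE SITE_NAME NEW_IP
update_zone() {
  local zonefile=$1 site=$2 ip=$3 old new tmp
  old=$(awk '/; Serial$/ { print $1; exit }' "$zonefile")
  new=$(next_serial "$old")
  tmp=$(mktemp)
  awk -v name="${site}." -v ip="$ip" -v serial="$new" '
    /; Serial$/ { sub(/[0-9]+/, serial) }                           # the serial number
    $1 == name && $2 == "IN" && $3 == "A" { $0 = name " IN A " ip }  # only the site record
    { print }
  ' "$zonefile" > "$tmp"
  cat "$tmp" > "$zonefile"    # rewrite in place, keeping owner and mode
  rm -f "$tmp"
}
```

For example, update_zone /etc/bind/db.YOURDNSDOMAIN YOURSITEDOMAIN "$1" could replace the two sed lines of update_ip.sh.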

8) Cron Your Dynamic DNS

At this point you've got access to update the IP when your dynamic IP changes, and the script to do the update.

Here's the raw cron entry:

* * * * * curl ifconfig.co 2>/dev/null > /tmp/ip.tmp && (diff /tmp/ip.tmp /tmp/ip || (mv /tmp/ip.tmp /tmp/ip && ssh root@DNSIP1 "/root/update_ip.sh $(cat /tmp/ip)")); curl ifconfig.co 2>/dev/null > /tmp/ip.tmp2 && (diff /tmp/ip.tmp2 /tmp/ip2 || (mv /tmp/ip.tmp2 /tmp/ip2 && ssh root@DNSIP2 "/root/update_ip.sh $(cat /tmp/ip2)"))

Breaking this command down step by step:

curl ifconfig.co 2>/dev/null > /tmp/ip.tmp

This curls a 'what is my IP address' site, and deposits the output to /tmp/ip.tmp.

diff /tmp/ip.tmp /tmp/ip || (mv /tmp/ip.tmp /tmp/ip && ssh root@DNSIP1 "/root/update_ip.sh $(cat /tmp/ip)")

This diffs the contents of /tmp/ip.tmp with /tmp/ip (which is yet to be created, and holds the last-updated IP address). If there is an error (i.e. there is a new IP address to update on the DNS server), then the subshell is run. This overwrites the IP address, and then ssh'es onto the DNSIP1 server to run /root/update_ip.sh with the new address.

The same process is then repeated for DNSIP2 using separate files (/tmp/ip.tmp2 and /tmp/ip2).

Why!?

You may be wondering why I do this in the age of cloud services and outsourcing. There are a few reasons.

It's Cheap

The cost of running this stays at the cost of the two nameservers ($24/year) no matter how many domains I manage and whatever I want to do with them.

Learning

I've learned a lot by doing this, probably far more than any course would have taught me.

More Control

I can do what I like with these domains: set up any number of subdomains, try my hand at secure mail techniques, experiment with obscure DNS records and so on.

I could extend this into a service. If you're interested, my rates are very low :)


Jan 08, 2019 | www.reddit.com


Bind 9 on my CentOS 7.6 machine threw this error:cryan7755 1 point 2 points 3 points 11 days ago (1 child)error (network unreachable) resolving './DNSKEY/IN': 2001:7fe::53#53 error (network unreachable) resolving './NS/IN': 2001:7fe::53#53 error (network unreachable) resolving './DNSKEY/IN': 2001:500:a8::e#53 error (network unreachable) resolving './NS/IN': 2001:500:a8::e#53 error (FORMERR) resolving './NS/IN': 198.97.190.53#53 error (network unreachable) resolving './DNSKEY/IN': 2001:dc3::35#53 error (network unreachable) resolving './NS/IN': 2001:dc3::35#53 error (network unreachable) resolving './DNSKEY/IN': 2001:500:2d::d#53 error (network unreachable) resolving './NS/IN': 2001:500:2d::d#53 managed-keys-zone: Unable to fetch DNSKEY set '.': failure

What does it mean? Can it be fixed?

And is it at all related to DNSSEC? I cannot seem to get that working whatsoever.

Looks like a failure to reach IPv6-addressed NS servers. If you don't use IPv6 on your network, then this is to be expected.

Can be dealt with by adding:

# /etc/sysconfig/named
OPTIONS="-4"
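As far as I know, the packaged named systemd unit on CentOS 7 reads $OPTIONS from /etc/sysconfig/named and passes it to named. The fix above therefore amounts to one line in that file. Below is a sketch of making that change idempotently, assuming that layout. The function name is hypothetical. The function deliberately refuses to touch an existing OPTIONS line rather than guess how to merge flags.

```shell
#!/usr/bin/env bash
# Sketch: add OPTIONS="-4" (named: use IPv4 only) to the sysconfig file,
# assuming the CentOS 7 layout where named.service reads $OPTIONS from it.
# ensure_named_ipv4_only is a hypothetical helper name.

# ensure_named_ipv4_only [FILE]   (FILE defaults to /etc/sysconfig/named)
ensure_named_ipv4_only() {
    local conf=${1:-/etc/sysconfig/named}

    if grep -q '^OPTIONS=' "$conf" 2>/dev/null; then
        # Already IPv4-only: -4 appears as a separate flag in OPTIONS.
        if grep -Eq '^OPTIONS=(.*["[:space:]])?-4(["[:space:]]|$)' "$conf"; then
            return 0
        fi
        # OPTIONS exists with other flags; leave the merge to a human.
        echo "OPTIONS already set in $conf; add -4 by hand" >&2
        return 1
    fi

    echo 'OPTIONS="-4"' >> "$conf"
}
```

Once the function succeeds, systemctl restart named makes the new option take effect. Trying it first on a copy of the file, for example ensure_named_ipv4_only /tmp/named.copy, shows what would change.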

Jake Montgomery discovered that Bind incorrectly handled certain specific combinations of RDATA. A remote attacker could use this flaw to cause Bind to crash, resulting in a denial of service.

Posted by kdawson on Tuesday July 15, @08:07AM

from the be-afraid-be-very-afraid-then-get-patching dept.

syncro writes "The recent massive, multi-vendor DNS patch advisory related to DNS cache poisoning vulnerability, discovered by Dan Kaminsky, has made headline news. However, the secretive preparation prior to the July 8th announcement and hype around a promised full disclosure of the flaw by Dan on August 7 at the Black Hat conference has generated a fair amount of backlash and skepticism among hackers and the security research community. In a post on CircleID, Paul Vixie offers his usual straightforward response to these allegations. The conclusion: 'Please do the following. First, take the advisory seriously - we're not just a bunch of n00b alarmists, if we tell you your DNS house is on fire, and we hand you a fire hose, take it. Second, take Secure DNS seriously, even though there are intractable problems in its business and governance model - deploy it locally and push on your vendors for the tools and services you need. Third, stop complaining, we've all got a lot of work to do by August 7 and it's a little silly to spend any time arguing when we need to be patching.'"

A server problem at the U.S. National Security Agency has knocked the secretive intelligence agency off the Internet. The nsa.gov Web site was unresponsive at 7 a.m. Pacific time Thursday and continued to be unavailable throughout the morning for Internet users.

The Web site was unreachable because of a problem with the NSA's DNS (Domain Name System) servers, said Danny McPherson, chief research officer with Arbor Networks. DNS servers translate things like the Web addresses that users type into their browsers into the machine-readable Internet Protocol addresses that computers use to find each other on the Internet.

The agency's two authoritative DNS servers were unreachable Thursday morning, McPherson said.

Because this DNS information is sometimes cached by Internet service providers, the NSA would still be temporarily reachable by some users, but unless the problem is fixed, NSA servers will be knocked completely off-line. That means that e-mail sent to the agency will not be delivered, and in some cases, e-mail being sent by the NSA would not get through.

"We are aware of the situation and our techs are working on it," an NSA spokeswoman said at 9:45 a.m. PT. She declined to identify herself.

A similar DNS problem knocked Youtube.com off-line in early May.

There are three possible reasons the DNS server was knocked off-line, McPherson said. "It's either an internal routing problem of some sort on their side or they've messed up some firewall or ACL [access control list] policy," he said. "Or they've taken their servers off-line because something happened."

That "something else" could be a technical glitch or a hacking incident, McPherson said.

In fact, the NSA has made some basic security mistakes with its DNS servers, according to McPherson.

- The NSA should have hosted its two authoritative DNS servers on different machines, so that if a technical glitch knocked one of the servers off-line, the other would still be reachable.

- Compounding problems is the fact that the DNS servers are hosted on a machine that is also being used as a Web server for the NSA's National Computer Security Center.
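One rough way to check the first point from outside is to resolve every name server listed for a zone and count the distinct addresses. The sketch below assumes dig is installed and looks at IPv4 (A) records only. The function names are hypothetical. Distinct addresses are only a proxy, because one machine can answer on several addresses. A pass here therefore does not prove that the servers really are on separate hosts.

```shell
#!/usr/bin/env bash
# Rough outside-in check that a zone's name servers do not all share one
# address. Hypothetical helper names; ns_addresses needs dig.

# ns_addresses ZONE: print the IPv4 address(es) of every NS host for ZONE.
ns_addresses() {
    local ns
    for ns in $(dig +short NS "$1"); do
        dig +short A "$ns"
    done
}

# ns_hosts_distinct: read one address per line on stdin (blank lines are
# ignored) and succeed only if at least two distinct addresses were seen.
ns_hosts_distinct() {
    local n
    n=$(grep -v '^[[:space:]]*$' | sort -u | wc -l)
    (( n >= 2 ))
}
```

Usage: ns_addresses example.com | ns_hosts_distinct && echo "NS records point to at least two addresses".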

"Say there was some Apache or Windows vulnerability and hackers controlled that server, they would now own the DNS server for nsa.gov," he said. "That really surprised me. I wouldn't think that these guys would do something like that."

The NSA is responsible for analysis of foreign communications, but it is also charged with helping protect the U.S. government against cyber attacks, so the outage is an embarrassment for the agency.

"I am certain that someone's going to send an e-mail at some point that's not going to get through," McPherson said. "If it's related to national security and it's not getting through, then as a U.S. citizen, that concerns me."

(Anders Lotsson with Computer Sweden contributed to this report.)

Hours after anti-spam company Blue Security pulled the plug on its spam-fighting Blue Frog software and service, the spammers whose attack caused the company to wave the white flag have escalated their assault, knocking Blue Security's farewell message and thousands more Web sites offline.

Just before midnight ET, Blue Security posted a notice on its home page that it was bowing out of the anti-spam business due to concerted attacks against its Web site that took millions of other sites and blogs with it. Within minutes of that online posting, bluesecurity.com went down and remains inaccessible at the time of this writing.

According to information obtained by Security Fix, the reason is that the attackers were hellbent on taking down Blue Security's site again, but had trouble because the company had signed up with Prolexic, which specializes in protecting Web sites from "distributed denial-of-service" (DDoS) attacks.

These massive assaults harness the power of thousands of hacked PCs to swamp sites with so much bogus traffic that they can no longer accommodate legitimate visitors. Prolexic built its business catering to the sites most frequently targeted by DDoS extortion attacks -- chiefly, online gambling and betting houses. But the company also serves thousands of other businesses, including banks, insurance companies and online payment processors.

For the past nine hours, however, most of Prolexic's customers have been knocked offline by an attack that flanked its defenses. Turns out the attackers decided not to attack Prolexic, but rather UltraDNS, its main provider of domain name system (DNS) services. (DNS is what helps direct Internet traffic to its destination by translating human-readable domain names like "www.example.com" into numeric Internet addresses that are easier for computers to understand.)

UltraDNS is the authoritative DNS provider for all Web sites ending in ".org" and ".uk," and also markets its "DNS Shield" service designed to help sites defend against another, increasingly common type of DDoS -- one that targets weaknesses inherent in the DNS system. (Incidentally, UltraDNS was recently acquired by Neustar, which in turn is responsible for handling all ".biz" domain registrations, and for overseeing the nation's authoritative directory of telephone numbers.)

In this case, at least, it does not appear that the DNS Shield service worked as advertised. Earlier today, I spoke with Prolexic founder Barrett G. Lyon, who told me the attack on UltraDNS had knocked about 80 percent of his company's clients offline, or roughly 2,000 or so Web businesses. Most of those businesses also remain offline as of this writing.

According to Lyon, the unknown attackers hit a key portion of UltraDNS's network with a flood of spoofed DNS requests at a rate of around 4 to 5 gigabits per second, which is enough traffic to make just about any Web site on the Internet fall over (many Internet routers can handle only a few hundred megabits of traffic before they start to fail). But this was no normal DDoS attack-- it was a kind of DDoS on the DNS system that security experts say has become alarmingly more common over the past six to eight months.

Known as DNS amplification attacks or "reflected DNS attacks," these kinds of DDoS assaults increase the traffic hurled at a victim by orders of magnitude. In a nutshell, the attackers find a whole bunch of poorly configured DNS servers and use them to create and send spoofed DNS requests from systems they control to the DNS servers they want to cripple. Because the DNS requests appear to be coming from other trusted DNS servers, the target servers have trouble distinguishing regular, legitimate DNS lookups from ones sent by the attackers. Sustained for long enough, the attack eventually overloads the victim's DNS servers with queries and knocks them out of commission.
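The "orders of magnitude" come from the size difference between a small query and a large response. The arithmetic is sketched below with hypothetical sizes: a 60-byte query drawing a 3,000-byte response. Neither figure comes from the article. At that factor of 50, the 4 to 5 gigabit-per-second flood described above would take only about 80 to 100 Mbit/s of spoofed queries from the attackers' machines.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope DNS amplification arithmetic (bash integer math).
# The byte sizes used with these functions are illustrative assumptions.

# amplification_factor QUERY_BYTES RESPONSE_BYTES
# Bytes that reach the victim for every byte the attacker sends.
amplification_factor() {
    echo $(( $2 / $1 ))
}

# attacker_mbps TARGET_MBPS QUERY_BYTES RESPONSE_BYTES
# Each spoofed query of QUERY_BYTES triggers one RESPONSE_BYTES answer, so
# attacker rate = target rate * QUERY_BYTES / RESPONSE_BYTES (rounded up).
attacker_mbps() {
    local target=$1 q=$2 r=$3
    echo $(( (target * q + r - 1) / r ))
}
```

For example, amplification_factor 60 3000 prints 50, and attacker_mbps 5000 60 3000 prints 100.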

To put the raw power of DNS amplification into perspective, consider the attack that knocked Akamai offline in the summer of 2004. For anyone unfamiliar with this company, Akamai sells a rather pricey service that lets deep-pocketed companies like FedEx, Microsoft and Xerox mirror their Web site content at thousands of different online servers, making DDoS attacks against their sites extremely difficult.

Akamai was for a long time considered the gold standard until one day in June 2004, when a DDoS attack knocked the company's services offline for about an hour. Akamai never talked publicly about the specifics of the attack, but several sources close to the investigation told me later that the outage was the result of a carefully coordinated DNS amplification attack -- one that was stopped when the attackers decided they had made their point (which was no doubt to demonstrate to would-be buyers of their DDoS services that they could knock just about anyone off the face of the Web.)

So where am I going with all of this? Well, UltraDNS marketed its DNS Shield as a protection against exactly these same types of amplification attacks. Only in this case it doesn't appear to have worked -- though, to be fair I haven't heard UltraDNS's side of the story since they have yet to return my calls. No doubt they are busy putting out fires. At any rate, score another one for the spammers, I suppose.

Update, 7:46 p.m. ET: I heard back from Neustar. Their spokesperson, Elizabeth Penniman, declined to discuss anything about today's attacks, saying only that "we have a handle on the situation and continue to work with service providers to ensure the best possible level of service to our customers."

April 08, 2005 (IDG News Service) Problems with the Domain Name System (DNS) servers at Internet service provider Comcast Corp. prevented customers around the U.S. from surfing the Web yesterday, but the company said the interruptions weren't linked in any way to a spate of recent DNS attacks known as "pharming" scams.

Comcast technicians noticed problems with the Philadelphia-based company's DNS servers around 6:30 p.m. EDT. The problems interrupted DNS service to Comcast high-speed Internet customers across the U.S. just hours after the SANS Institute's Internet Storm Center advised Internet service providers to make sure their DNS servers weren't vulnerable to new attacks.

However, the outage wasn't caused by those attacks or by maintenance related to the attacks, according to company spokeswoman Jeanne Russo.

During the outage, Comcast customers who attempted to connect to Web sites such as Google.com received frequent "Page not found" errors on their Web browsers. However, entering the numeric Internet Protocol address of the Web site in question would connect the user to the page.

Comcast technicians began working on the DNS problem immediately after identifying it yesterday evening and restored service to the company's customers by 12:00 a.m. EDT today, Russo said.

The DNS is a global network of computers that translates requests for reader-friendly Web domains, such as www.computerworld.com, into the numeric IP addresses that machines on the Internet use to communicate.

The recent attacks on DNS servers use a strategy called "DNS cache poisoning," in which malicious hackers use a DNS server they control to feed erroneous information to other DNS servers. The attacks take advantage of a vulnerable feature of DNS that allows any DNS server that receives a request about the IP address of a Web domain to return information about the address of other Web domains.

Online criminal groups and malicious hackers have used DNS cache poisoning recently in pharming scams, which are similar to phishing identity theft attacks but don't require a lure, such as a Web link that victims must click on to be taken to the attack Web site. Instead, corrupted DNS servers forward Internet users who are looking for legitimate Web pages, such as Google.com, to Web pages controlled by the attackers, which harvest personal information such as user names and passwords from the victims or install Trojan horse programs or other malicious code, according to the Anti-Phishing Working Group.

The attacks have been increasing in recent months, as Internet users become more savvy about traditional phishing scams and online criminal groups look for new ways to collect sensitive information or financial data from victims, the Anti-Phishing Working Group said.

In March, a rogue DNS server posed as the authoritative DNS server for the entire .com Web domain. Other DNS servers that were poisoned with this false information redirected all .com requests to the rogue server, which responded to all .com requests with one of two IP addresses controlled by the attackers.

An earlier attack in March targeted vulnerable products from Symantec Corp. and other companies to redirect requests from more than 900 unique Internet addresses and more than 75,000 e-mail messages, according to log data obtained from compromised Web servers that were used in the attacks, the Internet Storm Center said.

In recent days, a spate of such attacks prompted the Internet Storm Center to issue a "code yellow" alert, signifying increasing threats on the Internet, and prompted Microsoft Corp. to issue revised instructions for configuring Windows machines used as DNS servers to prevent cache poisoning.

Online scam artists are manipulating the Internet's directory service and taking advantage of a hole in some Symantec Corp. products to trick Internet users into installing adware and other annoying programs on their computers, according to an Internet security monitoring organization.Customers who use older versions of Symantec's Gateway Security Appliance and Enterprise Firewall are being hit by Domain Name System (DNS) "poisoning attacks." Such attacks cause Web browsers pointed at popular Web sites such as Google.com, eBay.com and Weather.com to go to malicious Web pages that install unwanted programs, according to Johannes Ullrich, chief technology officer at the SANS Institute's Internet Storm Center (ISC).

The attacks, which began on Thursday or Friday, may be one of the largest to use DNS poisoning, Ullrich said.

Symantec issued an emergency patch for the DNS poisoning hole on Friday. The company didn't immediately respond to requests for comment today.


In DNS poisoning attacks, malicious hackers take advantage of a feature that allows any DNS server that receives a request about the IP address of a Web domain to return information about the address of other Web domains.

For example, a DNS server could respond to a request for the address of www.yahoo.com with information on the address of www.google.com or www.amazon.com, even if information on those domains wasn't requested. The updated addresses are stored by the requesting DNS server in a temporary listing, or cache, of Internet domains and used to respond to future requests.
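Resolvers commonly defend against this by discarding extra records that fall outside the zone they actually asked about, a rule often called a bailiwick check. The sketch below shows only the name comparison at the heart of that rule. It makes simplifying assumptions: plain ASCII names and no CNAME or delegation handling. Real resolvers such as BIND apply more detailed logic. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Simplified bailiwick test: is NAME the ZONE itself or a name below it?
# Comparison ignores case and a trailing dot, as DNS names do.
# in_bailiwick and filter_additional are hypothetical helper names.

in_bailiwick() {
    local name zone
    name=$(printf '%s' "${1%.}" | tr '[:upper:]' '[:lower:]')
    zone=$(printf '%s' "${2%.}" | tr '[:upper:]' '[:lower:]')
    [ -z "$zone" ] && return 0            # the root zone covers every name
    [ "$name" = "$zone" ] || [[ $name == *."$zone" ]]
}

# filter_additional ZONE: read record owner names on stdin, one per line,
# and print only those inside ZONE; everything else would be discarded.
filter_additional() {
    local zone=$1 owner
    while IFS= read -r owner; do
        if in_bailiwick "$owner" "$zone"; then
            printf '%s\n' "$owner"
        fi
    done
}
```

Take the example above, with the question asked of the yahoo.com zone. A reply that also carries an address for www.google.com would have that record dropped, because www.google.com is not under yahoo.com.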

In poisoning attacks, malicious hackers use a DNS server they control to send out erroneous addresses to other DNS servers. Internet users who rely on a poisoned DNS server to manage their Web surfing requests might find that entering the URL of a well-known Web site directs them to an unexpected or malicious Web page, Ullrich said.

Some Symantec products, such as the Enterprise Security Gateway, include a proxy that can be used as a DNS server for users on the network that the product protects. That DNS proxy is vulnerable to the DNS poisoning attack, Symantec said on its Web site. Symantec's Enterprise Firewall Versions 7.04 and 8.0 for Microsoft Corp.'s Windows and Sun Microsystems Inc.'s Solaris have the DNS poisoning flaw, as do Versions 1.0 and 2.0 of the company's Gateway Security Appliance.

Internet users on some networks protected by the vulnerable Symantec products had requests for Web sites directed to attack Web pages that attempted to install the ABX tool bar, a search tool bar and spyware program that displays pop-up ads, Ullrich said.

The DNS poisoning attacks were easy to detect because Web sites involved in the attack don't mimic the sites that users were trying to reach, Ullrich said. However, DNS poisoning could be a potent tool for online identity thieves who could set up phishing Web sites that are identical to sites like Google.com or eBay.com but secretly capture user information, he said.

Some of those customers told ISC that they installed a patch that the company issued in June to fix a DNS cache-poisoning problem in many of the same products, but they were still susceptible to the latest DNS cache-poisoning attacks, according to information on the ISC Web site.

Ullrich said he doesn't believe that Symantec's customers are being targeted, just that they are susceptible to attacks that are being launched at a broad swath of DNS servers.

The ISC is collecting the Internet addresses of Web sites and DNS servers used in the attack and trying to have them shut down or blacklisted, ISC said.

Symantec customers using one of the affected products are advised to install the most recent hotfixes from the company, Ullrich said.


[Feb 11, 2005] How To Create Custom Roles Using Role-Based Access Control (RBAC)

#41168 How to set up RBAC to allow non-root user to manage in.named on DNS server

The following are assumed:

- The role is: dnsrole

- The user is: dnsadmin

- The profile is: DNS Admin (case sensitive)

- Home directory for dnsrole is: /export/home/dnsrole

- Home directory for dnsadmin is: /export/home/dnsadmin

Configuration Steps

1. Create the role and assign it a password:

# roleadd -u 1001 -g 10 -d /export/home/dnsrole -m dnsrole
# passwd dnsrole

NOTE: Check in /etc/passwd that the shell is /bin/pfsh. This ensures that nobody can log in as the role.

Example line in /etc/passwd:

dnsrole:x:1001:10::/export/home/dnsrole:/bin/pfsh

2. Create the profile called "DNS Admin":

Edit /etc/security/prof_attr and insert the following line:

DNS Admin:::BIND Domain Name System administrator:

3. Add the profile to the role using rolemod(1M) or by editing /etc/user_attr:

# rolemod -P "DNS Admin" dnsrole

Verify that the changes have been made in /etc/user_attr with profiles(1) or grep(1):

# profiles dnsrole
DNS Admin
Basic Solaris User
All
# grep dnsrole /etc/user_attr
dnsrole::::type=role;profiles=DNS Admin

4. Assign the role 'dnsrole' to the user 'dnsadmin':

- If 'dnsadmin' already exists then use usermod(1M) to add the role (user must not be logged in):

# usermod -R dnsrole dnsadmin

- Otherwise, create a new user using useradd(1M) and passwd(1):

# useradd -u 1002 -g 10 -d /export/home/dnsadmin -m \
  -s /bin/ksh -R dnsrole dnsadmin
# passwd dnsadmin

- Confirm that the user has been added to the role with roles(1) or grep(1):

# roles dnsadmin
dnsrole
# grep ^dnsadmin: /etc/user_attr
dnsadmin::::type=normal;roles=dnsrole

5. Assign commands to the profile 'DNS Admin':

Add the following entries to /etc/security/exec_attr:

DNS Admin:suser:cmd:BIND 8:BIND 8 DNS:/usr/sbin/in.named:uid=0
DNS Admin:suser:cmd:ndc:BIND 8 control program:/usr/sbin/ndc:uid=0

If using Solaris 10, you may need to add a rule for BIND 9:

DNS Admin:suser:cmd:BIND 9:BIND 9 DNS:/usr/sfw/sbin/named:uid=0

BIND 9 does not use ndc; it uses rndc(1M) instead, which does not require RBAC.

6. Create or modify the named configuration files.

To further limit the use of root in configuring and maintaining BIND, make dnsadmin the owner of /etc/named.conf and of the directory it specifies.

# chown dnsadmin /etc/named.conf
# grep -i directory /etc/named.conf
directory "/var/named/";
# chown dnsadmin /var/named

7. Test the configuration:

Log in as the user "dnsadmin" and start named:

$ su dnsrole -c '/usr/sbin/in.named -u dnsadmin'

To stop named, use ndc (for BIND 9, use rndc):

$ su dnsrole -c '/usr/sbin/ndc stop'

Summary:

In this example the user 'dnsadmin' has been set up to manage the DNS configuration files and assume the role 'dnsrole' to start the named process.

The role 'dnsrole' is only used to start named and to control it with ndc (for BIND 8).

With this RBAC configuration, the DNS process, when started by the role 'dnsrole', acquires root privileges and thus has access to its configuration files.

The named option '-u dnsadmin' may be used to specify the user that the server should run as after it initializes. 'dnsadmin' is then also permitted to send signals to named, as described in the named manual page.
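The entries created in steps 2 to 5 can be spot-checked with a small script. It is a sketch only. The function name check_rbac_setup is hypothetical. It looks for lines shaped like the examples above and takes an optional root directory, so that it can also run against a copy of the files. On a live system, profiles dnsrole and roles dnsadmin remain the authoritative check.

```shell
#!/usr/bin/env bash
# Spot-check the RBAC entries for the DNS Admin setup described above.
# check_rbac_setup [ROOT]   ROOT defaults to "" so the real /etc is read.
# Prints one line per missing entry; returns non-zero if anything is missing.
check_rbac_setup() {
    local root=${1:-}
    local missing=0

    grep -q '^DNS Admin:' "$root/etc/security/prof_attr" 2>/dev/null \
        || { echo "no DNS Admin profile in prof_attr"; missing=1; }

    grep -q '^dnsrole:.*profiles=.*DNS Admin' "$root/etc/user_attr" 2>/dev/null \
        || { echo "dnsrole does not carry the DNS Admin profile"; missing=1; }

    grep -q '^dnsadmin:.*roles=.*dnsrole' "$root/etc/user_attr" 2>/dev/null \
        || { echo "dnsadmin is not assigned dnsrole"; missing=1; }

    grep -q '^DNS Admin:suser:cmd:.*:uid=0$' "$root/etc/security/exec_attr" 2>/dev/null \
        || { echo "no uid=0 command entries for DNS Admin in exec_attr"; missing=1; }

    return "$missing"
}
```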

References:

ndc(1M), named(1M), rbac(5), profiles(1), rolemod(1M), roles(1), rndc(1M), usermod(1M), useradd(1M)

Sys Admin Maintaining DNS Sanity with Hawk

If you are a DNS administrator for anything more than a few dozen hosts, it's easy for your database to get out of sync with what's really on your network. The GPL software tool, Hawk, is designed to help administrators track which hosts in DNS are really on your network and, just as importantly, which hosts are on your network but not in DNS. Hawk can help take the mystery out of DNS maintenance, resulting in a much cleaner, up-to-date database.

Hawk consists of three components: a monitor written in Perl, a MySQL database backend, and a PHP Web interface. The monitor periodically checks whether hosts on your network appear in DNS and are answering on your network. It checks for existence on the network by way of an ICMP ping. I mention ICMP because by default, the Perl Net::Ping module "pings" by attempting a UDP connection to a host's echo port. With the various types of hosts possible on a typical network, this is probably not desirable. As each IP address on your network is polled, the monitor records or updates in the database the current IP address and the hostname, if one exists. If the ping is successful, this timestamp is also recorded in the database.

The Hawk interface consists of a Web page that allows you to choose which "network" to view and how to sort the results. You can also choose whether to view addresses that are neither in DNS nor have responded to pings. These are typically uninteresting, so by default they are not displayed. Each host displayed on the page has a hostname (if available), a last ping time, and a colored "LED" indicating the current status of the address. The LED color will indicate one of five states:

- Green - Address exists in DNS, and is currently answering pings.

- Yellow - Address exists in DNS, but has not answered in more than 24 hours (configurable).

- Red - Address exists in DNS, but has not answered in more than 1 week (configurable).

- Blue - Address does not exist in DNS; however, it is answering pings. This could indicate an unauthorized use of an address, or it could indicate something such as a DHCP-assigned address that has no DNS entry.

- Purple - Address does not exist in DNS, and does not answer pings. This is generally uninteresting to us, so by default these hosts are not displayed. See Figure 1.
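The five states reduce to two inputs: whether the address has a DNS name, and how long ago it last answered a ping. The sketch below illustrates that classification; it is not Hawk's own code. The thresholds default to the 24-hour and 1-week values above and play the role of the $yellowzone and $redzone settings. Two choices are assumptions: "currently answering" is taken to mean "answered within the yellow threshold", and a host in DNS that has never answered is treated as red.

```shell
#!/usr/bin/env bash
# hawk_led IN_DNS LAST_PING NOW [YELLOW_SECS] [RED_SECS]
#   IN_DNS     1 if the address has a name in DNS, 0 if not
#   LAST_PING  Unix time of the last successful ping, 0 if never
#   NOW        current Unix time
# Prints green, yellow, red, blue or purple. hawk_led is a hypothetical name.
hawk_led() {
    local in_dns=$1 last=$2 now=$3
    local yellow=${4:-86400} red=${5:-604800}   # 24 hours, 1 week
    local age=$(( now - last ))

    if (( in_dns )); then
        if (( last == 0 || age > red )); then
            echo red          # in DNS, silent for more than a week (or ever)
        elif (( age > yellow )); then
            echo yellow       # in DNS, silent for more than 24 hours
        else
            echo green        # in DNS and answering
        fi
    elif (( last > 0 && age <= yellow )); then
        echo blue             # answering, but has no DNS entry
    else
        echo purple           # neither in DNS nor answering
    fi
}
```

Usage: hawk_led 1 "$last_ping" "$(date +%s)" classifies one database row, where last_ping is the lastping column for that address.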

Installation

Perl

As previously mentioned, Hawk has three components. These components may each be hosted on separate machines or the same machine, depending on your environment. The monitor should run happily with any version of Perl 5. But the following additional modules will need to be installed: Net::Netmask, Net::Ping, DBI, and DBD::mysql. You can install these modules as follows:

# perl -MCPAN -e "install Net::Netmask"
# perl -MCPAN -e "install Net::Ping"
# perl -MCPAN -e "install DBI"
# perl -MCPAN -e "install DBD::mysql"

MySQL

The database used for storing Hawk's data is MySQL. Hawk was originally written using MySQL 3.23, but since the database requirements are minimal, you can probably get away with older versions, and certainly newer ones. Before the Perl backend and PHP frontend can communicate with the database, you must create the appropriate database and table to store the data. Next, you need to create a database user to allow read and write access to the data from the scripts. Connect to the database as follows:

# mysql --user=<mysql admin user> --password --host=<mysql server>
Enter password:
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 8 to server version: 3.23.40-log

Type 'help;' or '\h' for help. Type '\c' to clear the buffer.

mysql>

Create the database "hawk" and table "ip" using the following SQL statements:

create database hawk;
use hawk;
-- MySQL 5.5 and later reject type=MyISAM; write ENGINE=MyISAM there instead.
create table ip (
  ip char(16) NOT NULL default '0',
  hostname char(255) default NULL,
  lastping int(10) default NULL,
  primary key (ip),
  unique key ip (ip),
  key ip_2 (ip)
) type=MyISAM comment='Table for last ping time of hosts';

Create the user "hawk" using the following SQL:

-- MySQL 8.0 and later no longer accept IDENTIFIED BY in GRANT;
-- there, run CREATE USER ... IDENTIFIED BY first, then the GRANT.
grant select,insert,update,delete on hawk.* to hawk@localhost identified by 'hawk';
grant select,insert,update,delete on hawk.* to hawk@"%" identified by 'hawk';
flush privileges;

This gives the user "hawk" permission to select, insert, update and delete rows in the hawk database from any host on the network. For added security, you can limit this to a given host by changing the "%" to a specific hostname.

For managing MySQL, you may want to consider installing phpMyAdmin, which is available from:

phpMyAdmin is a Web-based tool for administering MySQL databases. It can be used to add/drop databases, create/drop/alter tables, delete/edit/add fields, execute SQL, manage keys, and import/export data. You can use this tool later in the installation process to verify that your database is being populated with data.

Apache/PHP

The interface was written using PHP 4.0.6 under Apache 1.3.22. Later versions of PHP should work fine, and any version of Apache will probably work. If your Web server is running on the same machine as the Hawk monitor, you can simply make a symbolic link in the Apache document root to the PHP directory of hawk as follows:

# cd /var/apache/htdocs
# ln -s /opt/hawk/php hawk

If you are running on separate machines, you will need to copy the entire PHP directory from the installation directory to a directory named "hawk" within the Apache document root.

Hawk

Hawk is hosted at SourceForge. To download, go to:

http://sourceforge.net/projects/iphawk

or

ftp://ftp.sourceforge.net/pub/sourceforge/iphawk

Under "Latest File Releases", click Download and you will be taken to the download page. The latest version will be highlighted. This article is based on Hawk version 0.6. The downloaded file will be called hawk-0.6.tar.gz. You can save this in the directory in which you want to extract the Hawk program, (e.g., /opt). Extract the software as follows:

# cd /opt
# tar xvzf hawk-0.6.tar.gz
# ln -s /opt/hawk-0.6 /opt/hawk

Within the installation directory, you have two subdirectories - one for the monitor and one for the PHP interface. Following is a basic breakdown of what is installed:

./daemon             - directory for perl monitor daemon
./daemon/hawk        - the monitor daemon
./daemon/hawk.conf   - config file for monitor daemon
./php                - directory for php interface
./php/hawk.conf.inc  - php web interface config file
./php/hawk.css       - style sheet file for web interface
./php/hawk.php       - web interface script
./php/images         - directory for web interface images

The first step in configuring Hawk is to edit the monitor config file daemon/hawk.conf. The variables in this file need to follow standard Perl syntax conventions, because this file is read into the monitor script using a "do" statement. Configurable parameters in the config file are as follows:

- @networks - This should contain a list of local networks you want to be monitored by Hawk. The networks must be specified in CIDR format. That is, if you have a class C network (24 bits) of the range 192.168.2.0-255, the CIDR form would be "192.168.2.0/24."

- @gateways - This should contain the list of gateways used by all networks. This parameter is not required; however, if it is used, the monitor will verify that the gateway is up before trying the rest of the hosts on that network.

- $frequency - This parameter is the number of seconds between checks. A 0 value will cause the monitor to loop continuously.

- $pingtimeout - This variable indicates how long a ping will wait before giving up and moving on to the next host.

- $debuglevel - This can be set at 0-2. During initial setup, you may want to use debug level 2. This will allow you to see every ping, and verify database operations. Level 1 will give basic progress reports (e.g., when each network is being checked). Level 0 is, of course, no logging at all. You probably want to switch to level 1 or 0 after initial install.

- $logfile - The name of the logfile where the above debugging information is written.

- $dbuser - MySQL database username.

- $dbpass - MySQL database password.

- $dbhost - Database server hostname or IP address.

- $dbname - Database name ("hawk").

- $pidfile - PID file used for shutting down and restarting Hawk.

See hawk.conf.sample (Listing 1).
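The monitor's core loop can be modeled in a few lines. The following is a minimal Python sketch, not Hawk's actual Perl code. It expands each CIDR entry from @networks into host addresses, looks up the matching entry in @gateways, and skips a network whose gateway does not answer. The `ping` callable is a hypothetical stand-in for a real ICMP probe, which typically needs root privileges, and the helper names are illustrative only.

```python
import ipaddress
from typing import Callable, Dict, List, Optional


def hosts_to_check(cidr: str) -> List[str]:
    """Expand a CIDR network (e.g. "192.168.2.0/24") into its usable host addresses."""
    network = ipaddress.ip_network(cidr, strict=True)
    return [str(host) for host in network.hosts()]


def gateway_for(cidr: str, gateways: List[str]) -> Optional[str]:
    """Return the first gateway from the @gateways list that lies inside this network."""
    network = ipaddress.ip_network(cidr, strict=True)
    for gw in gateways:
        if ipaddress.ip_address(gw) in network:
            return gw
    return None


def sweep(networks: List[str], gateways: List[str],
          ping: Callable[[str], bool]) -> Dict[str, bool]:
    """One monitoring pass; a network is skipped entirely if its gateway is down."""
    results: Dict[str, bool] = {}
    for cidr in networks:
        gw = gateway_for(cidr, gateways)
        if gw is not None and not ping(gw):
            continue  # gateway down: the rest of this network is unreachable anyway
        for host in hosts_to_check(cidr):
            results[host] = ping(host)
    return results
```

For the example in the @networks description, `len(hosts_to_check("192.168.2.0/24"))` is 254, because the network and broadcast addresses are not pinged.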

The PHP backend has a similar simple configuration. The config file is php/hawk.conf.inc. This file is sourced into the main hawk.php script so, like the monitor config file, it must contain syntax understood by PHP. The configurable parameters are as follows:

- $dbuser, $dbpass, $dbhost, $dbname - These should be set to the same values as in daemon/hawk.conf.

- $redzone - If a host has not been ping-able in this amount of time (in seconds), the LED will glow red.

- $yellowzone - If a host has not been ping-able in this amount of time (in seconds), the LED will glow yellow, unless it has also surpassed $redzone.

- $networks - This should contain all the networks you specified within your hawk.conf file above. However, each of the networks is paired with a human-readable name for this parameter. If your network contains a really large broadcast domain, it may be too large for easy viewing on a single Web page, in which case you can break it into logical pieces. You can do so by specifying smaller, adjacent networks. For example, if you specified 192.168.4.0/22 in your daemon/hawk.conf above, you can break that into the following networks in php/hawk.conf.inc for display purposes: 192.168.4.0/24, 192.168.5.0/24, 192.168.6.0/24, 192.168.7.0/24. See hawk.conf.inc.sample (Listing 2).
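You can check the display split and the LED thresholds before editing php/hawk.conf.inc. The following Python sketch is not part of Hawk, which does this in PHP. It uses the standard `ipaddress` module to break a /22 into adjacent /24s, and it maps a host's time since its last successful ping to an LED color. The function names are hypothetical. The "green" state for healthy hosts is an assumption, because the text only defines yellow and red.

```python
import ipaddress
from typing import List


def display_networks(cidr: str, prefix: int = 24) -> List[str]:
    """Split a large monitored network into adjacent, smaller display networks."""
    return [str(sub) for sub in ipaddress.ip_network(cidr).subnets(new_prefix=prefix)]


def led_color(seconds_unreachable: float, yellowzone: float, redzone: float) -> str:
    """Map the time since a host last answered a ping to an LED color."""
    if seconds_unreachable > redzone:
        return "red"
    if seconds_unreachable > yellowzone:
        return "yellow"
    return "green"  # assumption: the healthy state is shown as green
```

`display_networks("192.168.4.0/22")` returns the same four /24 networks listed above.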

The look and feel of the Web interface for Hawk are customizable using cascading style sheets. All of the styles have been placed into a separate CSS file, php/hawk.css.

Running Hawk

After installation of all components is complete, the next step is to start Hawk by hand and watch the logfile to verify proper operation:

# /opt/hawk-0.6/daemon/hawk &
# tail -f /var/log/hawk

If you set your $debuglevel to 2, this should provide a sufficient level of detail to identify any problems. The most common problem is database connectivity. If the logging seems to hang at the point it is doing a database access, the database server name might be the issue. This will also eventually cause the script to fail and exit. If there is a problem with user credentials (e.g., username/password), the script will fail immediately. Once database connectivity is properly established, the log should display every ping attempt and every database access/update. Also, verify that data is going into the database by viewing database logs or using a tool like phpMyAdmin.
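Database connectivity is the most common failure. It can help to tell a bad hostname apart from an unreachable server before you read through debug output. The following Python sketch is a hypothetical helper, not part of Hawk. It resolves $dbhost and then opens a TCP connection to it, assuming MySQL listens on its default port, 3306. A successful connection only shows that the port is reachable. It does not validate $dbuser or $dbpass.

```python
import socket


def check_db_host(host: str, port: int = 3306, timeout: float = 5.0) -> str:
    """Distinguish 'name does not resolve' from 'server unreachable' from 'port open'."""
    try:
        socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    except socket.gaierror as exc:
        return f"cannot resolve {host}: {exc}"
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "ok"
    except socket.timeout:
        # Matches the "logging seems to hang" symptom: packets are silently dropped.
        return f"timed out connecting to {host}:{port}"
    except OSError as exc:
        # e.g. connection refused: the host is up but nothing listens on the port.
        return f"cannot connect to {host}:{port}: {exc}"
```

For example, `check_db_host("db.example.org")` (a hypothetical host) returns "ok" or a message naming the stage that failed.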

Once proper operation is verified, configure your system to start Hawk at boot. A sample init.d script for starting, stopping, and restarting Hawk is provided in hawk.init.d.sample (Listing 3).

You will need to copy the script into your init.d directory and make symbolic links to the appropriate rc?.d directories as follows:

For the 0, 1, S, and 6 runlevels:

ln -s /etc/init.d/hawk /etc/rc0.d/K90hawk
ln -s /etc/init.d/hawk /etc/rc1.d/K90hawk
ln -s /etc/init.d/hawk /etc/rc6.d/K90hawk
ln -s /etc/init.d/hawk /etc/rcS.d/K90hawk

For the 2, 3, and 4 runlevels:

ln -s /etc/init.d/hawk /etc/rc2.d/S90hawk
ln -s /etc/init.d/hawk /etc/rc3.d/S90hawk
ln -s /etc/init.d/hawk /etc/rc4.d/S90hawk

The location of the init.d and rc?.d directories varies between systems, so modify the commands to match the layout of your system. Also, runlevel 5 is used differently on different systems. Some UNIX systems use runlevel 5 for shutdown, while some Linux systems use runlevel 5 as the default runlevel. Verify how your system uses this runlevel and create the appropriate symbolic links as above.

Once you've verified proper operation of Hawk and installed the above startup scripts, reboot your system at the next opportunity to verify proper startup.

Next, you need to verify the interface is working properly by opening the page in your browser. The URL should be something like http://hawk.someplace.org/hawk/hawk.php. When the page loads, select a network and click "Go". The page will be redisplayed listing the hosts on your network as in Figure 1. If this does not work as expected, database connectivity is most likely the problem. PHP will generally report any connectivity problems directly on the Web page. The error messages given are usually very specific and you should be able to identify the problem right away. You should also check your MySQL log to verify the PHP queries are actually reaching the database.

If you were successful with your installation, you will be able to use Hawk to manage your DNS with a little more sanity.

Greg Heim has been working as a UNIX systems administrator for 13 years. He has a strong background in programming and relational databases. He can be contacted at: [email protected].


Identify and Mitigate Windows DNS Threats

Zone transfers can be prevented at the firewalls and routers on the perimeter of your network. DNS client queries are transmitted on UDP port 53, and TCP port 53 is used for zone transfers. Avoid zone transfers via TCP port 53 to anything outside the protected network (outside your firewall).

Most organizations have Internet-facing systems and run both internal and external DNS servers to service each zone. In this case, block incoming UDP and TCP port 53 at the internal and external firewalls and at the DMZ routers. Allow TCP port 53 only through the routers and firewall that connect the internal and external DNS servers. To resolve external names queried by internal hosts, the internal DNS servers should forward queries to the external DNS servers. Configure the external DNS servers in front of the firewall with root hints pointing to the Internet root servers. External hosts should use only the external DNS servers for Internet name resolution.
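For DNS traffic crossing the firewall, the perimeter policy above comes down to one rule: port 53 is permitted only between the internal and external DNS servers. The following Python sketch models that decision so you can test the intent against example flows. It is an illustration, not firewall configuration. The `Flow` type and the addresses in the usage lines, taken from private and documentation ranges, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src: str
    dst: str
    proto: str      # "udp" or "tcp"; the policy above treats both alike
    dst_port: int


def permit_dns(flow: Flow, internal_dns: str, external_dns: str) -> bool:
    """Permit port-53 traffic across the firewall only between the two DNS servers."""
    if flow.dst_port != 53:
        raise ValueError("this sketch only evaluates DNS (port 53) flows")
    # Forwarded queries (internal -> external) and zone transfers between the pair pass;
    # internal clients and outside hosts may not reach port 53 across the firewall.
    return {flow.src, flow.dst} == {internal_dns, external_dns}


INTERNAL, EXTERNAL = "10.0.0.53", "192.0.2.53"
print(permit_dns(Flow(INTERNAL, EXTERNAL, "udp", 53), INTERNAL, EXTERNAL))       # forwarder
print(permit_dns(Flow("10.0.0.20", EXTERNAL, "udp", 53), INTERNAL, EXTERNAL))    # client
```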

Windows Server 2003 DNS performs zone transfers only with servers listed in the zone's Name Server (NS) resource records. Even so, you should still configure your Windows DNS server to allow zone transfers only to specific IP addresses. Expose reverse lookup zones to external DNS servers only if necessary. Network Address Translation (NAT) is a very good strategy on many networks and can be implemented on the DMZ where the DNS server is located. NAT adds further protection from hackers and intruders because it obscures internal addressing by translating IP addresses to predetermined address ranges. Restricting zone transfers to authorized or known servers also helps prevent an attacker from injecting unauthorized data into your DNS zone files. If attackers cannot capture your zone data from a zone transfer, they cannot map the makeup of your network. Without that map, they cannot easily do ugly things such as spoofing IP addresses so that packets appear to have come from an internal host.
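To confirm that a server really refuses zone transfers, send it an AXFR request over TCP port 53 from a host that should not be allowed one. The following Python sketch builds the request by hand from four parts:

- a standard DNS header,
- the zone name encoded as length-prefixed labels,
- QTYPE 252 (AXFR),
- the 2-byte length prefix DNS uses over TCP.

A reply with RCODE 0 and at least one answer record counts as a successful transfer. A REFUSED reply, a reset or a timeout counts as blocked. The function names and the zone name are hypothetical. Run it only against servers you administer.

```python
import socket
import struct

AXFR = 252  # QTYPE for a full zone transfer
IN = 1      # QCLASS Internet


def build_axfr_query(zone: str, query_id: int) -> bytes:
    """Encode an AXFR question, prefixed with the 2-byte length DNS uses over TCP."""
    header = struct.pack("!HHHHHH", query_id, 0, 1, 0, 0, 0)  # ID, flags, QDCOUNT=1
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in zone.rstrip(".").split(".")
    ) + b"\x00"
    message = header + qname + struct.pack("!HH", AXFR, IN)
    return struct.pack("!H", len(message)) + message


def reply_allows_transfer(reply: bytes) -> bool:
    """True if the reply header reports success (RCODE 0) and carries answer records."""
    if len(reply) < 12:
        return False
    rcode = reply[3] & 0x0F                   # low 4 bits of the flags; 5 = REFUSED
    (ancount,) = struct.unpack("!H", reply[6:8])
    return rcode == 0 and ancount > 0


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("server closed the connection")
        data += chunk
    return data


def zone_transfer_allowed(server: str, zone: str, timeout: float = 5.0) -> bool:
    """Ask `server` for a full transfer of `zone` and report whether it complied."""
    try:
        with socket.create_connection((server, 53), timeout=timeout) as sock:
            sock.sendall(build_axfr_query(zone, 0x1234))
            (length,) = struct.unpack("!H", _recv_exact(sock, 2))
            return reply_allows_transfer(_recv_exact(sock, length))
    except OSError:
        # Refused, reset, or timed out: TCP 53 is blocked or the transfer was denied.
        return False
```

Run from an outside host, `zone_transfer_allowed("ns1.example.com", "example.com")` (hypothetical names) should return False if the restrictions are in place.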

Figure: Windows DNS Zone Transfer Configuration

Another option, and undoubtedly the one Microsoft would prefer you use, is to use only AD-integrated DNS zones, as opposed to Standard DNS zones. AD-integrated DNS servers will only participate in zone replication with other AD-integrated DNS servers. Also, all DNS servers hosting AD-integrated zones must be registered in AD before they'll even be functional, and replication traffic between AD-integrated DNS servers is encrypted.

DNS cache poisoning occurs when an attacker can predict the DNS transaction IDs (query IDs) in a DNS conversation between server and client and then insert bogus data into the data stream. An attacker can use this in a number of ways. Examples include redirecting a popular search engine to a pop-up ad site, or redirecting a user to a bogus bank website to capture account passwords.
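You can estimate the attacker's odds with simple arithmetic. A DNS transaction ID is 16 bits, so it can take 65,536 values. A blind attacker who gets N distinct forged replies in before the genuine answer succeeds with probability N/65536. If the resolver also randomizes its UDP source port, the space is multiplied by the number of ports used. The following Python sketch computes this under simplifying assumptions: IDs and ports are chosen uniformly, and every forged reply arrives in time. The function name is illustrative.

```python
from fractions import Fraction


def spoof_odds(forged_replies: int, id_bits: int = 16, source_ports: int = 1) -> Fraction:
    """Probability that one of `forged_replies` distinct guesses matches the real reply.

    Assumes a uniformly random transaction ID (and source port, if randomized),
    and that all forged replies reach the resolver before the genuine answer.
    """
    space = (2 ** id_bits) * source_ports
    return min(Fraction(1), Fraction(forged_replies, space))
```

With a fixed source port, 65,536 forged replies cover the whole ID space. If the resolver randomizes over the unprivileged ports 1024-65535 (64,512 values), the same 65,536 replies succeed with probability of only 1/64512.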

Windows Server 2003 DNS servers use a secure response option that prevents unrelated resource records in a referral answer from being added to the cache. Normally, a DNS server caches any names in referral answers to speed up the resolution of later DNS queries. The Secure Cache Against Pollution option is enabled by default on Windows Server 2003 DNS servers. When it is enabled, the server can decide whether a referred name is polluting or insecure and discard it. The server caches the name offered in the referral only if it is part of the exact DNS domain tree for which the original name query was made. For example, for a query made for marketing.companysix.com, a referral answer of companyeight.net would not be cached.
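The caching decision described above amounts to a suffix check aligned on whole labels. The following Python sketch is a simplified model, not Microsoft's implementation. It assumes that the "exact DNS domain tree" for a query about marketing.companysix.com is companysix.com, and it keeps only referral names inside that tree. Comparing whole labels matters, because a plain string suffix test would wrongly accept a name such as notcompanysix.com.

```python
from typing import List


def _normalize(name: str) -> str:
    """DNS names are case-insensitive; drop any trailing root dot."""
    return name.lower().rstrip(".")


def in_domain_tree(name: str, domain: str) -> bool:
    """True if `name` is `domain` itself or a subdomain of it (label-aligned)."""
    name, domain = _normalize(name), _normalize(domain)
    return name == domain or name.endswith("." + domain)


def cacheable_referral_names(referral_names: List[str], queried_domain: str) -> List[str]:
    """Keep only referral names inside the queried domain tree; drop the rest."""
    return [n for n in referral_names if in_domain_tree(n, queried_domain)]
```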

Figure: Windows 2003 'Secure Cache Against Pollution Setting'

If you have BIND-based DNS servers in your environment, you should update to BIND 9, which helps alleviate some of the more commonly used methods of DNS cache poisoning. It does not prevent them, but its implementation contains improvements that make cache poisoning more difficult.

Denial of Service (DoS) attacks can occur when an attacker attempts to obstruct the availability of network services by flooding one or more DNS servers in the network with recursive queries or zone transfer requests. As a DNS server is flooded with queries, its resources eventually reach their maximum and the DNS Server service becomes unavailable. Blocking UDP and TCP port 53 at internal and external firewalls and DMZ routers should help alleviate this, as should allowing DNS-related traffic only to and from authorized servers. Windows 200x DNS also has a built-in feature called zone transfer metering: after a zone transfer occurs, the server will not allow another zone transfer for a period of time. Without this limit, an attacker could flood the server with zone transfer requests and queries. That would lock up the server and keep it from performing updates or answering queries efficiently, or at all.
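Zone transfer metering behaves like a cooldown timer. The following Python sketch uses a hypothetical class and is not the Windows implementation. It refuses a new transfer until a configurable interval has passed since the last one started. Following the description above, it applies a single server-wide window. The text does not give the interval Windows actually uses, so `cooldown` is left as a parameter. The clock is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable, Optional


class ZoneTransferMeter:
    """Refuse a new zone transfer until `cooldown` seconds after the previous one."""

    def __init__(self, cooldown: float, clock: Callable[[], float] = time.monotonic):
        self.cooldown = cooldown
        self.clock = clock
        self._last_start: Optional[float] = None

    def try_start(self) -> bool:
        """Return True and record the start time if a transfer may begin now."""
        now = self.clock()
        if self._last_start is not None and now - self._last_start < self.cooldown:
            return False  # still inside the metering window
        self._last_start = now
        return True
```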

Client security and dynamic updates: Dynamic updates are required for Active Directory-integrated zones. For the highest protection, configure AD to allow only secure dynamic updates. Wherever possible, have DHCP perform dynamic updates instead of DNS clients, which increases the security of the DNS zone data. With secure dynamic updates, the DNS zone information is stored in Active Directory and is therefore protected by Active Directory security features. Once a zone is configured as Active Directory-integrated, Access Control List (ACL) entries can specify which users, computers, and groups can change the zone or a specific record. The DNS server then accepts new registrations only from computers that have a computer account in Active Directory. It accepts updates to a record only from the computer that originally registered it. It also forces the DHCP server and/or client PCs to encrypt the information.

DNS Security (DNSSEC, RFC 2535) is a public key infrastructure (PKI) based system that provides authentication and data integrity to DNS resolvers. Zone data is signed with private keys. DNSSEC-aware resolvers then verify these digital signatures with the corresponding public keys. The required digital signatures and public keys are added to the DNS zone as resource records.

The public key is stored in the KEY RR (Resource Record), and the digital signature is stored in the SIG RR. The KEY RR must be supplied to the DNS resolver before it can successfully authenticate the SIG RR. DNSSEC also introduces one additional RR, the NXT RR, which is used to cryptographically assure the resolver that a particular RR does not exist in the zone.
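The NXT idea is easiest to see in code. Names in a signed zone are sorted in DNSSEC canonical order, which compares labels starting from the rightmost one and ignores case. Each NXT record links one name to the next, and the last one wraps back to the zone apex. If a resolver receives an NXT record whose interval brackets the queried name, it knows that name does not exist. This Python sketch models only the ordering and the coverage check. It ignores the type bitmap and the signatures, and the function names are illustrative. The same chaining idea carried over to NSEC, which replaced NXT in later DNSSEC specifications (RFC 4034).

```python
from typing import List, Optional, Tuple


def canonical_key(name: str) -> Tuple[str, ...]:
    """Canonical DNSSEC order: compare labels from the rightmost one, case-insensitively."""
    return tuple(reversed(name.lower().rstrip(".").split(".")))


def build_nxt_chain(names: List[str]) -> List[Tuple[str, str]]:
    """Link every owner name to the next one; the last name wraps to the zone apex."""
    ordered = sorted({n.lower().rstrip(".") for n in names}, key=canonical_key)
    return [(ordered[i], ordered[(i + 1) % len(ordered)]) for i in range(len(ordered))]


def covering_nxt(chain: List[Tuple[str, str]], qname: str) -> Optional[Tuple[str, str]]:
    """Return the (owner, next) pair proving qname does not exist, or None if it exists."""
    key = canonical_key(qname)
    for owner, nxt in chain:
        lo, hi = canonical_key(owner), canonical_key(nxt)
        if lo < key < hi:
            return owner, nxt
        if hi <= lo and (key > lo or key < hi):  # wrap-around record at the end of the zone
            return owner, nxt
    return None
```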

DNSSEC is only partially supported in Windows Server 2003 DNS, providing basic support as specified in RFC 2535. A Windows Server 2003 DNS server can only operate as a secondary to a BIND server that fully supports DNSSEC. The support is partial because DNS in Windows Server 2003 does not provide any means to sign or verify the digital signatures. In addition, the Windows Server 2003 DNS resolver does not validate any of the DNSSEC data that is returned as a result of queries.

All of this by no means covers everything you need to know about installing and hardening your Windows-based DNS servers. It should, however, be a good start toward understanding the key things you need to do to protect your servers and your network. Grab some aspirin.

FAQ

We're not filtering UDP on port 53, but nobody can resolve from us. What do we need to change?

Old versions of BIND sent DNS resolution queries to port 53 on the remote nameserver and received the replies back on port 53 as well. The new software still sends to port 53, but the replies come back on a random port above 1023. This is a problem for sites that filter UDP traffic on ports above 1023. Most "older" firewalls filter ports above 1023 as a matter of course. As a result, resolvers running BIND 8.1.1 cannot get proper name resolution for sites behind those firewalls. This affects customers using Allegiance Internet name resolvers: those name servers cannot query the remote site about the names in question, and their queries time out.

If you are running a firewall and nameservers, you must remove UDP filtering for your nameserver not only on port 53 but also on ports above 1023.
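The following Python sketch covers both halves of the FAQ answer. The first function asks the operating system for a UDP source port, the same way a resolver's query socket gets one. On typical systems the OS-assigned port is above 1023 (for example, 32768 and up on Linux). The second function is a hypothetical firewall predicate for UDP replies arriving at your nameserver. It accepts traffic from port 53 to port 53 or to any port above 1023, which is the opening the answer above calls for.

```python
import socket


def ephemeral_udp_port() -> int:
    """Let the OS pick a UDP source port, as a resolver's query socket would."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Port 0 means "any free port". A real resolver binds its outward-facing address.
        sock.bind(("127.0.0.1", 0))
        return sock.getsockname()[1]


def permit_inbound_dns_reply(src_port: int, dst_port: int) -> bool:
    """Accept UDP replies from a remote port 53 to local port 53 or any port above 1023."""
    return src_port == 53 and (dst_port == 53 or dst_port > 1023)
```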

Won't removal of those filters create a security hole in my network?