|

|

Home | Switchboard | Unix Administration | Red Hat | TCP/IP Networks | Neoliberalism | Toxic Managers |

| (slightly skeptical) Educational society promoting "Back to basics" movement against IT overcomplexity and bastardization of classic Unix | |||||||

|

|

|

|

Grid Engine which is often called Sun Grid Engine (SGE) is a software classic. It is a batch jobs controller like batch command on steroids, not so much a typical scheduler. At one point Sun open source the code, so open source version exists. It is the most powerful (albeit specialized) open source scheduler in existence. This is one of most valuable contributions of Sun to open source community as it provides industrial strength batch scheduler for Unix/Linux.

Again this is one of the few classic Unix software systems. SGE 6.2u7 as released by Sun has all signs of the software classic. It inherited from Sun days a fairly good documentation (although software vandals form Oracle destroyed a lot of valuable Sun documents). Any engineer or scientist can read the SGE User Manual and Installation Guide. Then install it (it set up a single queue all.q that can be used immediately), and start using it for his/her needs in a day or two using the defaults without any training. As long as the networking is reliable and jobs are submitted correctly, SGE runs them with nearly zero administration.

SGE is an very powerful and flexible batch system, that probably should became standard Linux subsystem replacing or supplementing very basic batch command. It is available in several Linux distributions such as Debian and Ubuntu as installable software package from the main depository. It is available from "other" depositories for CentOS, RHEL and Suse.

SGE has many options to help effectively use all of computational resources -- grid consisting of head node and computational nodes, each with certain number of cores (aka slots).

But the back side of power and flexibility is complexity. It is a complex system that requires study. You need carefully study the man pages and manuals to get most out of it. The SGE mailing list is also a great educational resource. Don't hesitate to ask questions. Then when you become an expert you can help others to get to speed with the product. Installation is easy, but it usually take a from six month to a year for an isolated person to master the basics (much less if you have at least one expert on the floor). But like with any complex and powerful system even admins with 10years experience probably know only 60-70% of the SGE.

Now that the pieces are falling into place, after Oracle's acquisition of Sun Microsystems and then abandoning the product, we can see that open source can help "vendor-proof" important parts of Unix. Unix did not have a decent batch scheduler before Grid Engine and now it has it. Grid Engine is alive and well, with a blog, a mailing list, a git repository, and even commercial version from Univa. Source code repositories can also be found from the Open Grid Scheduler (Grid Engine 2011.11 is compatible with Sun Grid Engine 6.2u7 ) and Son of Grid Engine projects. Open Grid Scheduler looks like abandonware (user group is active), while Son of Grid Engine is actively developed and currently represents the most viable open source SGE implementation.

As of version 8.1.8 it is the most well debugged open source distribution. It might be especially attractive for those who what have experience with building software, but can be used by everybody on RHEL for which precompiled binaries exist.

Installation is pretty raw, but I tried to compensate for that by creating several pages which together document installation process of RHEL 6.5 or 6.6 pretty well:

Even in the present form they are definitely more clear and useful then old Sun 6.2u5 installation documentation ;-).

Most of the SGE discussion uses the term cluster, but SGE is not linked to cluster technology in any meaningful way. In reality it designed to operate on a heterogeneous server farm.

We will use the term "server farm" here as an alternative and less ambitious term then the term "grid".

The default installation of Grid Engine assumes that the $SGE_ROOT directory (root directory for Grid Engine installation) is on a shared (for example by NFS) filesystem accessible by all hosts.

Right now SGE exists in several competing versions (see SGE implementations) but the last version of Son of Grid engine produced was 8.1.9. After that Dave Love abandoned the project. So while it can be installed on RHEL7 and works, the future of SGE is again in limbo.

The last version of Son grid engine was released in March 2016 (all versions listed below are also downloadable from Son of Grid Engine (SGE) - Browse -SGE-releases at SourceForge.net):

2016-03-02: Version 8.1.9 available. Note that this changes the communication protocol due to the MUNGE support, and really should have been labelled 8.2 in hindsight ensure you close down execds before upgrading.

2014-11-03: Version 8.1.8 available.

2014-06-01: Version 8.1.7 available.

2013-11-01: Version 8.1.6 available, fixing various bugs.

2013-09-29: Version 8.1.5 available, mainly to fix MS Windows build problems.

2013-08-30: Version 8.1.4available; bug fixes and some enhancements, now with over 1000 patches since Oracle pulled the plug.

2013-02-27: Version 8.1.3available; bug fixes and a few enhancements, plus Debian packaging (as an add-on).

2013-01: The gridengine.debian repository contains proposed new packaging for Debian (as opposed to the standalone packaging now in the sge repository).

The Grid Engine system has functions typical for any powerful batch system:

But as a powerful batch system it is oriented on running multiple jobs optimally on the available resources Typically multiple computers(nodes) of a computational cluster. In its simplest form, a grid appears to users as a large system that provides a single point of access to multiple computers.

In other words grid is just a loose confederation of different computers which can run different OSes connected by regular TCP/IP links. In this sense it is close to the concept of a server farm. Grid engine does not care about uniformity of a server farm and along with scheduling provides some central administration and monitoring capabilities to server farm environment.

SGE enables to distribute jobs across a grid and treat the grid as a single computational resource. It accepts jobs submitted by user(s) and schedule them to be run on appropriate systems in the grid. Users can submit as many jobs at a time as they want without being concerned about where the jobs run.

The main purpose of a batch system like SGE is to optimally utilize system resources that are present in a server farm. To schedule jobs ob available nodes in the most efficient way possible.

Every aspect of the batch system is accessible through the perl API. There is almost no documentation but a few sample scripts in gridengine/source/experimental/perlgui and on Internet such as by Wolfgand Frieebel from DESY ( see ifh.de) can be used as a guidance

Grid Engine architecture is structured around two main concepts:

A queue is a container for a class of jobs that are allowed to run on one or more hosts concurrently. Logically queue is a child of parallel environment (see below) although it can have several such parents. It defines set of hosts and limitation of resources on those hosts.

A queue can reside on a single host, or a queue can extend across multiple hosts. The latter are called server farm queues. Server farm queues enable users and administrators to work with a server farm of execution hosts by means of a single queue configuration. Each host that is attached to the head node can belong to one of more queues

A queue determines certain job attributes. Association with a queue affects some of the things that can happen to a job. For example, if a queue is suspended, all jobs associated with that queue are also suspended.

Grid Engine has always have one default queue called all.q, which is created during the initial installation and updated each time you add another execution host. You can can have several additional queries each of them defining the set of host to run the jobs each with own computational requirements, for example, number of CPUs (ala slots) . The problem here is that without special measures queues are independent and if they contain the same set of nodes oversubscription can easily occur.

Each job should not exceed maximum parameters defined in queue (directly or indirectly via parralal environment). Then SGE scheduler can optimize the job mix for available resources by selecting the most suitable job from the input query and sending it to the most appropriate node of a grid.

Queue defines class of jobs that consume computer resources in a similar way. It also define list of computational nodes on which such jobs can be run.

Jobs typically are submitted to a queue.

In the book Building N1 Grid Solutions Preparing, Architecting, and Implementing Service-Centric Data Centers we can find an interesting although overblown statement:

The N1 part of the name was never intended to be a product name or a strategy name visible outside of Sun. The name leaked out and stuck. It is the abbreviation for the original project name Network-1. The SUN1 workstation was Sun's first workstation. It was designed specifically to be connected to the network.

N1 Grid systems are the first systems intended to be built with the network at their core and be based on the principle that an IP-based network is effectively the system bus.

Parallel environment (PE) is the central notion of SGE and represents a set of settings that tell Grid Engine how to start, stop, and manage jobs run by the class of queues that are using this PE.

It sets the maximum number of slot that can be assigned to all jobs within a given queue. It also set some parameters for parallel messaging framework such as MPI, that is used by parallel jobs.

|

|

The usual syntax applies:

Parallel environment is the defining characteristic of each queue. Needs to be specified in correctly for queue to work. It is specified in pe_list attribute which can contain a single PE or list of PEs. For example:

pe_list make mpi mpi_fill_up

Each parallel environment determines a class of queues that use it and has several important attributes are:

If wallclock accounting is used (execd_params ACCT_RESERVED_USAGE and/or SHARETREE_RESERVED_USAGE set to TRUE) and control_slaves

is set to FALSE, the job_is_first_task parameter influences the accounting for the job: A value of TRUE means that accounting

for cpu and requested memory gets multiplied by the number of slots requested with the -pe switch, if job_is_first_task is

set to FALSE, the accounting information gets multiplied by number of slots + 1.

The accounting_summary parameter can be set to TRUE or FALSE. A value of TRUE indicates that only a single accounting record

is written to the accounting(5) file, containing the accounting summary of the whole job including all slave tasks, while

a value of FALSE indicates an individual accounting(5) record is written for every slave task, as well as for the master task.

Note: When running tightly integrated jobs with SHARETREE_RESERVED_USAGE set, and with having accounting_summary

enabled in the parallel environment, reserved usage will only be reported by the master task of the parallel job. No per parallel

task usage records will be sent from execd to qmaster, which can significantly reduce load on qmaster when running large tightly

integrated parallel jobs.

Some important details are well explained in the blog post Configuring a New Parallel Environment

Grid generally consists of a head node and computational nodes. Head node typically runs sge_master and often called master host. Master host can and often is be the source of export of NFS to computational nodes but this is not necessary.

qconf -ah <hostname>

Two daemons provide the functionality of the Grid Engine system. They are started via init scripts.

Documentation for such a complex and powerful system is fragmentary and generally is of low quality. Even some man pages contain questionable information. Many does not explain features available well, or at all.

This is actually why this set of pages was created: to compensate for insufficient documentation for SGE.

Although version of SGE generally are compatible, some features implementation depends on version used. See history for the list of major implementations.

Documentation to the last opensource version produced by Sun (version 6.2u5) is floating on the Internet. See, for example:

There are docs for older versions too,

And some presentations

Some old Sun Blueprints about SGe still can be found too. But generally Oracle behaved horribly bad as a trustee of Sun documentation portal. They proved to be simply vandals in this particular respect: discarding almost everything without mercy, destroying considerable value and an important part of Sun heritage.

Moreover, those documents organized into historical website might still can earn some money (and respect, which is solely missing now, after this vandalism) for Oracle if they preserved the website. No they discarded everything mercilessly.

Documentation for Oracle Grid Engine which is now abandonware might also floating around.

For more information see

See also SGE Documentation

|

|

Switchboard | ||||

| Latest | |||||

| Past week | |||||

| Past month | |||||

Jan 29, 2021 | finance.yahoo.com

So it now owns the only commercial SGE offering along with PBSpro. Univa Grid Engine will now be referred to as Altair Grid Engine.

Altair will continue to invest in Univa's technology to support existing customers while integrating with Altair's HPC and data analytics solutions. These efforts will further enhance the capability and performance requirements for all Altair customers and solidify the company's leadership in workload management and cloud enablement for HPC. Univa has two flagship products:

· Univa ® Grid Engine ® is a leading distributed resource management system to optimize workloads and resources in thousands of data centers, improving return-on-investment and delivering better results faster.

Dec 16, 2018 | liv.ac.uk

Index of /downloads/SGE/releases/8.1.9

This is Son of Grid Engine version v8.1.9. See <http://arc.liv.ac.uk/repos/darcs/sge-release/NEWS> for information on recent changes. See <https://arc.liv.ac.uk/trac/SGE> for more information. The .deb and .rpm packages and the source tarball are signed with PGP key B5AEEEA9. * sge-8.1.9.tar.gz, sge-8.1.9.tar.gz.sig: Source tarball and PGP signature * RPMs for Red Hat-ish systems, installing into /opt/sge with GUI installer and Hadoop support: * gridengine-8.1.9-1.el5.src.rpm: Source RPM for RHEL, Fedora * gridengine-*8.1.9-1.el6.x86_64.rpm: RPMs for RHEL 6 (and CentOS, SL) See <https://copr.fedorainfracloud.org/coprs/loveshack/SGE/> for hwloc 1.6 RPMs if you need them for building/installing RHEL5 RPMs. * Debian packages, installing into /opt/sge, not providing the GUI installer or Hadoop support: * sge_8.1.9.dsc, sge_8.1.9.tar.gz: Source packaging. See <http://wiki.debian.org/BuildingAPackage>, and see <http://arc.liv.ac.uk/downloads/SGE/support/> if you need (a more recent) hwloc. * sge-common_8.1.9_all.deb, sge-doc_8.1.9_all.deb, sge_8.1.9_amd64.deb, sge-dbg_8.1.9_amd64.deb: Binary packages built on Debian Jessie. * debian-8.1.9.tar.gz: Alternative Debian packaging, for installing into /usr. * arco-8.1.6.tar.gz: ARCo source (unchanged from previous version) * dbwriter-8.1.6.tar.gz: compiled dbwriter component of ARCo (unchanged from previous version) More RPMs (unsigned, unfortunately) are available at <http://copr.fedoraproject.org/coprs/loveshack/SGE/>.

Dec 16, 2018 | github.com

docker-sge

Dockerfile to build a container with SGE installed.

To build type:

git clone [email protected]:gawbul/docker-sge.git cd docker-sge docker build -t gawbul/docker-sge .To pull from the Docker Hub type:

docker pull gawbul/docker-sgeTo run the image in a container type:

docker run -it --rm gawbul/docker-sge login -f sgeadminYou need the

login -f sgeadminas root isn't allowed to submit jobsTo submit a job run:

echo "echo Running test from $HOSTNAME" | qsub

Dec 16, 2018 | hub.docker.com

Docker SGE (Son of Grid Engine) Kubernetes All-in-One Usage

Kubernetes Step-by-Step Usage

- Setup Kubernetes cluster, DNS service, and SGE cluster

Set

KUBE_SERVER,DNS_DOMAIN, andDNS_SERVER_IPcurrectly. And run./kubernetes/setup_all.shwith number of SGE workers.export KUBE_SERVER=xxx.xxx.xxx.xxx export DNS_DOMAIN=xxxx.xxxx export DNS_SERVER_IP=xxx.xxx.xxx.xxx ./kubernetes/setup_all.sh 20- Submit Job

kubectl exec sgemaster -- sudo su sgeuser bash -c '. /etc/profile.d/sge.sh; echo "/bin/hostname" | qsub' kubectl exec sgemaster -- sudo su sgeuser bash -c 'cat /home/sgeuser/STDIN.o1'- Add SGE workers

./kubernetes/add_sge_workers.sh 10Simple Docker Command Usage

- Setup Kubernetes cluster

./kubernetes/setup_k8s.sh- Setup DNS service

Set

KUBE_SERVER,DNS_DOMAIN, andDNS_SERVER_IPcurrectlyexport KUBE_SERVER=xxx.xxx.xxx.xxx export DNS_DOMAIN=xxxx.xxxx export DNS_SERVER_IP=xxx.xxx.xxx.xxx ./kubernetes/setup_dns.sh- Check DNS service

- Boot test client

kubectl create -f ./kubernetes/skydns/busybox.yaml

- Check normal lookup

kubectl exec busybox -- nslookup kubernetes

- Check reverse lookup

kubectl exec busybox -- nslookup 10.0.0.1- Check pod name lookup

kubectl exec busybox -- nslookup busybox.default- Setup SGE cluster

Run

./kubernetes/setup_sge.shwith number of SGE workers../kubernetes/setup_sge.sh 10- Submit job

kubectl exec sgemaster -- sudo su sgeuser bash -c '. /etc/profile.d/sge.sh; echo "/bin/hostname" | qsub' kubectl exec sgemaster -- sudo su sgeuser bash -c 'cat /home/sgeuser/STDIN.o1'- Add SGE workers

./kubernetes/add_sge_workers.sh 10

- Load nfsd module

modprobe nfsd- Boot DNS server

docker run -d --hostname resolvable -v /var/run/docker.sock:/tmp/docker.sock -v /etc/resolv.conf:/tmp/resolv.conf mgood/resolvable- Boot NFS servers

docker run -d --name nfshome --privileged cpuguy83/nfs-server /exports docker run -d --name nfsopt --privileged cpuguy83/nfs-server /exports- Boot SGE master

docker run -d -h sgemaster --name sgemaster --privileged --link nfshome:nfshome --link nfsopt:nfsopt wtakase/sge-master:ubuntu- Boot SGE workers

docker run -d -h sgeworker01 --name sgeworker01 --privileged --link sgemaster:sgemaster --link nfshome:nfshome --link nfsopt:nfsopt wtakase/sge-worker:ubuntu docker run -d -h sgeworker02 --name sgeworker02 --privileged --link sgemaster:sgemaster --link nfshome:nfshome --link nfsopt:nfsopt wtakase/sge-worker:ubuntu- Submit job

docker exec -u sgeuser -it sgemaster bash -c '. /etc/profile.d/sge.sh; echo "/bin/hostname" | qsub' docker exec -u sgeuser -it sgemaster cat /home/sgeuser/STDIN.o1

Nov 08, 2018 | liv.ac.uk

I installed SGE on Centos 7 back in January this year. If my recolection is correct, the procedure was analogous to the instructions for Centos 6. There were some issues with the firewalld service (make sure that it is not blocking SGE), as well as some issues with SSL.

Check out these threads for reference:http://arc.liv.ac.uk/pipermail/sge-discuss/2017-January/001050.html

Max

Sep 07, 2018 | auckland.ac.nz

Experiences with Sun Grid Engine

In October 2007 I updated the Sun Grid Engine installed here at the Department of Statistics and publicised its presence and how it can be used. We have a number of computation hosts (some using Māori fish names as fish are often fast) and a number of users who wish to use the computation power. Matching users to machines has always been somewhat problematic.

Fortunately for us, SGE automatically finds a machine to run compute jobs on . When you submit your job you can define certain characteristics, eg, the genetics people like to have at least 2GB of real free RAM per job, so SGE finds you a machine with that much free memory. All problems solved!

Let's find out how to submit jobs ! (The installation and administration section probably won't interest you much.)

I gave a talk on 19 February 2008-02-19 to the Department, giving a quick overview of the need for the grid and how to rearrange tasks to better make use of parallelism.

- Installation

- Administration

- Submitting jobs

- Thrashing the Grid

- Advanced methods of queue submission

- Talk at Department Retreat

- Talk for Department Seminar

- Summary

Installation

My installation isn't as polished as Werner's setup, but it comes with more carrots and sticks and informational emails to heavy users of computing resources.

For this very simple setup I first selected a master host, stat1. This is also the submit host. The documentation explains how to go about setting up a master host.

Installation for the master involved:

- Setting up a configuration file, based on the default configuration.

- Uncompressing the common and architecture-specific binaries into /opt/sge

- Running the installation. (Correcting mistakes, running again.)

- Success!

With the master setup I was ready to add compute hosts. This procedure was repeated for each host. (Thankfully a quick for loop in bash with an ssh command made this step very easy.)

- Login to the host

- Create

/opt/sge.- Uncompress the common and architecture-specific binaries into

/opt/sge- Copy across the cluster configuration from

/opt/sge/default/common. (I'm not so sure on this step, but I get strange errors if I don't do this.)- Add the host to the cluster. (Run qhost on the master.)

- Run the installation, using the configuration file from step 1 of the master. (Correcting mistakes, running again. Mistakes are hidden in

/tmp/install_execd.*until the installation finishes. There's a problem where if/opt/sge/default/common/install_logsis not writeable by the user running the installation then it will be silently failing and retrying in the background. Installation is pretty much instantaneous, unless it's failing silently.)

- As a sub-note, you receive architecture errors on Fedora Core. You can fix this by editing

/opt/sge/util/archand changing line 248 that reads3|4|5)to3|4|5|6).- Success!

If you are now to run qhost on some host, eg, the master, you will now see all your hosts sitting waiting for instructions.

Administration

The fastest way to check if the Grid is working is to run qhost , which lists all the hosts in the Grid and their status. If you're seeing hyphens it means that host has disappeared. Is the daemon stopped, or has someone killed the machine?

The glossiest way to keep things up to date is to use qmon . I have it listed as an application in X11.app on my Mac. The application command is as follows. Change 'master' to the hostname of the Grid master. I hope you have SSH keys already setup.

ssh master -Y . /opt/sge/default/common/settings.sh \; qmonWant to gloat about how many CPUs you have in your cluster? (Does not work with machines that have > 100 CPU cores.)

admin@master:~$ qhost | sed -e 's/^.\{35\}[^0-9]\+//' | cut -d" " -f1Adding Administrators

SGE will probably run under a user you created it known as "sgeadmin". "root" does not automatically become all powerful in the Grid's eyes, so you probably want to add your usual user account as a Manager or Operator. (Have a look in the manual for how to do this.) It will make your life a lot easier.

Automatically sourcing environment

Normally you have to manually source the environment variables, eg, SGE_ROOT, that make things work. On your submit hosts you can have this setup to be done automatically for you.

Create links from /etc/profile.d to the settings files in /opt/sge/default/common and they'll be automatically sourced for bash and tcsh (at least on Redhat).

Slots

The fastest processing you'll do is when you have one CPU core working on one problem. This is how the Grid is setup by default. Each CPU core on the Grid is a slot into which a job can be put.

If you have people logging on to the machines and checking their email, or being naughty and running jobs by hand instead of via the Grid engine, these calculations get mucked up. Yes, there still is a slot there, but it is competing with something being run locally. The Grid finds a machine with a free slot and the lowest load for when it runs your job so this won't be a problem until the Grid is heavily laden.

Setting up queues

Queues are useful for doing crude prioritisation. Typically a job gets put in the default queue and when a slot becomes free it runs.

If the user has access to more than one queue, and there is a free slot in that queue, then the job gets bumped into that slot.

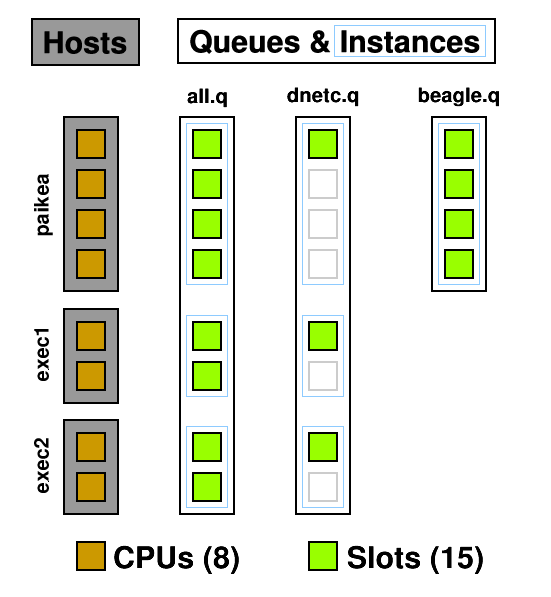

A queue instance is the queue on a host that it can be run on. 10 hosts, 3 queues = 30 queue instances. In the below example you can see three queues and seven queue instances : all.q@paikea, dnetc.q@paikea, beagle.q@paikea, all.q@exec1, dnetc.q@exec1, all.q@exec2, dnetc.q@exec2. Each queue can have a list of machines it runs on so, for example, the heavy genetics work in beagle.q can be run only on the machines attached to the SAN holding the genetics data. A queue does not have to include all hosts, ie, @allhosts.)

From this diagram you can see how CPUs can become oversubscribed. all.q covers every CPU. dnetc.q covers some of those CPUs a second time. Uh-oh! (dnetc.q is setup to use one slot per queue instance. That means that even if there are 10 CPUs on a given host, it will only use 1 of those.) This is something to consider when setting up queues and giving users access to them. Users can't put jobs into queues they don't have access to, so the only people causing contention are those with access to multiple queues but don't specify a queue ( -q ) when submitting.

Another use for queues are subordinate queues . I run low priority jobs in dnetc.q. When the main queue gets busy, all the jobs in dnetc.q are suspended until the main queue's load decreases. To do this I edited all.q, and under Subordinates added dnetc.q.

So far the shortest queue I've managed to make is one that uses 1 slot on each host it is allowed to run on. There is some talk in the documentation regarding user defined resources ( complexes ) which, much like licenses, can be "consumed" by jobs, thus limiting the number of concurrent jobs that can be run. (This may be useful for running an instance of Folding@Home, as it is not thread-safe , so you can set it up with a single "license".)

You can also change the default nice value of processes, but possibly the most useful setting is to turn on "rerunnable", which allows a task to be killed and run again on a different host.

Parallel Environment

Something that works better than queues and slots is to set up a

parallel environment. This can have a limited number of slots which counts over the entire grid and over every queue instance. As an example, Folding@Home is not thread safe. Each running thread needs its own work directory.How can you avoid contention in this case? Make each working directory a parallel environment, and limit the number of slots to 1.

I have four working directories named fah-a to fah-d . Each contains its own installation of the Folding@Home client:

$ ls ~/grid/fah-a/ fah-a client.cfg FAH504-Linux.exe workFor each of these directories I have created a parallel environment:

admin@master:~$ qconf -sp fah-a pe_name fah-a slots 1 user_lists fahThese parallel environments are made available to all queues that the job can be run in and all users that have access to the working directory - which is just me.

The script to run the client is a marvel of grid arguments. It requests the parallel environment, bills the job to the Folding@Home project, names the project, etc. See for yourself:

#!/bin/sh # use bash #$ -S /bin/sh # current directory #$ -cwd # merge output #$ -j y # mail at end #$ -m e # project #$ -P fah # name in queue #$ -N fah-a # parallel environment #$ -pe fah-a 1 ./FAH504-Linux.exe -oneunitNote the -pe argument that says this job requires one slot worth of fah-a please.

Not a grid option, but the -oneunit flag for the folding client is important as this causes the job to quit after one work unit and the next work unit can be shuffled around to an appropriate host with a low load whose queue isn't disabled. Otherwise the client could end up running in a disabled queue for a month without nearing an end.

With the grid taking care of the parallel environment I no longer need to worry about manually setting up job holds so that I can enqueue multiple units for the same work directory. -t 1-20 ahoy!

Complex Configuration

An alternative to the parallel environment is to use a Complex. You create a new complex, say how many slots are available, and then let people consume them!

- In the QMON Complex Configuration, add a complex called "fah_l", type INT, relation <=, requestable YES, consumable YES, default 0. Add, then Commit.

- I can't manage to get this through QMON, so I do it from the command line. qconf -me global and then add fah_l=1 to the complex_values.

- Again through the command line. qconf -mq all.q and then add fah_l=1 to the complex_values. Change this value for the other queues. (Note that a value of 0 means jobs requesting this complex cannot be run in this queue.)

- When starting a job, add -l fah_l=1 to the requirements.

I had a problem to start off with, where qstat was telling me that -25 licenses were available. However this is due to the default value, so make sure that is 0!

Using Complexes I have set up license handling for Matlab and Splus .

- -l splus=1 (to request a Splus license)

- -l matlab=1 (to request a Matlab license)

- -l ml=1,matlabc=1 (to request a Matlab license and a Matlab Compiler license)

- -l ml=1,matlabst=1 (to request a Matlab license and a Matlab Statistics Toolbox license)

As one host group does not have Splus installed on them I simply set that host group to have 0 Splus licenses available. A license will never be available on the @gradroom host group, thus Splus jobs will never be queued there.

Quotas

Instead of Complexes and parallel environments, you could try a quota!

Please excuse the short details:

admin@master$ qconf -srqsl admin@master$ qconf -mrqs lm2007_slots { name lm2007_slots description Limit the lm2007 project to 20 slots across the grid enabled TRUE limit projects lm2007 to slots=20 }Pending jobs

Want to know why a job isn't running?

- Job Control

- Pending Jobs

- Select a job

- Why ?

This is the same as qstat -f , shown at the bottom of this page.

Using Calendars

A calendar is a list of days and times along with states: off or suspended. Unless specified the state is on.

A queue, or even a single queue instance, can have a calendar attached to it. When the calendar says that the queue should now be "off" then the queue enters the disabled (D) state. Running jobs can continue, but no new jobs are started. If the calendar says it should be suspended then the queue enters the suspended (S) state and all currently running jobs are stopped (SIGSTOP).

First, create the calendar. We have an upgrade for paikea scheduled for 17 January:

admin@master$ qconf -scal paikeaupgrade calendar_name paikeaupgrade year 17.1.2008=off week NONEBy the time we get around to opening up paikea's case and pull out the memory jobs will have had several hours to complete after the queue is disabled. Now, we have to apply this calendar to every queue instance on this host. You can do this all through qmon but I'm doing it from the command line because I can. Simply edit the calendar line to append the hostname and calendar name:

admin@master$ qconf -mq all.q ... calendar NONE,[paikea=paikeaupgrade] ...Repeat this for all the queues.

There is a user who likes to use one particular machine and doesn't like jobs running while he's at the console. Looking at the usage graphs I've found out when he is using the machine and created a calendar based on this:

admin@master$ qconf -scal michael calendar_name michael year NONE week mon-sat=13-21=offThis calendar is obviously recurring weekly. As in the above example it was applied to queues on his machine. Note that the end time is 21, which covers the period from 2100 to 2159.

Suspending jobs automatically

Due to the number of slots being equal to the number of processors, system load is theoretically not going to exceed 1.00 (when divided by the number of processors). This value can be found in the np_load_* complexes .

But (and this is a big butt) there are a number of ways in which the load could go past a reasonable level:

- There are interactive users: all of the machines in the grad room (@gradroom) have console access.

- Someone logged in when they should not have: we're planning to disable ssh access to @ngaika except for %admins.

- A job is multi-threaded, and the submitter didn't mention this. If your Java program is using 'new Thread' somewhere, then it's likely you'll end up using multiple CPUs. Request more than one CPU when you're submitting the job. (-l slots=4 does not work.)

For example, with paikea , there are three queues:

- all.q (4 slots)

- paikea.q (4 slots)

- beagle.q (overlapping with the other two queues)

all.q is filled first, then paikea.q. beagle.q, by project and owner restrictions, is only available to the sponsor of the hardware. When their jobs come in, they can get put into beagle.q, even if the other slots are full. When the load average comes up, other tasks get suspended: first in paikea.q, then in all.q.

Let's see the configuration:

qname beagle.q hostlist paikea.stat.auckland.ac.nz priority 19,[paikea.stat.auckland.ac.nz=15] user_lists beagle projects beagleWe have the limited access to this queue through both user lists and projects. Also, we're setting the Unix process priority to be higher than the other queues.

qname paikea.q hostlist paikea.stat.auckland.ac.nz suspend_thresholds NONE,[paikea.stat.auckland.ac.nz=np_load_short=1.01] nsuspend 1 suspend_interval 00:05:00 slots 0,[paikea.stat.auckland.ac.nz=4]The magic here being that suspend_thresholds is set to 1.01 for np_load_short. This is checked every 5 minutes, and 1 process is suspended at a time. This value can be adjusted to get what you want, but it seems to be doing the trick according to graphs and monitoring the load. np_load_short is chosen because it updates the most frequently (every minute), more than np_load_medium (every five), and np_load_long (every fifteen minutes).

all.q is fairly unremarkable. It just defines four slots on paikea.

Submitting jobs Jobs are submitted to the Grid using qsub . Jobs are shell scripts containing commands to be run.

If you would normally run your job by typing ./runjob , you can submit it to the Grid and have it run by typing: qsub -cwd ./runjob

Jobs can be submitted while logged on to any submit host: sge-submit.stat.auckland.ac.nz .

For all the commands on this page I'm going to assume the settings are all loaded and you are logged in to a submit host. If you've logged in to a submit host then they'll have been sourced for you. You can source the settings yourself if required: . /opt/sge/default/common/settings.sh - the dot and space at the front are important .

Depending on the form your job is currently in they can be very easy to submit. I'm just going to go ahead and assume you have a shell script that runs the CPU-intensive computations you want and spits them out to the screen. For example, this tiny test.sh :

#!/bin/sh expr 3 + 5This computation is very CPU intensive!

Please note that the Sun Grid Engine ignores the bang path at the top of the script and will simply run the file using the queue's default shell which is csh. If you want bash, then request it by adding the very cryptic line: #$ -S /bin/sh

Now, let's submit it to the grid for running: Skip submission output

user@submit:~$ qsub test.sh Your job 464 ("test.sh") has been submitted user@submit:~$ qstat job-ID prior name user state submit/start at queue slots ja-task-ID ------------------------------------------------------------------------------------------------------- 464 0.00000 test.sh user qw 01/10/2008 10:48:03 1There goes our job, waiting in the queue to be run. We can run qstat a few more times to see it as it goes. It'll be run on some host somewhere, then disappear from the list once it is completed. You can find the output by looking in your home directory: Skip finding output

user@submit:~$ ls test.sh* test.sh test.sh.e464 test.sh.o464 user@submit:~$ cat test.sh.o464 8The output file is named based on the name of the job, the letter o , and the number of the job.

If your job had problems running have a look in these files. They probably explain what went wrong.

Easiest way to submit R jobs

Here are two scripts and a symlink I created to make it easy as possible to submit R jobs to your Grid:

qsub-R

If you normally do something along the lines of:

user@exec:~$ nohup nice R CMD BATCH toodles.RNow all you need to do is:

user@submit:~$ qsub-R toodles.R Your job 3540 ("toodles.R") has been submittedqsub-R is linked to submit-R, a script I wrote. It calls qsub and submits a simple shell wrapper with the R file as an argument. It ends up in the queue and eventually your output arrives in the current directory: toodles.R.o3540

Download it and install it. You'll need to make the ' qsub-R ' symlink to ' 3rd_party/uoa-dos/submit-R ' yourself, although there is one in the package already for lx24-x86: qsub-R.tar (10 KiB, tar)

Thrashing the Grid

Sometimes you just want to give something a good thrashing, right? Never experienced that? Maybe it's just me. Anyway, here are two ideas for submitting lots and lots of jobs:

- Write a script that creates jobs and submits them

- Submit the same thing a thousand times

There are merits to each of these methods, and both of them mimic typical operation of the grid, so I'm going to explain them both.

Computing every permutation

If you have two lists of values and wish to calculate every permutation, then this method will do the trick. There's a more complicated solution below .

qsub will happily pass on arguments you supply to the script when it runs. Let us modify our test.sh to take advantage of this:

#!/bin/sh #$ -S /bin/sh echo Factors $1 and $2 expr $1 + $2Now, we just need to submit every permutation to Grid:

user@submit:~$ for A in 1 2 3 4 5 ; do for B in 1 2 3 4 5 ; do qsub test.sh $A $B ; done ; doneAway the jobs go to be computed. If we have a look at different jobs we can see that it works. For example, job 487 comes up with:

user@submit:~$ cat test.sh.?487 Factors 3 and 5 8Right on, brother! That's the same answer as we got previously when we hard coded the values of 3 and 5 into the file. We have algorithm correctness!

If we use qacct to look up the job information we find that it was computed on host mako (shark) and used 1 units of wallclock and 0 units of CPU.

Computing every permutation, with R

This method of creating job scripts and running them will allow you to compute every permutation of two variables. Note that you can supply arguments to your script, so it is not actually necessary to over-engineer your solution quite this much. This script has the added advantage of not clobbering previous computations. I wrote this solution for Yannan Jiang and Chris Wild and posted it to the r-downunder mailing list in December 2007. ( There is another method of doing this! )

In this particular example the output of the R command is deterministic, so it does not matter that a previous run (which could have taken days of computing time) gets overwritten, however I also work around this problem.

To start with I have my simple template of R commands (template.R):

alpha <- ALPHA beta <- c(BETA) # magic happens here alpha betaThe ALPHA and BETA parameters change for each time this simulation is run. I have these values stored, one per line, in the files ALPHA and BETA.

ALPHA:

0.9 0.8 0.7BETA (please note that these contents must work both in filenames, bash commands, and R commands):

0,0,1 0,1,0 1,0,0I have a shell script that takes each combination of ALPHA x BETA, creates a .R file based on the template, and submits the job to the Grid. This is called submit.sh:

#!/bin/sh if [ "X${SGE_ROOT}" == "X" ] ; then echo Run: . /opt/sge/default/common/settings.sh exit fi cat ALPHA | while read ALPHA ; do cat BETA | while read BETA ; do FILE="t-${ALPHA}-${BETA}" # create our R file cat template.R | sed -e "s/ALPHA/${ALPHA}/" -e "s/BETA/${BETA}/" > ${FILE}.R # create a script echo \#!/bin/sh > ${FILE}.sh echo \#$ -S /bin/sh >> ${FILE}.sh echo "if [ -f ${FILE}.Rout ] ; then echo ERROR: output file exists already ; exit 5 ; fi" >> ${FILE}.sh echo R CMD BATCH ${FILE}.R ${FILE}.Rout >> ${FILE}.sh chmod +x ${FILE}.sh # submit job to grid qsub -j y -cwd ${FILE}.sh done done qstatWhen this script runs it will, for each permutation of ALPHA and BETA,

- create an R file based on the template, filling in the values of ALPHA and BETA,

- create a script that checks if this permutation has been calculated and then calls R,

- submits this job to the queue

... and finally shows the jobs waiting in the queue to execute.

Once computation is complete you will have a lot of files waiting in your directory. You will have:

- template.R -- our R commands template

- t-ALPHA-BETA.sh -- generated shell script that calls R

- t-ALPHA-BETA.R -- generated (from template) R commands

- t-ALPHA-BETA.Rout -- output from the command; this is a quirk of R

- t-ALPHA-BETA.sh.oNNN -- any output or errors from job (merged using qsub -j y )

The output files, stderr and stdout from when R was run, are always empty (unless something goes terribly wrong). For each permutation we receive four files. There are nine permutations (n ALPHA = 3, n BETA = 3, 3 × 3 = 9). A total of 36 files are created. (This example has been pared down from the original for purposes of demonstration.)

My initial question to the r-downunder list was how to get the output from R to stdout and thus t-ALPHA-BETA.sh.oNNN instead of t-ALPHA-BETA.Rout, however in this particular case, I have dodged that. In fact, being deterministic it is better that this job writes its output to a known filename, so I can do a one line test to see if the job has already been run.

I should also point out the -cwd option to the qsub command, which causes the job to be run in the current directory (which if it is in your home directory is accessible in the same place on all machines), rather than in /tmp/* . This allows us to find the R output, since R writes it to the directory it is currently in. Otherwise it could be discarded as a temporary file once the job ends!

Submit the same thing a thousand times

Say you have a job that, for example, pulls in random numbers and runs a simulation, or it grabs a work unit from a server, computes it, then quits. ( FAH -oneunit springs to mind, although it cannot be run in parallel. Refer to the parallel environment setup .) The script is identical every time.

SGE sets the SGE_JOB_ID environment variable which tells you the job number. You can use this as some sort of crude method for generating a unique file name for your output. However, the best way is to write everything to standard output (stdout) and let the Grid take care of returning it to you.

There are also Array Jobs which are

identical tasks being differentiated only by an index number, available through the -t option on qsub . This sets the environment variable of SGE_TASK_ID.For this example I will be using the Distributed Sleep Server . The Distributed Sleep Project passes out work units, packages of time, to clients who then process the unit. The Distributed Sleep Client, dsleepc , connects to the server to fetch a work unit. They can then be processed using the sleep command. A sample script: Skip sample script

#!/bin/sh #$ -S /bin/sh WORKUNIT=`dsleepc` sleep $WORKUNIT && echo Processed $WORKUNIT secondsWork units of 300 seconds typically take about five minutes to complete, but are known to be slower on Windows. (The more adventurous can add the -bigunit option to get a larger package for themselves, but note that they take longer to process.)

So, let us submit an array job to the Grid. We are going to submit one job with 100 tasks, and they will be numbered 1 to 100:

user@submit:~$ qsub -t 1-100 dsleep Your job-array 490.1-100:1 ("dsleep") has been submittedJob 490, tasks 1 to 100, are waiting to run. Later we can come back and pick up our output from our home directory. You can also visit the Distributed Sleep Project and check the statistics server to see if your work units have been received.

Note that running 100 jobs will fill the default queue, all.q. This has two effects. First, if you have any other queues that you can access jobs will be added to those queues and then run. (As the current setup of queues overlaps with CPUs this can lead to over subscription of processing resources. This can cause jobs to be paused, depending on how the queue is setup.) Second, any subordinate queues to all.q will be put on hold until the jobs get freed up.

Array jobs, with R

Using the above method of submitting multiple jobs, we can access this and use it in our R script, as follows: Skip R script

# alpha+1 is found in the SGE TASK number (qsub -t) alphaenv <- Sys.getenv("SGE_TASK_ID") alpha <- (as.numeric(alphaenv)-1)Here the value of alpha is being pulled from the task number. Some manipulation is done of it, first to turn it from a string into a number, and secondly to change it into the expected form. Task numbers run from 1+, but in this case the code wants them to run from 0+.

Similar can be done with Java, by adding the environment value as an argument to invocation of the main class.

Advanced methods of queue submission

When you submit your job you have a lot of flexibility over it. Here are some options to consider that may make your life easier. Remember you can always look in the man page for qsub for more options and explanations.

qsub -N timmy test.shHere the job is called "timmy" and runs the script test.sh . Your the output files will be in timmy.[oe]*

The working directory is usually somewhere in /tmp on the execution host. To use a different working directory, eg, the current directory, use -cwd

qsub -cwd test.shTo request specific characteristics of the execution host, for example, sufficient memory, use the -l argument.

qsub -l mem_free=2500M test.shThis above example requests 2500 megabytes (M = 1024x1024, m = 1000x1000) of free physical memory (mem_free) on the remote host. This means it won't be run on a machine that has 2.0GB of memory, and will instead be put onto a machine with sufficient amounts of memory for BEAGLE Genetic Analysis . There are two other options for ensuring you get enough memory:

- Submit your job to the BEAGLE queue: -q beagle.q . This queue is specifically setup only on machines with a lot of free memory, and has 10 slots. You do have to be one of the allowed BEAGLE users to put jobs into this queue.

- Specify the amount of memory required in your script:

#$ -l mem=2500If your binary is architecture dependent you can ask for a particular architecture.

qsub -l arch=lx24-amd64 test.binThis can also be done in the script that calls the binary so you don't accidentally forget about including it.

#$ -l arch=lx24-amd64This requesting of resources can also be used to ask for a specific host, which goes against the idea of using the Grid to alleviate finding a host to use! Don't do this!

qsub -l hostname=mako test.shIf your job needs to be run multiple times then you can create an array job. You ask for a job to be run several times, and each run (or task) is given a unique task number which can be accessed through the environment variable SGE_TASK_ID. In each of these examples the script is run 50 times:

qsub -t 1-50 test.sh qsub -t 75-125 test.shYou can request a specific queue. Different queues have different characteristics.

- lm2007.q uses a maximum of one slot per host (although once I figure out how to configure it, it will use a maximum of six slots Grid-wide).

- dnetc.q is suspended when the main queue (all.q) is busy.

- beagle.q runs only on machines with enough memory to handle the data (although this can be requested with -l , as shown above).

qsub -q dnetc.q test.shA job can be held until a previous job completes. For example, this job will not run until job 380 completes:

qsub -hold_jid 380 test.shCan't figure out why your job isn't running? qstat can tell you:

qstat -j 490 ... lots of output ... scheduling info: queue instance "[email protected]" dropped because it is temporarily not available queue instance "[email protected]" dropped because it is full cannot run in queue "all.q" because it is not contained in its hard queue list (-q)Requesting licenses

Should you be using software that requires licenses then you should specify this when you submit the job. We have two licenses currently set up but can easily add more as requested:

- -l splus=1 (to request a Splus license)

- -l matlab=1 (to request a Matlab license)

- -l ml=1,matlabc=1 (to request a Matlab license and a Matlab Compiler license)

- -l ml=1,matlabst=1 (to request a Matlab license and a Matlab Statistics Toolbox license)

The Grid engine will hold your job until a Splus license or Matlab license becomes available.

Note: The Grid engine keeps track of the license pool independently of the license manager. If someone is using a license that the Grid doesn't know about, eg, an interactive session you left running on your desktop, then the count will be off. Believing a license is available, the Grid will run your job, but Splus will not run and your job will end. Here is a job script that will detect this error and then allow your job to be retried later: Skip Splus script

#!/bin/sh #$ -S /bin/bash # run in current directory, merge output #$ -cwd -j y # name the job #$ -N Splus-lic # require a single Splus license please #$ -l splus=1 Splus -headless < $1 RETVAL=$? if [ $RETVAL == 1 ] ; then echo No license for Splus sleep 60 exit 99 fi if [ $RETVAL == 127 ] ; then echo Splus not installed on this host # you could try something like this: #qalter -l splus=1,h=!`hostname` $JOB_ID sleep 60 exit 99 fi exit $RETVALPlease note that the script exits with code 99 to tell the Grid to reschedule this job (or task) later. Note also that the script, upon receiving the error, sleeps for a minute before exiting, thus slowing the loop of errors as the Grid continually reschedules the job until it runs successfully. Alternatively you can exit with error 100, which will cause the job to be held in the error (E) state until manually cleared to run again.

You can clear a job's error state by using qmod -c jobid .

Here's the same thing for Matlab. Only minor differences from running Splus: Skip Matlab script

#!/bin/sh #$ -S /bin/sh # run in current directory, merge output #$ -cwd -j y # name the job #$ -N ml # require a single Matlab license please #$ -l matlab=1 matlab -nodisplay < $1 RETVAL=$? if [ $RETVAL == 1 ] ; then echo No license for Matlab sleep 60 exit 99 fi if [ $RETVAL == 127 ] ; then echo Matlab not installed on this host, `hostname` # you could try something like this: #qalter -l matlab=1,h=!`hostname` $JOB_ID sleep 60 exit 99 fi exit $RETVALSave this as "run-matlab". To run your matlab.m file, submit with: qsub run-matlab matlab.m

Processing partial parts of input files in Java

Here is some code I wrote for Lyndon Walker to process a partial dataset in Java.

It comes with two parts: a job script that passes the correct arguments to Java, and some Java code that extracts the correct information from the dataset for processing.

First, the job script gives some Grid task environment variables to Java. Our job script is merely translating from the Grid to the simulation:

java Simulation $@ $SGE_TASK_ID $SGE_TASK_LASTThis does assume your shell is bash, not csh. If your job is in 10 tasks, then SGE_TASK_ID will be a number between 1 and 10, and SGE_TASK_LAST will be 10. I'm also assuming that you are starting your jobs from 1, but you can also change that setting and examine SGE_TASK_FIRST.

Within Java we now read these variables and act upon them:

sge_task_id = Integer.parseInt(args[args.length-2]); sge_task_last = Integer.parseInt(args[args.length-1]);For a more complete code listing, refer to sun-grid-qsub-java-partial.java (Simulation.java).

Preparing confidential datasets

The Grid setup here includes machines on which users can login. That creates the problem where someone might be able to snag a confidential dataset that is undergoing processing. One particular way to keep the files secure is as follows:

- Check we are able to securely delete files

- Locate a safe place to store files locally (the Grid sets up ${TMPDIR} to be unique for this job and task)

- Copy over dataset; it is expected you have setup password-less scp

- Preprocess dataset, eg, unencrypt

- Process dataset

- Delete dataset

A script that does this would look like the following: Skip dataset preparation script

#!/bin/sh #$ -S /bin/sh DATASET=confidential.csv # check our environment umask 0077 cd ${TMPDIR} chmod 0700 . # find srm SRM=`which srm` NOSRM=$? if [ $NOSRM -eq 1 ] ; then echo system srm not found on this host, exiting >> /dev/stderr exit 99 fi # copy files from data store RETRIES=0 while [ ${RETRIES} -lt 5 ] ; do ((RETRIES++)) scp user@filestore:/store/confidential/${DATASET} . if [ $? -eq 0 ] ; then RETRIES=5000 else # wait for up to a minute (MaxStartups 10 by default) sleep `expr ${RANDOM} / 542` fi done if [ ! -f ${DATASET} ] ; then # unable to copy dataset after 5 retries, quit but retry later echo unable to copy dataset from store >> /dev/stderr exit 99 fi # if you were decrypting the dataset, you would do that here # copy our code over too cp /mount/code/*.class . # process data java Simulation ${DATASET} # collect results # (We are just printing to the screen.) # clean up ${SRM} -v ${DATASET} >> /dev/stderr echo END >> /dev/stderrCode will need to be adjusted to match your particular requirements, but the basic form is sketched out above.

As the confidential data is only in files and directories that root and the running user can access, and the same precaution is taken with the datastore, then only the system administrator and the user who has the dataset has access to these files.

The one problem here is how to manage the password-less scp securely. As this is run unattended, it would not be possible to have a password on a file, nor to forward authentication to some local agent. It may be possible to grab the packets that make up the key material. There must be a better way to do this. Remember that the job script is stored world-readable in the Grid cell's spool, so nothing secret can be put in there either.

Talk at Department Retreat

I gave a talk about the Sun Grid Engine on 19 February 2008-02-19 to the Department, giving a quick overview of the need for the grid and how to rearrange tasks to better make use of parallelism. It was aimed at end users and summarises into neat slides the reason for using the grid engine as well as a tutorial and example on how to use it all.

Download: Talk (with notes) PDF 5.9MiB

Question time afterwards was very good. Here are, as I recall them, the questions and answers.

Which jobs are better suited to parallelism?

Q (Ross Ihaka): Which jobs are better suited to parallelism? (Jobs with large data sets do not lend themselves to this sort of parallelism due to I/O overheads.)

A: Most of the jobs being used here are CPU intensive. The grid copies your script to /tmp on the local machine on which it runs. You could copy your data file across as well at the start of the job, thus all your later I/O is local.

(This is a bit of a poor answer. I wasn't really expecting it.) Bayesian priors and multiple identical simulations (eg, MCMC differing only by random numbers) lend themselves well to being parallelised.

Can I make sure I always run on the fastest machine?

A: The grid finds the machine with the least load to run jobs on. If you pile all jobs onto one host, then that host will slow down and become the slowest overall. Submit it through the grid and some days you'll get the fast host, and some days you'll get the slow host, and it is better in the long run. Also it is fair for other users. You can force it with -l, however, it is selfish.

Preemptable queues?

Q (Nicholas Horton): Is there support for preemptable queues? A person who paid for a certain machine might like it to be available only to them when they require it all for themselves.

A: Yes, the Grid has support for queues like that. It can all be configured. This particular example will have to be looked in to further. Beagle.q, as an example, only runs on paikea and overlaps with all.q . Also when the load on paikea , again using that as an example, gets too high, jobs in a certain queue (dnetc.q) are stopped.

An updated answer: the owner of a host can have an exclusive queue that preempts the other queues on the host. When the system load is too high, less important jobs can be suspended using suspend_thresholds .

Is my desktop an execution host?

Q (Ross Ihaka): Did I see my desktop listed earlier?

A: No. So far the grid is only running on the servers in the basement and the desktops in the grad room. Desktops in staff offices and used by PhD candidates will have to opt in.

(Ross Ihaka) Offering your desktop to run as an execution host increases the total speed of the grid, but your desktop may run slower at times. It is a two way street.

Is there job migration?

A: It's crude, and depends on your job. If something goes wrong (eg, the server crashes, power goes out) your job can be restarted on another host. When queue instances become unavailable (eg, we're upgrading paikea) they can send a signal to your job, telling it to save its work and quit, then can be restarted on another host.

Migration to faster hosts

Q (Chris Wild): What happens if a faster host becomes available while my job is running?

A: Nothing. Your job will continue running on the host it is on until it ends. If a host is overloaded, and not due to the grid's fault, some jobs can be suspended until load decreases . The grid isn't migrating jobs. The best method is to break your job down into smaller jobs, so that when the next part of the job is started it gets put onto what is currently the best available host.

Over sufficient jobs it will become apparent that the faster host is processing more jobs than a slower host.

Desktops and calendars

Q (Stephane Guindon): What about when I'm not at my desktop. Can I have my machine be on the grid then, and when I get to the desktop the jobs are migrated?

A: Yes, we can set up calendars so that at certain times no new jobs will be started on your machine. Jobs that are already running will continue until they end. (Disabling the queue.) Since some jobs run for days this can appear to have no influence on how many jobs are running. Alternatively jobs can be paused, which frees up the CPU, but leaves the job sitting almost in limbo. (Suspending the queue.) Remember the grid isn't doing migration. It can stop your job and run it elsewhere (if you're using the -notify option on submission and handling the USR1 signal).

Jobs under the grid

Q (Sharon Browning): How can I tell if a job is running under the grid's control? It doesn't show this under top .

A: Try ps auxf . You will see the job taking a lot of CPU time, the parent script, and above that the grid (sge_shepherd and sge_execd).

Talk for Department Seminar

On September 11 I gave a talk to the Department covering:

- Trends in Supercomputing

- Increase in power

- Increase in power consumption

- Increase in data

- Collection of servers

- Workflows

- Published services

- Collaboration

- How to use it

- Jargon

- Taking advantage of more CPUs

- Rewriting your code

- Case Studies

- Increasing your Jargon

- Question time

Download slides with extensive notes: Supercomputing and You (PDF 3MiB)

A range of good questions:

- What about psuedo-random number generators being initialised with the same seed?

- How do I get access to these resources if I'm not a researcher here?

- How do I get access to this Department's resources?

- Do Windows programs (eg, WinBUGS) run on the Grid?

Summary

In summary, I heartily recommend the Sun Grid Engine. After a few days installation, configuring, messing around, I am very impressed with what can be done with it.

Try it today.

Aug 17, 2018 | www.rocksclusters.org

Operating System Base

Rocks 7.0 (Manzanita) x86_64 is based upon CentOS 7.4 with all updates available as of 1 Dec 2017.

Building a bare-bones compute clusterBuilding a more complex cluster

- Boot your frontend with the kernel roll

- Then choose the following rolls: base , core , kernel , CentOS and Updates-CentOS

In addition to above, select the following rolls:

Building Custom Clusters

- area51

- fingerprint

- ganglia

- kvm (used for virtualization)

- hpc

- htcondor (used independently or in conjunction with sge)

- perl

- python

- sge

- zfs-linux (used to build reliable storage systems)

If you wish to build a custom cluster, you must choose from our a la carte selection, but make sure to download the required base , kernel and both CentOS rolls. The CentOS rolls include CentOS 7.4 w/updates pre-applied. Most users will want the full updated OS so that other software can be added.

MD5 ChecksumsPlease double check the MD5 checksums for all the rolls you download.

DownloadsAll ISOs are available for downloads from here . Individual links are listed below.

Name Description Name Description kernel Rocks Bootable Kernel Roll required zfs-linux ZFS On Linux Roll. Build and Manage Multi Terabyte File Systems. base Rocks Base Roll required fingerprint Fingerprint application dependencies core Core Roll required hpc Rocks HPC Roll CentOS CentOS Roll required htcondor HTCondor High Throughput Computing (version 8.2.8) Updates-CentOS CentOS Updates Roll required sge Sun Grid Engine (Open Grid Scheduler) job queueing system kvm Support for building KVM VMs on cluster nodes perl Support for Newer Version of Perl ganglia Cluster monitoring system from UCB python Python 2.7 and Python 3.x area51 System security related services and utilities openvswitch Rocks integration of OpenVswitch

Oct 15, 2017 | biohpc.blogspot.com

Installation of Son of Grid Engine(SGE) on CentOS7

SGE Master installation

master# hostnamectl set-hostname qmaster.local

master# vi /etc/hosts

192.168.56.101 qmaster.local qmaster

192.168.56.102 compute01.local compute01master# mkdir -p /BiO/src

master# yum -y install epel-release

master# yum -y install jemalloc-devel openssl-devel ncurses-devel pam-devel libXmu-devel hwloc-devel hwloc hwloc-libs java-devel javacc ant-junit libdb-devel motif-devel csh ksh xterm db4-utils perl-XML-Simple perl-Env xorg-x11-fonts-ISO8859-1-100dpi xorg-x11-fonts-ISO8859-1-75dpi

master# groupadd -g 490 sgeadmin

master# useradd -u 495 -g 490 -r -m -d /home/sgeadmin -s /bin/bash -c "SGE Admin" sgeadmin

master# visudo

%sgeadmin ALL=(ALL) NOPASSWD: ALL

master# cd /BiO/src

master# wget http://arc.liv.ac.uk/downloads/SGE/releases/8.1.9/sge-8.1.9.tar.gz

master# tar zxvfp sge-8.1.9.tar.gz

master# cd sge-8.1.9/source/

master# sh scripts/bootstrap.sh && ./aimk && ./aimk -man

master# export SGE_ROOT=/BiO/gridengine && mkdir $SGE_ROOT

master# echo Y | ./scripts/distinst -local -allall -libs -noexit

master# chown -R sgeadmin.sgeadmin /BiO/gridenginemaster# cd $SGE_ROOT

master# ./install_qmaster

press enter at the intro screen

press "y" and then specify sgeadmin as the user id

leave the install dir as /BiO/gridengine

You will now be asked about port configuration for the master, normally you would choose the default (2) which uses the /etc/services file

accept the sge_qmaster info

You will now be asked about port configuration for the master, normally you would choose the default (2) which uses the /etc/services file

accept the sge_execd info

leave the cell name as "default"

Enter an appropriate cluster name when requested

leave the spool dir as is

press "n" for no windows hosts!

press "y" (permissions are set correctly)

press "y" for all hosts in one domain

If you have Java available on your Qmaster and wish to use SGE Inspect or SDM then enable the JMX MBean server and provide the requested information - probably answer "n" at this point!

press enter to accept the directory creation notification

enter "classic" for classic spooling (berkeleydb may be more appropriate for large clusters)

press enter to accept the next notice

enter "20000-20100" as the GID range (increase this range if you have execution nodes capable of running more than 100 concurrent jobs)

accept the default spool dir or specify a different folder (for example if you wish to use a shared or local folder outside of SGE_ROOT

enter an email address that will be sent problem reports

press "n" to refuse to change the parameters you have just configured

press enter to accept the next notice

press "y" to install the startup scripts

press enter twice to confirm the following messages

press "n" for a file with a list of hosts

enter the names of your hosts who will be able to administer and submit jobs (enter alone to finish adding hosts)

skip shadow hosts for now (press "n")

choose "1" for normal configuration and agree with "y"

press enter to accept the next message and "n" to refuse to see the previous screen again and then finally enter to exit the installermaster# cp /BiO/gridengine/default/common/settings.sh /etc/profile.d/

master# qconf -ah compute01.local

compute01.local added to administrative host listmaster# yum -y install nfs-utils

master# vi /etc/exports

/BiO 192.168.56.0/24(rw,no_root_squash)master# systemctl start rpcbind nfs-server

master# systemctl enable rpcbind nfs-serverSGE Client installation

compute01# yum -y install hwloc-devel

compute01# hostnamectl set-hostname compute01.local

compute01# vi /etc/hosts

192.168.56.101 qmaster.local qmaster

192.168.56.102 compute01.local compute01compute01# groupadd -g 490 sgeadmin

compute01# useradd -u 495 -g 490 -r -m -d /home/sgeadmin -s /bin/bash -c "SGE Admin" sgeadmincompute01# yum -y install nfs-utils

compute01# systemctl start rpcbind

compute01# systemctl enable rpcbind

compute01# mkdir /BiO

compute01# mount -t nfs 192.168.56.101:/BiO /BiO

compute01# vi /etc/fstab

192.168.56.101:/BiO /BiO nfs defaults 0 0compute01# export SGE_ROOT=/BiO/gridengine

compute01# export SGE_CELL=default

compute01# cd $SGE_ROOT

compute01# ./install_execd

compute01# cp /BiO/gridengine/default/common/settings.sh /etc/profile.d/

Apr 25, 2018 | github.com

nicoulaj commented

on Dec 1 2016 FYI, I got a working version with SGE on CentOS 7 on my linked branch.

This is quick and dirty because I need it working right now, there are several issues:

- I inverted the SGE setup/NFS export order due to #347 , so Debian support is probably broken

- The RPMs for RHEL do not contain systemd init files, so there are some workarounds to start

sge_execdmanually- I used

set_factto set global variables forSGE_ROOT, maybe there is a cleaner way ? I never used Ansible until now, I don't know the good practices...

Apr 24, 2018 | liv.ac.uk

From: JuanEsteban.Jimenez at mdc-berlin.de [mailto: JuanEsteban.Jimenez at mdc-berlin.de ]

Sent: 27 April 2017 03:54 PM

To: yasir at orionsolutions.co.in ; 'Maximilian Friedersdorff'; sge-discuss at liverpool.ac.uk

Subject: Re: [SGE-discuss] SGE Installation on Centos 7I am running SGE on nodes with both 7.1 and 7.3. Works fine on both.

Just make sure that if you are using Active Directory/Kerberos for authentication and authorization, your DC's are capable of handling a lot of traffic/requests. If not, things like DRMAA will uncover any shortcomings.

Mfg,

Juan Jimenez

System Administrator, BIH HPC Cluster

MDC Berlin / IT-Dept.

Tel.: +49 30 9406 2800====================

I installed SGE on Centos 7 back in January this year. If my recolection is correct, the procedure was analogous to the instructions for Centos 6. There were some issues with the firewalld service (make sure that it is not blocking SGE), as well as some issues with SSL.

Check out these threads for reference:http://arc.liv.ac.uk/pipermail/sge-discuss/2017-January/001047.html

http://arc.liv.ac.uk/pipermail/sge-discuss/2017-January/001050.html

May 08, 2017 | ctbp.ucsd.edu

- An example of simple APBS serial job.

#!/bin/csh -f #$ -cwd # #$ -N serial_test_job #$ -m e #$ -e sge.err #$ -o sge.out # requesting 12hrs wall clock time #$ -l h_rt=12:00:00 /soft/linux/pkg/apbs/bin/apbs inputfile >& outputfile- An example script for running executable

a.outin parallel on 8 CPUs. (Note: For your executable to run in parallel it must be compiled with parallel library like MPICH, LAM/MPI, PVM, etc.) This script shows file staging, i.e., using fast local filesystem/scratchon the compute node in order to eliminate speed bottlenecks.#!/bin/csh -f #$ -cwd # #$ -N parallel_test_job #$ -m e #$ -e sge.err #$ -o sge.out #$ -pe mpi 8 # requesting 10hrs wall clock time #$ -l h_rt=10:00:00 # echo Running on host `hostname` echo Time is `date` echo Directory is `pwd` set orig_dir=`pwd` echo This job runs on the following processors: cat $TMPDIR/machines echo This job has allocated $NSLOTS processors # copy input and support files to a temporary directory on compute node set temp_dir=/scratch/`whoami`.$$ mkdir $temp_dir cp input_file support_file $temp_dir cd $temp_dir /opt/mpich/intel/bin/mpirun -v -machinefile $TMPDIR/machines \ -np $NSLOTS $HOME/a.out ./input_file >& output_file # copy files back and clean up cp * $orig_dir rm -rf $temp_dir- An example of SGE script for Amber users (parallel run, 4 CPUs, with input file generated on the fly):

#!/bin/csh -f #$ -cwd # #$ -N amber_test_job #$ -m e #$ -e sge.err #$ -o sge.out #$ -pe mpi 4 # requesting 6hrs wall clock time #$ -l h_rt=6:00:00 # setenv MPI_MAX_CLUSTER_SIZE 2 # export all environment variables to SGE #$ -V echo Running on host `hostname` echo Time is `date` echo Directory is `pwd` echo This job runs on the following processors: cat $TMPDIR/machines echo This job has allocated $NSLOTS processors set in=./mdin set out=./mdout set crd=./inpcrd.equil cat <<eof > $in short md, nve ensemble &cntrl ntx=7, irest=1, ntc=2, ntf=2, tol=0.0000001, nstlim=1000, ntpr=10, ntwr=10000, dt=0.001, vlimit=10.0, cut=9., ntt=0, temp0=300., &end &ewald a=62.23, b=62.23, c=62.23, nfft1=64,nfft2=64,nfft3=64, skinnb=2., &end eof set sander=/soft/linux/pkg/amber8/exe.parallel/sander set mpirun=/opt/mpich/intel/bin/mpirun # needs prmtop and inpcrd.equil files $mpirun -v -machinefile $TMPDIR/machines -np $NSLOTS \ $sander -O -i $in -c $crd -o $out < /dev/null /bin/rm -f $in restrt

Please note that if you are running parallel amber8 you must include the following in your.cshrc:# Set P4_GLOBMEMSIZE environment variable used to reserve memory in bytes # for communication with shared memory on dual nodes # (optimum/minimum size may need experimentation) setenv P4_GLOBMEMSIZE 32000000- An example of SGE script for APBS job (parallel run, 8 CPUs, running example input file which is included in APBS distribution (/soft/linux/src/apbs-0.3.1/examples/actin-dimer):

#!/bin/csh -f #$ -cwd # #$ -N apbs-PARALLEL #$ -e apbs-PARALLEL.errout #$ -o apbs-PARALLEL.errout # # requesting 8 processors #$ -pe mpi 8 echo -n "Running on: " hostname setenv APBSBIN_PARALLEL /soft/linux/pkg/apbs/bin/apbs-icc-parallel setenv MPIRUN /opt/mpich/intel/bin/mpirun echo "Starting apbs-PARALLEL calculation ..." $MPIRUN -v -machinefile $TMPDIR/machines -np 8 \ $APBSBIN_PARALLEL apbs-PARALLEL.in >& apbs-PARALLEL.out echo "Done."- An example of SGE script for parallel CHARMM job (4 processors):

#!/bin/csh -f #$ -cwd # #$ -N charmm-test #$ -e charmm-test.errout #$ -o charmm-test.errout # # requesting 4 processors #$ -pe mpi 4 # requesting 2hrs wall clock time #$ -l h_rt=2:00:00 # echo -n "Running on: " hostname setenv CHARMM /soft/linux/pkg/c31a1/bin/charmm.parallel.092204 setenv MPIRUN /soft/linux/pkg/mpich-1.2.6/intel/bin/mpirun echo "Starting CHARMM calculation (using $NSLOTS processors)" $MPIRUN -v -machinefile $TMPDIR/machines -np $NSLOTS \ $CHARMM < mbcodyn.inp > mbcodyn.out echo "Done."- An example of SGE script for parallel NAMD job (8 processors):

#!/bin/csh -f #$ -cwd # #$ -N namd-job #$ -e namd-job.errout #$ -o namd-job.out # # requesting 8 processors #$ -pe mpi 8 # requesting 12hrs wall clock time #$ -l h_rt=12:00:00 # echo -n "Running on: " hostname /soft/linux/pkg/NAMD/namd2.sh namd_input_file > namd2.log echo "Done."- An example of SGE script for parallel Gromacs job (4 processors):

#!/bin/csh -f #$ -cwd # #$ -N gromacs-job #$ -e gromacs-job.errout #$ -o gromacs-job.out # # requesting 4 processors #$ -pe mpich 4 # requesting 8hrs wall clock time #$ -l h_rt=8:00:00 # echo -n "Running on: " cat $TMPDIR/machines setenv MDRUN /soft/linux/pkg/gromacs/bin/mdrun-mpi setenv MPIRUN /soft/linux/pkg/mpich/intel/bin/mpirun $MPIRUN -v -machinefile $TMPDIR/machines -np $NSLOTS \ $MDRUN -v -nice 0 -np $NSLOTS -s topol.tpr -o traj.trr \ -c confout.gro -e ener.edr -g md.log echo "Done."

biowiki.org

After submitting your job to Grid Engine you may track its status by using either the qstat command, the GUI interface QMON, or by email.

Monitoring with qstatThe qstat command provides the status of all jobs and queues in the cluster. The most useful options are:

- qstat: Displays list of all jobs with no queue status information.

- qstat -u hpc1***: Displays list of all jobs belonging to user hpc1***

- qstat -f: gives full information about jobs and queues.

- qstat -j [job_id]: Gives the reason why the pending job (if any) is not being scheduled.

You can refer to the man pages for a complete description of all the options of the qstat command.

Monitoring Jobs by Electronic MailAnother way to monitor your jobs is to make Grid Engine notify you by email on status of the job.

In your batch script or from the command line use the -m option to request that an email should be send and -M option to precise the email address where this should be sent. This will look like:

#$ -M myaddress@work

#$ -m beasWhere the (-m) option can select after which events you want to receive your email. In particular you can select to be notified at the beginning/end of the job, or when the job is aborted/suspended (see the sample script lines above).

And from the command line you can use the same options (for example):

qsub -M myaddress@work -m be job.sh

How do I control my jobsBased on the status of the job displayed, you can control the job by the following actions:

Monitoring and controlling with QMON

Modify a job: As a user, you have certain rights that apply exclusively to your jobs. The Grid Engine command line used is qmod. Check the man pages for the options that you are allowed to use.

- Suspend/(or Resume) a job: This uses the UNIX kill command, and applies only to running jobs, in practice you type

qmod -s/(or-r)job_id (where job_id is given by qstat or qsub).

- Delete a job: You can delete a job that is running or spooled in the queue by using the qdel command like this

qdel job_id (where job_id is given by qstat or qsub).

You can also use the GUI QMON, which gives a convenient window dialog specifically designed for monitoring and controlling jobs, and the buttons are self explanatory.

For further information, see the SGE User's Guide ( PDF, HTML).

May 07, 2017 | biowiki.org

Does your job show "Eqw" or "qw" state when you run qstat , and just sits there refusing to run? Get more info on what's wrong with it using:

$ qstat -j <job number>

Does your job actually get dispatched and run (that is, qstat no longer shows it - because it was sent to an exec host, ran, and exited), but something else isn't working right? Get more info on what's wrong with it using:

$ qacct -j <job number> (especially see the lines "failed" and "exit_status")

If any of the above have an "access denied" message in them, it's probably a permissions problem. Your user account does not have the privileges to read from/write to where you told it (this happens with the -e and -o options to qsub often). So, check to make sure you do. Try, for example, to SSH into the node on which the job is trying to run (or just any node) and make sure that you can actually read from/write to the desired directories from there. While you're at it, just run the job manually from that node, see if it runs - maybe there's some library it needs that the particular node is missing.