
(slightly skeptical) Educational society promoting "Back to basics" movement against IT overcomplexity and bastardization of classic Unix

Version 2.01 (March 2017)

Any sufficiently large and complex monitoring package written in C contains buggy ...

Reinterpretation of P. Greenspun quote about Lisp

System monitoring, specifically Unix system monitoring, is an old idea, and there has not been much progress over the last thirty years compared with the state of the art in the early 1990s, when Tivoli and OpenView entered the marketplace. Moreover, it is now clear that any overly complex monitoring system is actually counterproductive: sysadmins simply don't use it, or use a small fraction of its functionality, because they are unable or unwilling to master the required level of complexity. They already have too much complexity on their plate to want more.

Another important factor (or a nail in the coffin of expensive proprietary monitoring systems such as IBM Tivoli or HP OpenView) is the tremendous success of protocols such as ssh and tools like rsync, which change the equation by making separate, proprietary channels of communication between the monitoring clients and the "mother ship" less necessary.

Another important development is the proliferation and relative success of Unix/Linux configuration management systems, which also have some monitoring component (or can be programmed to perform those tasks along with configuration tasks).

Even HP OpenView, which is somewhat better than several other commercial systems, looks like huge overkill for a typical sysadmin. Too much stuff to learn, too little return on investment. And if OpenView is managed by a separate department, this is simply a disaster: typically those guys are completely detached from the needs of the rank-and-file sysadmins and live in their imaginary (compartmentalized) world. Moreover, they are prone to creating red tape, and as a result stupid, unnecessary probes are installed and stupid tickets are generated.

In one organization those guys decided to offload the problem of dying OpenView agent daemons (which in OpenView tend to die regularly and spontaneously) to sysadmins, creating a stream of completely useless tickets. That was probably the easiest way to condition sysadmins to hate OpenView. As a result, communication lines between the OpenView team and sysadmins became frozen, and the system fossilized and served no useful purpose at all. Just a "waving a dead chicken" type of system. Those "monitoring honchos" enjoyed their life for a while until they were outsourced. At the same time, useful monitoring of filesystem free space was done by a simple shell script written by one of the sysadmins ;-). So much for investment in OpenView and paying for specialized monitoring staff.

As for Tivoli deployments, sometimes I think that selling their products is a kind of subversive work by some foreign power (isn't IBM too cozy with China :-) that wants to undermine US IT. They do produce good eBooks called Redbooks, though ;-)

At its core, a monitoring system is a specialized scheduler that executes local or remote jobs (called probes) at predetermined times (typically every N minutes). For remote servers, execution can be agentless (in this case ssh or telnet is typically used as the agent, but a shared filesystem such as NFS can also be used) or can use a specialized agent (end point). There have not been any really revolutionary ideas in this space for the last 20 years or so. That absence of radical new ideas permits commoditization of the field and a corresponding downward pressure on prices, with open source products now "good enough". Some firms still try to play the "high price, high value" game with the second-rate software they own, but I think that the time of premium prices for monitoring products has gone.
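To make the "specialized scheduler" idea concrete, here is a minimal Python sketch, not a description of any particular product. The names `Probe`, `run_due_probes` and `main_loop` are hypothetical, and the convention that a non-zero exit code means trouble is an assumption borrowed from common Unix practice. A remote agentless probe would simply be a command that starts with `ssh host ...`.

```python
import subprocess
import time
from dataclasses import dataclass


@dataclass
class Probe:
    """A command executed every `interval` seconds (hypothetical structure)."""
    name: str
    command: list          # argv list, e.g. ["df", "-P"] or ["ssh", "host", "uptime"]
    interval: float        # seconds between runs
    next_run: float = 0.0  # epoch time of the next scheduled run


def run_due_probes(probes, now=None, timeout=30):
    """Run every probe whose time has come; return [(name, exit_code, output)]."""
    now = time.time() if now is None else now
    results = []
    for probe in probes:
        if now < probe.next_run:
            continue
        try:
            proc = subprocess.run(probe.command, capture_output=True,
                                  text=True, timeout=timeout)
            results.append((probe.name, proc.returncode, proc.stdout.strip()))
        except (OSError, subprocess.TimeoutExpired) as exc:
            # A probe that cannot run at all is reported as a failure too.
            results.append((probe.name, -1, str(exc)))
        probe.next_run = now + probe.interval
    return results


def main_loop(probes, tick=1.0):
    """Endless scheduler loop; a non-zero exit code is treated as an alert."""
    while True:
        for name, code, output in run_due_probes(probes):
            status = "OK" if code == 0 else "ALERT"
            print(f"{time.strftime('%H:%M:%S')} {status} {name}: {output}")
        time.sleep(tick)
```

Everything else in a monitoring package (event log, dashboard, escalation) is built around a loop of this kind, which is one reason the field was so easy to commoditize.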

Now the baseline for comparison is several open source systems, which you can try for free and buy professional support for later on, and which usually have lower maintenance costs than proprietary systems. That does not mean that they can compete in all areas: for example, agent-based monitoring and event correlation are still done better by proprietary, closed source systems. But open source systems are usually more adaptable and flexible, which is an important advantage. Here is one apt quote:

Nagios is frankly not very good, but it's better than most of the alternatives in my opinion. After all, you could spend buckets of cash on HP OpenView or Tivoli and still be faced with the same amount of work to customize it into a useful state....


Unix system monitoring includes several layers:

On this page we will mainly discuss operating system monitoring, and we will discuss it from our traditional "slightly skeptical" viewpoint. First of all, it is important to understand that if the system is geographically remote, it is considerably more difficult to determine what went wrong, as you lose a significant part of the context of the situation that is transparent to the local personnel. Remote cameras can help to provide some local context, but they are still not enough. It's much like flying an airplane at night: you need to rely solely on instruments. In this case you need a more sophisticated system. Another large and somewhat distinct category is virtual machines, which can also be remote, in distant locations.

Most system processes write some messages to syslog if things go wrong. That means that the first thing in OS monitoring should be monitoring of system logs, but this is seldom done and extremely rarely done correctly. The second thing is monitoring of free disk space, which is also seldom done correctly, as this simple problem does not have a simple solution and has a lot of intricate details that need to be taken into account: various filesystems usually need different thresholds, and 100% utilization of some filesystems is OK, while for others (such as /tmp) it is a source of problems. But logs and free space are the two areas where the real work on a robust monitoring system should start. Not from acquiring a system with a set of useful or semi-useful probes, but from getting a sophisticated log analyzer and writing a free space analyzer customized for your environment. A reasonably competent free space analyzer that allows individual thresholds for filesystems and two stages of alerts (warning and critical) can be written in less than 1000 lines of Perl or Python, which means that it can be written and debugged in a week or two.
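The core of such a free space analyzer is small. The following Python sketch shows only the two ideas named above, per-filesystem thresholds and two alert stages. The mount points and percentages in `THRESHOLDS` are made-up examples, and all function names are hypothetical; a real script would also need mount discovery, inode checks and alert delivery.

```python
import os

# Hypothetical per-filesystem thresholds in percent used: (warning, critical).
# A threshold above 100 effectively disables alerts for that filesystem,
# e.g. for a read-only image that is always 100% full.
THRESHOLDS = {
    "/tmp": (70, 85),
    "/var": (80, 90),
    "/mnt/iso": (101, 101),
}
DEFAULT_THRESHOLD = (85, 95)


def percent_used(path):
    """Percent of space used as an unprivileged user sees it (similar to df)."""
    st = os.statvfs(path)
    used = st.f_blocks - st.f_bfree
    total = used + st.f_bavail
    return 100.0 * used / total if total else 0.0


def classify(mount, used_pct, thresholds=THRESHOLDS, default=DEFAULT_THRESHOLD):
    """Map a utilization figure to OK, WARNING or CRITICAL for this mount point."""
    warn, crit = thresholds.get(mount, default)
    if used_pct >= crit:
        return "CRITICAL"
    if used_pct >= warn:
        return "WARNING"
    return "OK"


def check(mounts):
    """Return [(mount, percent_used, status)] for the given mount points."""
    report = []
    for mount in mounts:
        pct = percent_used(mount)
        report.append((mount, round(pct, 1), classify(mount, pct)))
    return report
```

Calling `check(["/", "/tmp", "/var"])` from cron and mailing only the non-OK rows already covers the case that, in my experience, matters most.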

Please be aware that some commercial offerings in the category of log analyzers are weak, close to junk, and survive only due to relentless marketing (Splunk might be one such example).

Some use databases to process logs. This is not a bad idea, but it depends on your level of familiarity with databases and SQL (typically this is an attractive option for those sysadmins who maintain a lot of MySQL or Oracle databases) and on the size of your log files. With extremely large log files you are better off staying within the flat file paradigm, although SSDs have changed this equation recently. Spam filters can serve as a prototype for useful log analyzers. When analyzing flat files, use of regular expressions is a must, so Perl looks like a preferable scripting language for this type of analyzer. A reasonably competent analyzer can be written in 2-3K lines of code. Multiple prototypes can be downloaded from the Web or from the distribution you are using (see, for example, Logwatch). The key problem here (vividly represented by Logwatch) is that the set of "informative" log messages tends to fluctuate with time and is generally OS version dependent (varying even from one release to another, and drastically different, for example, between RHEL 5 and RHEL 6), so in one year and a couple of upgrades your database of alerts becomes semi-useless. If you have time to do another cycle of modifying the script, then good; if not, you have another monitoring script that is "waving a dead chicken". One way to avoid this situation is to use syslog anomaly detection analyzers, but they are still pretty raw and can produce many false positives.
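A spam-filter-like, regex-driven analyzer for flat log files can be sketched in a few lines. The example is in Python rather than Perl only to keep one language across the examples on this page. The patterns in `RULES` and `IGNORE` are illustrative assumptions, not a recommended rule set, and `analyze` is a hypothetical name. Counting the lines that match no rule is one cheap way to notice that the rule database is going stale after an upgrade.

```python
import re
from collections import Counter

# Hypothetical rules: (compiled regex, severity). Real rule sets are
# site-specific and need revision after OS upgrades.
RULES = [
    (re.compile(r"out of memory|oom-killer", re.I), "critical"),
    (re.compile(r"I/O error", re.I), "critical"),
    (re.compile(r"authentication failure|failed password", re.I), "warning"),
]
# Lines matching these patterns are known noise and are dropped silently.
IGNORE = [re.compile(r"session (opened|closed) for user", re.I)]


def analyze(lines):
    """Return (alerts, unmatched); alerts is a list of (severity, line)."""
    alerts, unmatched = [], Counter()
    for line in lines:
        line = line.rstrip("\n")
        if any(p.search(line) for p in IGNORE):
            continue
        for pattern, severity in RULES:
            if pattern.search(line):
                alerts.append((severity, line))
                break
        else:
            # Replace digits so that similar unknown messages are counted together.
            unmatched[re.sub(r"\d+", "N", line)] += 1
    return alerts, unmatched
```

Reviewing the most frequent entries of `unmatched` from time to time is the manual "another cycle of modifying the script" mentioned above.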

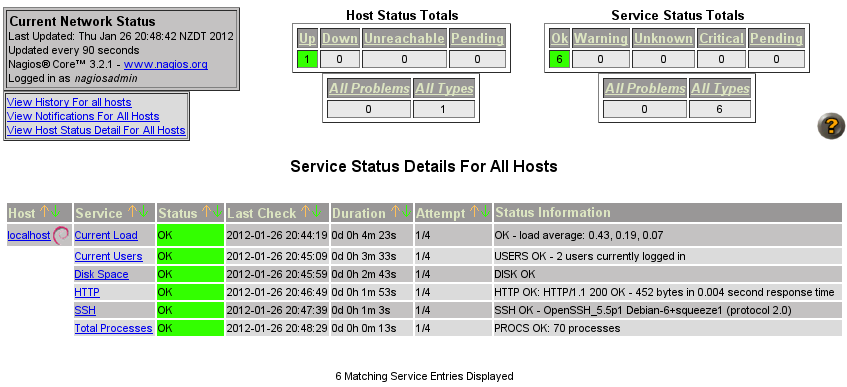

If you manage a large number of systems, it is important for your sanity to see the situation on existing boxes via a dashboard and an integrated alert stream. You just physically can't log in and check boxes one by one. While monitoring is not a one-size-fits-all solution, a lot of tasks can be standardized and, instead of reinventing the bicycle, adopted from or with some existing open source system. Reinventing the bicycle (unless you are a real expert in LAMP) is usually a pretty expensive exercise. You are probably better off betting on one of the popular open source systems such as Nagios and using its framework for writing your own scripts.

The problem of monitoring is complicated by the fact that the situation with Unix systems monitoring in most large organizations is typically far from rational. Sometimes it is close to a Kafkaesque level of bureaucratic absurdity ;-) By that we mean that it is marked by senseless, illogical, disorienting, often menacing complexity and bureaucratic barriers. Most large organizations have monitoring infrastructures crippled by this phenomenon. The following situations are pretty typical:

Proliferation of overlapping tools is a typical situation in large corporations, where the left hand does not know what the right is doing and the current infrastructure is a slight adaptation of a mess created by serial acquisitions. In a way, this is yet another case of overcomplexity, but instead of a single too-complex tool (or two) that supposedly unifies most aspects of monitoring of Unix systems, we have multiple overlapping tools. Often such tools contain redundant, expensive components which don't play well with one another or are completely useless. Often some were inherited via acquisitions and might be used for very limited cases, but are not discarded. Sometimes tools acquired via acquisitions that are mercilessly discarded (with expensive licenses) are far superior to the existing solutions.

Higher-level IT management often can't distinguish between reality and the illusion produced by skilled marketing, and forces rank-and-file workers into systems that are not that useful (to put it politely). And it is low-level staff, especially system administrators, who later pay for the top brass's state of self-indulgent juvenility. Delusional marketing of pretty weak IT products to the IT brass (a tactic perfected by IBM, but used by all major vendors), in which a crappy system is sold as the next revelation, is pretty successful in parting fools from their money, but in this case we are talking about multi-billion corporations and that's chump change for them. Still, the fact on the ground is that IT brass prefer to cling to fantasies that some expensive IT system from the top four vendors recommended by Gartner (which has huge problems distinguishing between marketing hype and reality; see Gartner Magic Quadrant for IT Event Correlation and Analysis) will snatch them from the current harsh reality and create a Disneyland out of the messed-up corporate datacenter. Those gullible IT management clowns destroy the existing American programmer and system administrator culture of Unix system adaptation and integration (which created many first-class products by adapting an average product to organizational needs), adopting a Windows-style approach and enslaving their staff in the support of a complex but inefficient system with a GUI. Without critical thinking, without a culture of "do/adapt it yourself", business IT is doomed to fall victim to charlatans who sell one or another version of snake oil. Typically such a situation is a side effect of outsourcing.

Excessive bureaucratization of IT paralyzes effective decision making. There are very few places where Jerry Pournelle’s Iron Law of Bureaucracy is more prevalent than in large corporate IT:

“In any bureaucracy, the people devoted to the benefit of the bureaucracy itself always get in control and those dedicated to the goals the bureaucracy is supposed to accomplish have less and less influence, and sometimes are eliminated entirely.”

Few people understand that the key question in a sound approach to monitoring is the selection of the level of complexity that is optimal for the system administrators (who, due to overload, are the weakest link in the system) and at the same time produces at least 80% of the results necessary to keep a healthy system. Actually, in many cases the useful set of probes is much smaller than one would expect. For example, monitoring of filesystems for free space is typically the No.1 task, which in many enterprise deployments probably constitutes 80% of the total value of the monitoring system; monitoring of a few performance parameters of the server (CPU, uptime, I/O) is probably No.2, with 80% of the residual 20%; and so on. By this estimate the first two tasks alone deliver about 96% of the value (80% + 0.8 × 20%). In other words, the Pareto law is fully applicable to monitoring.

Simplicity pays nice dividends: if a tool is written in a scripting language and matches the skill level of the sysadmins, they can better understand it and possibly adapt it to the environment, and thus get far superior results than with any "off-the-shelf" tool. For example, if local sysadmins know just shell (no Perl, no JavaScript), then the ability to write probes in shell is really important, and any attempt to deploy tools like ITM 5.1 (with probes written in JavaScript) is just a costly mistake.

Also, avoiding spending a lot of money on acquisition, training and support of an overly complex tool provides the opportunity to pay more for support, including separately paid incidents, which vendors love and typically serve with very high priority because, unlike an annual maintenance contract, they represent an "unbooked" revenue source.

Let's think about whether any set of proprietary tools that companies like IBM try to push down your throat for, say, half a million dollars in annual maintenance fees alone (using cheap tricks like charging per core, etc.) is that much better than a set of free open source tools that covers the same set of monitoring and scheduling tasks. I bet you can get pretty good quality 24x7 support for a small fraction of this sum, and at the end of the day that is all that matters. I saw many cases in which companies used an expensive package and implemented a subset of functionality that was just a little more than ICMP (aka ping) monitoring, or in which the subset of functionality used could be replicated much more successfully by a half dozen simple Perl scripts. The Alice in Wonderland of perversions of corporate system monitoring still needs to be written, but it is clear that regular logic is not applicable to a typical corporate environment. Or maybe it should be not Alice in Wonderland but ...

Another important consideration is what we can call the Softpanorama law of monitoring: if, in a large organization, the level of complexity of a monitoring tool exceeds a certain threshold (which depends on the number and level of specialization of the sysadmins dedicated to this task and on the level of programming skills of all other sysadmins), the monitoring system usually becomes stagnant and people are reluctant to extend and adapt it to new tasks. Instead of being a part of the solution, such a tool becomes a part of the problem.

This is the typical situation at the level of complexity typical for Tivoli, CA Unicenter and, to a slightly lesser extent, HP Operations Manager (formerly OpenView). For example, writing rules for Tivoli TEC requires some understanding of Prolog (which is a very rare, almost non-existent skill among Unix sysadmins) as well as Perl (knowledge of which is far more common, but far from universal among sysadmins, especially on Windows).

Adaptability means that simpler open source monitoring systems that use just the language sysadmins know well, be it Bash or Perl, have tremendous advantages over complex ones in the long run. Adaptability of the tool is an important characteristic and it is unwise (but pretty common) to ignore it.


I suspect that the level of complexity should be much lower than the complexity of monitoring solutions used in most large organizations (actually Goldman Sachs extensively uses Nagios, despite being probably the richest organization on the planet ;-). Such cases make it possible to overcome corporate IT bureaucracy. In any case, the fact on the ground is that in many current implementations in large organizations complex monitoring systems are badly maintained (to the extent that they become almost useless, as in the example with OpenView above) and their capabilities are hugely underutilized. That demonstrates that raising the complexity of a monitoring system above a certain level is simply counterproductive, and simpler, more nimble systems have an edge. Sometimes two simple systems (one for OS monitoring, one for network and application probes) outperform a single complex system by a large margin.

In other words, most organizations suffer from feature creep in monitoring systems in the same way they suffer from feature creep in regular applications.

Like love, system monitoring is a term with multiple meanings. We can define several categories of operating system monitoring:

Monitoring system logs. This is the sine qua non of operating system monitoring. A must. If this is not done (and done properly), there is no reason to discuss any other aspects of monitoring because, as Talleyrand characterized such a situation, "this is worse than a crime, this is a blunder." In Unix this presupposes the existence of a centralized server, the so-called LOGHOST server. Few people understand that log analysis on the LOGHOST server by itself represents a pretty decent distributed monitoring system, and that instead of reinventing the wheel it is possible to enhance it by writing probes that run from cron and write messages to syslog, as well as monitoring scripts on the LOGHOST that pick up specific messages (or sets of messages) from the log.

In a typical Unix implementation such as Solaris or RHEL 6, a wealth of information is collected by the syslog daemon and put in /var/log/messages (Linux) or /var/adm/messages (Solaris; HP-UX uses /var/adm/syslog/syslog.log). There are now "crippled" distributions that use journald without a syslog daemon, but RHEL in version 7 continues to use rsyslogd.

Unix syslog, which originated in the Sendmail project, records various conditions including crashes of components, failed login attempts, and many other useful things, including information about the health of key daemons. This is an integral area that overlaps each and every area described above, but it still deserves to be treated as a separate one. System logs provide a wealth of information about the health of the system, most of which is usually never used, as it is buried in the noise and because the regular syslog daemon has outlived its usefulness (syslog-ng, used as a replacement for syslogd in Suse 10 and 11, provides quite good abilities to filter logs, but unfortunately it is very complex to configure and difficult to debug).

Sending the log stream from all similar systems to a special log server is also important from the security standpoint.
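The "syslog as a monitoring bus" idea can be sketched as follows: a probe started from cron reports its result via the local syslog daemon, which is assumed to be configured to forward messages to LOGHOST, and a script on LOGHOST picks up the probe messages from the collected log. The `PROBE <name> <STATUS> <text>` message convention and all function names are hypothetical; only the calls into Python's standard `syslog` and `re` modules are real.

```python
import re
import syslog

# Hypothetical message convention: "PROBE <name> <STATUS> <free text>".
PROBE_RE = re.compile(
    r"PROBE (?P<name>\S+) (?P<status>OK|WARNING|CRITICAL) (?P<text>.*)")

PRIORITY = {
    "OK": syslog.LOG_INFO,
    "WARNING": syslog.LOG_WARNING,
    "CRITICAL": syslog.LOG_CRIT,
}


def format_probe_message(name, status, text):
    """Build the message body that the LOGHOST-side script knows how to parse."""
    return f"PROBE {name} {status} {text}"


def report(name, status, text):
    """Called by a probe run from cron; writes one message to the local syslog."""
    syslog.syslog(PRIORITY[status], format_probe_message(name, status, text))


def pick_up(lines):
    """Run on LOGHOST: extract non-OK probe messages from the collected log."""
    found = []
    for line in lines:
        match = PROBE_RE.search(line)
        if match and match.group("status") != "OK":
            found.append((match.group("name"), match.group("status"),
                          match.group("text").rstrip()))
    return found
```

A probe then ends with something like `report("rootfs", "WARNING", "/ is 91% full")`, and the LOGHOST script feeds the lines of the central log file to `pick_up`. No separate agent protocol is needed.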

Monitoring System Configuration Changes. This category includes monitoring for changes in hardware and software configurations that can be caused by an operating system upgrade, patches applied to the system, changes to kernel parameters, or the installation of a new software application.

The root cause of system problems can often be traced back to an inappropriate hardware or software configuration change. Therefore, it is important to keep accurate records of these changes, because the problem that a change causes may remain latent for a long period before it surfaces. Adding or removing hardware devices typically requires the system to be restarted, so configuration changes can be tracked indirectly (in other words, remote monitoring tools would notice system status changes).

However, software configuration changes, or the installation of a new application, are not tracked in this way, so reporting tools are needed. Also, more systems are becoming capable of adding hardware components online, so hardware configuration tracking is becoming increasingly more important.

Here version control systems and Unix configuration management tools directly compete with monitoring systems. As I mentioned, some Unix configuration management systems have agents and as such can replicate the lion's share of typical Unix monitoring system tasks.
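For software configuration changes, the simplest reporting tool is a checksum comparison against a stored baseline. The sketch below is one possible approach, not the method of any specific product: it hashes a list of configuration files with SHA-256 and reports which ones changed since the previous run. The function names and the JSON baseline file are assumptions made for the example.

```python
import hashlib
import json
import os


def snapshot(paths):
    """Map each path to the SHA-256 of its content (None if unreadable)."""
    result = {}
    for path in paths:
        try:
            with open(path, "rb") as fh:
                result[path] = hashlib.sha256(fh.read()).hexdigest()
        except OSError:
            result[path] = None
    return result


def diff(old, new):
    """Return the sorted paths whose digest differs between two snapshots."""
    return sorted(p for p in set(old) | set(new) if old.get(p) != new.get(p))


def check_against_baseline(paths, baseline_file):
    """Compare the current state with the stored baseline, then update it.

    The first run only creates the baseline and reports nothing.
    """
    old = {}
    if os.path.exists(baseline_file):
        with open(baseline_file) as fh:
            old = json.load(fh)
    new = snapshot(paths)
    with open(baseline_file, "w") as fh:
        json.dump(new, fh, indent=1)
    return diff(old, new) if old else []
```

Keeping the same files under a version control system gives you the "what exactly changed" part for free, which is why those tools compete with monitoring systems in this area.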

Monitoring System Faults. After ensuring that the configuration is correct, the first thing to monitor is the overall condition of the system. Is the system up? Can you talk to it, ping it, run a command? If not, a fault may have occurred. Detecting system problems varies from determining whether the system is up to determining whether it is behaving properly. If the system either isn't up or is up but not behaving properly, then you must determine which system component or application is having a problem.
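The "can you talk to it?" question can be approximated without ICMP privileges by trying to open a TCP connection to a service that should be listening. This is a minimal sketch under that assumption. Port 22 as the default and the three-way `up`/`degraded`/`down` classification are illustrative choices, and the function names are hypothetical.

```python
import socket


def tcp_alive(host, port=22, timeout=5.0):
    """True if a TCP connection to host:port can be established in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def system_status(host, ports=(22,)):
    """Classify a host as 'up', 'degraded' (some ports dead) or 'down'."""
    alive = [tcp_alive(host, port) for port in ports]
    if all(alive):
        return "up"
    return "degraded" if any(alive) else "down"
```

A host that answers on ssh but not on its application port is "up but not behaving properly", which is exactly the case where you then have to find out which component is at fault.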

Monitoring System Resource Utilization. For an application to run correctly, it may need certain system resources, such as the amount of CPU, memory or I/O bandwidth an application is entitled to use during a time interval. Other examples include the number of open files or sockets, message segments, and system semaphores that an application has. Usually an application (and operating system) has fixed limits for each of these resources, so monitoring their use at levels close to threshold is important. If they are exhausted, the system may no longer function properly. Another aspect of resource utilization is studying the amount of resources that an application has used. You may not want a given workload to use more than a certain amount of CPU time or a fixed amount of disk space. Some resource management tools, such as quota, can help with this.

Monitoring System Performance. Monitoring the performance of system resources can help to indicate problems with the operation of the system. Bottlenecks in one area usually impact system performance in another area. CPU, memory, and disk I/O bandwidth are the important resources to watch for performance bottlenecks. To establish baselines, you should monitor the system during typical usage periods. Understanding what is "normal" helps to identify when system resources are scarce during particular periods (for example "rush hours"). Resource management tools are available that can help you to allocate system resources among applications and users.
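One simple way to turn "what is normal" into something a script can check is to keep a mean and standard deviation per hour of day and flag values far above them. This is a sketch of that idea only. The `k=3.0` multiplier and the per-hour grouping are assumptions, the function names are hypothetical, and the sample numbers in the usage note are invented.

```python
import statistics


def build_baseline(samples):
    """Mean and standard deviation of measurements from typical usage."""
    return statistics.mean(samples), statistics.pstdev(samples)


def build_hourly_baselines(records):
    """records: iterable of (hour, value); returns {hour: (mean, stdev)}."""
    by_hour = {}
    for hour, value in records:
        by_hour.setdefault(hour, []).append(value)
    return {hour: build_baseline(values) for hour, values in by_hour.items()}


def is_abnormal(value, baseline, k=3.0):
    """True if value is more than k standard deviations above the mean."""
    mean, stdev = baseline
    return value > mean + k * stdev
```

For example, with records `[(9, 50), (9, 70), (3, 5), (3, 5)]` a CPU load of 60 is perfectly normal at 9:00 but clearly abnormal at 3:00, which a single global threshold would not show.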

Monitoring System Security. While the ability to protect your systems and information from determined intruders is a pipe dream due to the existence of such organizations as the NSA and CIA (and for truly sensitive materials you really should consider a return to typewriters, disallowing any electronic copy), some level of difficulty for intruders can and should be created. Among other things, that includes so-called "monitoring for unusual activities". This type of monitoring includes monitoring of last logins (lastlog), unusual permissions, unusual changes in /etc/passwd and other similar "suspicious" activities. This is generally a separate area from "regular monitoring", for which specialized systems exist. A separate task is the so-called hardening of the system: ensuring compliance with the policies set for the systems (permissions of key files, configuration of user accounts, the set of people who can assume the role of root, etc.). This type of monitoring is difficult to do right, as the notion of suspicious activity is so fuzzy. Performance and resource controls can also be useful for detecting such activities. The value of specialized security tools is often overstated, but in small doses they can be useful rather than harmful. That applies first of all to so-called hardening scripts and local firewall configurators. For example, it is easy to monitor for world-writable files and wrong permissions on home directories and key system directories. There is no reason not to implement this set of checks. In many cases static (configuration settings) security monitoring can be adapted from an existing hardening package such as (now obsolete) Titan or its more modern derivatives.
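The world-writable check mentioned above really is easy. Here is a minimal Python sketch that walks a directory tree and reports files and directories writable by "other". Skipping sticky-bit directories (the normal setup for /tmp) and symbolic links is a design choice made for this example, and `world_writable` is a hypothetical name.

```python
import os
import stat


def world_writable(root):
    """Yield files and directories under root that are writable by 'other'.

    Symbolic links are ignored, and directories with the sticky bit set
    (the usual arrangement for shared temp directories) are not reported.
    """
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode
            except OSError:
                continue  # vanished or unreadable entry
            if stat.S_ISLNK(mode) or not mode & stat.S_IWOTH:
                continue
            if stat.S_ISDIR(mode) and mode & stat.S_ISVTX:
                continue
            yield path
```

Running `sorted(world_writable("/etc"))` from cron and alerting on any non-empty result is the kind of static check that costs almost nothing to maintain.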

As a side note, I would like to mention that the rarely used and almost forgotten AppArmor (which is available in Suse by default) can do wonders for application security.

Monitoring system performance. Here, in the simplest form, the output of the System Activity Reporter (sar) can be processed and displayed. Sar is a simple and very good tool that comes from System V Unix (Solaris included) and was later adopted by other flavors of Unix, including Linux. This solution should always be implemented first, before any more complex variants of performance monitoring are even considered. Intel provides good performance monitoring tools with its compiler suite.

"The big four" (HP Operations Center, with Operations Manager as the key component; Tivoli; BMC; and CA Unicenter) dominate the large enterprise space. They are very complex and expensive products, which require dedicated staff and provide relatively low return on investment, especially taking into account the TCO, which dramatically increases with each new version due to overcomplexity. In a way, the dominant vendors painted themselves into a corner by raising the complexity far above the level a normal sysadmin can bear.

My experience with the big troika is mainly with "classic" Tivoli (before the Candle, aka Tivoli Monitoring 6.1, and Micromuse, aka Netcool, acquisitions) and HP Operations Manager, but I still think this statement reflects the reality of all "big vendor" ESM products: the vendors mentioned use overcomplexity as a shield to protect against competitors and to extract rent from customers. IBM is especially guilty of "incorrect" behavior, as it has become very greedy, resorting to such dirty tricks as licensing its software products per socket or, worse, per core. You should reject such offers as a matter of prudence: you can definitely use your money ten times more efficiently than by buying such a product, by using a decent open source product such as Puppet (which, while not a monitoring system per se, duplicates much of this functionality) with professional support. Nothing in the monitoring space even remotely justifies licensing per socket or per core. Let Wall Street firms use those wonderful products, as only for them is one million more or one million less a rounding error.

Also, although the level of architectural thinking is either completely absent or very low, new versions of such commercial systems are produced with excessive frequency to keep the ball in play, while the technologies used can be ridiculously outdated: those products often use obsolete or semi-obsolete architecture and sometimes obscure, outdated protocols that are difficult to understand and debug. In the latter case, the products become a source of hidden (or not so hidden) security vulnerabilities. That is actually not limited to monitoring tools and is typical for any large, complex enterprise application (HP Data Protector, with its free root telnet for all nodes in an insecure mode, comes to mind). In a way, the agents on each server should always be viewed as hidden backdoors, not that different from the backdoors used for "zombification" of servers by hackers. That does not mean that agentless tools are more secure. If they use protocols such as SSH for running remote probes, the "mothership" server that hosts such a system becomes a "key to the kingdom" too. This is a pretty typical situation for such tools as Nagios and HP SiteScope.

For major vendors of monitoring products with a substantial installed user base, overcomplexity is to a certain extent unavoidable: they need to increase complexity with each version due to a feeling of insecurity and the desire to protect and extend their franchise. What is bad is that overcomplexity is used as a means of locking in users and as a shield against competitors, simultaneously helping to extract rent from existing customers (the more complex the tool is, the more profitable the various training classes are). Certain vendors simply cannot and do not want to compete on the basis of functionality provided. They need lock-in to survive and prosper.


In a way, these pressures are very similar to those that destroyed the US investment banks in the recent "subprime mess". Due to such pressures, vendors are logically pushed by events onto a road that inevitably leads to converting their respective systems into barely manageable monsters. They can still be very scalable despite overcomplexity, but the flexibility of the solutions and the quality of the interface suffer greatly. And only thanks to the high quality and qualification of tech support can those systems be maintained and remain stable in a typical enterprise.

That opens some space for open source monitoring solutions, which can be much simpler and rely much more on established protocols (for example, HTTP, SMTP and SSH). An important fact that favors simpler solutions is that in any organization the usefulness of a monitoring package is limited by the ability of personnel to tweak it to the environment. Packages whose tuning is above the heads of the personnel can actually be harmful (Tivoli Monitoring 5.1, with its complex API and JavaScript-based extensions, is a nice example of the genre).


Since adequate (and very expensive) training for those products is often skipped as overhead, it's not surprising that many companies never get more than the most basic functionality from a very expensive (and theoretically capable) product. And basic functionality is better provided by simple free or low-cost packages. So extremes meet. This situation might be called the system monitoring paradox. That's exactly what keeps Tivoli, HP Operations Center, BMC and CA Unicenter consultants happy and in business for many years.

The system monitoring paradox is that both expensive and cheap monitoring solutions usually provide very similar quality of monitoring, and both have adequate capabilities for a typical large company.

It costs quite a lot to maintain and customize tools like Tivoli or OpenView in a large enterprise environment, where money for this is readily available. Keeping a good monitoring specialist on the job is also a problem: once people become really good at scripting, they tend to move to other, more interesting areas, like web development. There is nothing too exciting in the daily work of a monitoring specialist, and after a couple of years the feeling that his/her IQ is underutilized is to be expected. So the most capable people typically move on. The strong point of the big troika is support and availability of professional services, but the costs are very high. Still, it is important to understand that complex products to a certain extent reflect the complexity of the large datacenter environment, and not all tasks can be performed by simple products, although 80% might be a reasonable estimate.

That means that the $3.6 billion market for enterprise system management software is ripe for competition from products that utilize scripting languages instead of trying to foresee each and every need the enterprise can have. Providing a simple scripting framework for writing probes, and implementing the event log, dashboard and configuration viewer on a web server, lowers the barrier to entry.

But such solutions are not in the interests of large vendors, as they can lower their profits. They cannot, or do not want to, compete in this space. What is interesting is that scripting-based monitoring solutions are pretty powerful and have proved to be competitive with much more complex "pre-compiled" or Java-based offerings. There are multiple scripting-based offerings from startups and even individual developers which can deliver 80% of the benefits of big troika products for 20% of the cost or less, and without millions of lines of Java code, an army of consultants and IT managers, and annual conferences for big brass.

In other words, "something is rotten in the state of Denmark" (Hamlet).

Scripting languages beat Java in the area of monitoring hands down, and if a monitoring product is written in a scripting language this should be considered a strategic advantage. An advantage that is worth fighting for.

| Scripting languages beat Java in the area of monitoring hands down and if a monitoring product is written in a scripting language and/or is extendable using scripting language this should be considered to be a strategic advantage. Advantage that is worth fighting for. |

First of all, because the codebase is more maintainable and flexible. Integration of plug-ins written in the same scripting language is simpler. Debugging problems is much simpler. Everything is simpler because a scripting language is a higher level language than Java or C#. But at the same time I would like to warn that open source is not a panacea and has its own (often hidden) costs and pitfalls. In a corporate environment, other things being equal, you are better off with an open source solution behind which there is at least one start-up. A badly configured or buggy monitoring package can be a big security risk. In no way does that mean that, say, Tivoli installations in the real world are secure, but they are more obscure, and security via obscurity works pretty well in the real world ;-)

Let's reiterate the key problems with monster, "enterprise ready", packages:

Licensing and Maintenance Costs. One of the most common problems is the cost of the license. Often "the big troika" is too expensive and simply prices small and medium size companies out of the market. But the picture is more complex than that. For example, IBM used to sell ITM Express 6 really cheap, and this is actually a full blown enterprise class monitoring system that is just limited to a few nodes. But nodes can be aggregators of events based on some open source package, not individual servers, so this limitation can be partially bypassed. By buying a minimal or "express" edition of expensive tools, organizations can get a first class GUI and a robust correlation engine.

The second part of the total cost of ownership is the cost of maintenance contracts. Tech support provided by large vendors is usually good or excellent but it costs money. Also due to the level of complexity (or more correctly level of overcomplexity ;-) for some tasks you need expensive consultants and those costs in five-ten years can run to the level comparable with the cost of the license (see below).

Overcomplexity. Often small and medium size companies do not want the whole "Christmas tree" of features and want a slimmer, more flexible product that is more focused on their needs. They also cannot afford to use expensive consultants on a regular basis (which is often the way Tivoli is deployed and maintained, so upfront costs are just the tip of the iceberg). Due to IT outsourcing it is not clear that using consultants is the best path: in the absence of loyal staff there is no countervailing force in complex technical negotiations, and companies are bound to overpay or buy unnecessary services and solutions. I know several companies that use TEC but paradoxically do not have specialists to write rules for TEC (TEC uses Prolog as a rules language). That situation makes TEC inferior to simpler packages. There are also companies which use Tivoli monitoring exclusively to monitor disk space on the servers, something that even a simple Perl script run via cron can accomplish much better. In this case money was wasted on a tool that is used at a tiny fraction of its capacity.

Absence of insurance in case of abrupt changes of course by the vendor. Tivoli users now understand that the fact that TEC is closed source can cost them a substantial amount of money. Even if they do not want to move to a Micromuse-style solution, IBM will drag them kicking and screaming. That would be good if the new solution were clearly superior to the old. But this is not the case.

If you are designing a monitoring solution you need to solve almost a dozen pretty complex design problems. The ingenuity and flexibility of the solution to each of those problems reflect the quality of the architecture. Among those that we consider the most important are:

Often the interface with the "mothership" is delegated to a special agent (adapter in Tivoli terminology) which contains all the complex machinery necessary for transmission of events to the event server using some secure or not very secure protocol. In this case probes communicate with the agent. In the simplest case it can be the syslogd daemon, an SMTP daemon or a simple HTTP client (if HTTP is used for communication with the mothership).

In the simplest case the agent can be a standalone executable that is invoked by each probe via a pipe (a "send event" type of agent). In this case HTML/XML-based protocols are natural (albeit more complex and more difficult to parse than necessary), although SMTP-style keyword-value pairs are also pretty competitive and much simpler. The only problem is long, multiline values, but here the body of the SMTP message can be used instead of extended headers. Unix also provides the necessary syntax in "here" documents.
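The keyword-value event format with a free-form body can be sketched with a here document. This is only an illustration: the function name `format_event` and the header names (`Host`, `Probe`, `Severity`) are assumptions, not part of any existing package.

```shell
#!/bin/sh
# Hypothetical helper: print one event as SMTP-style "keyword: value"
# headers, then a blank line, then a free-form (possibly multiline) body.
format_event() {
    # $1 = host, $2 = probe name, $3 = severity, $4 = body text
    cat <<EOF
Host: $1
Probe: $2
Severity: $3

$4
EOF
}

# Example: a probe pipes the event into whatever "send event" agent is in use
# (plain cat stands in for the agent here).
# format_event "web01" "df" "warning" "/var is 91% full" | cat
```

Because the body follows the blank line, multiline values need no escaping or header continuation rules.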

For efficiency an agent can be coded in C, although on modern machines this is not strictly necessary. In the case of HTML any command-line browser such as lynx can be used as a "poor man's agent". In this case the communication with the server needs to be organized via forms.

I would like to stress that SMTP mail, as imperfect as it is, proved to be a viable communication channel for transmitting events from probes to the "mothership" and then distributing them to interested parties.

One simple and effective way of aggregation is converting events into "tickets": groups of events that correspond to a serviceable entity (for example, a server).

These questions make sense for users too: if you are able to answer them for a particular monitoring solution, you pretty much understand the architecture of that system.

Not all components of the architecture need to be implemented. The most essential are probes. At the beginning everything else can be reused via available subsystems/protocols. Typically the first probe implemented is the one monitoring free disk space ;-) But even if you run pretty complex applications (for example, a LAMP stack) you can assemble your own monitoring solution just by integrating ssh, custom shell/Perl/Python scripts (some can be adapted from existing solutions, for example from mon) and an Apache server. Basic HTML tables serve well as a simple but effective dashboard, and are easy to generate, especially from Perl. SSH proved to be adequate as an agent and data delivery mechanism. You can even run probes via ssh (the so-called agentless solution), but this has an obvious drawback in comparison with running them from cron: if the server is overloaded or the ssh daemon malfunctions, the only thing you can say is that you can't connect. But other protocols such as syslog might still be operative, and probes that use them can still deliver useful information. If you run your probes from, say, /etc/cron.hourly (very few probes need to be run more often, because in large organizations, like in dinosaurs, the reaction is very slow, and nothing can be done in less than an hour ;-) you can automatically switch to syslog delivery if, for example, your ssh delivery does not work. Such an adaptive delivery mechanism, in which the best channel for delivery of "tick" information is determined on the fly, is more resilient.
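The adaptive delivery idea can be sketched as a function that tries delivery channels in order and stops at the first one that works. This is a minimal sketch: the function name `deliver_tick`, the `TICK` variable and the paths in the commented example are assumptions, and the syslog fallback only reaches the mothership if the local syslog daemon is configured to forward messages there.

```shell
#!/bin/sh
# Try each delivery command in order; stop at the first one that succeeds.
# Each argument is a shell command line; it may refer to exported variables
# such as the hypothetical $TICK (path of the tick file).
deliver_tick() {
    for channel in "$@"; do
        if sh -c "$channel" >/dev/null 2>&1; then
            echo "$channel"       # report which channel worked
            return 0
        fi
    done
    return 1                      # every channel failed
}

# Example wiring (LOGHOST and the paths are assumptions):
# TICK=/tmp/probe_dir/tick; export TICK LOGHOST
# deliver_tick \
#   'scp -o ConnectTimeout=10 "$TICK" "$LOGHOST:/tmp/probes_collector/$(hostname)"' \
#   'logger -t tick -f "$TICK"'
```

The ordering of the arguments encodes the preference: the richest channel comes first and the most robust one last.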

The simplest script that can run probes sequentially and can be called from cron can look something like this:

    POLLING_INTERVAL=60 # 1 minute sleeping interval between probes

    for probe in /usr/local/monitor/probes/* ; do
        "$probe" >> /tmp/probe_dir/tick # execute the probe and append its output to the tick file
        sleep "$POLLING_INTERVAL" # the sleep interval is specified in seconds
    done

    # deliver the collected ticks to the mothership (note the colon after the host name)
    scp /tmp/probe_dir/tick "$LOGHOST:/tmp/probes_collector/$HOSTNAME"

Another approach is to "inject" the necessary entries into each server's local crontab once a day and rely on the local cron daemon for scheduling. This offloads a large part of the scheduling load from the "mothership" and at the same time has enough flexibility (some local cron scripts can be mini-schedulers in their own right).

As for representation of the results on the "mothership" server, typically local probes can be made capable of generating HTML and submitting it as a reply to some form on the Web server running on the mothership, which performs additional rendering and maintenance of history, trends, etc. (see finance.yahoo.com for inspiration). Creating a convenient event viewer and dashboard is a larger and more complex task, but basic functionality can be achieved without too much effort using Apache, off-the-shelf SMTP email, a Web browser (used as the event viewer) and some CGI scripts. Again, adaptability and programmability are much more important than fancy capabilities.

| Adaptability and programmability are much more important than fancy capabilities. |

For example, you can write a Perl script that generates an HTML table which contains the status of your devices. In such a table color bars can represent the status of the server (for example, Green=GOOD : Yellow=LATENCY >100ms : Red=UNREACHABLE). See Set up customized network monitoring with Perl. I actually like the design of the finance.yahoo.com interface very much and consider it to be a good prototype for generic system monitoring, as it is customizable and fits the needs of server monitoring reasonably well. For example, the concept of portfolios is directly transferable to the concept of groups of servers or locations.
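The color-coded status table can be sketched in a few lines. The sketch below uses shell rather than Perl to stay consistent with the cron script shown earlier; the function names and the "host latency" input format are assumptions made for illustration.

```shell
#!/bin/sh
# Map a probe result to a cell color, following the legend above:
# green = good, yellow = latency above 100 ms, red = unreachable.
status_color() {
    # $1 = latency in whole milliseconds, or the word "unreachable"
    case "$1" in
        unreachable) echo red ;;
        *) if [ "$1" -gt 100 ]; then echo yellow; else echo green; fi ;;
    esac
}

# Read "host latency" lines on stdin and print an HTML table.
status_table() {
    echo '<table border="1">'
    echo '<tr><th>Host</th><th>Latency (ms)</th></tr>'
    while read -r host latency; do
        printf '<tr><td>%s</td><td bgcolor="%s">%s</td></tr>\n' \
            "$host" "$(status_color "$latency")" "$latency"
    done
    echo '</table>'
}

# Example:
# printf '%s\n' 'web01 20' 'web02 250' 'db01 unreachable' | status_table > status.html
```

Redirecting the output into a file under the web server's document root is enough to get a primitive dashboard.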

Similarly any Web-mail implementation represents an almost complete implementation of the event log. If it is written in a scripting language it can be gradually adapted to the needs (instead of trying to reinvent the bicycle and writing the event log software from scratch). I would like to reiterate it again that this is a very strong argument for SMTP-based or SMTP compatible/convertible structure of events, for example, sequence of lines with structure

keyword: value

until blank line and then text part of the message.
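Reading such an event back takes very little code, which is part of the appeal of the format. The following is a minimal sketch; the function names `event_header` and `event_body` are hypothetical.

```shell
#!/bin/sh
# Print the value of one header from an event read on stdin.
# Headers end at the first blank line, so the body is never searched.
event_header() {
    # $1 = header keyword, matched case-sensitively
    awk -v key="$1" '
        /^$/ { exit }                      # blank line: end of headers
        {
            i = index($0, ": ")
            if (i > 0 && substr($0, 1, i - 1) == key) {
                print substr($0, i + 2)
                exit
            }
        }'
}

# Print only the body (everything after the first blank line).
event_body() {
    awk 'body { print } /^$/ && !body { body = 1 }'
}

# Example:
# severity=$(event_header Severity < event.txt)
```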

Using the paradigm of small reusable components is the key to creating a flexible monitoring system. Even in a Windows environment you can now do wonders using Cygwin, or the free Microsoft analog, Windows Services for UNIX (SFU 3.5). SSH solves the pretty complex problem of component delivery and updates over a secure channel, so other things being equal it might be preferable to installing often buggy and insecure local agents (and that includes many misconfigured Tivoli installations). Actually this is not completely true: a local installation of Perl can serve as a very powerful local agent, with probe scripts sending information, for example, to a Web server. And Perl is installed by default on all major Unixes and Linux. In the most primitive form, refreshing of information from probes can be implemented as automatic refresh of HTML pages in frames. But there are multiple open source monitoring packages where people have worked on refining those ideas for several years, and you need to critically analyze them and select the package that is most suitable for you.

Still, simplicity pays great dividends in monitoring, as you can add your own customization with much less effort.

| Simplicity pays great dividends in monitoring, as you can add your own customization with much less effort and without spending an inordinate amount of time studying obscure details of an excessively complex architecture |

I would recommend starting with a very simple package written in Perl (which every sysadmin should know ;-). Later, when you understand the issues and compromises inherent in the design of monitoring for your particular environment (which can deviate from the typical in a number of ways), you can move up in complexity. Return on investment in fancy graphs is usually less than expected after the first two or three days (outside presentations to executives), but your mileage may vary. If you need graphic output then you definitely need a more complex package that does the necessary heavy lifting for you. It does not make much sense to reinvent the bicycle again and again, but if you need to, a spreadsheet can usually create complex graphs from tables, and some spreadsheets are highly programmable.

| I would recommend to start with a very simple package written in Perl (which every Unix sysadmin should know ;-) and later when you get understanding of issues and compromises inherent in the design you can move up in complexity. |

Open source packages show great promise in monitoring and in my opinion can compete with packages from traditional vendors in the small and medium size enterprise space. The only problematic area is the correlation of events, but even here you can do quite a lot by simply using the ability of any SQL database (preferably a memory-based database) to manipulate the "event window".

The key question in adopting an open source package is deciding whether it can satisfy your needs and has an architecture that you consider logical enough to work with. This requirement translates into the amount of time and patience necessary to evaluate the candidates. I hope that this page (and relevant subpages) might provide some starting points and hints on where to look. Also, with AJAX the flexibility and quality of open source Web server based monitoring consoles have dramatically increased. Again, for the capabilities of the AJAX technology you can look at finance.yahoo.com.

Even if the company anticipates getting a commercial product, creating a prototype using open source tools might pay off in a major way, giving the ability to cut through the thick layer of vendor hype to the actual capabilities of a particular commercial application. Even in a production environment simplicity and flexibility can compensate for a less polished interface and the lack of certain more complex capabilities, so I would like to stress again that in this area open source tools look very competitive with complex and expensive commercial tools like Tivoli.

The tales about the overcomplexity of the Tivoli product line are simply legendary and we will not repeat them here. But one lesson emerges: simple applications can compete with very complex commercial monitoring solutions for one simple reason: overcomplexity undermines both reliability and flexibility, the two major criteria for a monitoring application. Consider the criteria for a monitoring application to be close to the criteria for handguns or rifles: it should not jam in sand and water.

| Overcomplexity undermines both reliability and flexibility |

If you use a ticker-based architecture in which individual probes run from a cron script on each individual server and push "ticks" to the "mothership" (typically the LOGHOST server), where they are processed by a special "electrocardiogram" script each hour (or each 15 minutes if you are impatient ;-), you can write a usable variant with half a dozen of the most useful checks (uptime check for overload, df check for missing mounts and free space, log check for strange or too many messages per interval, status check for a couple of critical daemons, and a couple of others) in, say, 40-80 hours in shell. Probably less if you use Perl (you can also combine both, writing probes in shell and the electrocardiogram script in Perl). Probes should generally be written in a uniform style and use a common library of functions. This is easier to do in Perl, but if the server is heavily loaded such probes might not run. Ticks can be displayed via a web server, providing a primitive dashboard.
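As an illustration of how small such a check can be, here is a sketch of the uptime check for overload. The function names and the threshold are assumptions; the parser accepts both the Linux ("load average:") and BSD ("load averages:") wording of `uptime` output and assumes a dot as the decimal separator.

```shell
#!/bin/sh
# Extract the 1-minute load average from a line of uptime output on stdin.
load_from_uptime() {
    sed -n 's/.*load averages\{0,1\}: *\([0-9.]*\).*/\1/p'
}

# Print "ok" or "overload" for a load average and a threshold.
check_load() {
    # $1 = load average (e.g. 3.75), $2 = threshold (e.g. 8)
    awk -v load="$1" -v max="$2" \
        'BEGIN { if (load + 0 > max + 0) print "overload"; else print "ok" }'
}

# Example probe body: emit one line of the tick
# echo "uptime $(check_load "$(uptime | load_from_uptime)" 8)"
```

The comparison is delegated to awk because the shell's own `test` handles only integers.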

If you are a good programmer you can probably write such a system in one evening, but as Russians say, "The appetite comes during a meal", and this system needs to evolve for at least a week to be really usable and satisfy real needs. BTW, writing a good, flexible "filesystem free space" script is a real challenge, despite the fact that the task is really simple. The simplest way to start might be to rely on individual "per server" manifests (edited outputs of df from the server), which specify which filesystems to check and what the upper limits are, and one "universal" config file which deals with default percentages that are uniform across the servers.
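A sketch of the manifest idea is shown below. It is an illustration under stated assumptions, not a finished probe: the manifest format ("mount point" optionally followed by a maximum use percentage), the function name `check_df` and the output keywords are all hypothetical, and mount points containing spaces are not handled.

```shell
#!/bin/sh
# check_df MANIFEST DEFAULT_LIMIT   (the output of "df -P" is read from stdin)
# MANIFEST lines: "<mount point> [<max use %>]"; a missing limit falls
# back to DEFAULT_LIMIT, which would come from the "universal" config file.
check_df() {
    awk -v manifest="$1" -v default_limit="$2" '
        BEGIN {
            # load the per-server manifest
            while ((getline line < manifest) > 0) {
                n = split(line, f, " ")
                if (n > 0) limit[f[1]] = (n > 1 ? f[2] : default_limit)
            }
        }
        NR > 1 {                          # skip the df -P header line
            use = $5; sub(/%/, "", use)
            seen[$6] = 1
            if (($6 in limit) && use + 0 > limit[$6] + 0)
                printf "FULL %s %d%% (limit %d%%)\n", $6, use, limit[$6]
        }
        END {
            # anything listed in the manifest but absent from df is a missing mount
            for (m in limit) if (!(m in seen)) printf "MISSING %s\n", m
        }' | sort
}

# Example:
# df -P | check_df /usr/local/monitor/manifests/"$(hostname)" 85
```

Filesystems that are mounted but not listed in the manifest are deliberately ignored, which keeps temporary mounts from generating noise.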

There are several interesting open source monitoring products, each of which tries "to reinvent the bicycle" in a different way (and/or convert it into a moped ;-) by adding heartbeat, graphic and statistical packages, AJAX, improving security and storing events in a backend database. But again, the essence of monitoring is reliability and flexibility, not necessarily the availability of eye-popping Excel-style graphs.

A Unix monitoring system is a tool by sysadmins for sysadmins and should be useful primarily for this purpose, not for the occasional demonstration to the vice-president for IT of a particular company. That means that even among open source monitoring systems not all belong to the same category, and we need to distinguish between them based both on the implementation language and the complexity of the codebase.

As in boxing, there should be several categories (the use of a scripting language and the size of the codebase are the main criteria used here):

| Weight | Examples |
|---|---|
| Featherweight | mon (Perl) |
| Lightweight | Spong (Perl) |
| Middleweight | Big Sister (Perl) |
| Super middleweight | OpenSMART (Perl), ZABBIX (PHP, C, agent and agentless) |
| Light heavyweight | Nagios (C, agentless, primitive agent support), OpenNMS (Java) |
| Heavyweight | Tivoli (old line of products mostly in C++, new line mostly in Java), OpenView, Unicenter |

One very useful feature is the concept of server groups: servers that have similar characteristics. That gives you the ability to perform group probes and/or configuration file changes for the whole group as a single operation. Groups are actually sets, and standard set operations can be performed on them. For example, HTTP servers have evolved into a highly specialized class of servers and can benefit from less generic scripts to monitor key components, but in your organization they can also belong to a larger group of RHEL 6.8 servers. The same is true for DNS servers, mail servers and database servers.
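If each group is kept as a plain text file with one hostname per line, the set operations mentioned above reduce to combinations of `sort` and `uniq`. This is a minimal sketch; the file layout and the function names are assumptions.

```shell
#!/bin/sh
# Each group is a plain text file with one hostname per line
# (a hypothetical layout; the file names in the example are illustrative).

# Hosts that belong to either group.
group_union() {
    sort -u "$1" "$2"
}

# Hosts that belong to both groups.
group_intersection() {
    { sort -u "$1"; sort -u "$2"; } | sort | uniq -d
}

# Hosts in the first group that are not in the second.
# The second group is counted twice, so only names unique to the first
# group appear exactly once and survive "uniq -u".
group_difference() {
    { sort -u "$1"; sort -u "$2"; sort -u "$2"; } | sort | uniq -u
}

# Example: run a probe on every HTTP server that is also a RHEL server
# for host in $(group_intersection groups/http groups/rhel); do
#     ssh "$host" uptime
# done
```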

Another useful feature is a hierarchical layout of HTML pages that provides a nice general picture (in the most primitive form, using 3-5 animated icons for the "big picture": OK, warnings, problems, serious problems, dead) with the ability to drill down in more detail through multiple levels for each icon. The levels can include, for example:

- the first level icons display a general health picture composed of server groups

- the second level displays information for a specific server group

- the third level is the individual server level

- the fourth level is the individual sensor (CPU, disk space, etc.)/script level.
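One way to feed such a hierarchy is to roll sensor results up so that each higher level shows the worst status found beneath it. The sketch below assumes a hypothetical input format of "group server sensor status" lines and a five-step status scale that mirrors the icons mentioned above.

```shell
#!/bin/sh
# Input lines on stdin: "<group> <server> <sensor> <status>", with status
# one of: ok, warning, problem, serious, dead (an assumed scale).
# rollup 1 prints the worst status per group;
# rollup 2 prints the worst status per group/server.
rollup() {
    awk -v level="$1" '
        BEGIN {
            rank["ok"] = 0; rank["warning"] = 1; rank["problem"] = 2
            rank["serious"] = 3; rank["dead"] = 4
        }
        {
            key = (level == 1) ? $1 : ($1 "/" $2)
            if (!(key in worst) || rank[$4] > rank[worst[key]]) worst[key] = $4
        }
        END { for (k in worst) print k, worst[k] }' | sort
}

# Example:
# cat /tmp/probes_collector/* | rollup 1    # data for the first level icons
```

The fourth level needs no aggregation at all: it is simply the raw input lines for one server.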

Dr. Nikolai Bezroukov


| Always listen to experts. They'll tell you what can't be done, and why. Then do it. -- Robert Heinlein |

May 21, 2020 | www.tecmint.com

Watchman – A File and Directory Watching Tool for Changes

by Aaron Kili | Published: March 14, 2019 | Last Updated: April 7, 2020

Watchman is an open source and cross-platform file watching service that watches files and records or performs actions when they change. It is developed by Facebook and runs on Linux, OS X, FreeBSD, and Solaris. It runs in a client-server model and employs the inotify utility of the Linux kernel to provide a more powerful notification.

Useful Concepts of Watchman

- It recursively watches one or more directory trees.

- Each watched directory is called a root.

- It can be configured via the command-line or a configuration file written in JSON format.

- It records changes to log files.

- Supports subscription to file changes that occur in a root.

- Allows you to query a root for file changes since you last checked, or the current state of the tree.

- It can watch an entire project.

In this article, we will explain how to install and use watchman to watch (monitor) files and record when they change in Linux. We will also briefly demonstrate how to watch a directory and invoke a script when it changes.

Installing Watchman File Watching Service in Linux

We will install the watchman service from sources, so first install the required dependencies (libssl-dev, autoconf, automake, libtool, setuptools, python-devel and libfolly) using the following command for your Linux distribution.

    ----------- On Debian/Ubuntu -----------
    $ sudo apt install autoconf automake build-essential python-setuptools python-dev libssl-dev libtool

    ----------- On RHEL/CentOS -----------
    # yum install autoconf automake python-setuptools python-devel openssl-devel libssl-devel libtool
    # yum groupinstall 'Development Tools'

    ----------- On Fedora -----------
    $ sudo dnf install autoconf automake python-setuptools openssl-devel libssl-devel libtool
    $ sudo dnf groupinstall 'Development Tools'

Once the required dependencies are installed, you can start building watchman: clone its GitHub repository, move into the local repository, then configure, build and install it using the following commands.

    $ git clone https://github.com/facebook/watchman.git
    $ cd watchman
    $ git checkout v4.9.0
    $ ./autogen.sh
    $ ./configure
    $ make
    $ sudo make install

Watching Files and Directories with Watchman in Linux

Watchman can be configured in two ways: (1) via the command-line while the daemon is running in the background or (2) via a configuration file written in JSON format.

To watch a directory (e.g. ~/bin) for changes, run the following command.

    $ watchman watch ~/bin/

Watch a Directory in Linux

This command writes a configuration file called state under /usr/local/var/run/watchman/<username>-state/, in JSON format, as well as a log file called log in the same location.

You can view the two files using the cat command as shown.

    $ cat /usr/local/var/run/watchman/aaronkilik-state/state
    $ cat /usr/local/var/run/watchman/aaronkilik-state/log

You can also define what action to trigger when a watched directory changes. For example, in the command further below, 'test-trigger' is the name of the trigger and ~/bin/pav.sh is the script that will be invoked when changes are detected in the directory being monitored.

For test purposes, the pav.sh script simply creates a file with a timestamp (i.e. file.$time.txt) within the same directory where the script is stored.

    time=`date +%Y-%m-%d.%H:%M:%S`
    touch file.$time.txt

Save the file and make the script executable as shown.

    $ chmod +x ~/bin/pav.sh

To launch the trigger, run the following command.

    $ watchman -- trigger ~/bin 'test-trigger' -- ~/bin/pav.sh

Create a Trigger on Directory

When you execute watchman to keep an eye on a directory, it is added to the watch list. To view the list, run the following command.

    $ watchman watch-list

View Watch List

To view the trigger list for a root, run the following command (replace ~/bin with the root name).

    $ watchman trigger-list ~/bin

Show Trigger List for a Root

Based on the above configuration, each time the ~/bin directory changes, a file such as file.2019-03-13.23:14:17.txt is created inside it, and you can view these files using the ls command.

    $ ls

Test Watchman Configuration

Uninstalling Watchman Service in Linux

If you want to uninstall watchman , move into the source directory and run the following commands:

    $ sudo make uninstall
    $ cd '/usr/local/bin' && rm -f watchman
    $ cd '/usr/local/share/doc/watchman-4.9.0' && rm -f README.markdown

For more information, visit the Watchman GitHub repository: https://github.com/facebook/watchman

Watchman is an open source file watching service that watches files and records, or triggers actions, when they change.


Mar 23, 2020 | linuxconfig.org

In this tutorial you will learn:

- How to install NRPE on Debian/Red Hat based distributions

- How to configure NRPE to accept commands from the server

- How to configure a custom check on the server and client side

Nov 08, 2019 | opensource.com

Common types of alerts and visualizations

Alerts

Let's first cover what alerts are not . Alerts should not be sent if the human responder can't do anything about the problem. This includes alerts that are sent to multiple individuals with only a few who can respond, or situations where every anomaly in the system triggers an alert. This leads to alert fatigue and receivers ignoring all alerts within a specific medium until the system escalates to a medium that isn't already saturated.

For example, if an operator receives hundreds of emails a day from the alerting system, that operator will soon ignore all emails from the alerting system. The operator will respond to a real incident only when he or she is experiencing the problem, emailed by a customer, or called by the boss. In this case, alerts have lost their meaning and usefulness.

Alerts are not a constant stream of information or a status update. They are meant to convey a problem from which the system can't automatically recover, and they are sent only to the individual most likely to be able to recover the system. Everything that falls outside this definition isn't an alert and will only damage your employees and company culture.

Everyone has a different set of alert types, so I won't discuss things like priority levels (P1-P5) or models that use words like "Informational," "Warning," and "Critical." Instead, I'll describe the generic categories emergent in complex systems' incident response.

You might have noticed I mentioned an "Informational" alert type right after I wrote that alerts shouldn't be informational. Well, not everyone agrees, but I don't consider something an alert if it isn't sent to anyone. It is a data point that many systems refer to as an alert. It represents some event that should be known but not responded to. It is generally part of the visualization system of the alerting tool and not an event that triggers actual notifications. Mike Julian covers this and other aspects of alerting in his book Practical Monitoring . It's a must read for work in this area.

Non-informational alerts consist of types that can be responded to or require action. I group these into two categories: internal outage and external outage. (Most companies have more than two levels for prioritizing their response efforts.) Degraded system performance is considered an outage in this model, as the impact to each user is usually unknown.

Internal outages are a lower priority than external outages, but they still need to be responded to quickly. They often include internal systems that company employees use or components of applications that are visible only to company employees.

External outages consist of any system outage that would immediately impact a customer. These don't include a system outage that prevents releasing updates to the system. They do include customer-facing application failures, database outages, and networking partitions that hurt availability or consistency if either can impact a user. They also include outages of tools that may not have a direct impact on users, as the application continues to run but this transparent dependency impacts performance. This is common when the system uses some external service or data source that isn't necessary for full functionality but may cause delays as the application performs retries or handles errors from this external dependency.

Visualizations

There are many visualization types, and I won't cover them all here. It's a fascinating area of research. On the data analytics side of my career, learning and applying that knowledge is a constant challenge. We need to provide simple representations of complex system outputs for the widest dissemination of information. Google Charts and Tableau have a wide selection of visualization types. We'll cover the most common visualizations and some innovative solutions for quickly understanding systems.

Line chart

The line chart is probably the most common visualization. It does a pretty good job of producing an understanding of a system over time. A line chart in a metrics system would have a line for each unique metric or some aggregation of metrics. This can get confusing when there are a lot of metrics in the same dashboard (as shown below), but most systems can select specific metrics to view rather than having all of them visible. Also, anomalous behavior is easy to spot if it's significant enough to escape the noise of normal operations. Below we can see purple, yellow, and light blue lines that might indicate anomalous behavior.

[Image: monitoring_guide_line_chart.png]

Another feature of a line chart is that you can often stack them to show relationships. For example, you might want to look at requests on each server individually, but also in aggregate. This allows you to understand the overall system as well as each instance in the same graph.

[Image: monitoring_guide_line_chart_aggregate.png]

Heatmaps

Another common visualization is the heatmap. It is useful when looking at histograms. This type of visualization is similar to a bar chart but can show gradients within the bars representing the different percentiles of the overall metric. For example, suppose you're looking at request latencies and you want to quickly understand the overall trend as well as the distribution of all requests. A heatmap is great for this, and it can use color to disambiguate the quantity of each section with a quick glance.

The heatmap below shows the higher concentration around the centerline of the graph with an easy-to-understand visualization of the distribution vertically for each time bucket. We might want to review a couple of points in time where the distribution gets wide while the others are fairly tight like at 14:00. This distribution might be a negative performance indicator.

monitoring_guide_histogram.png

Gauges

The last common visualization I'll cover here is the gauge, which helps users understand a single metric quickly. Gauges can represent a single metric, like your speedometer represents your driving speed or your gas gauge represents the amount of gas in your car. Similar to the gas gauge, most monitoring gauges clearly indicate what is good and what isn't. Often (as is shown below), good is represented by green, getting worse by orange, and "everything is breaking" by red. The middle row below shows traditional gauges.

monitoring_guide_gauges.png
Image source: Grafana.org (© Grafana Labs)

This image shows more than just traditional gauges. The other gauges are single-stat representations that work much like the classic gauge. They all use the same color scheme to indicate system health at a glance. Arguably, the bottom row is the best example of a gauge that lets you glance at a dashboard and know that everything is healthy (or not). This type of visualization is usually what I put on a top-level dashboard. It offers a full, high-level understanding of system health in seconds.
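The green/orange/red logic of a gauge boils down to comparing one value against two thresholds. A minimal sketch, assuming a metric where higher is worse; the function name and the threshold values are hypothetical:

```python
def gauge_color(value, warn, crit):
    """Map a single metric value to a gauge color.

    Assumes higher values are worse; `warn` and `crit` are
    illustrative thresholds chosen by whoever builds the dashboard.
    """
    if value >= crit:
        return "red"
    if value >= warn:
        return "orange"
    return "green"

# Hypothetical disk-usage percentages with thresholds at 70% and 90%.
colors = [gauge_color(v, warn=70, crit=90) for v in (35, 72, 96)]
```

For metrics where lower is worse (free memory, for example), the comparisons would simply be reversed.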

Flame graphs

A less common visualization is the flame graph, introduced by Netflix's Brendan Gregg in 2011. It's not ideal for dashboarding or quickly observing high-level system concerns; it's normally seen when trying to understand a specific application problem. This visualization focuses on CPU and memory and the associated frames. The X-axis orders the stack frames alphabetically (it does not represent the passage of time), and the Y-axis shows stack depth. Each rectangle is a stack frame and includes the function being called. The wider the rectangle, the more often that frame appears in the sampled stacks. This method is invaluable when trying to diagnose system performance at the application level and I urge everyone to give it a try.
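To make the width rule concrete, here is a small sketch of how rectangle widths could be derived from sampled stacks. It is not the code of any real flame graph tool; the function name and the sample stacks are invented for illustration. Each sample is a call stack listed from root to leaf, and the width of a frame is the fraction of samples whose stack passes through that call path.

```python
from collections import Counter

def frame_widths(stack_samples):
    """Return the fraction of samples in which each call path appears.

    Each sample is a list of function names from root to leaf. A call
    path is a prefix of that list, so the same function reached through
    different callers is counted separately.
    """
    counts = Counter()
    for stack in stack_samples:
        for depth in range(1, len(stack) + 1):
            counts[tuple(stack[:depth])] += 1
    total = len(stack_samples)
    return {path: n / total for path, n in counts.items()}

# Invented profiler samples for illustration only.
samples = [
    ["main", "handle_request", "parse_json"],
    ["main", "handle_request", "parse_json"],
    ["main", "handle_request", "query_db"],
    ["main", "gc"],
]
widths = frame_widths(samples)
```

The length of each key is the stack depth (the Y-axis position), and the value is the relative width of the rectangle at that depth.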

monitoring_guide_flame_graph.png
Image source: Wikimedia.org (Creative Commons BY SA 3.0)

Tool options

There are several commercial options for alerting, but since this is Opensource.com, I'll cover only systems that are being used at scale by real companies that you can use at no cost. Hopefully, you'll be able to contribute new and innovative features to make these systems even better.

Alerting tools

Bosun

If you've ever done anything with computers and gotten stuck, the help you received was probably thanks to a Stack Exchange system. Stack Exchange runs many different websites around a crowdsourced question-and-answer model. Stack Overflow is very popular with developers, and Super User is popular with operations. However, there are now hundreds of sites ranging from parenting to sci-fi and philosophy to bicycles.

Stack Exchange open-sourced its alert management system, Bosun, around the same time Prometheus and its AlertManager system were released. There were many similarities in the two systems, and that's a really good thing. Like Prometheus, Bosun is written in Go. Bosun's scope is more extensive than Prometheus' as it can interact with systems beyond metrics aggregation. It can also ingest data from log and event aggregation systems. It supports Graphite, InfluxDB, OpenTSDB, and Elasticsearch.

Bosun's architecture consists of a single server binary, a metrics backend such as OpenTSDB, Redis, and scollector agents. The scollector agents automatically detect services on a host and report metrics for those processes and other system resources. This data is sent to a metrics backend. The Bosun server binary then queries the backends to determine if any alerts need to be fired. Bosun can also be used by tools like Grafana to query the underlying backends through one common interface. Redis is used to store state and metadata for Bosun.

A really neat feature of Bosun is that it lets you test your alerts against historical data. This was something I missed in Prometheus several years ago, when I had data for an issue I wanted alerts on but no easy way to test it. To make sure my alerts were working, I had to create and insert dummy data. This system alleviates that very time-consuming process.
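The idea of testing an alert against historical data can be sketched in a few lines. This is not Bosun's expression language or API; the rule ("value above a threshold for N consecutive points"), the function name, and the data are all assumptions made up for illustration.

```python
def backtest_alert(history, threshold, min_consecutive):
    """Return the timestamps at which the rule would have fired.

    The rule fires once when the value has stayed above `threshold`
    for `min_consecutive` consecutive data points.
    """
    fired = []
    streak = 0
    for timestamp, value in history:
        streak = streak + 1 if value > threshold else 0
        if streak == min_consecutive:
            fired.append(timestamp)
    return fired

# Made-up historical CPU readings: (timestamp, percent busy).
history = [(1, 40), (2, 95), (3, 97), (4, 99), (5, 50), (6, 96), (7, 98)]
alerts = backtest_alert(history, threshold=90, min_consecutive=3)
```

Replaying a rule over real data from a past incident shows whether it would have fired, and when, without inserting dummy data into the metrics store.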

Bosun also has the usual features like showing simple graphs and creating alerts. It has a powerful expression language for writing alerting rules. However, it only has email and HTTP notification configurations, which means connecting to Slack and other tools requires a bit more customization (which its documentation covers). Similar to Prometheus, Bosun can use templates for these notifications, which means they can look as awesome as you want them to. You can use all your HTML and CSS skills to create the baddest email alert anyone has ever seen.

Cabot

Cabot was created by a company called Arachnys. You may not know who Arachnys is or what it does, but you have probably felt its impact: It built the leading cloud-based solution for fighting financial crimes. That sounds pretty cool, right? At a previous company, I was involved in similar functions around "know your customer" laws. Most companies would consider it a very bad thing to be linked to, for example, a terrorist group funneling money through their systems. These solutions also help defend against less-atrocious offenders like fraudsters who could also pose a risk to the institution.

So why did Arachnys create Cabot? Well, it is kind of a Christmas present to everyone, as it was a Christmas project built because its developers couldn't wrap their heads around Nagios. And really, who can blame them? Cabot was written with Django and Bootstrap, so it should be easy for most to contribute to the project. (Another interesting factoid: The name comes from the creator's dog.)

The Cabot architecture is similar to Bosun in that it doesn't collect any data. Instead, it accesses data through the APIs of the tools it is alerting for. Therefore, Cabot uses a pull (rather than a push) model for alerting. It reaches out into each system's API and retrieves the information it needs to make a decision based on a specific check. Cabot stores the alerting data in a Postgres database and also has a cache using Redis.
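A pull-style check can be sketched as follows. This is not Cabot's actual code or data model; the `Check` class, its fields, and the lambdas standing in for API calls are hypothetical. The point is only that the alerting system calls out to fetch a value when the check runs, rather than waiting for the monitored system to push it.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Check:
    name: str
    fetch: Callable[[], float]  # pulls the current value from a monitored system
    max_value: float            # the check fails above this value

    def run(self) -> bool:
        """Pull the latest value and report whether the check passes."""
        return self.fetch() <= self.max_value

def run_checks(checks):
    """Run every check once and return a name -> passed mapping."""
    return {check.name: check.run() for check in checks}

# The lambdas stand in for real API calls to the monitored systems.
checks = [
    Check("queue_depth", fetch=lambda: 12.0, max_value=100.0),
    Check("error_rate", fetch=lambda: 7.5, max_value=5.0),
]
results = run_checks(checks)
```

In a real deployment the results would be stored and compared with the previous run to decide whether to notify anyone.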

Cabot natively supports Graphite, but it also supports Jenkins, which is rare in this area. Arachnys uses Jenkins like a centralized cron, but I like this idea of treating build failures like outages. Obviously, a build failure isn't as critical as a production outage, but it could still alert the team and escalate if the failure isn't resolved. Who actually checks Jenkins every time an email comes in about a build failure? Yeah, me too!

Another interesting feature is that Cabot can integrate with Google Calendar for on-call rotations. Cabot calls this feature Rota, which is a British term for a roster or rotation. This makes a lot of sense, and I wish other systems would take this idea further. Cabot doesn't support anything more complex than primary and backup personnel, but there is certainly room for additional features. The docs say if you want something more advanced, you should look at a commercial option.

StatsAgg

StatsAgg? How did that make the list? Well, it's not every day you come across a publishing company that has created an alerting platform. I think that deserves recognition. Of course, Pearson isn't just a publishing company anymore; it has several web presences and a joint venture with O'Reilly Media. However, I still think of it as the company that published my schoolbooks and tests.

StatsAgg isn't just an alerting platform; it's also a metrics aggregation platform. And it's kind of like a proxy for other systems. It supports Graphite, StatsD, InfluxDB, and OpenTSDB as inputs, but it can also forward those metrics to their respective platforms. This is an interesting concept, but potentially risky as loads increase on a central service. However, if the StatsAgg infrastructure is robust enough, it can still produce alerts even when a backend storage platform has an outage.

StatsAgg is written in Java and consists only of the main server and UI, which keeps complexity to a minimum. It can send alerts based on regular expression matching and is focused on alerting by service rather than host or instance. Its goal is to fill a void in the open source observability stack, and I think it does that quite well.
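Alerting by service through regular expression matching can be sketched like this. It does not reflect StatsAgg's real configuration or API; the metric naming scheme, the function name, and the values are assumptions for illustration.

```python
import re

def matching_alerts(metrics, pattern, threshold):
    """Return names of metrics that match the regex and exceed the threshold."""
    regex = re.compile(pattern)
    return sorted(
        name
        for name, value in metrics.items()
        if regex.search(name) and value > threshold
    )

# Hypothetical metric names in a service.host.metric layout.
metrics = {
    "checkout.web-1.latency_ms": 420.0,
    "checkout.web-2.latency_ms": 95.0,
    "search.web-1.latency_ms": 510.0,
}
alerts = matching_alerts(metrics, r"^checkout\..*\.latency_ms$", threshold=300.0)
```

Because the pattern selects by service name rather than by host, new hosts added to the service are covered without editing the rule.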

Visualization tools

Grafana

Almost everyone knows about Grafana, and many have used it. I have used it for years whenever I need a simple dashboard. The tool I used before was deprecated, and I was fairly distraught about that until Grafana made it okay. Grafana was gifted to us by Torkel Ödegaard. Like Cabot, Grafana was also created around Christmastime, and released in January 2014. It has come a long way in just a few years. It started life as a Kibana dashboarding system, and Torkel forked it into what became Grafana.

Grafana's sole focus is presenting monitoring data in a more usable and pleasing way. It can natively gather data from Graphite, Elasticsearch, OpenTSDB, Prometheus, and InfluxDB. There's an Enterprise version that uses plugins for more data sources, but there's no reason those other data source plugins couldn't be created as open source, as the Grafana plugin ecosystem already offers many other data sources.

What does Grafana do for me? It provides a central location for understanding my system. It is web-based, so anyone can access the information, although it can be restricted using different authentication methods. Grafana can provide knowledge at a glance using many different types of visualizations. However, it has started integrating alerting and other features that aren't traditionally combined with visualizations.

Now you can set alerts visually. That means you can look at a graph, maybe even one showing where an alert should have triggered due to some degradation of the system, click on the graph where you want the alert to trigger, and then tell Grafana where to send the alert. That's a pretty powerful addition that won't necessarily replace an alerting platform, but it can certainly help augment it by providing a different perspective on alerting criteria.

Grafana has also introduced more collaboration features. Users have been able to share dashboards for a long time, meaning you don't have to create your own dashboard for your Kubernetes cluster because there are several already available -- with some maintained by Kubernetes developers and others by Grafana developers.

The most significant addition around collaboration is annotations. Annotations allow a user to add context to part of a graph. Other users can then use this context to understand the system better. This is an invaluable tool when a team is in the middle of an incident and communication and common understanding are critical. Having all the information right where you're already looking makes it much more likely that knowledge will be shared across the team quickly. It's also a nice feature to use during blameless postmortems when the team is trying to understand how the failure occurred and learn more about their system.

Vizceral

Netflix created Vizceral to understand its traffic patterns better when performing a traffic failover. Unlike Grafana, which is a more general tool, Vizceral serves a very specific use case. Netflix no longer uses this tool internally and says it is no longer actively maintained, but it still updates the tool periodically. I highlight it here primarily to point out an interesting visualization mechanism and how it can help solve a problem. It's worth running it in a demo environment just to better grasp the concepts and witness what's possible with these systems.

Nov 08, 2019 | opensource.com

Examining collected data

The output from the sar command can be detailed, or you can choose to limit the data displayed. For example, enter the sar command with no options, which displays only aggregate CPU performance data. The sar command uses the current day by default, starting at midnight, so you should only see the CPU data for today.

On the other hand, using the sar -A command shows all of the data that has been collected for today. Enter the sar -A | less command now and page through the output to view the many types of data collected by SAR, including disk and network usage, CPU context switches (how many times per second the CPU switched from one program to another), page swaps, memory and swap space usage, and much more. Use the man page for the sar command to interpret the results and to get an idea of the many options available. Many of those options allow you to view specific data, such as network and disk performance.

I typically use the sar -A command because many of the types of data available are interrelated, and sometimes I find something that gives me a clue to a performance problem in a section of the output that I might not have looked at otherwise. The -A option displays all of the collected data types.

Look at the entire output of the